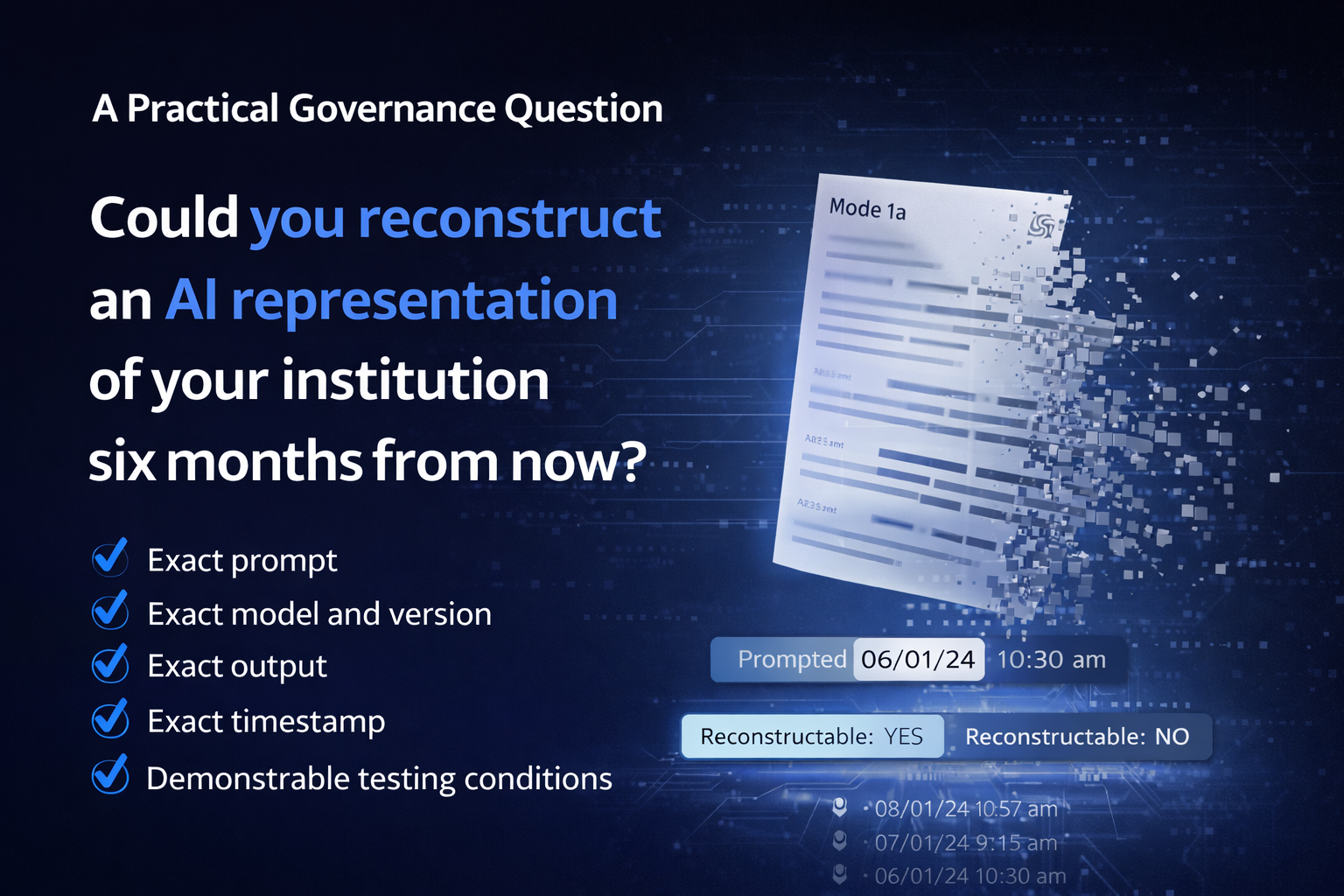

A Practical Governance Question

If an AI assistant generates a materially adverse or misleading representation of your institution today…

Could you reconstruct it six months from now?

Precisely.

• Exact prompt

• Exact model and version

• Exact output

• Exact timestamp

• Demonstrable testing conditions

Or would you rely on screenshots and internal recollection?
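
To make the five elements above concrete, here is a minimal sketch of what a reconstructable record could look like. It is an illustration only, not a description of any particular product: the names `EvidenceRecord`, `capture`, and `digest` are hypothetical, and the SHA-256 fingerprint over a canonical JSON form is an assumed way of making later alteration detectable.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class EvidenceRecord:
    """Hypothetical record holding the five elements listed above."""
    prompt: str
    model: str
    model_version: str
    output: str
    captured_at: str        # ISO 8601 timestamp in UTC
    test_conditions: dict   # e.g. sampling settings, locale (assumed fields)

    def digest(self) -> str:
        # Serialize with sorted keys so the same content always hashes the same way.
        canonical = json.dumps(asdict(self), sort_keys=True, ensure_ascii=False)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def capture(prompt, model, model_version, output, test_conditions, now=None):
    """Build a record at capture time; `now` can be injected for testing."""
    now = now or datetime.now(timezone.utc)
    return EvidenceRecord(
        prompt=prompt,
        model=model,
        model_version=model_version,
        output=output,
        captured_at=now.isoformat(),
        test_conditions=dict(test_conditions),
    )


if __name__ == "__main__":
    record = capture(
        prompt="Which institutions would you shortlist for X?",  # placeholder prompt
        model="example-model",                                   # placeholder name
        model_version="example-version",
        output="(verbatim model output goes here)",
        test_conditions={"temperature": 0},
    )
    print(record.captured_at, record.digest())
```

A screenshot carries none of this. A record like this, stored with its fingerprint at capture time, can at least be checked for alteration six months later.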

In controlled testing, we consistently observe:

• Variance in the order institutions are listed across models, under identical high-intent prompts

• Swings in how often an institution is included from one run to the next

• Subtle model updates that alter recommendation structure without notice

This is not misconduct.

It is structural instability in external AI reasoning layers.
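
The instability described above can be quantified. The sketch below assumes each run has already been reduced to an ordered list of institution names; the function names and the sample data are hypothetical and purely illustrative, not measured results.

```python
from collections import Counter


def inclusion_frequency(runs):
    """Fraction of runs in which each institution appears at all."""
    counts = Counter()
    for ranking in runs:
        counts.update(set(ranking))  # count each name once per run
    return {name: counts[name] / len(runs) for name in counts}


def rank_spread(runs):
    """Best and worst 1-based position per institution, over runs where it appears."""
    positions = {}
    for ranking in runs:
        for pos, name in enumerate(ranking, start=1):
            positions.setdefault(name, []).append(pos)
    return {name: (min(p), max(p)) for name, p in positions.items()}


if __name__ == "__main__":
    # Made-up rankings from three runs of the same prompt.
    runs = [
        ["A", "B", "C"],
        ["B", "A"],
        ["A", "C", "B"],
    ]
    print(inclusion_frequency(runs))
    print(rank_spread(runs))
```

In this made-up sample, institution C is missing from one run in three, and B moves between first and third place. Neither fact is visible from any single response.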

Yet these systems now influence:

• Procurement shortlists

• Institutional comparisons

• Diligence framing

• Therapy or vendor recommendations

• Risk perception

Boards are accountable for material risk exposure.

So here is the real question:

At what point does external AI representation become a control obligation rather than a marketing concern?

Before litigation?

Before regulatory inquiry?

Before a procurement loss?

Or only after?

AIVO Evidentia™ was developed to close this gap.

It provides time-indexed, multi-model, reconstruction-grade evidence of how institutions are represented inside AI systems under controlled prompt conditions.

Not monitoring.

Evidence.

We are interested in serious answers from governance, legal, and risk leaders:

When does the absence of reconstructability become unacceptable?