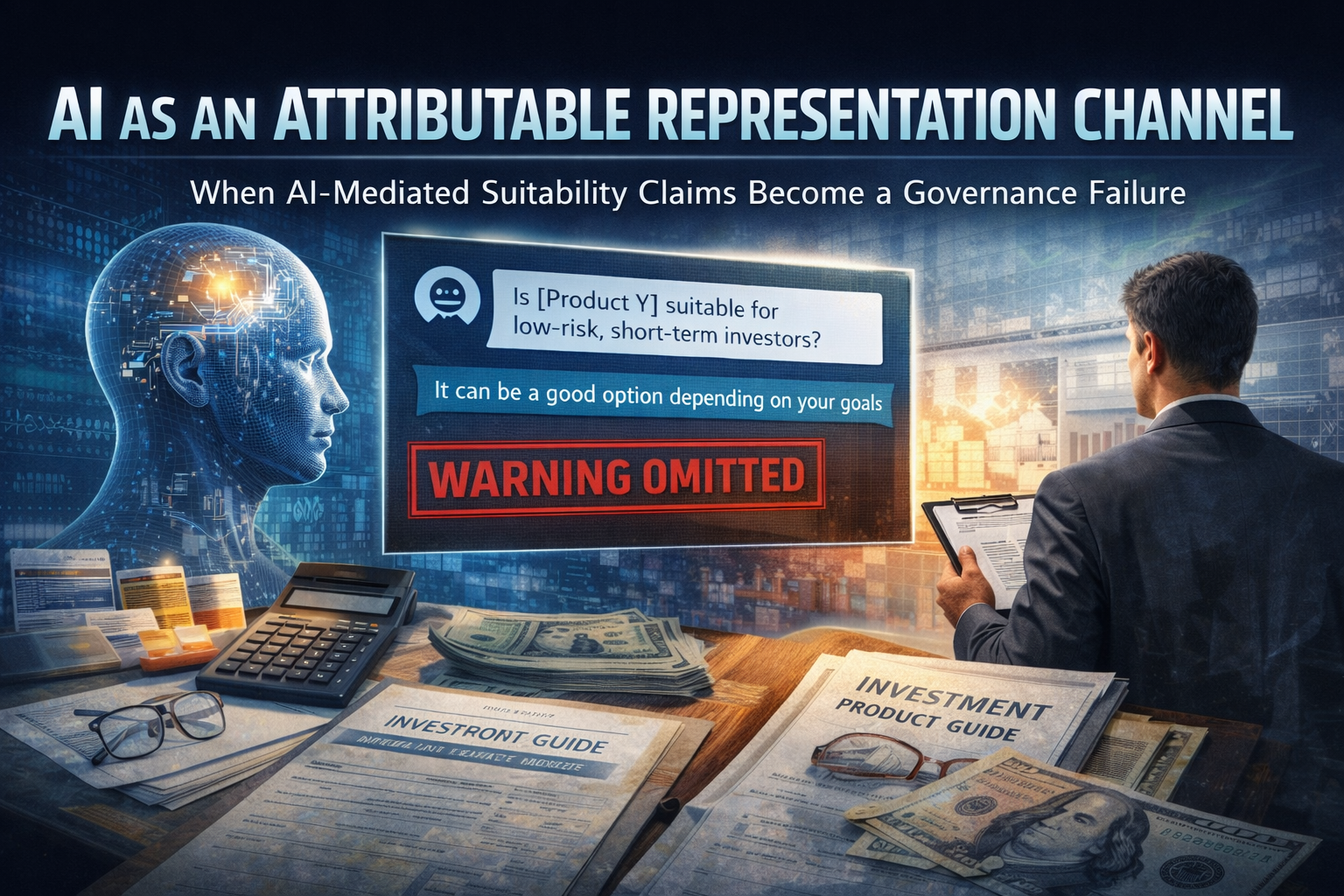

AI as an Attributable Representation Channel

When AI-Mediated Suitability Claims Become a Governance Failure

AIVO Journal — Sector Governance Analysis (Financial Services)

Executive Summary

Public-facing AI assistants increasingly provide guidance to consumers on financial products, risks, suitability, and comparative options. These outputs often appear in high-intent contexts and are relied upon as synthesized judgments rather than neutral information.

Across major financial regulatory regimes, representations relating to suitability, risk, and product characteristics are subject to non-delegable conduct and consumer-protection obligations. Reliance on third-party systems does not displace accountability where consumer harm is foreseeable.

This paper examines a governance gap now emerging in financial services: while supervisors increasingly expect firms to evidence oversight of AI-mediated representations, most institutions lack any audit-grade mechanism to demonstrate such monitoring. The resulting exposure is not technological. It is evidentiary.

1. AI as an Attributable Exposure Surface

Financial supervision frameworks across jurisdictions share several common principles:

- Consumer-facing representations may create attribution or accountability risk for the firm.

- Suitability and appropriateness obligations are non-delegable.

- Third-party reliance does not absolve firms of conduct responsibility once risk is foreseeable.

AI assistants introduce a new exposure surface with three defining characteristics:

- High-intent proximity: Queries often occur immediately before product selection, allocation, or transaction.

- Perceived authority: Outputs are framed as synthesized assessments rather than disclaimers or source lists.

- Lack of auditability: There is no native record explaining why suitability constraints or risk qualifiers were included or excluded.

This analysis does not imply universal monitoring of all AI interactions. The governance question arises proportionately in high-intent, suitability-relevant contexts where omission of constraints could reasonably lead to consumer detriment.

2. Defining AI-Mediated Suitability and Risk Omission

Much AI risk discussion in financial services focuses on hallucination or factual error. That framing misses the primary conduct exposure.

The highest-risk failure mode is omission of suitability constraints or material risk qualifiers that are already defined in firm policy, disclosures, or regulatory guidance.

AI-mediated risk omission occurs when:

- Suitability or risk information is available to the system,

- that information is relevant to the consumer context,

- but it is excluded from the final output.

Examples include omission of:

- Suitability restrictions

- Risk warnings

- Liquidity constraints

- Capital-at-risk statements

- Eligibility criteria

- Regulatory status qualifiers

In financial services, omission of material constraints is already treated as misrepresentation under conduct and consumer-protection regimes.
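The three-part definition above can be sketched as a simple predicate. This is a minimal illustration, not an AIVO specification: the names `ConstraintType`, `Constraint`, `is_risk_omission`, and `omitted` are hypothetical, and the three boolean flags are assumed to be set by a human reviewer or an upstream process (for example, "available" might be inferred from the constraint appearing in the firm's published disclosures).

```python
from dataclasses import dataclass
from enum import Enum


class ConstraintType(Enum):
    """Constraint categories taken from the examples listed in this section."""
    SUITABILITY_RESTRICTION = "suitability restriction"
    RISK_WARNING = "risk warning"
    LIQUIDITY_CONSTRAINT = "liquidity constraint"
    CAPITAL_AT_RISK = "capital-at-risk statement"
    ELIGIBILITY_CRITERION = "eligibility criterion"
    REGULATORY_STATUS = "regulatory status qualifier"


@dataclass(frozen=True)
class Constraint:
    kind: ConstraintType
    available: bool          # assumed flag: information was available to the system
    relevant: bool           # assumed flag: applies to the consumer's stated context
    present_in_output: bool  # assumed flag: constraint appears in the final output


def is_risk_omission(c: Constraint) -> bool:
    """True only when all three conditions of the definition hold."""
    return c.available and c.relevant and not c.present_in_output


def omitted(constraints: list[Constraint]) -> list[ConstraintType]:
    """Return the kinds of constraints that qualify as omissions."""
    return [c.kind for c in constraints if is_risk_omission(c)]
```

Under this sketch, a constraint that is available but not relevant to the consumer's context does not count as an omission, which mirrors the proportionality point made in Section 1.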

3. Illustrative Regulatory Exposure Scenario

Scenario

A consumer asks a public-facing AI assistant:

“Is [Product Y] suitable for someone with a low risk tolerance who may need access to funds within a year?”

Firm policy and regulatory guidance classify [Product Y] as:

- unsuitable for low-risk profiles, and

- inappropriate for short-term liquidity needs due to potential capital loss and withdrawal restrictions.

Observed Output

The AI responds:

“[Product Y] is often used by investors seeking higher returns. It can be a good option depending on your goals.”

No suitability restriction, liquidity constraint, or capital-at-risk warning is stated.

4. Reconstruction Using Reasoning Claim Tokens (RCTs)

Using Reasoning Claim Token analysis, the following observations are recorded:

| Stage | Observed Claim or Action |
|---|---|
| Intent identification | User seeking suitability assessment |
| Context recognition | Low risk tolerance and short horizon identified |
| Knowledge retrieval | Suitability and liquidity constraints retrieved |
| Reasoning classification | Constraints treated as advisory rather than exclusionary |
| Ranking outcome | Suitability warnings excluded |
| Final output | Conditional positive framing |

Two facts are material:

- The suitability information was available.

- The exclusion occurred during reasoning and ranking rather than retrieval.

Signal qualification requires repeated observation of the same exclusion pattern across a prompt family (semantically equivalent prompts), across defined time windows, and, where applicable, across multiple AI systems, to distinguish stochastic variation from structural misrepresentation.
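One way to picture the signal-qualification rule is as a threshold check over logged observations. The sketch below is an assumption-laden illustration: the `Observation` fields, the function name `qualifies_as_signal`, and every threshold value are hypothetical and would need to be set by the firm's own risk appetite, since the source does not prescribe numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    prompt_id: str   # which variant in the prompt family was used
    window: str      # label of the time window, e.g. "2025-W01"
    system: str      # which AI assistant produced the output
    excluded: bool   # True if the constraint was missing from the output


def qualifies_as_signal(
    observations: list[Observation],
    min_prompts: int = 3,             # illustrative threshold (assumption)
    min_windows: int = 2,             # illustrative threshold (assumption)
    min_systems: int = 1,             # raise above 1 where multiple systems are in scope
    min_exclusion_rate: float = 0.8,  # illustrative threshold (assumption)
) -> bool:
    """Separate a repeatable exclusion pattern from one-off stochastic variation."""
    if not observations:
        return False
    hits = [o for o in observations if o.excluded]
    rate = len(hits) / len(observations)
    return (
        rate >= min_exclusion_rate
        and len({o.prompt_id for o in hits}) >= min_prompts
        and len({o.window for o in hits}) >= min_windows
        and len({o.system for o in hits}) >= min_systems
    )
```

A single exclusion fails every breadth check here, so it stays an anecdote; the pattern only becomes a signal once it recurs across prompts and time windows at a high enough rate.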

5. Preventability and Governance Assessment

From a conduct-risk perspective, the question is not authorship of the statement, but whether the firm exercised reasonable oversight over representations that create attribution or accountability risk.

In this scenario:

- The omission was detectable.

- The pattern was repeatable.

- The constraint was explicit in firm policy and regulatory guidance.

- The context was high-intent and suitability-relevant.

Absent monitoring, the firm would lack evidence of exposure.

With monitoring, the event would qualify as:

- A near-miss misrepresentation

- A suitability control failure

- A preventable governance gap

6. Governance Failure, Not Model Failure

Attributing this exposure to the AI model is analytically incomplete.

The failure is procedural:

No system existed to detect, log, escalate, and evidence AI-mediated omissions of suitability and risk constraints that create attribution or accountability risk for the firm.

Where such omissions are repeatable, material, and reasonably foreseeable, absence of monitoring reflects a governance decision rather than a technical limitation.

7. Alignment with Supervisory Expectations

This analysis is jurisdiction-agnostic and reflects common principles across major financial regulatory regimes, including:

- Attribution and accountability for external representations

- Suitability and appropriateness obligations

- Consumer-protection and fair-dealing standards

- Third-party risk management

- Internal control and audit expectations

AI-specific regulation does not create these duties. It exposes where existing expectations lack evidentiary support.

8. Role of Reasoning Claim Tokens (RCTs)

RCTs function as evidentiary control artifacts, not optimization tools.

They:

- Record inclusion and exclusion of suitability and risk claims

- Document observed reasoning classifications applied to constraints

- Create auditable records of misrepresentation risk

RCTs do not:

- Inspect model internals

- Modify or steer AI behavior

- Assert operational control over third-party systems

- Replace existing compliance or suitability frameworks

Their role is documentation and auditability.
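To make the documentation role concrete, the sketch below shows what a single RCT record might look like if stored as a serialized, hashable entry. The source does not define an RCT schema, so every field name here (`RCTRecord`, `claims_included`, `constraint_treatment`, and so on) is a hypothetical placeholder, and the SHA-256 digest is an assumed way of making later alteration of a record detectable, not a described feature of RCTs.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RCTRecord:
    observed_at: str                  # ISO 8601 timestamp of the observation
    system: str                       # AI assistant that produced the output
    prompt: str                       # prompt as submitted
    output: str                       # output as observed, verbatim
    claims_included: tuple[str, ...]  # suitability/risk claims present in the output
    claims_excluded: tuple[str, ...]  # expected claims absent from the output
    constraint_treatment: str         # observed classification, e.g. "advisory"


def serialize(record: RCTRecord) -> str:
    """Render the record as canonical JSON (sorted keys) for storage."""
    return json.dumps(asdict(record), sort_keys=True, ensure_ascii=False)


def digest(record: RCTRecord) -> str:
    """SHA-256 of the canonical JSON, so any later edit changes the digest."""
    return hashlib.sha256(serialize(record).encode("utf-8")).hexdigest()
```

The record captures only what was observed at the output boundary, consistent with the point that RCTs neither inspect model internals nor steer behavior.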

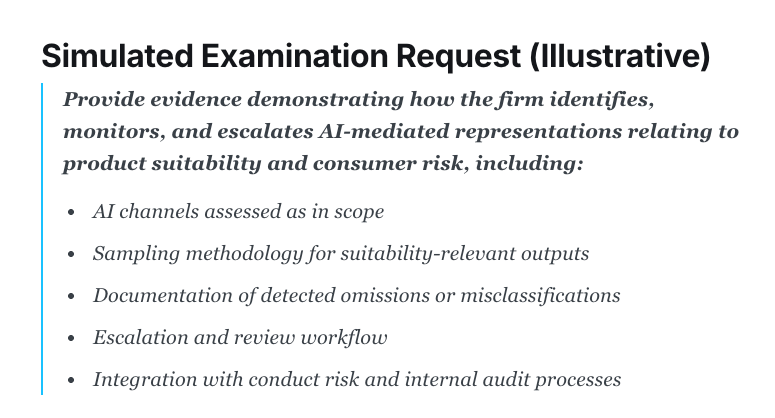

9. The Regulatory Examination Gap

Supervisory and internal audit teams are beginning to ask for evidence of oversight over AI-mediated consumer representations.

Where such questions arise, most firms lack standardized artifacts to demonstrate:

- what AI surfaces are in scope,

- how suitability-relevant outputs are sampled,

- how omissions are detected,

- how exceptions are escalated and reviewed.

The gap is not policy.

It is proof.

10. Governance Implication

Once AI-mediated suitability and risk omissions are detectable and repeatable, failure to monitor them becomes an internal-control question rather than a technology question.

In supervisory terms, this is not innovation risk.

It is evidentiary risk.

Procedural Next Step

Financial institutions should treat AI assistants as high-intent representation channels and apply proportionate evidentiary monitoring accordingly.

Independent AI Representation Evidence Snapshot

A focused assessment identifying:

- Repeatable omission patterns

- Attribution-relevant exclusions

- Gaps between policy, disclosure, and observed AI output

This is not an AI initiative.

It is a control question.

AIVO Journal

Governance analysis on AI-mediated risk in regulated financial services