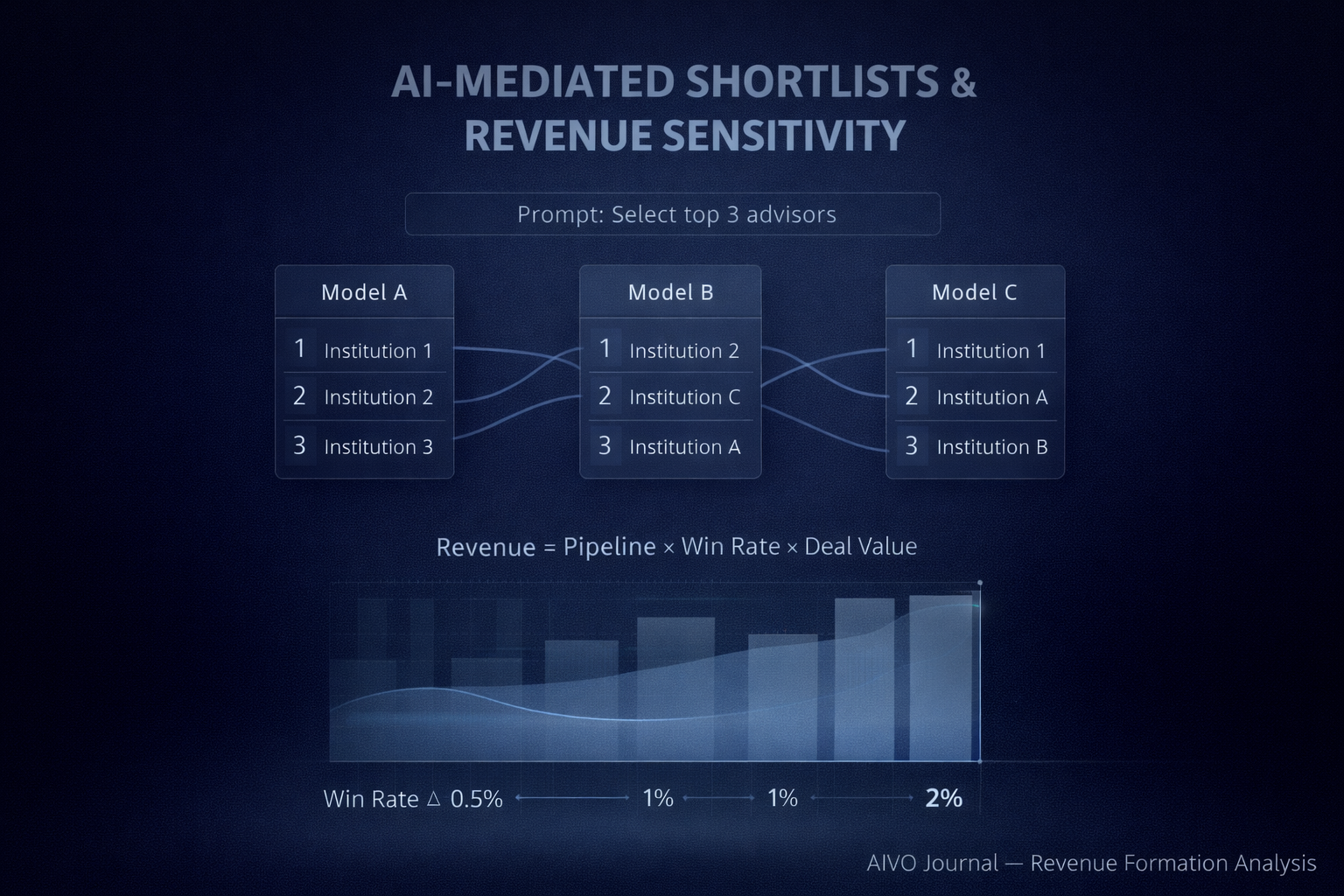

AI-Mediated Shortlists and Revenue Formation Sensitivity

A new variable is entering revenue formation. It is not yet measured.

In most institutions, revenue forecasting rests on a stable identity:

Expected Revenue = Pipeline Volume × Win Probability × Average Deal Value

Pipeline is tracked.

Deal value is modeled.

Win probability is stress-tested.

What is rarely examined is whether the inputs to win probability are themselves stable.

A new external variable is emerging: AI-mediated shortlist formation.

The Structural Shift

AI systems are increasingly used in high-intent decision contexts:

- “Which Tier 1 bank should we start with?”

- “Which oncology partner has the strongest late-stage pipeline?”

- “Which advisory firm leads in cross-border M&A?”

These systems compress consideration sets before direct contact occurs.

They are not marketing channels.

They are early-stage decision filters.

Controlled cross-system testing across major models shows that the order in which institutions appear at the final recommendation stage is not consistent from one system to the next.

Under identical prompt classes:

- Inclusion frequency varies

- Ranking position shifts

- Final recommendation anchors differ

This is not factual contradiction.

It is ordering instability.
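
The three observations above can be made concrete with a small sketch. It assumes each system's answer to one prompt class has already been reduced to an ordered list of institution names. The model names, bank names, and function names are hypothetical placeholders, not results from the testing described here.

```python
from collections import Counter
from statistics import mean

# Hypothetical shortlists for a single prompt class (placeholder data).
shortlists = {
    "model_a": ["Bank X", "Bank Y", "Bank Z"],
    "model_b": ["Bank Y", "Bank X", "Bank W"],
    "model_c": ["Bank Y", "Bank Z", "Bank X"],
}


def inclusion_frequency(shortlists):
    """Share of shortlists in which each institution appears at all."""
    counts = Counter(
        name for ranking in shortlists.values() for name in set(ranking)
    )
    return {name: n / len(shortlists) for name, n in counts.items()}


def mean_rank(shortlists):
    """Average 1-based position, over the shortlists that include the name."""
    positions = {}
    for ranking in shortlists.values():
        for pos, name in enumerate(ranking, start=1):
            positions.setdefault(name, []).append(pos)
    return {name: mean(p) for name, p in positions.items()}


def anchor_share(shortlists):
    """Share of shortlists in which each institution is the first-listed anchor."""
    firsts = Counter(r[0] for r in shortlists.values() if r)
    return {name: n / len(shortlists) for name, n in firsts.items()}


print(inclusion_frequency(shortlists))
print(mean_rank(shortlists))
print(anchor_share(shortlists))
```

In this placeholder data no two systems state contradictory facts, yet inclusion, position, and the first-listed anchor all differ. That is the distinction between factual contradiction and ordering instability.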

Why Ordering Instability Matters

Win probability is influenced by shortlist inclusion.

Decades of procurement and behavioral research demonstrate that:

- First-position anchoring increases selection probability

- Early inclusion affects downstream evaluation

- Initial framing narrows competitive sets

If shortlist formation is partially mediated by external AI systems, and those systems produce non-uniform ordering, then win probability may exhibit variance.

Even marginal variance introduces forecast sensitivity.

Sensitivity, Not Speculation

The appropriate question is not:

“Is AI costing revenue today?”

The appropriate question is:

“What level of revenue sensitivity would arise from small shifts in win probability?”

Consider conservative bands, each expressed as a relative change in win probability:

- 0.5 percent change in win probability

- 1 percent change

- 2 percent change

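Revenue Sensitivity = Segment Revenue × Relative Δ Win Probability

This follows from the identity above when pipeline volume and average deal value are held constant.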

For a business segment generating 8bn in annual revenue:

- A 1 percent relative shift in win probability equates to 80m in revenue sensitivity
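
The band arithmetic can be sketched in a few lines. This is a minimal illustration, assuming pipeline volume and average deal value are held constant so that revenue scales linearly with win probability, and assuming each band is a relative change. The function names and the 25 percent baseline win rate in the final lines are hypothetical and are not figures from this article.

```python
def revenue_sensitivity(segment_revenue, relative_delta_win_prob):
    """Revenue at stake for a relative change in win probability.

    Assumes pipeline volume and average deal value do not move.
    """
    return segment_revenue * relative_delta_win_prob


def relative_from_points(baseline_win_prob, point_shift):
    """Convert an absolute shift (e.g. 0.01 = one percentage point)
    into a relative change against a baseline win probability."""
    return point_shift / baseline_win_prob


segment_revenue = 8_000_000_000  # the 8bn segment used in the text

for band in (0.005, 0.01, 0.02):
    amount = revenue_sensitivity(segment_revenue, band)
    print(f"{band:.1%} relative shift -> {amount / 1e6:,.0f}m")

# Hypothetical: if the band were read as percentage points against a
# 25% baseline win rate, one point is a 4% relative change.
relative = relative_from_points(0.25, 0.01)
print(f"{revenue_sensitivity(segment_revenue, relative) / 1e6:,.0f}m")
```

The three bands give 40m, 80m, and 160m for the 8bn segment. The last two lines show why the unit matters: the same "1 percent" read as a percentage point against a lower baseline implies a larger figure.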

This is not a claim of realized loss.

It is a modeling exercise.

Small probability shifts translate into large absolute amounts in high-value environments.

Why This Is a Governance Question

Two characteristics distinguish AI-mediated shortlists from traditional digital channels.

1. Non-reconstructability

External AI outputs are ephemeral.

Institutions do not log them.

They cannot be reconstructed retroactively.

If ordering instability increases over time, there is no internal historical baseline.

2. Non-linearity of Adoption

Current penetration may be modest.

However, decision-assist technologies historically follow non-linear adoption curves.

Reliance compounds once trust stabilizes.

Materiality often lags trajectory.

Monitoring typically begins too late.
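
Because outputs cannot be reconstructed after the fact, a baseline exists only if observations are recorded when they are made. The sketch below shows one way to do that, assuming an append-only JSON Lines file is an acceptable store. The record fields, file layout, and function names are illustrative assumptions, not a prescribed schema.

```python
import json
from datetime import datetime, timezone
from pathlib import Path


def log_snapshot(path, prompt_class, model, ranking, captured_at=None):
    """Append one observed shortlist to a JSON Lines file and return the record."""
    record = {
        # Timestamp in UTC so snapshots from different teams are comparable.
        "captured_at": (captured_at or datetime.now(timezone.utc)).isoformat(),
        "prompt_class": prompt_class,
        "model": model,
        "ranking": list(ranking),  # ordered institution names as returned
    }
    with Path(path).open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")
    return record


def load_snapshots(path):
    """Read back every recorded snapshot, oldest first."""
    with Path(path).open(encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


# Example usage (hypothetical names):
# log_snapshot("baseline.jsonl", "tier1_bank_selection", "model_a",
#              ["Bank X", "Bank Y", "Bank Z"])
# history = load_snapshots("baseline.jsonl")
```

Appending rather than overwriting is the point of the design: the history that cannot be rebuilt later is preserved as it accumulates.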

The Measurement Gap

Most institutions monitor:

- Digital performance

- Brand equity

- Search visibility

- Social sentiment

Few monitor:

- Cross-model decision-stage ordering stability

Forecast models implicitly assume that win probability drivers remain stable.

AI-mediated shortlists introduce a potential new driver.

It is currently unmodeled.

What Remediation Actually Means

Remediation does not imply output control.

No institution controls model behavior.

What can be done:

- Benchmark revenue-critical prompt classes

- Measure cross-model ordering dispersion

- Track final recommendation resolution rates

- Monitor competitor ordering drift

- Reduce variance where incoherent structural signals about the institution contribute to it
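
One possible way to quantify cross-model ordering dispersion and its drift is the normalized Kendall tau distance, averaged over every pair of systems. This is a sketch of one reasonable metric, not a method the article specifies. The function names and sample rankings are hypothetical, and rankings are assumed to contain no duplicate names.

```python
from itertools import combinations


def kendall_tau_distance(rank_a, rank_b):
    """Share of item pairs ordered differently by two rankings.

    Only items present in both rankings are compared.
    0.0 means identical order, 1.0 means fully reversed.
    """
    shared = [x for x in rank_a if x in rank_b]
    if len(shared) < 2:
        return 0.0
    pos_b = {x: i for i, x in enumerate(rank_b)}
    pairs = list(combinations(shared, 2))  # each pair is in rank_a order
    discordant = sum(1 for x, y in pairs if pos_b[x] > pos_b[y])
    return discordant / len(pairs)


def ordering_dispersion(shortlists):
    """Mean pairwise Kendall tau distance across all systems."""
    model_pairs = list(combinations(shortlists.values(), 2))
    if not model_pairs:
        return 0.0
    return sum(kendall_tau_distance(a, b) for a, b in model_pairs) / len(model_pairs)


def dispersion_drift(earlier, later):
    """Change in dispersion between two snapshots of the same prompt class."""
    return ordering_dispersion(later) - ordering_dispersion(earlier)


# Hypothetical snapshots of one prompt class at two points in time.
earlier = {
    "model_a": ["Bank X", "Bank Y", "Bank Z"],
    "model_b": ["Bank X", "Bank Y", "Bank Z"],
    "model_c": ["Bank X", "Bank Z", "Bank Y"],
}
later = {
    "model_a": ["Bank X", "Bank Y", "Bank Z"],
    "model_b": ["Bank Z", "Bank Y", "Bank X"],
    "model_c": ["Bank Y", "Bank X", "Bank Z"],
}

print(ordering_dispersion(earlier))
print(ordering_dispersion(later))
print(dispersion_drift(earlier, later))
```

Because this distance ignores institutions that are missing from either list, it captures ordering differences only. Differences in inclusion need a separate measure, such as how often each institution appears.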

The objective is not guaranteed ranking improvement.

The objective is variance reduction and slope tracking.

This is closer to risk calibration than optimization.

The Strategic Inflection Point

The most significant risk is not immediate revenue loss.

It is discovering several years from now that:

- AI-mediated decision flow has grown materially

- Ordering drift has accelerated

- Competitive displacement patterns have hardened

- No historical benchmark exists

Revenue formation would have incorporated an external variable without internal measurement.

That is a governance blind spot.

The Measured Position

This is not an alarmist claim.

It is a structural observation:

External AI systems now participate in early shortlist formation.

Shortlist ordering across systems is measurably unstable.

Win probability is sensitive to shortlist inclusion.

Revenue is sensitive to win probability.

The prudent response is early calibration.

Monitoring should precede materiality.

The Broader Capital Markets Implication

Revenue sensitivity affects:

- Forecast confidence

- Guidance dispersion

- Risk premium

- Valuation assumptions

If external decision-stage compression becomes structurally embedded in enterprise purchasing behavior, it will eventually register in capital markets models.

The institutions that measure slope early will understand variance sooner.

Those that do not will react later.

If you oversee revenue forecasting or financial controls, the appropriate question is not whether AI is material today, but whether win probability assumptions remain fully observable.

If not, baseline measurement should precede materiality.

AIVO Standard provides a structured framework for benchmarking ordering stability and translating dispersion into conservative sensitivity bands.