AI Recommendation Intelligence

The Infrastructure Layer for AI-Mediated Purchase Decisions

58% of buyers now use AI systems to choose between competing brands.

Not to research.

Not to browse.

To choose.

“Which CRM should we buy?”

“Book me a hotel in Paris.”

“What’s the best anti-aging serum?”

AI systems are answering these questions with brand selections — in real time, across sectors, before your analytics register intent and before your sales team knows a deal exists.

This is not a marketing trend.

It is decision infrastructure.

And it requires a new discipline.

The End of Visibility as a Proxy

For two decades, digital strategy optimized for visibility.

Rankings.

Traffic.

Impressions.

Citations.

AI systems collapse that model.

They do not list ten options.

They resolve.

They narrow.

They compare.

They substitute.

They eliminate.

In multi-turn journeys, recommendation concentration increases as the conversation progresses. Two or three brands dominate the final answer state. Everyone else disappears.

When 58% of buyers use AI to choose between competitors, the relevant metric is no longer visibility.

It is outcome.

What We Tested

Over the past year, we have run 500+ structured AI decision inspections across:

- Banking and financial disclosures

- Travel platforms

- Automotive safety and trim differentiation

- Enterprise SaaS shortlisting

- Food safety and allergen boundaries

- Consumer retail and beauty categories

Across ChatGPT, Gemini, Claude, Grok, and Google AI.

Not single prompts.

Replicated multi-turn decision journeys.

Three patterns consistently emerged.

1. Cross-Model Factual Divergence

Three AI systems. Same filings. Three materially different regulatory narratives.

In structured testing of financial disclosures, one model denied active investigations while others confirmed them.

In another case, a model fabricated specific DOJ and European Commission probes that did not exist — then propagated those fabrications into peer comparisons.

This is not ranking volatility.

It is narrative divergence at decision stage.

2. Multi-Turn Drift

AI systems frequently begin with accurate framing at turn one.

By turn three, softening, substitution, or escalation occurs.

In a dairy allergen stress test, models began with correct warnings. By turn three, 58% of runs softened to permissive language that contradicted formal allergen boundaries.

In enterprise SaaS evaluation journeys, shortlist positioning shifted materially by turn four depending on which model the buyer consulted.

The pattern transcends sectors.

Decision outcomes are dynamic, not static.

3. Competitive Displacement Concentration

When elimination occurs, replacement is rarely distributed evenly.

In enterprise SaaS testing, when Canva was removed from final recommendation in 64% of structured runs, three competitors captured the displacement:

- Figma — 41%

- Adobe Creative Cloud — 32%

- Miro — 27%

One competitor captured nearly half of lost decisions.

This concentration risk is invisible to traditional brand tracking. Mention frequency does not reveal who consolidates your losses.

AI systems are not distributing opportunity evenly.

They are consolidating it.

The Missing Discipline

The industry conversation is focused on tactics:

How do we influence AI systems?

That is a second-order question.

The first-order question is:

What are we actually measuring?

This is where AI Recommendation Intelligence emerges.

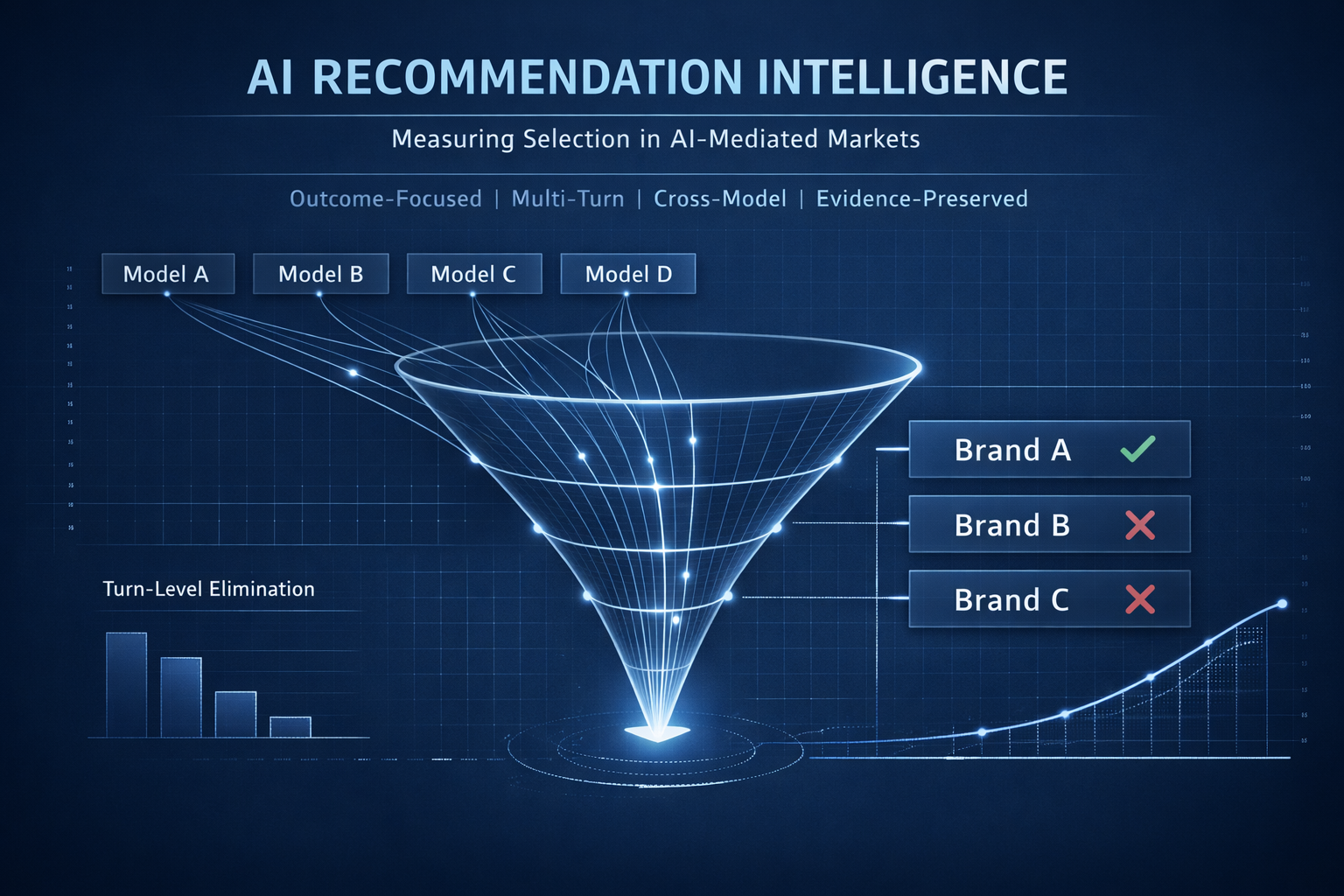

AI Recommendation Intelligence (ARI)

AI Recommendation Intelligence is the measurement-first discipline focused on competitive outcomes inside AI-mediated decision journeys.

It is not citation tracking.

It is not prompt engineering.

It is not sentiment monitoring.

It measures:

1. Final Recommendation Win Rate

How often does your brand resolve as the selected option?

2. Conversational Survival Rate

At which turn does elimination occur?

3. Competitive Displacement Mapping

Which rival captures the outcome when you are removed?

4. Cross-Model Divergence

How do narratives differ across ChatGPT, Gemini, Claude, Grok?

5. Turn-Specific Drift

Where does softening, substitution, or escalation occur?

6. Temporal Stability

Do outcomes change after model updates, absent brand intervention?

7. Evidence Preservation

Are full transcripts stored so the baseline can be reconstructed?

Without these layers, any optimization effort is speculative.

ARI in Practice: Enterprise SaaS Example

Baseline (Week 1)

- 40 structured decision runs across 4 models

- Final recommendation win rate: 23%

- Primary elimination point: Turn 2 (comparison stage)

- Replacement competitor: Brand Y (67% of displacement)

Intervention (Week 2–4)

- Adjusted comparison-stage positioning language

- No other content changes

Re-Measurement (Week 5)

- Same 40-run protocol

- Final recommendation win rate: 31% (+8pp)

- Replacement by Brand Y: 48% (–19pp)

- Cross-model divergence reduced

Attribution is now measurable.

This is AI Recommendation Intelligence:

Measure.

Intervene.

Verify.

Why Measurement Precedes Manipulation

In traditional search, you saw rankings before optimizing.

In AI systems, without structured inspection, you are blind:

- Your baseline recommendation rate — unknown

- Your elimination point — unknown

- Your replacement competitor — unknown

- Your cross-model variance — unknown

Once intervention begins without baseline capture, the original state is lost.

Attribution becomes guesswork.

In a system mediating more than half of buyer decisions, guesswork is expensive.

Infrastructure, Not Tactics

AI Recommendation Intelligence is not an add-on to GEO.

It is the layer beneath it.

Optimization without measurement is interference without instrumentation.

Measurement without intervention is observation without leverage.

The sequence matters:

Baseline.

Mapping.

Replication.

Then intervention.

Infrastructure creates accountability.

Tactics without infrastructure create noise.

The Competitive Reality

When AI systems mediate 58% of competitive choices:

- Shortlist instability directly affects pipeline

- Concentration risk shifts market share silently

- Fabricated narratives distort valuation

- Drift alters safety perception

- Model updates rebalance categories without warning

This is not an experimental channel.

It is emerging competitive infrastructure.

What This Means By Function

For Competitive Strategy

Know your recommendation win rate before a rival consolidates your category inside AI systems.

For Risk & Governance

Track factual divergence before regulatory narratives form outside your control.

For Product & Marketing

Understand which attributes AI systems emphasize, ignore, or fabricate.

For Executive Leadership

Treat AI recommendation systems as infrastructure, not experimentation.

The methodology is consistent.

The implications vary by function.

The Category Declaration

Search Engine Optimization was the first wave.

Generative Engine Optimization is an interim adaptation.

AI Recommendation Intelligence is the next discipline.

Outcome-focused.

Multi-turn.

Cross-model.

Evidence-preserved.

Competitive.

When AI systems resolve decisions, the unit of analysis is no longer traffic.

It is selection.

AI Recommendation Intelligence is not a tactic.

It is the infrastructure layer for AI-mediated markets.

The brands building this infrastructure now will understand their competitive position before their competitors realize it has shifted.

When 58% of buyers use AI to choose, measurement is not preparation for strategy.

Measurement is strategy.

Here is a CTA you can append to the end of the Medium and Journal versions.

It bridges both AIVO Edge (competitive) and AIVO Evidentia (governance) without sounding promotional.

Where This Goes Next

AI Recommendation Intelligence is not theoretical.

It is operational.

For organizations focused on competitive positioning, this means understanding:

- Your final recommendation win rate

- Where elimination occurs

- Which rival consolidates displacement

- How concentration evolves across models

That is the focus of AIVO Edge — competitive outcome intelligence for AI-mediated markets.

For organizations focused on governance, risk, and evidentiary integrity, this means tracking:

- Cross-model factual divergence

- Regulatory narrative instability

- Fabricated claims

- Drift across model updates

- Transcript preservation for audit and board review

That is the mandate of AIVO Evidentia — AI decision intelligence for regulated environments.

Different lenses.

Same infrastructure.

If AI systems are shaping decisions in your category, the first step is not optimization.

It is baseline measurement.

If you would like to run a structured ARI inspection in your sector — competitive or regulated — reach out.

The market is moving from visibility to selection.

Understanding where you stand before it shifts further is no longer optional.