AI Regulation: Fact and Fiction

What the EU AI Act, the U.S. Securities and Exchange Commission, and Global Regulators Actually Require When AI Is Relied Upon

Executive Summary

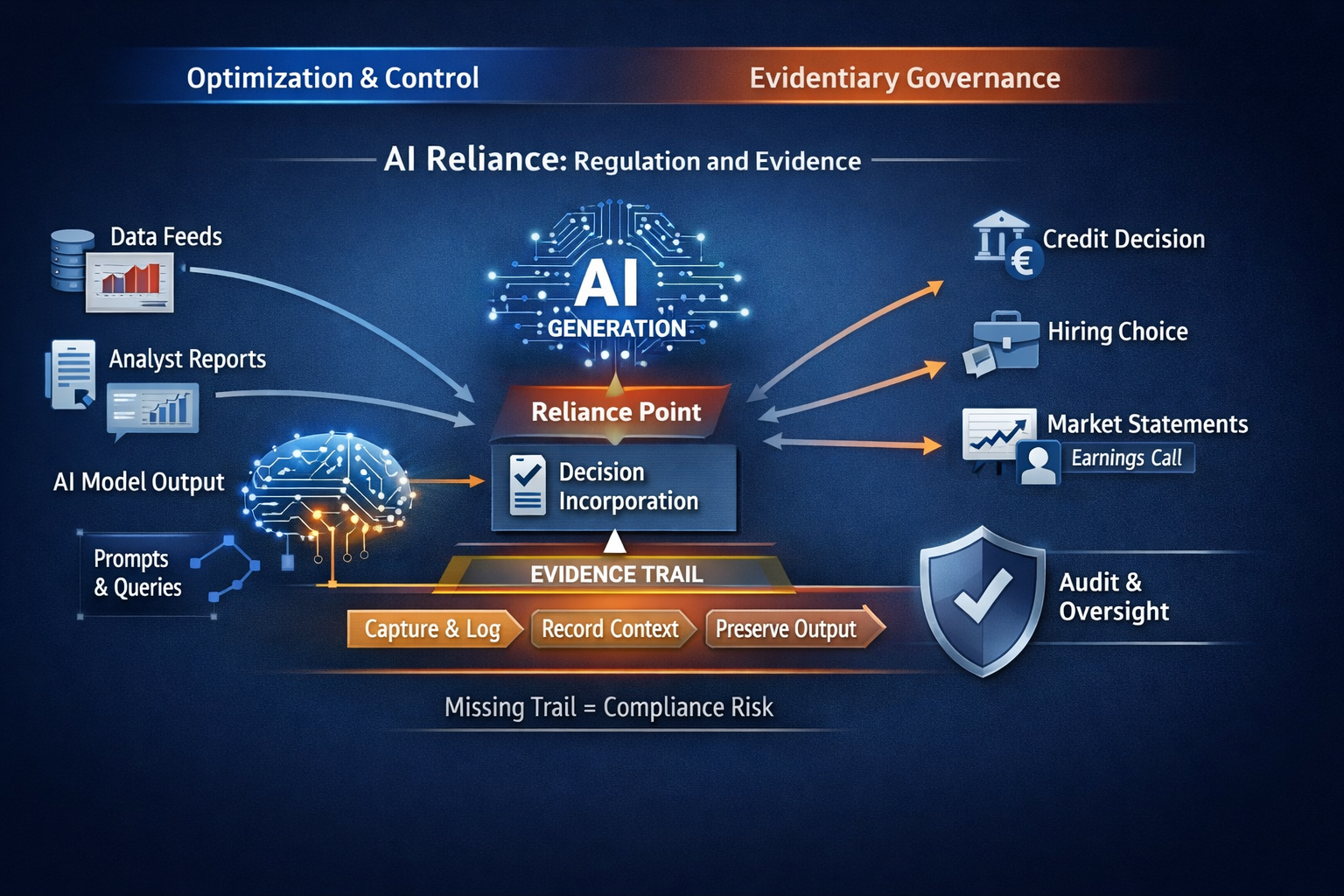

AI regulation is widely discussed as if it were about controlling models. That framing is convenient, but wrong.

The dominant regulatory exposure does not arise from how AI systems are built. It arises from how AI-generated statements are relied upon in decisions that carry legal, financial, or reputational consequences.

Across jurisdictions, regulators are converging on a single expectation:

If an AI-generated statement influences a consequential decision, the organization that relied on it must be able to reconstruct what was said, when, and in what context.

This article separates fact from fiction in current AI regulation and maps enforceable obligations to a specific and under-governed risk surface: AI Reliance.

1. The Core Misconception: “AI Regulation Is About AI Systems”

Fiction

AI regulation primarily targets model safety, bias, or hallucinations.

Fact

Regulatory exposure is triggered at the moment of reliance, not generation.

Regulators consistently assess:

- Did an AI-generated statement influence a decision?

- Was the decision consequential?

- Can the organization reconstruct the representation relied upon?

If the answer to the third question is no, the issue becomes evidentiary rather than technical.

Reliance is not presumed. It is established when an AI-generated statement is incorporated, implicitly or explicitly, into a decision process or workflow. Mere exposure to an AI output is insufficient; incorporation into the decision is what establishes reliance.

2. The EU AI Act: What It Requires, and What It Implies

What the EU AI Act Explicitly Requires

The EU AI Act introduces enforceable obligations around:

- Traceability

- Record-keeping

- Post-hoc accountability

These obligations apply to:

- High-risk AI systems

- General-purpose AI models with systemic risk

- AI outputs used in regulated or safety-critical contexts

The Act focuses on use and impact, not merely system ownership.

Where AI outputs inform:

- Credit decisions

- Risk classification

- Employment screening

- Healthcare recommendations

- Financial or consumer communications

Organizations must be able to demonstrate the basis of reliance.

The Statutory Objection, and Why It Fails

A common counterargument is that the Act regulates systems “placed on the market” or “put into service,” not ambient third-party AI statements.

That reading fails for three reasons:

- EU supervisory doctrine prioritizes effect over origin. Where an output influences a regulated outcome, it enters scope regardless of provenance.

- Use-based obligations override deployment boundaries. The Act already regulates downstream use, not just upstream creation.

- Post-hoc accountability is outcome-driven. Supervisors assess what influenced the decision, not who authored it.

“We did not control the system” is not a defensible position once reliance is established.

3. SEC Expectations: Disclosure, Controls, and Reconstruction

Fiction

The SEC only concerns itself with internally deployed AI systems.

Fact

The SEC regulates decision-relevant information flows, regardless of source.

SEC expectations around:

- Disclosure controls

- Internal controls

- Reasonable basis for statements

- Post-event reconstruction

apply whenever information influences:

- Earnings calls

- Risk disclosures

- Investment decisions

- Product or performance claims

AI-generated outputs are functionally equivalent to:

- Third-party analyst reports

- Market data feeds

- Consultant memoranda

All are routinely within scope when relied upon. AI introduces volatility, not a new exemption.

The governing question remains unchanged:

What information did you rely on at the time the decision was made, and can you evidence it?
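To make the governing question concrete, here is a minimal Python sketch of a reconstruction query. It assumes each relied-upon AI output was captured with a decision identifier and a capture timestamp. `CapturedOutput` and `relied_upon_at` are hypothetical names for illustration, not an SEC requirement or an existing tool.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CapturedOutput:
    """Hypothetical record of one AI output, captured when it was relied upon."""
    decision_id: str
    captured_at: datetime  # timezone-aware UTC timestamp of capture
    prompt: str
    output: str


def relied_upon_at(records: list[CapturedOutput], decision_id: str,
                   decided_at: datetime) -> list[CapturedOutput]:
    """Return outputs linked to a decision that existed when it was made, oldest first.

    Outputs captured after `decided_at` are excluded because they cannot have
    informed the decision. An empty list means the basis cannot be evidenced.
    """
    basis = [r for r in records
             if r.decision_id == decision_id and r.captured_at <= decided_at]
    return sorted(basis, key=lambda r: r.captured_at)


if __name__ == "__main__":
    utc = timezone.utc
    records = [
        CapturedOutput("disclosure-q3", datetime(2024, 10, 1, 9, 0, tzinfo=utc),
                       "Summarize competitive positioning", "Draft A"),
        CapturedOutput("disclosure-q3", datetime(2024, 10, 3, 9, 0, tzinfo=utc),
                       "Revise market outlook", "Draft B"),
    ]
    decided = datetime(2024, 10, 2, 12, 0, tzinfo=utc)
    # Only "Draft A" existed at decision time, so only it is returned.
    for r in relied_upon_at(records, "disclosure-q3", decided):
        print(r.captured_at.isoformat(), r.output)
```

The time filter is the essential design choice. Without capture timestamps, an organization can show what an AI system says now, but not what it said when the decision was made.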

4. The Cross-Jurisdictional Convergence

Across regulatory domains, the same logic applies:

| Domain | Long-standing Rule | AI Translation |
|---|---|---|
| Financial regulation | Decisions must be auditable | AI reliance must be reconstructable |
| Consumer protection | Claims must be substantiated | AI-generated claims must be evidenced |
| Corporate governance | Boards oversee material risk | AI reliance is a board-level risk |
| Litigation & discovery | Evidence must be preserved | AI statements must be logged |

No new doctrine is required. Existing law is encountering a new failure mode.

5. Accuracy vs Evidence: A Necessary Distinction

Accuracy determines substantive compliance.

Evidence determines whether compliance can be assessed at all.

An organization may believe an AI-generated statement was accurate. That belief is irrelevant if the statement cannot later be reconstructed.

Regulators consistently treat the absence of evidence as a control failure, not a technical limitation.

6. Why Optimization and Monitoring Are Insufficient

Optimization and monitoring reduce the probability of error ex ante.

They do not:

- Preserve representations

- Capture decision context

- Enable reconstruction under scrutiny

When incidents occur, regulators privilege evidentiary continuity, not performance metrics.

Optimization without evidentiary capture accelerates exposure rather than mitigating it.

7. The Live Governance Gap

Most enterprises have:

- Cybersecurity audits

- Financial audits

- Data protection programs

- Model risk management

Most lack:

- A record of AI-generated statements relied upon

- Prompt-to-output chains linked to decisions

- Time-bound evidence of what an AI system stated

This gap remains invisible until scrutiny begins. At that point, it is treated as a governance failure.

8. Fact vs Fiction Summary

Fiction

- AI regulation is mainly about model safety

- External AI systems are out of scope

- Lack of control limits responsibility

- Accuracy monitoring is sufficient

Fact

- Regulation attaches at reliance

- External representations matter if relied upon

- Responsibility follows decision impact

- Evidence is the governing standard

The Role of the AIVO Standard™

AI regulation does not require prediction, optimization, or intervention.

It requires evidence.

The AIVO Standard™ exists to address a narrow but critical gap:

- It does not optimize AI outputs.

- It does not influence models.

- It does not provide advice or recommendations.

It defines a neutral evidentiary framework for:

- Capturing AI-generated representations at the point of reliance

- Preserving prompt-to-output context

- Enabling post-hoc reconstruction under regulatory, audit, or legal scrutiny

In regulatory terms, AIVO operates as an assurance and evidence layer, analogous to logging and audit trails in financial systems.
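To show what a logging and audit-trail layer of this kind might record, here is a minimal Python sketch. It assumes an in-memory store and uses SHA-256 hash chaining as the tamper-evidence mechanism, a technique common in audit trails. `RelianceLog`, `record`, `verify`, and the entry fields are hypothetical names chosen for illustration. They are not the AIVO Standard's specification or any published AIVO interface.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so identical content always yields the same hash.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class RelianceLog:
    """Hypothetical append-only log of AI outputs captured at the point of reliance.

    Each entry keeps the prompt, the verbatim output, the decision it informed,
    and the hash of the previous entry, so after-the-fact edits break the chain.
    """

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def record(self, prompt: str, output: str, decision_id: str, source_label: str) -> dict:
        body = {
            "captured_at": datetime.now(timezone.utc).isoformat(),
            "prompt": prompt,
            "output": output,
            "decision_id": decision_id,
            "source_label": source_label,  # hypothetical: which assistant produced the output
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link. False means an entry was altered,
        reordered, or deleted from before the end of the chain."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = RelianceLog()
    log.record("Summarize counterparty risk", "Counterparty summary text", "credit-017", "assistant-x")
    log.record("List key covenants", "Covenant list text", "credit-017", "assistant-x")
    print(log.verify())  # True
    log.entries[0]["output"] = "edited later"
    print(log.verify())  # False
```

The chain alone does not detect removal of the most recent entry. One common approach is to anchor the latest hash outside the log, for example in a separate system or a periodic signed checkpoint.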

Call to Action

If your organization relies, explicitly or implicitly, on AI-generated statements in decisions that could be scrutinized by regulators, auditors, courts, or boards, the relevant question is no longer whether AI is accurate.

It is whether reliance can be proven.

AIVO Standard™ provides a structured, audit-aligned approach to evidencing AI reliance before scrutiny begins.

For organizations seeking to understand whether this governance gap exists in their current controls, an initial AIVO Reliance Assessment can be conducted under NDA.

Annex A

Methodology, Scope, and Legal Basis

A.1 Purpose of This Annex

This annex clarifies:

- The analytical method used in the article

- The scope boundaries of the claims made

- The legal and supervisory foundations relied upon

- What the article does not claim

It is intended to support defensibility, not to expand regulatory interpretation.

A.2 Methodological Approach

The analysis applies a functional governance methodology, not a doctrinal or textualist one.

Specifically, it:

- Examines how regulators assess decision processes, not technologies

- Maps AI-generated outputs to existing regulatory constructs

- Evaluates exposure based on use, reliance, and reconstructability

- Avoids speculative future regulation or draft guidance

No assumptions are made about:

- Intentional misconduct

- Model accuracy or bias

- AI system ownership or deployment architecture

The analysis focuses solely on post-hoc accountability.

A.3 Definition of AI Reliance (Operational)

For the purposes of this article, AI Reliance is defined narrowly as:

The incorporation, explicit or implicit, of an AI-generated statement into a consequential decision, workflow, or representation such that the statement influences outcome, judgment, or disclosure.

This definition excludes:

- Passive exposure

- Exploratory or non-decision use

- AI outputs not connected to an action or representation

Reliance is assessed functionally, not rhetorically.
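Because the definition is functional, it can be read as a checklist in which every element must hold. The Python sketch below encodes that reading. It assumes each element can be answered yes or no for a given use, whereas real assessments involve judgment that booleans cannot capture. `AIUse` and `assess_reliance` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AIUse:
    """Hypothetical description of how one AI-generated statement was used."""
    incorporated: bool   # worked, explicitly or implicitly, into a decision, workflow, or representation
    consequential: bool  # that decision or representation carries legal, financial, or reputational weight
    influential: bool    # the statement influenced outcome, judgment, or disclosure


def assess_reliance(use: AIUse) -> tuple[bool, list[str]]:
    """Apply the operational definition: reliance exists only if every element holds.

    Returns (is_reliance, unmet_elements) so the assessment itself can be recorded.
    """
    unmet = []
    if not use.incorporated:
        # Covers the exclusions: passive exposure, exploratory use, outputs tied to no action.
        unmet.append("not incorporated into a decision, workflow, or representation")
    if not use.consequential:
        unmet.append("decision or representation is not consequential")
    if not use.influential:
        unmet.append("statement did not influence outcome, judgment, or disclosure")
    return (not unmet, unmet)
```

For example, `assess_reliance(AIUse(True, True, True))` returns `(True, [])`, while an exploratory query that fed no decision fails on the first element. Returning the unmet elements, rather than a bare boolean, keeps a record of why a use was judged out of scope.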

A.4 Regulatory Interpretation Basis

The article relies on established supervisory logic, not novel AI doctrine.

Across financial regulation, consumer protection, corporate governance, and litigation, regulators consistently apply three principles:

- Effect over origin: oversight attaches to what influenced a decision, not who authored it.

- Outcome-driven accountability: where an outcome is regulated, the inputs that shaped it fall within scope.

- Reconstructability as a control standard: inability to evidence decision inputs is treated as a governance failure.

These principles predate AI and are already embedded in:

- Financial audit standards

- Disclosure control frameworks

- Supervisory review processes

- Discovery and evidentiary rules

The article applies these principles to AI-generated representations without extending or redefining them.

A.5 EU AI Act Scope Interpretation

The article does not claim that the EU AI Act explicitly regulates all third-party AI systems or ambient AI content.

Instead, it asserts the following limited and defensible position:

- The Act introduces enforceable obligations around traceability, record-keeping, and accountability for AI-influenced outcomes.

- Where an AI-generated output materially influences a regulated decision, supervisory scrutiny will focus on what was relied upon, regardless of system ownership.

- This reflects established EU administrative practice in safety, product liability, and financial supervision.

This is an interpretive application, not a claim of textual certainty.

A.6 SEC and U.S. Regulatory Expectations

The article does not assert the existence of AI-specific SEC rules governing external AI outputs.

It relies on long-standing SEC expectations that:

- Decision-relevant information must be supportable

- Disclosures must have a reasonable basis

- Internal controls must enable reconstruction under review

AI-generated statements are treated as functionally equivalent to other third-party information sources when relied upon.

No claim is made that AI outputs are uniquely regulated. They are assessed under existing standards.

A.7 Accuracy vs Evidence Clarification

The article distinguishes, but does not oppose, accuracy and evidence.

- Accuracy governs substantive compliance.

- Evidence governs supervisory assessability.

Both are required. The absence of either creates exposure.

The article focuses on evidence because it is the necessary precondition for regulatory evaluation.

A.8 Exclusions and Non-Claims

This article does not:

- Provide legal advice

- Assert guaranteed enforcement outcomes

- Claim that AI reliance is always regulated

- Suggest that monitoring or optimization is valueless

- Predict specific fines, penalties, or timelines

It also does not argue that AI systems are inherently non-compliant or unsafe.

A.9 Role of the AIVO Standard (Clarificatory)

References to the AIVO Standard™ are descriptive, not prescriptive.

The Standard is presented as:

- An evidentiary framework

- A logging and reconstruction methodology

- An assurance-oriented governance layer

It is explicitly not positioned as:

- A compliance certification

- A regulatory substitute

- An optimization or intervention system

- Legal or supervisory advice

A.10 Use of This Article

This article and annex are suitable for:

- Governance discussion

- Risk assessment framing

- Internal education

- Regulator or auditor dialogue

They should not be relied upon as a substitute for legal counsel or formal regulatory guidance.

End of Annex A

Annex B

Illustrative AI Reliance Scenarios (Non-Exhaustive)

B.1 Purpose of This Annex

This annex provides concrete illustrations, drawn from several sectors, of AI Reliance as defined in Annex A.

Its purpose is to clarify how reliance is established in practice, not to enumerate all possible cases.

Each scenario is:

- Grounded in existing regulatory logic

- Neutral as to intent or accuracy

- Focused on evidentiary reconstructability

No scenario assumes misconduct, negligence, or regulatory breach by default.

B.2 Banking and Financial Services

Scenario

A relationship manager uses a general-purpose AI assistant to summarize a counterparty’s financial health and risk profile based on publicly available information. The summary is referenced during an internal credit committee discussion and influences a credit limit decision.

Why Reliance Exists

- The AI-generated summary is incorporated into a regulated decision process.

- The decision has material financial consequences.

- The summary shapes judgment, even if not determinative.

Regulatory Question Triggered

- What information influenced the credit decision at the time it was made?

Governance Gap

- The AI output was not logged.

- The prompt context is unavailable.

- The exact wording relied upon cannot be reconstructed.

Supervisory Interpretation

- This is not a model risk issue.

- It is a failure to evidence decision inputs.

B.3 Corporate Disclosure and Investor Communications

Scenario

A corporate communications team uses an AI assistant to draft language summarizing competitive positioning and market outlook. Elements of the draft are incorporated into an earnings call script and investor Q&A preparation.

Why Reliance Exists

- The AI output informs external representations.

- Those representations influence investor understanding.

- Disclosure obligations attach to the resulting statements.

Regulatory Question Triggered

- What was the basis for the statements made to the market?

Governance Gap

- The AI-generated draft is not preserved.

- The evolution of language cannot be reconstructed.

- The decision context is lost.

Supervisory Interpretation

- AI is treated as a source of decision-relevant information.

- Absence of evidence is a disclosure control weakness.

B.4 Healthcare and Life Sciences

Scenario

A clinician consults an AI assistant for a summary of treatment options and contraindications for a complex case. The summary informs, but does not replace, clinical judgment and is discussed during case review.

Why Reliance Exists

- The AI output influences clinical decision-making.

- The context is safety-critical.

- The AI is part of the reasoning chain.

Regulatory Question Triggered

- What information informed the treatment decision?

Governance Gap

- The AI interaction is ephemeral.

- No record exists of the specific guidance provided.

- Post-event review cannot reconstruct the informational basis.

Supervisory Interpretation

- This is not about diagnostic accuracy.

- It is about traceability of decision inputs.

B.5 Employment and Human Capital Decisions

Scenario

An HR team uses an AI assistant to summarize candidate strengths and weaknesses from CVs and online profiles. The summary is discussed during hiring deliberations.

Why Reliance Exists

- The AI output shapes assessment and ranking.

- The outcome affects employment decisions.

- Employment law and fairness obligations apply.

Regulatory Question Triggered

- What factors influenced the hiring decision?

Governance Gap

- The AI-generated assessment is not logged.

- The rationale cannot be reconstructed.

- Bias or error cannot be evaluated post hoc.

Supervisory Interpretation

- Lack of evidence undermines fairness assessment.

- Reliance without traceability creates exposure.

B.6 Consumer Communications and Product Claims

Scenario

A marketing team uses an AI assistant to generate product descriptions and comparative claims. The language is published with minor edits.

Why Reliance Exists

- AI-generated content becomes a consumer-facing claim.

- Consumer protection standards apply.

- Substantiation is required.

Regulatory Question Triggered

- On what basis were these claims made?

Governance Gap

- The original AI output is unavailable.

- Prompt context is lost.

- Claim provenance cannot be demonstrated.

Supervisory Interpretation

- Accuracy alone is insufficient.

- Claim substantiation requires evidentiary continuity.

B.7 Board and Executive Decision Support

Scenario

An executive uses an AI assistant to summarize regulatory developments and risk exposure ahead of a board meeting. The summary informs board discussion and risk posture.

Why Reliance Exists

- The AI output influences governance-level decisions.

- Board oversight obligations attach.

- Risk assessment is consequential.

Regulatory Question Triggered

- What information informed board judgment?

Governance Gap

- The AI summary is not preserved.

- Decision rationale cannot be reconstructed.

- Oversight documentation is incomplete.

Supervisory Interpretation

- Boards are accountable for information they rely upon.

- Absence of records weakens governance defense.

B.8 Common Pattern Across Scenarios

Across all sectors, the pattern is consistent:

- AI output influences judgment.

- Judgment leads to a consequential outcome.

- The output is not preserved.

- Reconstruction becomes impossible.

- Regulators treat this as a governance failure.

None of these scenarios require:

- Model deployment

- Intentional automation

- Exclusive reliance on AI

- Demonstrable error or harm

Reliance alone can be sufficient to trigger scrutiny.

B.9 Relevance to Evidentiary Governance

These scenarios illustrate why AI Reliance is:

- Already present in enterprise workflows

- Already relevant to regulators

- Largely ungoverned today

They also clarify the narrow role of evidentiary frameworks such as the AIVO Standard™:

- Capture what was relied upon

- Preserve decision context

- Enable post-hoc reconstruction

They do not imply optimization, intervention, or compliance guarantees.

End of Annex B