AI Reliance and External Representation: A Governance Record

The reconstructability of AI-generated company descriptions over time

Journal Note

This entry is published as a governance record, not as an opinion piece, case study, or research paper.

It is intended to be read as a factual governance artefact rather than as an argument or recommendation.

Its purpose is to document the existence and repeatability of an externally observable phenomenon with implications for enterprise governance, audit, and evidentiary control. It does not assess accuracy, fault, or harm.

Executive Summary

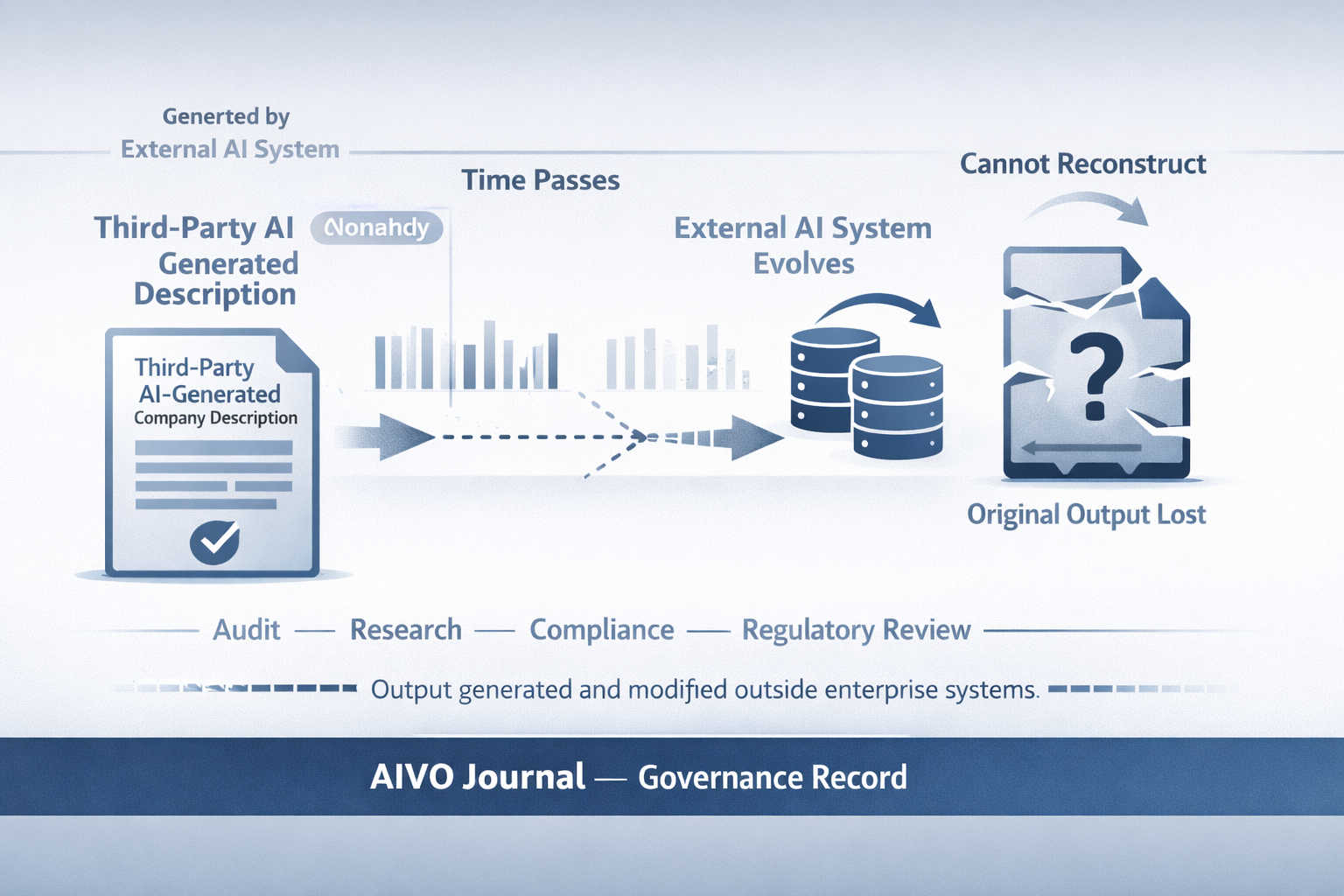

This governance record documents a structural gap: widely used AI systems generate and update descriptive information about real companies, but there is no reliable means of evidencing what those descriptions said at a given point in time.

The record shows that AI assistants routinely produce externally consumable descriptions of businesses that are plausible, coherent, and commonly consulted in contexts such as research, due diligence, procurement, journalism, and internal decision-making. These descriptions are generated independently by AI systems and are not assertions made by the companies themselves.

Once such AI-generated descriptions change or disappear due to model updates or system evolution, there is typically no reliable way to reconstruct what was presented at a specific point in time. The issue identified is not whether the descriptions are accurate, biased, or misleading. It is whether an authoritative evidentiary record exists once an externally generated description has been delivered and later altered.

The underlying research involved repeated testing across multiple AI systems, industries, and time periods using fixed prompt structures and controlled replication. This approach enabled observation of consistent patterns of change and loss of reconstructability, rather than isolated or anecdotal outputs.

This record does not allege wrongdoing by any company, does not assess harm, damages, or legal liability, and does not evaluate the internal behavior or design of AI models. AI systems are treated as externally observable actors, and the analysis is limited to evidentiary availability after outputs are delivered.

Existing enterprise tools, including search optimization, AI visibility tracking, monitoring dashboards, and post hoc audits, are not designed to preserve time-stamped records of externally generated AI descriptions. As AI-mediated information is increasingly consulted upstream of formal verification processes, the absence of durable records may complicate audit, regulatory review, and dispute resolution. This record does not assess whether such complications have occurred, only whether current controls would permit reconstruction if they did.

Primary evidence supporting these observations, including time-stamped outputs and preserved artefacts, is available for inspection by qualified parties under confidentiality agreements. This mirrors standard forensic, audit, and expert-review practices and is intended to prevent misinterpretation or misuse of entity-specific material.

The purpose of this public record is to establish the existence and repeatability of the phenomenon and to frame it as an evidentiary and governance issue, not a question of AI accuracy, model quality, or marketing performance.

Scope and Boundaries

This governance record is intentionally narrow and bounded.

It does not:

- assess the accuracy, bias, or truthfulness of AI-generated content

- make findings of harm, damages, or liability

- allege wrongdoing by any company

- evaluate internal AI model behavior, training data, or system design

- provide legal, compliance, or regulatory advice

Its scope is limited to the availability and reconstructability of evidence after delivery of externally generated AI descriptions.

What This Record Establishes

This record establishes the following externally observable conditions:

- AI systems generate descriptive information about real companies without enterprise involvement.

- These descriptions are consulted in contexts structurally adjacent to reliance, such as research, due diligence, procurement, journalism, and internal decision-making.

- Once altered or overwritten, previously delivered descriptions cannot be reliably reconstructed using standard enterprise controls.

- Existing visibility, monitoring, optimization, and compliance mechanisms do not address this evidentiary gap.

No causal claims, outcome claims, or attribution claims are made.

Evidence Access and Review

Primary evidence artefacts supporting this record include time-stamped AI outputs, preserved prompt inventories, and linked records demonstrating change over time.

Access to these artefacts is available to qualified parties under confidentiality agreements for purposes limited to governance, audit, regulatory, legal, or expert review. Artefacts are not released publicly in order to prevent misinterpretation, misuse, or reputational harm, consistent with forensic and audit norms.

Interpretive Boundary

This record establishes that externally generated AI descriptions of real companies can be produced, foreseeably consulted, and later lost to reconstruction. It does not assert that such descriptions are wrong, harmful, or actionable. It asserts that once delivered and subsequently altered, they cannot be reliably evidenced using existing controls.

Published by AIVO Journal

A publication focused on AI governance, evidence, and audit-grade standards.

Primary evidence artefacts supporting this governance record are available for inspection by qualified parties under confidentiality agreements.

Requests for evidentiary review should be limited to governance, audit, regulatory, legal, or expert evaluation purposes.

To request access: audit@aivostandard.org