AI Reliance Logging

Control Summary for Boards, Audit Committees, and Investors

What problem this control addresses

As AI systems increasingly generate recommendations, explanations, summaries, and classifications in governed workflows, organizations face a growing evidentiary risk:

they often cannot reconstruct what an AI system presented at the moment its output could have influenced a decision.

When challenged by auditors, regulators, insurers, or opposing counsel, the absence of reconstructable AI outputs is treated not as a technical limitation, but as a procedural control failure.

Control definition

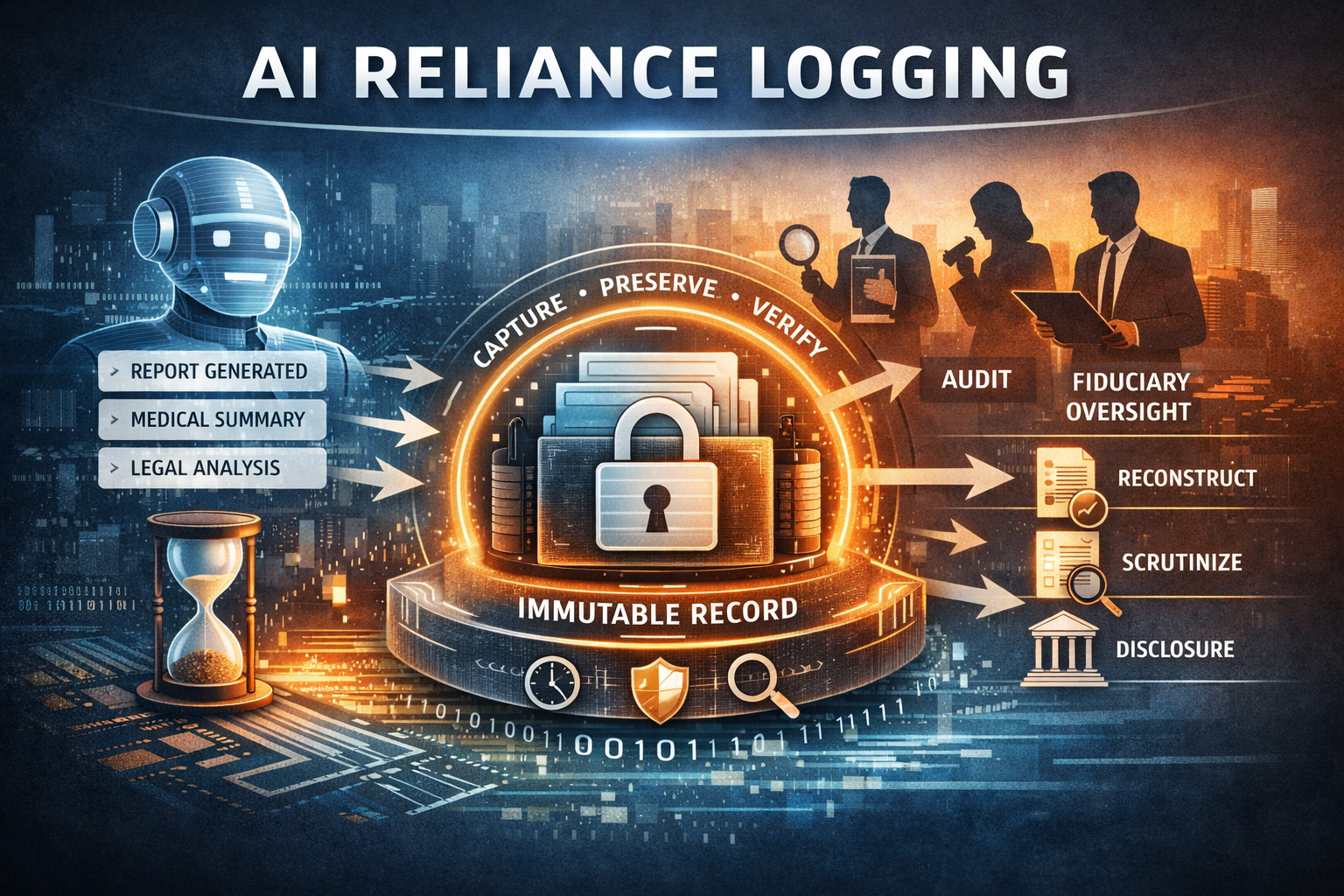

AI Reliance Logging is a distinct evidentiary control class concerned with preserving AI-generated representations that may later become the object of reliance.

AI Reliance Logging is the systematic, reproducible capture and preservation of externally observable AI-generated representations as timestamped, immutable evidence, enabling later reconstruction and scrutiny of what was presented at the moment it could have influenced a governed decision.

The control preserves what was available to be relied upon, not whether or how a human acted on it.

What is logged

- AI-generated representations as externally observable outputs (e.g. claims, summaries, explanations, classifications, omissions)

- Captured at the point outputs intersect with governed workflows

- Preserved as immutable, time-bound records

- Sufficient context to enable later reconstruction and inspection
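As an illustration only, the properties listed above could be sketched as a single captured record. Everything named here (`RelianceRecord`, `capture`, `verify`, the `workflow_id` and `system_id` fields) is a hypothetical assumption, not something this summary prescribes. The sketch shows a timestamped record whose SHA-256 digest makes later alteration detectable; actual immutability would additionally depend on where and by whom the record is stored.

```python
import hashlib
import json
from dataclasses import dataclass, replace
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: fields cannot be reassigned in memory
class RelianceRecord:
    captured_at: str   # UTC capture time, ISO 8601
    workflow_id: str   # hypothetical ID of the governed workflow
    system_id: str     # hypothetical ID of the AI system
    output_text: str   # the representation exactly as presented
    digest: str        # SHA-256 over the four fields above


def _digest(captured_at: str, workflow_id: str, system_id: str, output_text: str) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    payload = json.dumps(
        {
            "captured_at": captured_at,
            "workflow_id": workflow_id,
            "system_id": system_id,
            "output_text": output_text,
        },
        sort_keys=True,
        ensure_ascii=False,
    ).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def capture(workflow_id: str, system_id: str, output_text: str, now=None) -> RelianceRecord:
    """Record what was presented, and when, without judging its content."""
    ts = (now or datetime.now(timezone.utc)).isoformat()
    return RelianceRecord(
        ts, workflow_id, system_id, output_text,
        _digest(ts, workflow_id, system_id, output_text),
    )


def verify(record: RelianceRecord) -> bool:
    """True if the stored digest still matches the stored content."""
    return record.digest == _digest(
        record.captured_at, record.workflow_id, record.system_id, record.output_text
    )


if __name__ == "__main__":
    rec = capture("claims-review", "summarizer-v1", "Claim appears eligible.")
    print(verify(rec))  # digest matches the captured content
    altered = replace(rec, output_text="Claim appears ineligible.")
    print(verify(altered))  # content changed, digest no longer matches
```

Note that the record holds only the output and its context. It carries no field for accuracy, model reasoning, or the human decision, which is consistent with the evidentiary-only scope of the control.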

What is explicitly not logged or claimed

AI Reliance Logging does not:

- evaluate accuracy, bias, or correctness

- explain or validate model reasoning

- influence or constrain AI behavior

- log human decisions, intent, or cognition

- assert legal admissibility or sufficiency

The control is evidentiary only.

Why existing controls are insufficient

Most organizations rely on internal operational logs (prompts, traces, system events). These logs:

- are self-attested and mutable

- are optimized for debugging, not proof

- rarely preserve outputs as presented

- do not withstand adversarial scrutiny
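To make the "mutable" point concrete, one common way to make a log tamper-evident is hash chaining, where each entry's hash depends on every entry before it. This technique is an assumption introduced here for illustration; the summary does not prescribe it, and the names `link`, `build_chain`, and `first_mismatch` are hypothetical. A minimal sketch:

```python
import hashlib

GENESIS = "0" * 64  # assumed fixed starting value for the chain


def link(prev_hash: str, record_digest: str) -> str:
    """Hash of the previous link combined with this record's digest."""
    return hashlib.sha256((prev_hash + record_digest).encode("ascii")).hexdigest()


def build_chain(digests: list[str]) -> list[str]:
    """Return one chain hash per record digest, in order."""
    chain = []
    prev = GENESIS
    for d in digests:
        prev = link(prev, d)
        chain.append(prev)
    return chain


def first_mismatch(digests: list[str], chain: list[str]):
    """Index of the first record that fails to match the chain, or None."""
    prev = GENESIS
    for i, (d, h) in enumerate(zip(digests, chain)):
        if link(prev, d) != h:
            return i
        prev = h
    if len(digests) != len(chain):
        # Records were dropped or appended relative to the chain.
        return min(len(digests), len(chain))
    return None
```

A chain like this only addresses mutability. It stays self-attested if the same party holds both the records and the chain, since that party could recompute it; independence would require the chain hashes to be held or countersigned outside the operating function.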

In regulated domains, evidentiary obligations have historically moved from internal logs to independent, externally verifiable records once liability crystallizes (e.g. transaction logging, communications archiving, trade surveillance). AI reliance follows the same pattern.

Control placement and ownership

AI Reliance Logging aligns with:

- Internal Audit

- Disclosure and Controls functions

- Legal and Risk oversight

It is distinct from AI development, ML ops, and model governance.

Ownership mirrors other evidentiary controls where independence and reconstructability are required.

Why this control is becoming unavoidable

Without AI Reliance Logging:

- AI outputs cannot be reliably reconstructed

- normal variance in AI outputs becomes indistinguishable from a control deficiency

- organizations cannot prove what was presented

- reliance disputes escalate unnecessarily

With AI Reliance Logging:

- evidentiary gaps are closed

- scrutiny shifts from speculation to inspection

- AI adoption aligns with existing audit and legal doctrine

Bottom line

AI systems introduce a new class of reliance risk.

That risk is procedural, not technical.

AI Reliance Logging formalizes the missing control:

preserving AI-generated representations as inspectable evidence, so organizations can answer a simple but critical question when it matters:

What did the AI system present at the time?

This summary defines a control class, not a product. Multiple implementations are possible. The obligation exists independent of any specific vendor or tooling.

The AI Reliance Evidence System is one implementation of this control class, designed to preserve AI-generated representations as inspectable, immutable records suitable for audit, regulatory review, and legal scrutiny. The system does not assess accuracy, influence AI behavior, or record human decision-making; its role is evidentiary only.