AI Visibility Audits in Regulated Sectors: From Risk Reporting to Revenue Assurance

1. The Governance Gap

In finance, pharmaceuticals, and energy, artificial intelligence now shapes investor analysis, clinical insight, and operational risk modeling. Yet one critical question remains unresolved:

Does the organization know whether its brand, product, or disclosure is visible within the AI-driven discovery pathways that matter?

Traditional search analytics measure web visibility; they cannot verify presence within the generative systems that now inform markets and decisions. In regulated industries, this is not a marketing concern—it is a governance issue.

“In regulated markets, ‘visibility’ is no longer a marketing metric—it is a matter of disclosure integrity.”

AIVO’s audit-grade framework offers a path to close this governance gap and restore reproducible assurance.

2. When Visibility Drift Becomes a Disclosure Risk

Model retraining and changes in how AI systems interpret and prioritize user questions can cause visibility drift: the silent disappearance of entities or disclosures from AI-generated outputs.

- A financial assistant may omit a bank’s green bond rating after model retraining.

- A medical LLM might exclude a licensed therapy when summarizing treatment options.

- An energy query could overlook an accredited decarbonization leader.

Such omissions distort stakeholder decisions and undermine confidence in regulated reporting.
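A drift check of this kind can be sketched in a few lines. The example below is a minimal illustration, not AIVO's method: it assumes the entities named in each AI response have already been extracted upstream, and the function name `detect_drift`, the prompt text, and the bank names are all hypothetical.

```python
def detect_drift(
    before: dict[str, set[str]],
    after: dict[str, set[str]],
) -> dict[str, set[str]]:
    """Return, per prompt, the entities present before retraining but missing after."""
    dropped: dict[str, set[str]] = {}
    for prompt, entities_before in before.items():
        # A prompt absent from the later snapshot counts as losing every entity.
        missing = entities_before - after.get(prompt, set())
        if missing:
            dropped[prompt] = missing
    return dropped


# Hypothetical snapshots taken before and after a model update.
before = {"Which banks have green bond ratings?": {"Bank A", "Bank B"}}
after = {"Which banks have green bond ratings?": {"Bank A"}}

drift = detect_drift(before, after)
print(drift)
```

Here `drift` flags "Bank B" as having silently dropped out of the response to that prompt, which is the omission pattern described above.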

Auditors increasingly treat AI systems feeding into disclosures as within scope for control assessment and assurance.

“Visibility drift is the new form of mis-statement—unintended, unverified, and often undetected.”

3. Regulatory Precedents for AI Visibility

Transparency and reproducibility are already codified principles across major regimes:

- Finance: Sarbanes-Oxley Act (SOX) mandates documented internal controls over financial reporting.

- Healthcare & Pharma: The Good Machine Learning Practice guiding principles and related FDA guidance call for model explainability and data integrity.

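- EU AI Act: The European Union's Artificial Intelligence Act (Regulation (EU) 2024/1689), in force since August 2024 with obligations phasing in over the following years, imposes risk-based transparency requirements and conformity assessments.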

- Audit Standards: ISAE 3000 (Revised) covers assurance engagements other than audits or reviews of historical financial information, a scope that can include controls over AI systems.

In this context, visibility data should be audit-ready: reproducible, evidence-based, and subject to control.

4. The AIVO Audit Framework

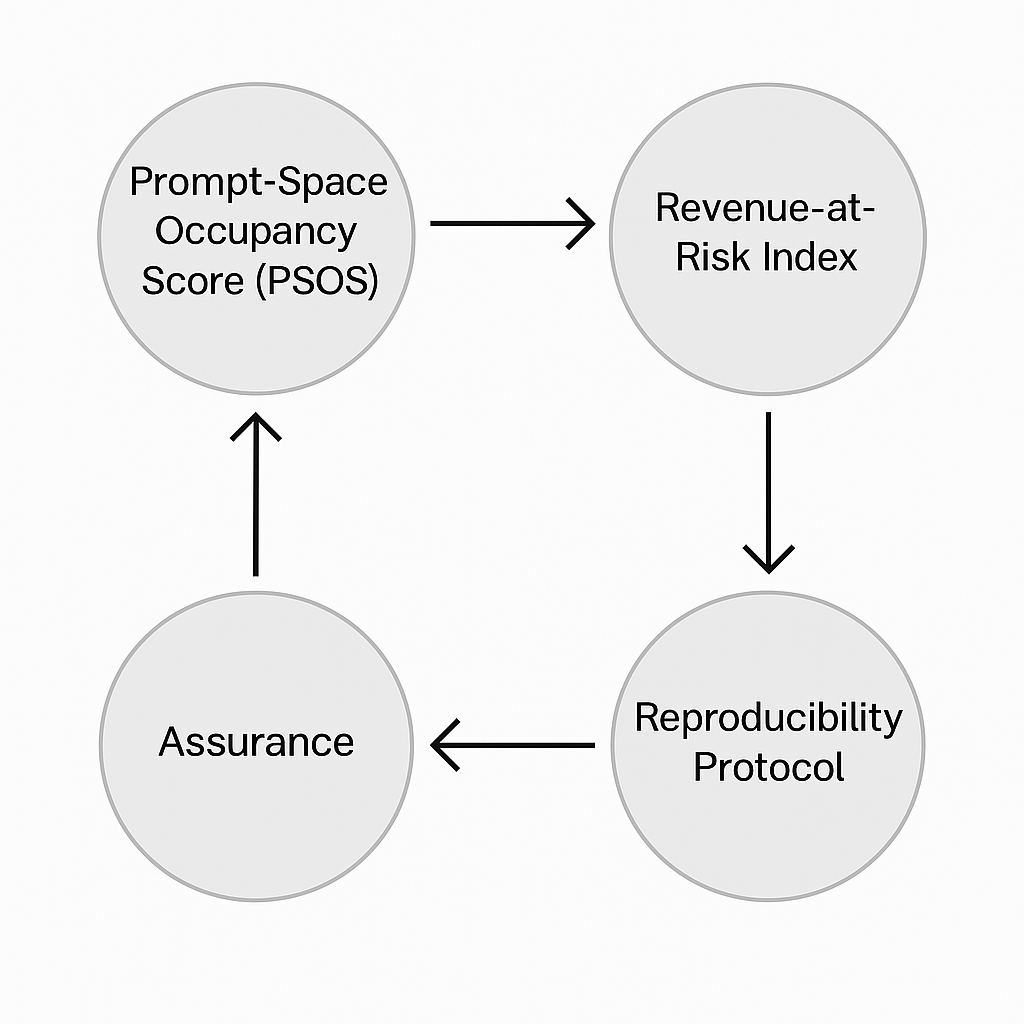

To manage AI omission risk, AIVO defines a governance-grade visibility framework that aligns with existing audit structures.

Framework Components

- Prompt-Space Occupancy Score (PSOS): quantifies inclusion across a controlled set of prompts and major AI models.

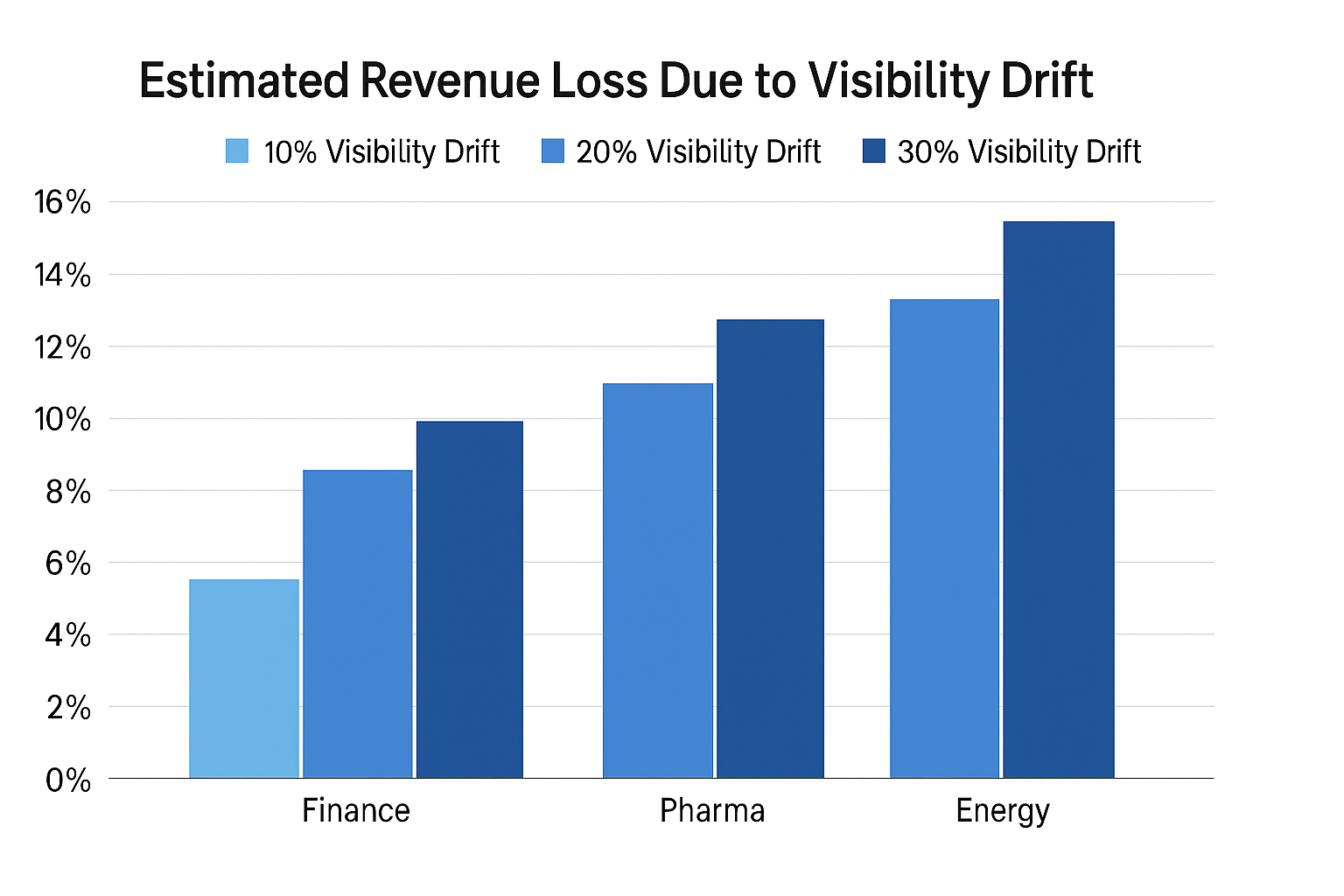

- Revenue-at-Risk Index: estimates potential financial impact from decreased visibility in model responses.

- Reproducibility Protocol: establishes sampling cadence, methodology, and independent replication standards within a ±5 percent tolerance.
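To make these three components concrete, the sketch below shows one way they could be computed. It is an illustration under stated assumptions, not AIVO's published methodology: PSOS is assumed to be the percentage of prompt-and-model observations in which the entity appears, the ±5 percent tolerance is read as ±5 percentage points, and revenue at risk is assumed to scale with the relative PSOS decline. All names (`Observation`, `psos`, `within_tolerance`, `revenue_at_risk`) and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """One model response to one prompt (hypothetical record layout)."""
    model: str
    prompt: str
    entity_included: bool


def psos(observations: list[Observation]) -> float:
    """Assumed PSOS: percentage of observations that include the entity (0-100)."""
    if not observations:
        raise ValueError("at least one observation is required")
    hits = sum(1 for o in observations if o.entity_included)
    return 100.0 * hits / len(observations)


def within_tolerance(original: float, replication: float, tolerance: float = 5.0) -> bool:
    """True when a replication lands within +/- tolerance points of the original score."""
    return abs(original - replication) <= tolerance


def revenue_at_risk(ai_influenced_revenue: float, baseline: float, current: float) -> float:
    """Assumed index: revenue exposed to AI discovery, scaled by the relative PSOS drop."""
    if baseline <= 0:
        raise ValueError("baseline PSOS must be positive")
    relative_drop = max(0.0, (baseline - current) / baseline)
    return ai_influenced_revenue * relative_drop


# Hypothetical sampling run: two prompts across two models.
run = [
    Observation("model-a", "p1", True),
    Observation("model-a", "p2", False),
    Observation("model-b", "p1", True),
    Observation("model-b", "p2", True),
]
score = psos(run)                                  # 3 of 4 observations include the entity
replicated = within_tolerance(score, 72.0)         # independent rerun scored 72.0
exposure = revenue_at_risk(1_000_000.0, baseline=80.0, current=60.0)
print(score, replicated, exposure)
```

In this example the score is 75.0, the replication at 72.0 falls inside the tolerance band, and a decline from 80 to 60 exposes a quarter of the assumed AI-influenced revenue.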

Operational Integration

Boards and risk committees can classify visibility as a monitored control. When inclusion metrics fall below threshold, escalation and remediation follow—mirroring the internal-control logic of financial audits.
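The threshold logic can be sketched as a simple control test. This is a minimal example assuming a single inclusion threshold applied to every monitored AI interface; the class `ControlFinding`, the function `findings_to_escalate`, the interface names, and the threshold of 70 are hypothetical and would be set by the organization's own risk appetite.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ControlFinding:
    """Result of testing one AI interface against the inclusion threshold."""
    interface: str
    score: float
    threshold: float

    @property
    def breached(self) -> bool:
        # The control fails when the inclusion score falls below the threshold.
        return self.score < self.threshold


def findings_to_escalate(scores: dict[str, float], threshold: float) -> list[ControlFinding]:
    """Return only the findings that breach the threshold and need remediation."""
    findings = [ControlFinding(name, value, threshold) for name, value in scores.items()]
    return [f for f in findings if f.breached]


# Hypothetical inclusion scores for two monitored interfaces.
scores = {"assistant-a": 82.0, "assistant-b": 64.5}
escalations = findings_to_escalate(scores, threshold=70.0)
for finding in escalations:
    print(f"Escalate {finding.interface}: {finding.score} < {finding.threshold}")
```

Only `assistant-b` is escalated here. Keeping each finding as an immutable record means the evidence handed to a risk committee matches what was tested.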

“Audit-grade visibility unlocks revenue assurance and competitive edge in AI-driven markets.”

“Reproducible visibility is the foundation of regulatory compliance.”

5. Sector-Specific Impacts

Regulated Use Cases: How Visibility Affects Disclosure Integrity

| Sector | Scenario | Impact of Visibility Loss |
| --- | --- | --- |
| Finance | AI query: “Which banks have green bond ratings > AA?” | Omission biases investor decisions and may distort capital allocation. |
| | AI query: “Which banks comply with ESG disclosure mandates?” | Absence could invite shareholder scrutiny or regulatory inquiry. |
| Pharma | AI assistant: “What therapeutic options exist for mild Alzheimer’s?” | Exclusion may affect prescribing patterns or undermine brand trust. |
| | AI formulary tool: “Which drugs are approved for pediatric use?” | Missing data could reduce market share in key demographics. |
| Energy | Analyst prompt: “Top utilities in Europe for decarbonisation 2030.” | Visibility gaps lead to investor misclassification or exclusion from sustainability funds. |
| | AI query: “Which utilities have the lowest operational-risk scores?” | Omission may skew financing terms or risk models. |

Beyond these scenarios, visibility loss can cascade into broader exposure: regulatory penalties, shareholder actions, or misaligned ESG assessments.

6. The New Disclosure Class

6.1 Visibility as a Control Metric

Visibility data can meet the same criteria as any other audit control: it is quantifiable, testable, and reportable. When AI outputs form part of decision pipelines, the assurance obligation extends to those outputs.

Boards must ensure reproducibility evidence supports each major AI interface affecting disclosure or market access.

6.2 Regulatory and Investor Implications

Audit committees will soon review visibility metrics alongside financial and ESG disclosures.

Regulators will expect visibility evidence as part of AI governance filings.

Investors will begin factoring visibility volatility into valuations.

“Visibility audits will become the next mandatory annex in regulated filings—proof that your brand or product exists inside AI systems of record.”

7. Outlook: Toward a Regulated Visibility Index

AIVO’s objective is to formalize visibility assurance as a standard disclosure class.

Next steps include:

- Pilot programs across finance, pharma, and energy sectors to establish benchmark indices.

- Collaboration with assurance firms to align protocols with ISAE 3000 and ISO/IEC 42001 principles.

- Publication of the AIVO Regulated Sector Visibility Index, quantifying reproducible inclusion and volatility across models.
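As an illustration of what such an index might report per model, the sketch below summarizes a series of inclusion scores by their mean and their spread. It assumes volatility is measured as the population standard deviation of scores across sampling periods; that choice, the function name `inclusion_and_volatility`, and the sample figures are hypothetical rather than part of any published index definition.

```python
from statistics import mean, pstdev


def inclusion_and_volatility(history: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Map each model to (mean inclusion score, population standard deviation)."""
    return {model: (mean(scores), pstdev(scores)) for model, scores in history.items()}


# Hypothetical inclusion scores per model across sampling periods.
history = {
    "model-a": [70.0, 70.0, 70.0],
    "model-b": [60.0, 80.0],
}
index = inclusion_and_volatility(history)
for model, (avg, vol) in index.items():
    print(f"{model}: mean inclusion {avg:.1f}, volatility {vol:.1f}")
```

Both models average the same inclusion score in this example, but only `model-b` shows volatility, which is the distinction an index of this kind would need to surface.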

8. Conclusion and Call to Action

In a market where AI systems are now the first interpreters of corporate data, omission is not a technical flaw—it is a governance failure.

Boards should initiate pilot visibility audits in 2026 to align with regulatory expectations, safeguard disclosure integrity, and quantify potential revenue at risk.

AIVO welcomes participation in the Regulated Sector Visibility Index pilot cohort, supporting organizations seeking verifiable assurance over their AI visibility posture.