AI Visibility Governance for CTOs and Heads of Data: The Full Operating Model

Most enterprises still treat AI visibility as a marketing or search-adjacent concern. The technical reality is different. Assistants now influence supplier assessments, competitive analysis, customer journeys, and even internal decision chains. These systems are non-deterministic by design, change without notice, and produce outputs that most organisations do not log, monitor, or verify.

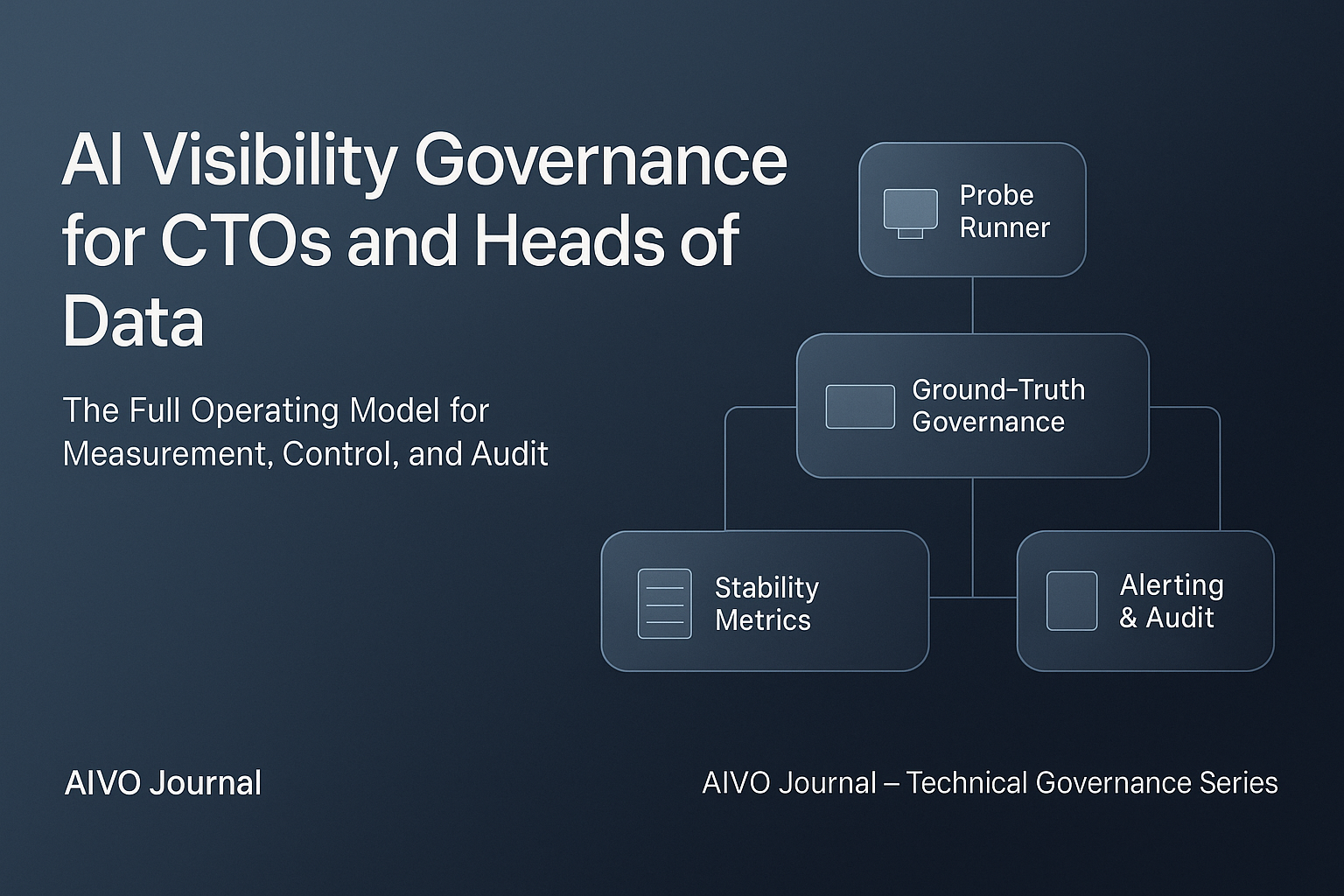

Boards have begun asking whether these outputs are under control. Technical leaders cannot answer that question without a measurement and control layer. This article defines the operating model that allows engineering organisations to build one.

The objective is not compliance theatre or defensive positioning. It is operational reliability for a new class of externalised reasoning systems that influence decisions far earlier and more often than most enterprises realise.

1. Define the evidence object before building controls

All governance collapses without a rigorous definition of what counts as evidence.

A visibility evidence object must include:

- Prompt text

- Assistant and model used

- Model version or release identifier where available

- Provider region and routing metadata

- Execution parameters that affect output variation, such as temperature, top-p, seed, and maximum output tokens

- Complete response text

- Retrieval citations or source metadata

- Timestamps for request and response

- Integrity markers such as hashes or signatures

Without this schema, alerts cannot be trusted, metrics cannot be compared, and audit packs cannot be defended. Every downstream control depends on this definition.

Enterprises that fail to formalise the evidence object will drift into screenshot governance, which is neither reproducible nor admissible for internal or external review.
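The schema above can be sketched as a Python dataclass. This is a minimal illustration, assuming hypothetical field names (they are not a published standard). The integrity marker here is a SHA-256 hash over a canonical JSON serialisation; a signature over the same bytes could be layered on top.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class EvidenceObject:
    """One captured assistant response; field names are hypothetical."""
    prompt_text: str
    assistant: str
    model: str
    model_version: Optional[str]   # None when the provider exposes no release id
    provider_region: Optional[str]
    routing_metadata: dict
    execution_params: dict         # e.g. {"temperature": 0.0, "seed": 7}
    response_text: str
    citations: list                # retrieval citations or source metadata
    requested_at: str              # ISO 8601 UTC timestamps
    responded_at: str

    def canonical_json(self) -> str:
        # Sorted keys and fixed separators make the serialisation byte-stable,
        # so identical evidence always yields an identical hash.
        return json.dumps(asdict(self), sort_keys=True,
                          separators=(",", ":"), ensure_ascii=False)

    def content_hash(self) -> str:
        # SHA-256 integrity marker over the canonical form.
        return hashlib.sha256(self.canonical_json().encode("utf-8")).hexdigest()
```

Storing `content_hash()` next to each object in append-only storage lets a later audit detect any edit to the captured evidence.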

2. Metrics that engineering must own

Visibility governance hinges on measurable, repeatable signals rather than interpretive judgments. The following metrics form a workable backbone:

Stable Response Index

Consistency of outputs across controlled repetitions. This is the fundamental signal of whether the model behaves predictably for the organisation’s critical domains.
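One way to operationalise this index, sketched under the assumption that "consistency" means mean pairwise text similarity across repetitions of the same prompt. The similarity measure here is `difflib.SequenceMatcher.ratio()`, a character-level stand-in; embedding or claim-level comparison may suit production better.

```python
from difflib import SequenceMatcher
from itertools import combinations
from statistics import mean


def stable_response_index(responses: list[str]) -> float:
    """Mean pairwise similarity (0..1) across repeated responses to one prompt.

    1.0 means every repetition was textually identical; lower values mean
    the assistant answered the same prompt differently across runs.
    """
    if len(responses) < 2:
        raise ValueError("need at least two repetitions to measure stability")
    return mean(SequenceMatcher(None, a, b).ratio()
                for a, b in combinations(responses, 2))
```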

Hallucination Rate

Frequency with which responses contradict validated ground truth. This includes fabricated features, capabilities, pricing, claims, or regulatory statements.
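A minimal sketch of the calculation, assuming claims have already been extracted from responses as hypothetical `(attribute, stated_value)` pairs (the extraction step, by rules or a separate model, is not shown). Claims about attributes that ground truth does not cover are treated as uncheckable rather than counted as correct.

```python
from typing import Iterable, Optional


def hallucination_rate(claims: Iterable[tuple[str, str]],
                       ground_truth: dict[str, str]) -> Optional[float]:
    """Share of checkable claims that contradict validated ground truth.

    Returns None when no claim could be checked, so an empty result is not
    mistaken for a clean one.
    """
    checked = contradicted = 0
    for attribute, stated in claims:
        if attribute not in ground_truth:
            continue  # uncheckable: no validated value for this attribute
        checked += 1
        if stated.strip().lower() != ground_truth[attribute].strip().lower():
            contradicted += 1
    return contradicted / checked if checked else None
```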

Source Adherence Score

Degree to which responses align with approved input sources or documentation. This is essential in regulated domains where specific guidance must anchor responses.
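Where an assistant exposes retrieval citations, a simple proxy is the share of cited URLs that resolve to approved domains. This is a sketch under that assumption; the approved-domain list is hypothetical, and responses without citations return `None` because the citation trail cannot establish adherence on its own.

```python
from typing import Optional
from urllib.parse import urlparse


def source_adherence_score(cited_urls: list[str],
                           approved_domains: set[str]) -> Optional[float]:
    """Share of citations whose host is an approved domain or one of its subdomains."""
    if not cited_urls:
        return None
    approved = {d.lower() for d in approved_domains}
    hits = 0
    for url in cited_urls:
        host = urlparse(url).hostname or ""  # hostname is already lower-cased
        if any(host == d or host.endswith("." + d) for d in approved):
            hits += 1
    return hits / len(cited_urls)
```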

Drift Velocity

Rate at which answers deviate from prior baselines. This measures interpretive instability, not factual error.
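A sketch of one possible definition, assuming drift for a prompt is `1 - similarity(baseline answer, current answer)` and velocity is mean drift divided by days since the baseline. Both the similarity measure (`difflib`) and the per-day normalisation are illustrative choices, not a fixed standard.

```python
from difflib import SequenceMatcher
from statistics import mean


def drift_velocity(baseline: dict[str, str], current: dict[str, str],
                   days_elapsed: float) -> float:
    """Mean per-prompt drift from baseline, expressed per day.

    Both snapshots map prompt id -> answer text; only prompts present in
    both are compared.
    """
    if days_elapsed <= 0:
        raise ValueError("days_elapsed must be positive")
    shared = baseline.keys() & current.keys()
    if not shared:
        raise ValueError("no prompts in common between snapshots")
    drift = mean(1 - SequenceMatcher(None, baseline[p], current[p]).ratio()
                 for p in shared)
    return drift / days_elapsed
```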

ASOS Profile

Distribution of competitor presence within the same answer space. Sudden shifts signal substitution risk in procurement and comparison workflows.
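A minimal sketch, assuming the profile is each competitor's share of presence across a probe's answer set, and that a "sudden shift" is measured as total variation distance between two profiles. Competitor names are matched with word-boundary regexes, which will miss names that start or end with punctuation (for example "C++").

```python
import re
from collections import Counter


def asos_profile(responses: list[str], competitors: list[str]) -> dict[str, float]:
    """Share of competitor presence across responses (shares sum to 1.0 if any appear)."""
    counts: Counter = Counter()
    for text in responses:
        for name in competitors:
            # Count presence once per response, not repeated mentions.
            if re.search(r"\b" + re.escape(name) + r"\b", text, flags=re.IGNORECASE):
                counts[name] += 1
    total = sum(counts.values())
    return {name: (counts[name] / total if total else 0.0) for name in competitors}


def profile_shift(before: dict[str, float], after: dict[str, float]) -> float:
    """Total variation distance: 0.0 = identical profiles, 1.0 = disjoint."""
    names = before.keys() | after.keys()
    return 0.5 * sum(abs(before.get(n, 0.0) - after.get(n, 0.0)) for n in names)
```

Alerting on `profile_shift` against the previous audit's profile turns "sudden shifts" into a threshold that can be tuned per domain.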

These metrics are engineering signals, not marketing indicators. Engineering teams must collect, calculate, and alert on them with the same operational discipline used for reliability and security metrics.

3. Ground truth governance: the missing pillar

Many enterprises underestimate how difficult it is to define, maintain, and version “truth.” Without a formal ground truth governance process, visibility metrics are unworkable.

A viable model includes:

Ownership

Data Governance defines the schema. Product and Legal maintain the content. Engineering enforces version control.

Format

A structured repository of validated claims, features, constraints, and canonical descriptions. JSON, YAML, or a dedicated knowledge object can work.

Update cadence

Triggered by product changes, policy changes, regulatory updates, or newly detected inaccuracies. A scheduled monthly review, with incremental updates in between, is typical.

Versioning and change control

Every change must be timestamped, reviewed, and stored immutably. Evidence packs must reference the version used at time of audit.
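A sketch of content-addressed versioning, assuming ground truth is held as a JSON-serialisable mapping of claims. The version id is a SHA-256 of the canonical JSON, so evidence packs can cite exactly which truth they were scored against. The `log` list is a hypothetical stand-in for append-only (write-once) storage, and the author/reviewer check is one simple way to enforce review.

```python
import hashlib
import json
from datetime import datetime, timezone


def snapshot_ground_truth(claims: dict, log: list, author: str, reviewer: str) -> str:
    """Append a timestamped, reviewed, content-addressed version to `log`."""
    if reviewer == author:
        raise ValueError("changes need an independent reviewer")
    canonical = json.dumps(claims, sort_keys=True, separators=(",", ":"))
    version_id = hashlib.sha256(canonical.encode("utf-8")).hexdigest()
    log.append({
        "version_id": version_id,
        "created_at": datetime.now(timezone.utc).isoformat(),
        "author": author,
        "reviewer": reviewer,
        "content": canonical,
    })
    return version_id
```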

Conflict resolution

When Product, Legal, or Marketing disagree on a claim, visibility governance defaults to the most conservative validated position.

Ground truth drift is as dangerous as model drift. If the organisation’s internal truth is unstable, measurement becomes meaningless.

4. Ownership across the technical organisation

Visibility governance fails when no one owns it. It also fails when ownership is too centralised.

A workable model distributes responsibility:

- Platform or ML Engineering: instrumentation, probe scheduling, evidence capture

- Data Governance: ground truth, validation rules, version control

- SRE or Security: alerting thresholds, integrity guarantees

- Product: mapping critical journeys, identifying high-risk domains

- Compliance: aligning severity tiers with regulatory obligations

This mirrors how security, privacy, and reliability controls already function. Visibility should follow the same operational pattern.

5. Controls that integrate into production systems

Visibility governance only works when engineered into existing observability and release processes.

Automated probes

A probe runner should:

- Execute domain-specific prompt sets

- Query multiple assistants

- Capture complete evidence objects

- Hash and store outputs immutably

- Calculate stability and drift metrics

- Trigger alerts when thresholds are crossed

This is synthetic monitoring for AI behaviour. Manual testing cannot produce reliable governance.
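The probe runner responsibilities listed above can be sketched in one loop. This assumes a hypothetical client interface (a callable that takes a prompt and returns `{"text": ..., "model": ...}`); real provider SDKs differ, and scheduling, retries, and durable storage are left out.

```python
import hashlib
import json
from datetime import datetime, timezone
from difflib import SequenceMatcher
from itertools import combinations
from statistics import mean
from typing import Callable

# Hypothetical client signature: prompt -> {"text": ..., "model": ...}
AssistantClient = Callable[[str], dict]


def run_probe(prompt: str, assistants: dict[str, AssistantClient], repetitions: int,
              store: list, stability_threshold: float) -> list[str]:
    """Run one prompt against several assistants, store hashed evidence, return alerts."""
    alerts = []
    for name, client in assistants.items():
        texts = []
        for _ in range(repetitions):
            requested_at = datetime.now(timezone.utc).isoformat()
            reply = client(prompt)
            record = {
                "prompt_text": prompt,
                "assistant": name,
                "model": reply.get("model"),
                "response_text": reply["text"],
                "requested_at": requested_at,
                "responded_at": datetime.now(timezone.utc).isoformat(),
            }
            canonical = json.dumps(record, sort_keys=True)
            # Hash at capture time so later tampering is detectable.
            store.append({"sha256": hashlib.sha256(canonical.encode()).hexdigest(),
                          "evidence": canonical})
            texts.append(reply["text"])
        if len(texts) > 1:
            # Stability = mean pairwise similarity across repetitions.
            score = mean(SequenceMatcher(None, a, b).ratio()
                         for a, b in combinations(texts, 2))
            if score < stability_threshold:
                alerts.append(f"{name}: stability {score:.2f} below {stability_threshold}")
    return alerts
```

In a test harness, stub clients that return fixed or alternating answers are enough to exercise the alert path without calling any real assistant.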

Probe configuration

A functional configuration looks like this:

| Tier | Prompts | Repetitions | Frequency | Assistants |
|---|---|---|---|---|
| Critical | 50 | 10 | Daily | All major |
| High | 100 | 5 | Weekly | Top 3 |
| Medium | 200 | 3 | Bi-weekly | Top 2 |
| Low | 300 | 1 | Monthly | Primary |

The exact numbers will vary by industry and risk appetite, but the tiered structure holds.
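The table translates directly into call volume: prompts × repetitions × assistants × runs per period. The sketch below assumes "All major" means 5 assistants and reads "Bi-weekly" as every two weeks; both are illustrative assumptions.

```python
from dataclasses import dataclass

# Runs per year per frequency label. "Bi-weekly" is read here as every
# two weeks (an assumption; it can also mean twice a week).
RUNS_PER_YEAR = {"Daily": 365, "Weekly": 52, "Bi-weekly": 26, "Monthly": 12}


@dataclass(frozen=True)
class ProbeTier:
    name: str
    prompts: int
    repetitions: int
    frequency: str
    assistants: int  # assumed count behind labels such as "All major" or "Top 3"

    def annual_calls(self) -> int:
        return (self.prompts * self.repetitions * self.assistants
                * RUNS_PER_YEAR[self.frequency])


TIERS = [
    ProbeTier("Critical", 50, 10, "Daily", 5),  # "All major" assumed to be 5
    ProbeTier("High", 100, 5, "Weekly", 3),
    ProbeTier("Medium", 200, 3, "Bi-weekly", 2),
    ProbeTier("Low", 300, 1, "Monthly", 1),
]

if __name__ == "__main__":
    for t in TIERS:
        print(f"{t.name:<8} {t.annual_calls() / 12:>10,.0f} calls/month")
    total = sum(t.annual_calls() for t in TIERS)
    print(f"{'Total':<8} {total / 12:>10,.0f} calls/month")
```

Under these assumptions the configuration comes to roughly 85,000 calls per month, and the Critical tier alone accounts for about 89% of them, so daily repetition counts are the first lever to adjust when budgets are tight.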

Probe cost modelling

A typical enterprise will face:

- Tens of thousands of API calls per month

- Storage for hundreds of thousands of evidence objects

- Engineering time for platform development

- Governance overhead for ground truth maintenance

Approximate envelopes:

| Scale | Domains | Prompts/Month | API Cost | Storage | Engineering time |
|---|---|---|---|---|---|
| Small | 3–5 | 1K–2K | $500–2K | 10 GB | 2–3 months |
| Medium | 10–15 | 5K–10K | $2K–8K | 50 GB | 4–6 months |
| Large | 25+ | 20K+ | $10K–30K | 200 GB | 8–12 months |

Enterprises must understand these costs before committing to full adoption. Partial implementations are possible but leave coverage gaps.

6. Vendor constraints and external model risk

Enterprises depend on external vendors whose models change without notice. Visibility governance must acknowledge this.

Key constraints:

- Rate limits restrict probe frequency

- Terms of service may forbid large-scale automated queries without explicit approval

- Models update silently

- Retrieval behaviour is undocumented

- API pricing is volatile

- Vendors provide no stability guarantees

- Regional routing can change answer profiles

This means:

- Enterprises must negotiate enterprise agreements that explicitly permit automated probing at the required volume

- Probe infrastructure must monitor vendor behaviour, not just model outputs

- Vendor risk becomes part of AI visibility governance

The absence of vendor controls is itself a governance finding.
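Monitoring vendor behaviour can start with comparing consecutive probe runs. This sketch assumes each run records hypothetical `model`, `region`, and `similarity` (similarity to baseline) fields; it flags reported metadata changes and, because models can update silently, also flags a sharp similarity drop that arrives with no metadata change. The 0.15 drop threshold is an arbitrary example.

```python
def detect_vendor_changes(runs: list[dict], similarity_drop: float = 0.15) -> list[str]:
    """Compare consecutive probe runs and describe suspected vendor-side changes."""
    findings = []
    for prev, curr in zip(runs, runs[1:]):
        changed = [k for k in ("model", "region") if prev.get(k) != curr.get(k)]
        for key in changed:
            findings.append(f"{curr['run_id']}: {key} changed "
                            f"{prev.get(key)!r} -> {curr.get(key)!r}")
        drop = prev["similarity"] - curr["similarity"]
        if drop > similarity_drop and not changed:
            # Behaviour moved but the vendor reported nothing: treat as a
            # possible silent update worth investigating.
            findings.append(f"{curr['run_id']}: similarity fell {drop:.2f} "
                            "with no metadata change (possible silent update)")
    return findings
```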

7. Rollout gating: how visibility becomes a release control

Model updates, routing changes, feature launches, and assistant integrations must be gated. A viable gating workflow:

- Execute stability regression suite

- Execute hallucination and source adherence tests

- Compare the ASOS profile against the prior audit baseline

- Generate evidence pack

- Approve or block rollout

Emergency overrides should be rare and logged. Without gating, internal changes erode visibility control just as surely as external AI updates do.
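The gating decision itself can be a small pure function. This sketch assumes hypothetical metric names and threshold values; a missing measurement blocks the rollout rather than passing silently, and an override requires a named approver and a written reason, both recorded in an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical limits: "min" metrics must stay at or above, "max" at or below.
THRESHOLDS = {
    "stable_response_index": ("min", 0.85),
    "hallucination_rate": ("max", 0.02),
    "source_adherence": ("min", 0.90),
    "asos_shift": ("max", 0.10),
}


@dataclass
class GateResult:
    approved: bool
    reasons: list = field(default_factory=list)
    override: Optional[dict] = None


def evaluate_gate(metrics: dict[str, float], override_by: Optional[str] = None,
                  override_reason: Optional[str] = None,
                  audit_log: Optional[list] = None) -> GateResult:
    reasons = []
    for name, (kind, limit) in THRESHOLDS.items():
        if name not in metrics:
            reasons.append(f"{name}: missing measurement")
            continue
        value = metrics[name]
        if (kind == "min" and value < limit) or (kind == "max" and value > limit):
            reasons.append(f"{name}={value} breaches {kind} {limit}")
    result = GateResult(approved=not reasons, reasons=reasons)
    if reasons and override_by:
        if not override_reason:
            raise ValueError("an emergency override needs a written reason")
        result.approved = True
        result.override = {"by": override_by, "reason": override_reason,
                           "at": datetime.now(timezone.utc).isoformat()}
    if audit_log is not None:
        audit_log.append(result)  # every decision, including overrides, is kept
    return result
```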

8. Failure modes and how to mitigate them

Visibility systems have predictable weaknesses.

Infrastructure failure

Probe runner outages or misconfigurations create blind spots.

Mitigation: redundancy, health checks, alerting on probe failure.
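Alerting on probe failure can be as simple as a staleness check: a tier is flagged when its last successful run is older than its expected interval times a grace factor. The intervals below mirror the probe configuration table, assuming "Bi-weekly" means every two weeks and "Monthly" means 30 days; the 1.5 grace factor is an arbitrary example.

```python
from datetime import datetime, timedelta, timezone

EXPECTED_INTERVAL = {
    "Critical": timedelta(days=1),
    "High": timedelta(weeks=1),
    "Medium": timedelta(weeks=2),
    "Low": timedelta(days=30),
}


def stale_tiers(last_success: dict[str, datetime], now: datetime,
                grace: float = 1.5) -> list[str]:
    """Tiers with no successful run within grace x their expected interval."""
    stale = []
    for tier, interval in EXPECTED_INTERVAL.items():
        last = last_success.get(tier)
        # A tier that has never succeeded is a blind spot too.
        if last is None or now - last > interval * grace:
            stale.append(tier)
    return stale
```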

Ground truth staleness

If ground truth is outdated, hallucination metrics become invalid.

Mitigation: versioned updates triggered by product and legal changes.

Alert fatigue

Excess drift alerts lead to ignored signals.

Mitigation: tiered severity levels and domain classification.
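Tiered severity can be expressed as a routing table keyed by domain tier and drift band, so only large drift in critical domains pages anyone. The noise floor, band boundary, and routes below are hypothetical values to tune, not recommendations.

```python
from typing import Optional

# (domain tier, drift band) -> route; None means record but do not alert.
ROUTING = {
    ("Critical", "major"): "page",
    ("Critical", "minor"): "ticket",
    ("High", "major"): "ticket",
    ("High", "minor"): "digest",
    ("Medium", "major"): "digest",
    ("Medium", "minor"): "digest",
    ("Low", "major"): "digest",
    ("Low", "minor"): None,
}


def route_drift_alert(domain_tier: str, drift: float,
                      noise_floor: float = 0.05, major: float = 0.15) -> Optional[str]:
    """Map a drift measurement to an alert route, suppressing noise."""
    if drift < noise_floor:
        return None  # below the noise floor: no alert at any tier
    band = "major" if drift >= major else "minor"
    return ROUTING[(domain_tier, band)]
```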

Vendor shifts

Unannounced model updates change behaviour overnight.

Mitigation: daily probes on critical domains.

Internal model changes

Organisations deploying their own RAG pipelines or LLMs can introduce visibility regressions themselves.

Mitigation: extend the same governance controls to internal models.

A failure mode analysis must be part of the audit cycle.

9. Maturity roadmap: implementing this across 12 months

Visibility governance cannot launch in a single sprint.

Phase 1: Foundation (Months 1–3)

- Define evidence object schema

- Build basic probe runner

- Implement ground truth repository

- Produce first evidence packs

Phase 2: Automation (Months 4–6)

- Integrate with observability stack

- Formalise thresholds

- Implement alerting pathways

- Expand probe coverage

Phase 3: Scaling (Months 7–9)

- Rollout gating for updates

- High-volume evidence storage

- Weekly internal audits

Phase 4: Maturity (Months 10–12)

- Full domain coverage

- Vendor monitoring

- Board-level reporting

- Formal incident taxonomy

This creates a predictable path from initial pilot to enterprise governance.

10. Integration with existing governance frameworks

Visibility governance does not replace established frameworks. It extends them.

ISO/IEC 42001 (AI management systems)

Visibility controls fit under its monitoring, traceability, and evidence management requirements.

NIST AI RMF

Visibility metrics map to the Govern, Measure, and Manage functions.

SOC 2

Evidence packs and gating strengthen controls mapped to the Availability, Confidentiality, and Processing Integrity trust services criteria.

Internal AI governance committees

Visibility becomes a quantifiable pillar rather than a policy-driven abstraction.

Closing

Enterprises cannot govern what they cannot measure, and they cannot measure what they do not define or instrument. AI assistants mediate discovery, comparison, and evaluation processes that influence decisions across the organisation. Without engineering-driven visibility controls, these systems behave in ways the enterprise cannot observe, anticipate, or audit.

This operating model is the basis for a sustainable, defensible approach to AI visibility governance. It gives technical leaders the tools to stabilise a decision environment that is becoming more influential and less predictable than any prior discovery surface.

If adopted broadly, it will move visibility from an emergent risk into a managed operational discipline.