Crisis Response Without a Record Is Not Crisis Management

Why AI-mediated narratives break the playbook Corporate Affairs relies on

The assumption baked into every crisis playbook

Crisis communications frameworks vary by industry and culture, but they share a foundational assumption: the triggering representation can be reconstructed.

When an issue escalates, teams ask four procedural questions before debating tone or response:

- What was said?

- By whom?

- When?

- To whom?

Press articles can be retrieved. Broadcast clips replayed. Social posts archived. Even rumors can usually be traced to a source.

AI-mediated narratives break this assumption.

When an AI assistant generates an explanation about a company, that explanation may influence perception without leaving a recoverable artifact. By the time Corporate Affairs is engaged, the representation that triggered concern may no longer exist in the same form, or at all.

Why this is not a tooling gap

It is tempting to treat this as a monitoring problem. That instinct misdiagnoses the failure mode.

Most crisis tooling is optimized for persistent surfaces:

- Media monitoring assumes publication

- Social listening assumes posts

- Reputation tracking assumes volume and sentiment

AI answers violate these assumptions. They are generated on demand, sensitive to prompt phrasing, dependent on model state, and often invisible after delivery.

This ephemerality enables speed and adaptability in benign contexts. In crisis contexts, where shared reference points are non-negotiable, it weaponizes uncertainty.

There is nothing to monitor retroactively unless capture was intentional and contemporaneous.

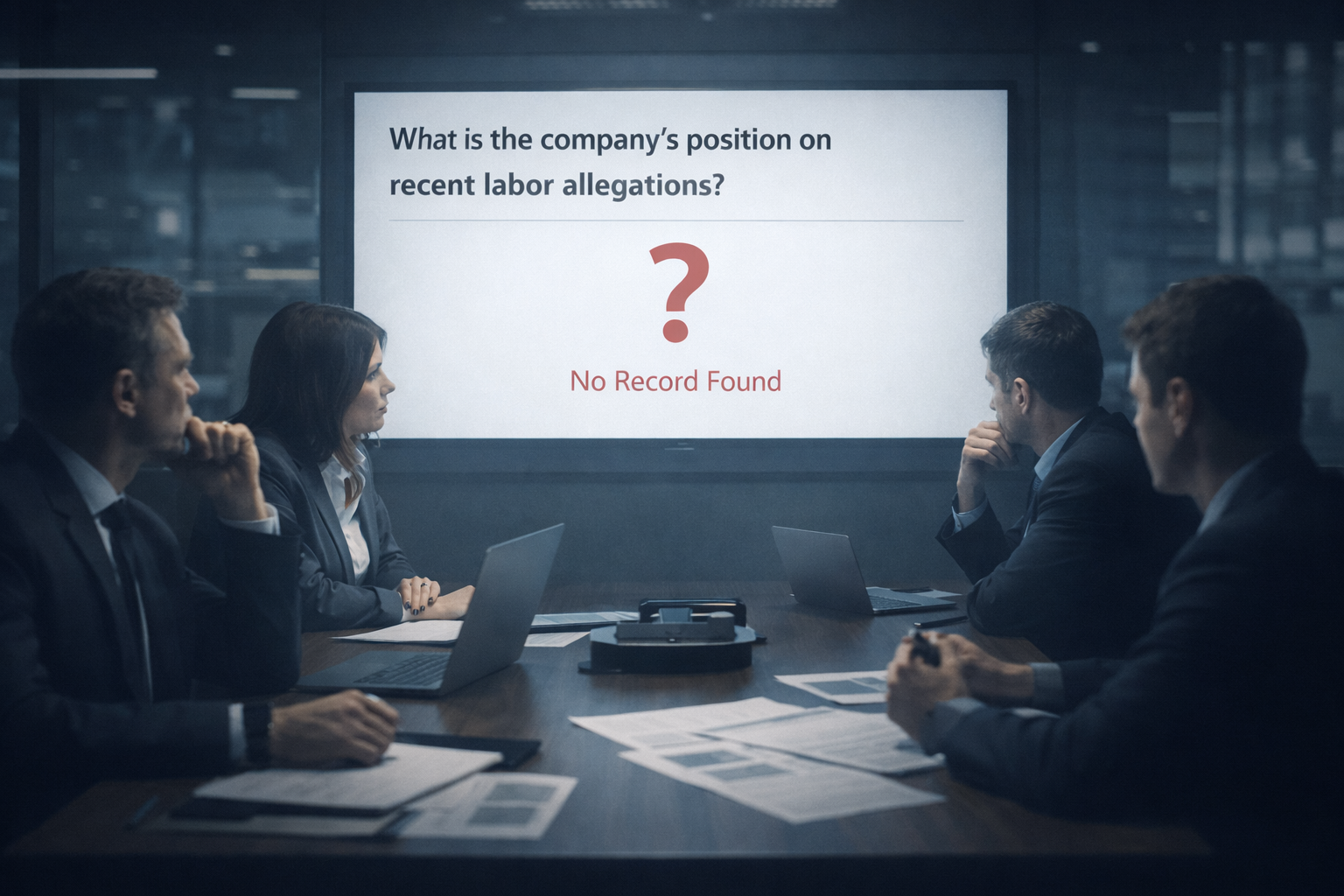

A familiar scenario with a new failure mode

Consider a realistic escalation.

A board member flags a concern: an AI assistant described the company’s restructuring as “cost-driven downsizing amid weakening demand.” The phrasing feels off. Corporate Affairs is asked to assess and respond.

The team attempts to recreate the answer:

- Different prompts yield different summaries

- Some emphasize strategic realignment

- Others foreground layoffs

- None match exactly what the board member recalls

The conversation stalls.

Is this normal model variation?

Is it a reputational issue?

Does it warrant correction or escalation?

Without a record, these questions cannot be resolved procedurally. Judgment fills the gap left by evidence.

Why accuracy does not save you here

Even if the original AI answer was factually defensible, the crisis dynamic remains unchanged.

Crisis management depends on shared reference points. Accuracy matters only after the triggering representation is known. Without that reference, teams cannot assess severity, intent, or impact.

This logic has already appeared in practice. In 2024, a Canadian tribunal ruled against Air Canada after its customer-facing chatbot provided incorrect information about bereavement fares. The airline argued that the chatbot should be treated as a separate entity responsible for its own statements. The tribunal rejected that framing and ordered compensation, not because the error was unprecedented, but because the representation could not be disowned once a customer had relied on it.

The problem is more acute for third-party AI systems. Enterprises neither control those assistants nor retain logs of what they say. In a crisis, teams may not even be able to confirm whether a problematic representation occurred.

Similar dynamics have surfaced in more recent airline disputes involving WestJet, where chatbot interactions escalated publicly because no authoritative record existed to settle what was promised or presented.

The credibility cost of operating blind

When Corporate Affairs teams cannot establish what an AI system said, several downstream effects follow:

- Responses become defensive or vague

- Internal stakeholders lose confidence in comms assessments

- Escalations are delayed or avoided due to uncertainty

- External engagement shifts from explanation to damage control

Over time, this erodes trust in the function itself. Not because teams lack skill, but because they are being asked to operate without evidence in situations that demand precision.

This is not a skills problem. It is a structural one.

What crisis readiness looks like in an AI-mediated environment

Crisis readiness in this context does not require new messaging frameworks or faster approvals. It requires a minimal but critical capability: the ability to reconstruct externally visible AI-generated representations after the fact.

That capability restores the logic of the playbook:

- Normal variation can be identified as such

- Genuine reframing or omission becomes provable

- Escalation decisions regain procedural grounding

This is not about predicting crises. It is about being able to answer the first question every crisis demands.
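As a rough illustration of how "normal variation" might be separated from a genuine outlier once a record exists, the sketch below compares a recorded answer against fresh re-runs of the same prompt. It is a minimal sketch under stated assumptions: the function names and the 0.6 threshold are hypothetical, and word-level overlap is only a crude proxy for framing, so a real review would still need human judgment.

```python
from difflib import SequenceMatcher


def similarity(a: str, b: str) -> float:
    """Return a 0..1 ratio of word-level overlap between two answers."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def classify(recorded: str, reruns: list[str], threshold: float = 0.6) -> str:
    """Label a recorded answer relative to re-runs of the same prompt.

    The threshold is an illustrative assumption, not a calibrated value.
    """
    if not reruns:
        return "no baseline"
    # Compare against the closest re-run: if any re-run resembles the
    # recorded answer, it is plausibly ordinary model variation.
    best = max(similarity(recorded, rerun) for rerun in reruns)
    return "within normal variation" if best >= threshold else "outlier, review"
```

The point of the sketch is that the comparison only becomes possible when the recorded answer exists; without it, there is nothing to pass as the first argument.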

Where AIVO fits, and where it deliberately does not

AIVO does not intervene in crisis response. It does not advise on tone, messaging, or strategy.

Its role is narrower and prior.

AIVO preserves time-stamped, reproducible records of what AI systems publicly presented in response to defined prompts. Those records exist independently of interpretation or outcome.

In crisis contexts, that independence matters. It allows Corporate Affairs teams to answer the four foundational questions before judgment or response begins.

Without that step, crisis response becomes improvisation under uncertainty.
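To make the idea of a time-stamped, verifiable record concrete, here is a minimal sketch of what one captured entry could look like. It is not a description of how AIVO works internally: the `CaptureRecord` fields, the `capture` helper, and the choice of SHA-256 are all assumptions made for illustration. The fields map onto the four foundational questions (what, by whom, when, to whom).

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class CaptureRecord:
    """One hypothetical captured AI answer."""
    prompt: str       # the defined prompt that was asked
    assistant: str    # by whom: which AI system answered
    audience: str     # to whom: the context the answer was shown in
    response: str     # what was said, verbatim
    captured_at: str  # when: ISO 8601 timestamp in UTC

    def digest(self) -> str:
        """Return a SHA-256 hash of the record so later edits are detectable."""
        payload = json.dumps(asdict(self), sort_keys=True, ensure_ascii=False)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def capture(prompt, assistant, audience, response, now=None):
    """Build a record at capture time; `now` can be injected for testing."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    return CaptureRecord(prompt, assistant, audience, response, timestamp)
```

Storing the digest alongside the record lets a reviewer confirm, at the moment of scrutiny, that the stored answer has not been altered since capture.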

The implication for Corporate Affairs leaders

AI assistants are now part of the reputational environment, whether organizations acknowledge them or not. They shape how issues are framed before Corporate Affairs is even aware a question has been asked.

Crisis playbooks that assume reconstructible triggers are no longer sufficient.

The new threshold question is procedural and binary:

Can we establish what the AI system actually showed?

If the answer is no, the organization is not crisis-ready, regardless of how polished its messaging may be.

This is not about managing narratives.

It is about restoring the precondition crisis management has always depended on: a shared record of what happened.

If AI systems are shaping how your organization is explained, the first governance question is not what should be said next, but what was already said.

AIVO exists to make AI-generated representations observable, time-stamped, and reconstructible when scrutiny arises.
