GEO Optimization and Evidentiary Contamination

A Governance Risk for Regulated Finance

AIVO Journal

Executive summary

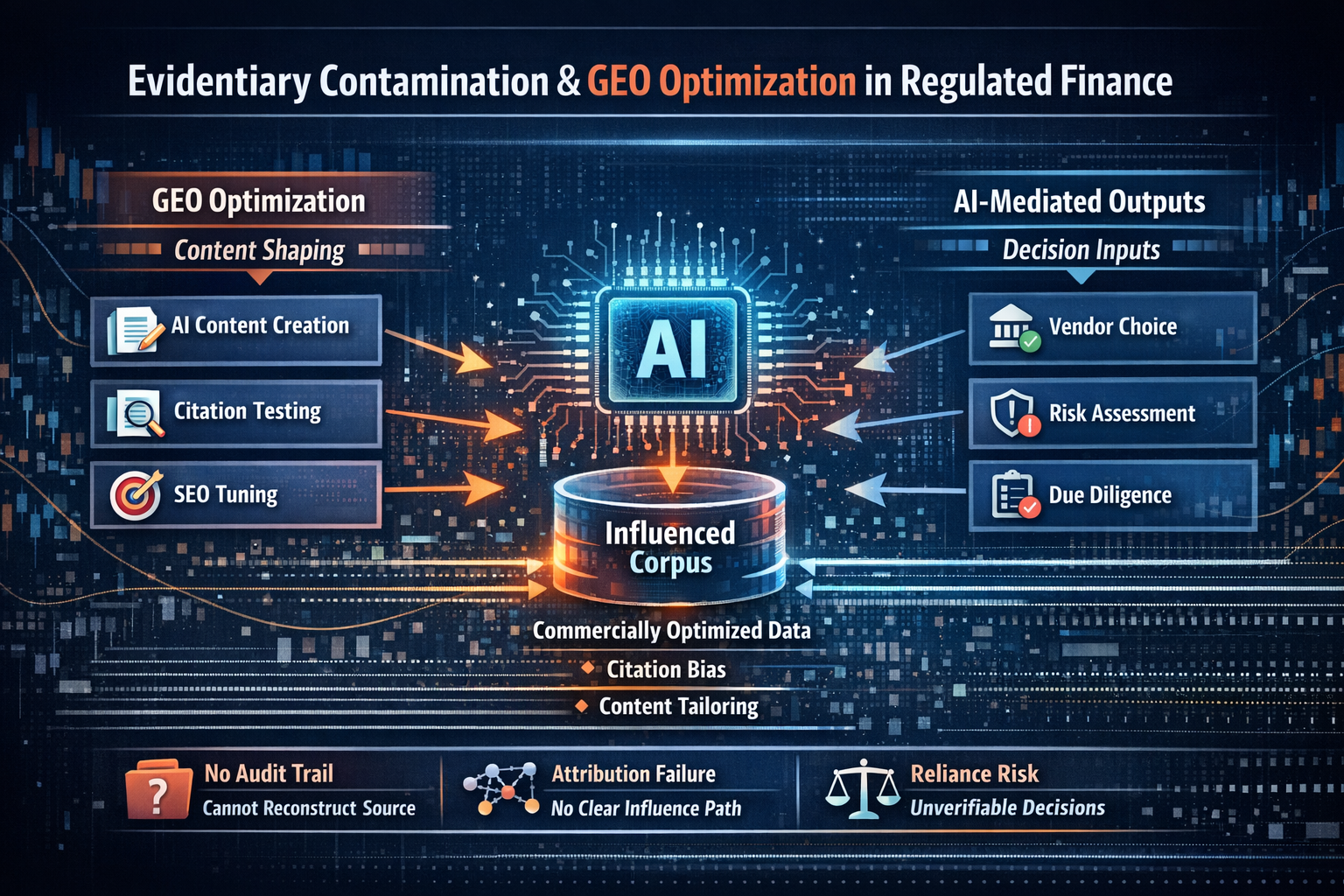

Generative Engine Optimization (GEO) and Answer Engine Optimization (AEO) platforms are often described as “SEO for AI.” That framing is incomplete in regulated contexts.

GEO tools do not inject prompts or control inference. They systematically reshape the external content corpus from which large language models synthesize answers. When AI-generated representations are materially relied upon in regulated decisions, this practice creates an evidentiary gap: outcomes can influence judgment without producing reconstructable, auditable records explaining why those outcomes prevailed.

This is not a claim of illegality. It is a claim that governance has not kept pace with practice.

1. What GEO platforms actually do

Modern GEO platforms, including Profound, explicitly aim to influence how AI systems describe and cite brands. Public materials show they:

- Run high-volume, repeated prompts to observe citation and synthesis behavior

- Identify linguistic and structural features that survive AI summarization

- Generate and publish content optimized for AI extraction and citation

- Automate this process continuously across models and topics

The intervention is upstream. The target is the informational environment, not the prompt.
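To make the observation step concrete, it can be sketched as repeated sampling of the same prompts while counting how often each source is cited. This is a minimal sketch, not any vendor's method: `ask` is a hypothetical stand-in for a call to an AI system that returns the domains its answer cited, and the default of 20 runs per prompt is an arbitrary illustrative value. The `share_delta` helper shows one way a shift in citation share between two measurement windows could be expressed.

```python
from collections import Counter
from typing import Callable, Iterable

# Hypothetical signature: send one prompt to an AI system and return the
# source domains its answer cited. Not a real vendor or model API.
AskFn = Callable[[str], list[str]]


def citation_share(ask: AskFn, prompts: Iterable[str], runs_per_prompt: int = 20) -> dict[str, float]:
    """Fraction of all runs in which each source was cited at least once."""
    counts: Counter[str] = Counter()
    total_runs = 0
    for prompt in prompts:
        for _ in range(runs_per_prompt):
            # Answers vary between runs, so each prompt is sampled repeatedly;
            # set() counts a source at most once per run.
            counts.update(set(ask(prompt)))
            total_runs += 1
    if total_runs == 0:
        return {}
    return {source: n / total_runs for source, n in counts.items()}


def share_delta(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Change in citation share per source between two measurement windows."""
    sources = before.keys() | after.keys()
    return {s: after.get(s, 0.0) - before.get(s, 0.0) for s in sources}


# Usage with a deterministic, hypothetical stand-in for a real AI system.
def fake_ask(prompt: str) -> list[str]:
    if "expense" in prompt:
        return ["vendor-a.example", "review-site.example"]
    return ["vendor-b.example"]


shares = citation_share(fake_ask, ["best expense tool?", "best corporate card?"], runs_per_prompt=5)
print(shares)
```

Because answers vary between runs, a share computed from a single run says little. Repetition is what turns anecdote into a measurable signal, and that measurability is what lets the practice run continuously rather than as one-off PR.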

2. Not prompt injection, still a governance problem

GEO platforms do not control system prompts or retrieval at runtime. The risk arises elsewhere.

AI systems synthesize from what is available. If the available corpus has been deliberately shaped to privilege certain representations, AI outputs will reflect that shaping. When those outputs are later treated as authoritative inputs rather than informal context, there is no durable record explaining:

- Why a specific representation appeared

- Which sources dominated synthesis

- Whether commercial optimization influenced the outcome

- How the result could be bounded or challenged

Governance does not require perfect replay. It requires the ability to bound influence and exclude known, controllable sources of distortion. GEO undermines that ability.
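The four questions above can be written down as fields of a record captured at the moment of reliance. The sketch below is hypothetical: the class, its field names, and the idea of a maintained list of known-optimized sources are illustrative assumptions, not an existing standard. Note that `cited_sources` holds only what the answer surfaced, not what actually shaped synthesis, which is exactly the attribution gap described in this section.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class RepresentationRecord:
    """Hypothetical record of one AI-mediated representation relied upon in a decision."""

    question: str                    # the prompt or query exactly as posed
    answer_text: str                 # the representation exactly as shown
    model_id: str                    # which AI system and version produced it
    cited_sources: tuple[str, ...]   # sources the answer surfaced (not full attribution)
    known_optimized_sources: tuple[str, ...] = ()  # sources known to be commercially optimized
    captured_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

    def optimized_citations(self) -> tuple[str, ...]:
        """Cited sources that appear on the known-optimized list."""
        known = set(self.known_optimized_sources)
        return tuple(s for s in self.cited_sources if s in known)

    def is_bounded(self) -> bool:
        """True only if something was cited and none of it is known to be optimized."""
        return bool(self.cited_sources) and not self.optimized_citations()


# Usage with hypothetical values.
record = RepresentationRecord(
    question="Which spend-management vendors are considered lowest risk?",
    answer_text="Vendor X is widely regarded as the leading option.",
    model_id="example-model-2025-01",
    cited_sources=("vendor-x.example", "review-site.example"),
    known_optimized_sources=("vendor-x.example",),
)
print(record.optimized_citations())  # ('vendor-x.example',)
print(record.is_bounded())           # False
```

The `is_bounded` check is deliberately conservative: an answer that cites nothing cannot be bounded at all, and one that cites a known-optimized source cannot be treated as free of commercial influence.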

3. Why the SEO analogy fails

From ranking to assertion

Search engines rank links. LLMs assert propositions. Once an AI states that a product is “widely regarded as the leading option,” evidentiary expectations attach.

From inspectable outputs to opaque synthesis

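A ranked results page is inspectable: each result links to an identifiable source. The path by which an LLM synthesizes an answer is not. Optimization that acts on synthesis therefore removes the ability to reconstruct how an answer was formed.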

From discounted trust to perceived neutrality

Users tend to discount rankings as commercially influenced; they tend to treat AI summaries as neutral. Governance risk follows perception, not intent.

4. The regulated finance escalation

The issue intensifies when GEO is used by regulated entities. The public identification of Ramp as a GEO client illustrates the escalation point. AI-mediated representations can plausibly influence:

- Vendor selection and procurement approvals

- Counterparty trust and risk assessments

- Due diligence conducted using AI tools

These are decision-adjacent contexts. Once AI-mediated representations cross into them, content optimization becomes a decision input.

Ramp is referenced solely because its sector is regulated and its GEO engagement is public. The exposure applies to any regulated firm using similar tooling.

5. The evidentiary asymmetry

GEO platforms can:

- Shape AI-visible content systematically

- Measure representation deltas

- Monetize increased citation dominance

Regulated firms cannot:

- Reconstruct why a representation prevailed

- Attribute influence to specific optimizations

- Demonstrate that reliance was reasonable

- Produce audit-grade records explaining outcomes

When scrutiny arrives, optimization narratives are irrelevant. Evidence controls.

6. “We don’t control the model” is insufficient

The obligation to account for AI-mediated representations does not arise from optimization itself. It arises when those representations are relied upon in contexts where explanation or justification is later required.

Control is contributory, not binary. Engaging tools designed to shape AI representations makes the resulting influence foreseeable and therefore governable.

7. The correct framing: evidentiary contamination

This is not prompt injection. It is evidentiary contamination: commercially shaped corpus inputs that defeat attribution and reconstruction when AI outputs are relied upon.

The differentiator from traditional PR is automation plus feedback loops. GEO tunes content directly against model behavior, continuously and at scale, rather than against human judgment.

8. What needs to change

- Treat GEO/AEO as external AI reliance, not marketing infrastructure.

- Prohibit use of AI-optimized representations as authoritative inputs without evidentiary controls.

- Establish independent, time-indexed records of authorized reliance on AI-mediated representations.
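One way to read “independent, time-indexed records” in engineering terms is an append-only log in which each entry carries a UTC timestamp and the hash of the previous entry, so that later edits or deletions become detectable. This is a minimal sketch under stated assumptions: the class, field names, and decision identifiers are hypothetical, and independence is an organizational property (who holds and operates the log) that code alone cannot supply.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class RelianceLog:
    """Hypothetical append-only log of authorized reliance on AI-mediated representations.

    Each entry stores a UTC timestamp and the SHA-256 hash of the previous entry,
    so any later edit or deletion breaks the chain and is detectable by verify().
    """

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, decision_id: str, representation: str, authorized_by: str) -> dict:
        body = {
            "decision_id": decision_id,
            "representation": representation,
            "authorized_by": authorized_by,
            "recorded_at": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every hash and check each entry points at its predecessor.
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


# Usage with hypothetical decisions.
log = RelianceLog()
log.append("PROC-001", "Vendor X is widely regarded as the leading option.", "procurement-lead")
log.append("PROC-002", "Vendor Y meets our due-diligence criteria.", "risk-officer")
print(log.verify())  # True

log.entries[0]["representation"] = "edited after the fact"
print(log.verify())  # False
```

Hash chaining only makes tampering detectable; it does not explain why a representation prevailed. Its role is narrower: to fix, at a known time, what was relied upon and who authorized it.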

Closing

GEO platforms are doing what they claim to do. The problem is timing: they operate in an evidentiary vacuum just as AI-mediated representations begin to influence regulated decisions.

For consumer brands, GEO may remain competitive marketing.

For regulated finance, it is an unmanaged governance risk.

That distinction, not technical semantics, is the point.