How General Counsel Can Operationalise AIVO Inside Legal Workflows

An evidence-first approach to AI-mediated reliance

Executive premise

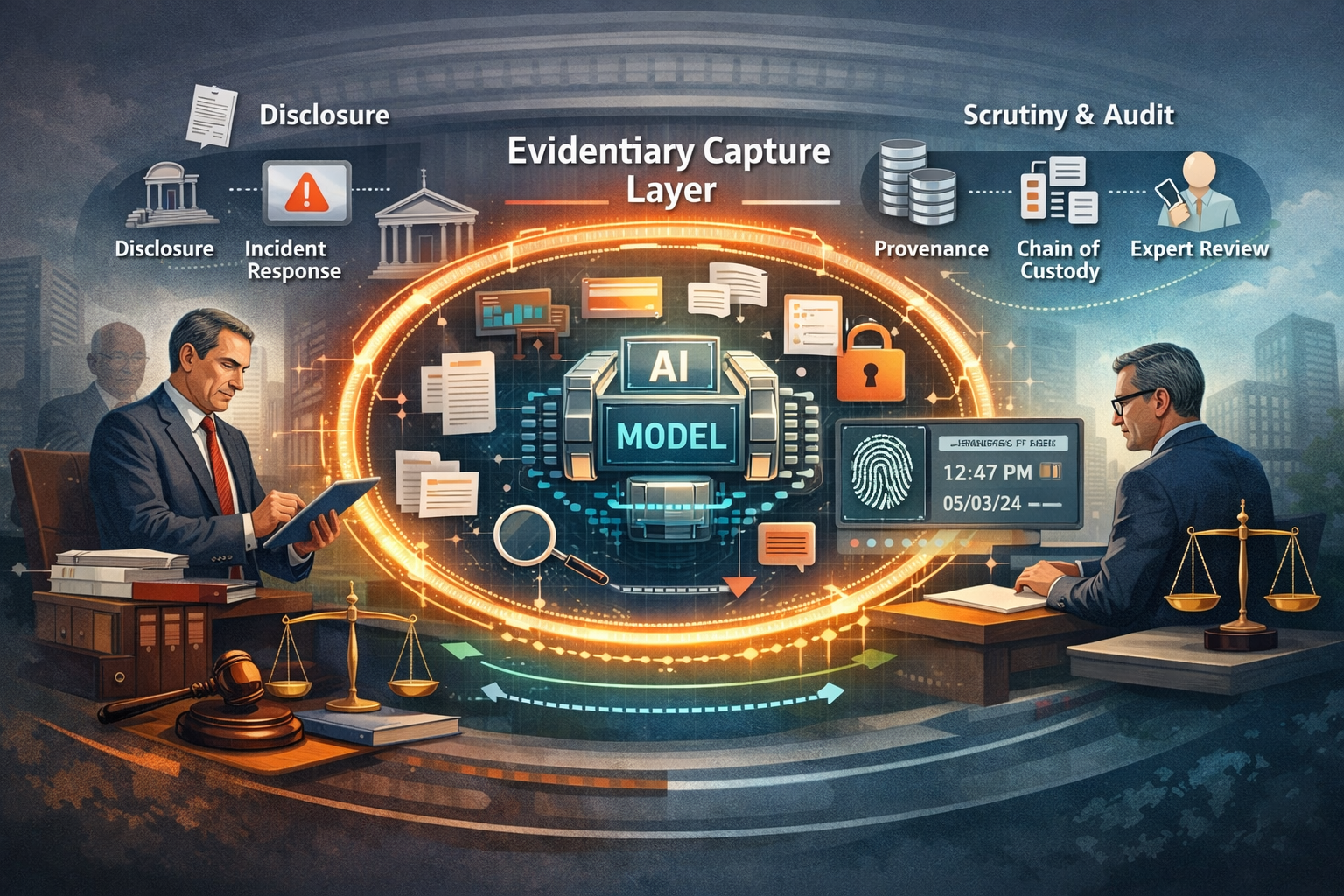

AI governance discussions still focus heavily on model quality, controls, and deployment risk. Those issues matter. But once AI-mediated statements influence disclosure, eligibility, pricing, health guidance, or internal conclusions, a narrower and distinct risk emerges: whether the organisation can later evidence what was relied upon, when, and under what constraints.

That risk is evidentiary, not technical.

AIVO is designed to address this specific failure mode. It does not validate AI outputs, reduce bias, or guarantee admissibility. It preserves AI-mediated decision narratives at the moment of reliance so they can later be examined, challenged, or defended without speculation.

Preserving an AI-mediated output does not cure bias or methodological weakness. It makes those defects examinable rather than hypothetical.

Reframing AI governance once reliance exists

In many organisations, AI governance sits with IT or data science. That allocation works for development and deployment oversight. It becomes incomplete once AI outputs are relied upon in governed decisions.

At that point, the governing questions change:

- What information was presented?

- Who relied on it?

- In what form?

- With what limits and qualifications?

Engineering logs and model documentation explain system behaviour. They rarely reconstruct what a decision-maker actually saw. Courts, regulators, and auditors consistently focus on the latter.

AIVO therefore sits alongside established General Counsel workflows:

- disclosure review and sign-off

- incident response

- supervisory inquiry

- internal investigation

- litigation hold and discovery

This reflects where legal accountability ultimately resides.

What an AIVO deliverable is and is not

An AIVO deliverable is a fixed evidentiary artifact capturing an AI-mediated output under controlled conditions. It is intentionally limited in scope.

It is defined by four properties:

- Temporal capture: the artifact reflects the output as it existed at the moment of reliance. It is not recreated after an issue arises.

- Bounded reproducibility: variance across time, model versions, or environments is measured and documented against a defined tolerance, which is set ex ante for the workflow and not adjusted after an incident. Non-determinism is not eliminated. It is made inspectable.

- Attribution clarity: the boundary between AI output and human judgment is explicit. The artifact does not blur authorship or responsibility.

- Non-intervention: the system does not improve, summarise, validate, or editorialise the output. This prevents narrative drift and hindsight contamination.
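The four properties can be made concrete as a data record. The following is a minimal Python sketch under stated assumptions, not AIVO's actual format: `RelianceArtifact`, `capture`, `annotate`, and every field name are hypothetical. A frozen dataclass stands in for temporal capture and non-intervention, human notes are stored apart from the AI text for attribution clarity, and a SHA-256 hash covers only the AI-attributable fields. Bounded reproducibility is not shown here.

```python
import hashlib
import json
from dataclasses import dataclass, replace
from datetime import datetime, timezone


@dataclass(frozen=True)
class RelianceArtifact:
    """Hypothetical record of one AI output captured at the moment of reliance."""

    workflow_id: str         # hypothetical identifier for the governed workflow
    model_label: str         # model/version string as reported at capture time
    prompt: str              # input exactly as presented to the system
    ai_output: str           # verbatim output, never edited (non-intervention)
    captured_at: str         # UTC timestamp taken at capture (temporal capture)
    human_notes: tuple = ()  # reviewer notes, kept apart from ai_output (attribution)

    def content_hash(self) -> str:
        """SHA-256 over canonical JSON of the AI-attributable fields only."""
        payload = {
            "workflow_id": self.workflow_id,
            "model_label": self.model_label,
            "prompt": self.prompt,
            "ai_output": self.ai_output,
            "captured_at": self.captured_at,
        }
        encoded = json.dumps(payload, sort_keys=True, ensure_ascii=False).encode("utf-8")
        return hashlib.sha256(encoded).hexdigest()


def capture(workflow_id: str, model_label: str, prompt: str, ai_output: str) -> RelianceArtifact:
    """Freeze the output as received: no summarising, cleaning, or validation."""
    return RelianceArtifact(
        workflow_id=workflow_id,
        model_label=model_label,
        prompt=prompt,
        ai_output=ai_output,
        captured_at=datetime.now(timezone.utc).isoformat(),
    )


def annotate(artifact: RelianceArtifact, reviewer: str, note: str) -> RelianceArtifact:
    """Return a copy with a human note appended; the AI text and its hash are unchanged."""
    return replace(artifact, human_notes=artifact.human_notes + ((reviewer, note),))


# Usage: the hash of the AI content survives human annotation.
original = capture("disclosure-review", "model-v1", "Summarise risk factors", "Liquidity risk is elevated.")
reviewed = annotate(original, "counsel@example.com", "Checked against filing draft.")
print(original.content_hash() == reviewed.content_hash())
```

Excluding human notes from the hash is a design choice: reviewers can annotate without appearing to alter the evidence, and authorship stays separable. A frozen dataclass only blocks accidental in-process mutation; durable immutability would depend on storage and access controls outside this sketch.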

What an AIVO deliverable does not do:

- It does not assess accuracy or bias.

- It does not certify compliance.

- It does not guarantee admissibility.

Courts increasingly scrutinise machine-generated material under expert-style reliability frameworks, and proposed Federal Rule of Evidence 707 would formalise this by extending Rule 702-style scrutiny to machine outputs offered without an expert witness. AIVO supports authenticity, provenance, and temporal preservation. Questions of methodological reliability remain for legal and judicial assessment.

How AIVO fits into legal workflows in practice

1. Disclosure and sign-off

A common disclosure risk is not inaccuracy, but inability to defend provenance.

Operationally:

- AI-generated summaries or explanations used in disclosure are captured as AIVO artifacts before approval.

- The artifact becomes part of the disclosure record.

- If challenged later, counsel can evidence what was reviewed rather than rely on post-hoc reconstruction.

This does not replace substantive review. It reduces provenance ambiguity.
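The disclosure steps above amount to a gate: sign-off is recorded only against text that was captured first. A minimal Python sketch, assuming a hypothetical `approve_disclosure` function and record types invented for illustration; in practice the check would sit inside whatever sign-off or document-management system is already in use.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


def sha256_text(text: str) -> str:
    """Hash the exact text, byte for byte, as UTF-8."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class CapturedOutput:
    artifact_id: str       # hypothetical identifier issued at capture
    output_hash: str       # hash of the AI text as captured
    captured_at: datetime  # timezone-aware capture time


@dataclass(frozen=True)
class SignOff:
    approver: str
    artifact_id: str       # links the approval to the evidence it relied on
    approved_at: datetime


def approve_disclosure(reviewed_text, captured, approver, now=None):
    """Record sign-off only if the reviewed AI text matches an earlier capture."""
    now = now if now is not None else datetime.now(timezone.utc)
    if sha256_text(reviewed_text) != captured.output_hash:
        raise ValueError("Reviewed text differs from the captured artifact")
    if captured.captured_at > now:
        raise ValueError("Capture must precede approval")
    return SignOff(approver=approver, artifact_id=captured.artifact_id, approved_at=now)


# Usage: capture first, then approve against the same text.
summary = "Eligibility rationale: applicant meets criteria A and B."
captured = CapturedOutput("ART-001", sha256_text(summary), datetime.now(timezone.utc) - timedelta(hours=1))
record = approve_disclosure(summary, captured, "General Counsel")
```

Comparing hashes rather than eyeballing text makes any edit between capture and approval visible, including whitespace changes. That strictness is deliberate: the record should show exactly what was reviewed.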

2. Incident response and supervisory review

In AI-related incidents, regulators typically ask what the system communicated, not what it was designed to do.

AIVO supports:

- reconstruction of AI outputs as delivered

- comparison across time or model versions

- separation of model drift from contextual change

This does not resolve upstream quality issues. It allows them to be examined without speculation.
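Comparison across time or model versions, measured against a tolerance fixed in advance, might look like the sketch below. It assumes character-level similarity from Python's `difflib.SequenceMatcher` as the variance metric and a hash of prompt plus context as the test for contextual change. Both are illustrative choices, and `ToleranceSpec`, `Observation`, and `compare` are hypothetical names.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class ToleranceSpec:
    """Hypothetical tolerance, fixed ex ante for one workflow and not edited post-incident."""
    min_similarity: float  # 0.0 to 1.0, compared with SequenceMatcher.ratio()


@dataclass(frozen=True)
class Observation:
    model_label: str   # model/version string reported at the time of the run
    context_hash: str  # hash of the prompt plus any retrieved context
    output: str


def compare(baseline: Observation, rerun: Observation, spec: ToleranceSpec) -> dict:
    """Measure variance against the preset tolerance and label its likely source."""
    similarity = SequenceMatcher(None, baseline.output, rerun.output).ratio()
    if baseline.context_hash != rerun.context_hash:
        source = "contextual change"
    elif baseline.model_label != rerun.model_label:
        source = "model version change"
    else:
        source = "run-to-run variance"
    return {
        "similarity": round(similarity, 3),
        "within_tolerance": similarity >= spec.min_similarity,
        "source": source,
    }


spec = ToleranceSpec(min_similarity=0.9)
base = Observation("model-v1", "ctx-abc", "Applicant is eligible under criterion A.")
later = Observation("model-v2", "ctx-abc", "Applicant is not eligible under criterion A.")
result = compare(base, later, spec)
print(result)
```

In this example, inserting “not” reverses the conclusion yet still scores above the 0.9 threshold. Surface similarity is a weak proxy for meaning, so the metric and tolerance are themselves governance decisions to be made and documented before reliance, not after an incident.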

3. Litigation and discovery

Non-deterministic systems complicate discovery because re-running them may not reproduce the output that was originally relied upon.

AIVO artifacts are created before disputes arise and can function as:

- time-stamped exhibits

- reference points for expert analysis

- anchors for reliance assessment

They do not replace logs or internal records. They address a different gap: reconstructability of the relied-upon narrative itself.
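For artifacts to serve as time-stamped exhibits, their handling after capture also needs to be demonstrable. One common technique is a hash-chained custody log, in which each entry commits to the hash of the entry before it. The Python sketch below is illustrative only; the log layout and the names `append_event` and `verify_chain` are hypothetical and do not describe AIVO's implementation.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(entry: dict) -> str:
    """Hash every field except the entry's own hash, in canonical JSON form."""
    body = {k: v for k, v in entry.items() if k != "hash"}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_event(log: list, artifact_hash: str, event: str, actor: str, at=None) -> dict:
    """Append a custody event that commits to the previous entry's hash."""
    stamp = at if at is not None else datetime.now(timezone.utc)
    entry = {
        "artifact_hash": artifact_hash,
        "event": event,  # e.g. "captured", "litigation hold", "produced"
        "actor": actor,
        "at": stamp.isoformat(),
        "prev": log[-1]["hash"] if log else GENESIS,
    }
    entry["hash"] = _entry_hash(entry)
    log.append(entry)
    return entry


def verify_chain(log: list) -> bool:
    """True if every entry is unaltered and links to the entry before it."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(entry):
            return False
        prev = entry["hash"]
    return True


artifact = hashlib.sha256("AI output as relied upon".encode("utf-8")).hexdigest()
log = []
append_event(log, artifact, "captured", "capture-service")
append_event(log, artifact, "litigation hold", "legal")
print(verify_chain(log))
```

Altering or removing any earlier entry breaks the chain. Truncating the most recent entries is not detected by the chain alone, so the latest hash would also need to be anchored somewhere independent, such as a record held by a third party.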

Transmission formats as an evidentiary choice

Paper-format artifacts

AIVO deliverables can be issued as signed PDFs suitable for:

- board and risk committee review

- regulatory submissions

- litigation exhibits

Paper persists because many high-stakes decisions occur in static review contexts where chain of custody and annotation matter.

Machine-readable artifacts for controlled reference

AIVO artifacts can also be delivered in structured, machine-readable form for reference-only use via the Model Context Protocol (MCP) or equivalent interfaces used with OpenAI, Anthropic, or other LLM providers.

This does not permit free-form ingestion. The design intent is citation, not synthesis. Artifacts are treated as immutable evidence that may be referenced but not rewritten. Enforcement requires explicit technical and policy controls defined by General Counsel.
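The “citation, not synthesis” intent can be illustrated with a write-once store that exposes artifacts read-only and hands out citations rather than editable copies. This is a plain Python sketch, not an MCP server: it uses no MCP SDK, and `EvidenceStore`, `register`, `get`, and `cite` are hypothetical names. An MCP integration would need to expose equivalent read-only behaviour through its own interfaces.

```python
import hashlib
from types import MappingProxyType


class EvidenceStore:
    """Hypothetical write-once store: artifacts can be read and cited, not rewritten."""

    def __init__(self) -> None:
        self._items: dict = {}

    def register(self, artifact_id: str, text: str) -> str:
        """Store an artifact once and return its SHA-256; re-registration is refused."""
        if artifact_id in self._items:
            raise PermissionError(f"{artifact_id} already registered; evidence is immutable")
        digest = hashlib.sha256(text.encode("utf-8")).hexdigest()
        # MappingProxyType gives callers a read-only view of the record.
        self._items[artifact_id] = MappingProxyType({"text": text, "sha256": digest})
        return digest

    def get(self, artifact_id: str) -> MappingProxyType:
        """Return a read-only view for verbatim quotation."""
        return self._items[artifact_id]

    def cite(self, artifact_id: str) -> str:
        """Return a short citation that points to the artifact instead of restating it."""
        record = self._items[artifact_id]
        return f"[AIVO artifact {artifact_id}, sha256:{record['sha256'][:12]}]"


store = EvidenceStore()
digest = store.register("ART-001", "Applicant meets eligibility criteria A and B.")
print(store.cite("ART-001"))
```

The guard here is convention-level: Python code can still reach `_items` directly. That mirrors the point above that enforcement depends on explicit technical and policy controls, not on the data structure alone.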

Governance boundaries General Counsel should define

To preserve evidentiary integrity, organisations should clearly distinguish between:

- evidence-bearing AI outputs

- advisory or analytical use of AI

Recommended governance measures include:

- prohibiting AI summarisation or paraphrasing of evidentiary artifacts in disclosure contexts

- preventing post-incident recreation from being treated as original output

- separating evidence repositories from advisory prompt environments

In practice, enforcement depends less on tooling than on classification. Whether AI outputs are designated as evidence or assistance determines how they are governed, retained, and reviewed.
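Because classification drives governance, the boundaries listed above can be expressed as a small policy table. The Python sketch assumes two designations, two repositories named "evidence" and "advisory", and four actions; all of these names are hypothetical and would be replaced by the organisation's own taxonomy.

```python
from enum import Enum


class Designation(Enum):
    EVIDENCE = "evidence"  # output relied upon in a governed decision
    ADVISORY = "advisory"  # drafting or analytical assistance only


class Action(Enum):
    CITE = "cite"
    SUMMARISE = "summarise"
    PARAPHRASE = "paraphrase"
    REGENERATE = "regenerate"


# Hypothetical policy table: actions permitted for each designation.
ALLOWED = {
    Designation.EVIDENCE: {Action.CITE},
    Designation.ADVISORY: set(Action),
}


def check(designation: Designation, action: Action, repository: str) -> bool:
    """Return True if permitted; raise PermissionError if the boundary is crossed."""
    # Evidence and advisory material live in separate repositories.
    if repository != designation.value:
        raise PermissionError(f"{designation.value} outputs belong in the {designation.value} repository")
    if action not in ALLOWED[designation]:
        raise PermissionError(f"{action.value} is not permitted on {designation.value} outputs")
    return True


print(check(Designation.EVIDENCE, Action.CITE, "evidence"))
```

Regenerating an evidentiary output is refused outright, so a post-incident re-run can only enter the record as new advisory material, never as the original.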

Independence and the limits of internal reconstruction

Some organisations can reconstruct AI outputs internally, particularly where independent auditors are involved. Many cannot do so credibly once reliance exists.

Internal observability tools may support reconstruction, but after reliance they are often assessed as operational records rather than neutral evidentiary artifacts. Post-hoc recreation is vulnerable to hindsight bias, incentive distortion, and credibility challenge.

AIVO’s external positioning is intended to reduce, not eliminate, that contestability. Independence supports credibility. It does not guarantee acceptance.

Takeaway for General Counsel

AI has become a representational channel inside governed decisions. Treating its outputs as ephemeral assistance is increasingly misaligned with legal scrutiny.

AIVO does not replace model governance, risk management, or legal judgment. It complements them by preserving what those systems cannot: the relied-upon narrative itself.

That function is evidentiary. Its value lies in restraint.

Call to Action

If AI-mediated summaries, explanations, or eligibility narratives already influence decisions in your organisation, a practical next step is to test whether those outputs could be evidenced later.

A 10-day AIVO Reliance Risk Probe examines a single live workflow and produces:

- one evidentiary artifact

- a scoped gap assessment

No system changes. No optimisation. No claims of admissibility.

In many cases, probes identify several points where reliance exists without durable evidence. Identifying that asymmetry early is the purpose.

Contact: audit@aivostandard.org

Appendix A: Admissibility context (Rules 702 and 707)

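- Rule 702 (Expert Evidence): Requires that expert testimony help the trier of fact and rest on sufficient facts or data, reliable principles and methods, and a reliable application of those principles and methods to the facts of the case.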

- Proposed Rule 707 (Machine-Generated Evidence): Would extend similar scrutiny to machine outputs offered without an expert witness, emphasising reliability, transparency of method, and appropriate context.

Where AIVO fits:

- Supports authenticity, provenance, and temporal integrity.

- Does not substitute for validation of the underlying AI system.

- Enables courts and regulators to examine reliability questions using a preserved record rather than conjecture.

Appendix B: General Counsel checklist

Is this workflow creating evidentiary reliance?

Answering “yes” to any of the following indicates evidentiary exposure:

- Is an AI-generated summary, explanation, or eligibility rationale reviewed or approved by humans?

- Could that output influence disclosure, customer eligibility, pricing, credit, health, or enforcement decisions?

- Would a regulator, court, or auditor later ask what information the decision-maker saw?

- Could the output change over time if regenerated?

- Would post-hoc reconstruction rely on memory, screenshots, or re-runs?

If any answer is yes, evidentiary capture should be considered.
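The checklist can also be encoded so that each workflow's screening is recorded consistently. In this Python sketch the field names paraphrase the five questions and are hypothetical; the only rule implemented is the one stated above, that any single “yes” indicates exposure.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class WorkflowScreen:
    """Hypothetical record of Appendix B answers for one workflow (True means yes)."""
    ai_rationale_reviewed_by_humans: bool
    influences_regulated_decision: bool  # disclosure, eligibility, pricing, credit, health, enforcement
    likely_asked_what_was_seen: bool     # by a regulator, court, or auditor
    varies_on_regeneration: bool
    reconstruction_informal: bool        # memory, screenshots, or re-runs


def evidentiary_exposure(screen: WorkflowScreen) -> tuple[bool, list[str]]:
    """Any single yes indicates exposure; also return which questions triggered it."""
    triggers = [f.name for f in fields(screen) if getattr(screen, f.name)]
    return bool(triggers), triggers


screen = WorkflowScreen(True, True, False, True, False)
print(evidentiary_exposure(screen))
```

Recording which questions triggered exposure, not just the yes/no result, gives the later gap assessment a starting point.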

Appendix C: Key legal references (non-exhaustive)

- Federal Rule of Evidence 702, Testimony by Expert Witnesses

- Proposed Federal Rule of Evidence 707, Machine-Generated Evidence

- Daubert v. Merrell Dow Pharmaceuticals, Inc., 509 U.S. 579 (1993)

- Advisory Committee Notes on emerging treatment of AI-generated evidence

These frameworks underscore a common theme: preservation and reliability are distinct questions. AIVO addresses the former so the latter can be examined on the merits.