If an AI Summarized Your Company Today, Could You Prove It Tomorrow?

Yesterday, an AI system likely described your company to someone.

Not to you.

Not inside your systems.

To a third party.

A journalist preparing background.

An analyst framing a comparison.

A counterparty forming an initial view.

A regulator doing informal research.

It did so fluently, confidently, and without leaving you a copy.

The question most organizations cannot answer

Here is the question that matters:

If that description later becomes relevant, can you reconstruct exactly what was shown, when, and in what form?

Not what your filings say.

Not what your policies intend.

What the AI actually produced at the moment it was relied upon.

For most organizations, the answer is no.

This is not an AI safety problem

This is not about hallucinations, bias, or rogue models.

It is not about AI systems you built, deployed, or approved.

It is about evidence.

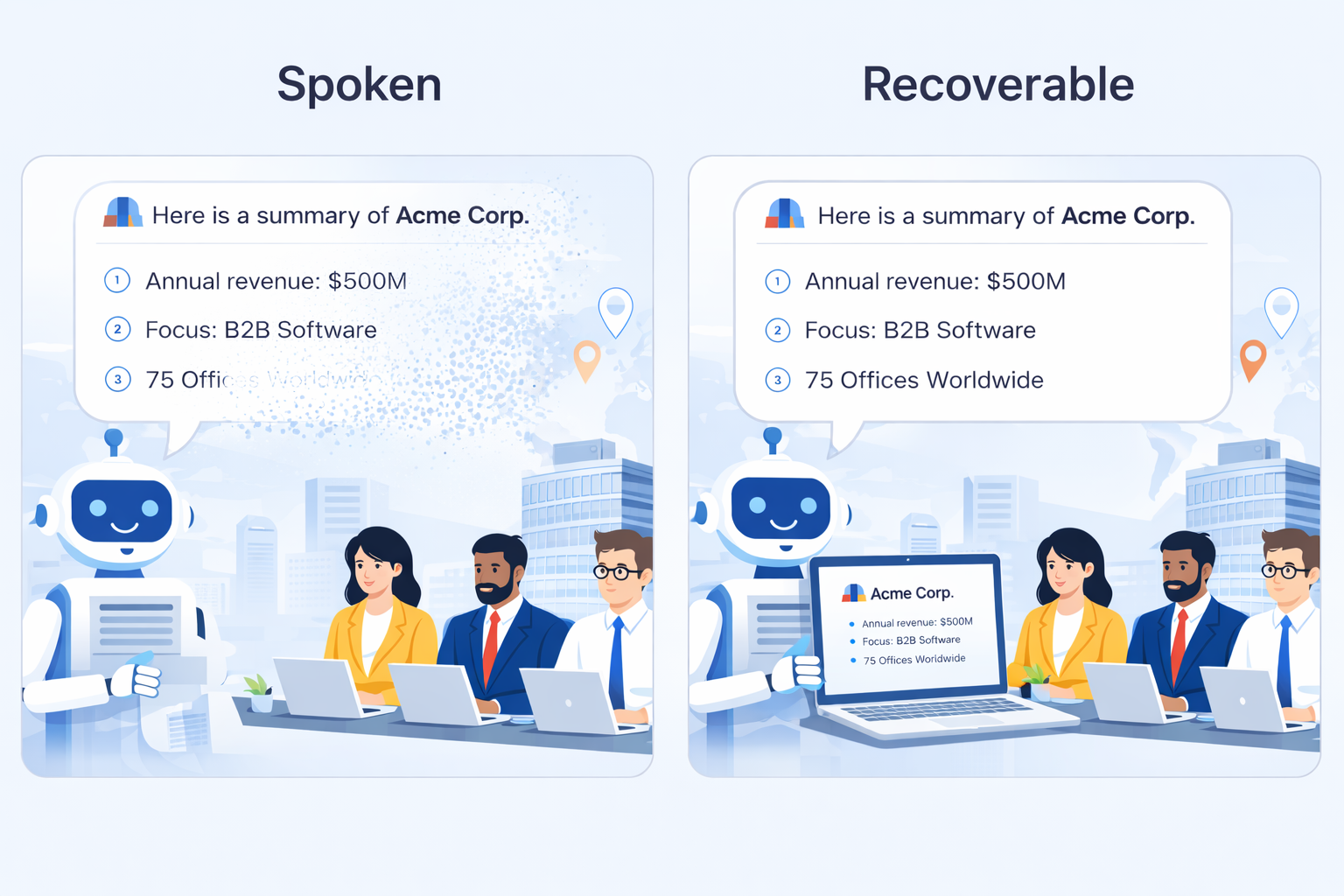

General-purpose AI systems now act as informal narrative intermediaries. When prompted about an organization, they synthesize public information into confident, evaluative summaries that resemble disclosure, diligence, or risk narratives.

Those summaries influence real decisions.

They rarely leave an attributable, time-indexed record.

The absence only becomes visible after something goes wrong.

How to tell if this already affects you

You are exposed if any of the following are true:

- AI systems you do not control routinely describe your company

- AI summaries appear in analyst prep, diligence materials, or media research

- Legal or compliance teams assume outputs can be recreated later

- External AI outputs are reviewed for accuracy but not preserved as evidence

- Screenshots are treated as sufficient records

If none apply, stop reading.

If even one applies, keep going.

What actually happens when the output matters

Consider a common sequence:

- A third party reviews an AI-generated summary of your company

- That summary informs a question, judgment, or escalation

- Weeks later, the representation is challenged

- You are asked what exactly was relied upon

At that point:

- the model version has changed

- the output cannot be reproduced

- the prompt is unknown

- the surrounding context is gone

There is no misconduct here.

Only the absence of a record.
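To make the missing record concrete, here is a minimal sketch of what preserving one external AI output at the moment of reliance might look like. It is an illustration under stated assumptions, not a standard or a complete evidentiary process: the `CapturedOutput` fields, the `capture`, `seal`, and `verify` helpers, and the example values are all hypothetical. The fields simply mirror the items listed above (model version, output, prompt, surrounding context) plus who captured it and when.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class CapturedOutput:
    """Hypothetical record of one external AI response about the organization."""
    system: str         # name of the AI system as shown to the user
    model_version: str  # version label if displayed; "unknown" otherwise
    prompt: str         # exact prompt text, if known
    output: str         # exact text the system produced
    context: str        # why and where the output was relied upon
    captured_by: str    # person or team that preserved the record
    captured_at: str    # capture time, ISO 8601 in UTC


def seal(record: CapturedOutput) -> str:
    """Return a SHA-256 digest over a canonical JSON form of the record."""
    canonical = json.dumps(asdict(record), sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def capture(system, model_version, prompt, output, context, captured_by, now=None):
    """Build a record stamped with the capture time and return it with its digest."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    record = CapturedOutput(system, model_version, prompt, output,
                            context, captured_by, timestamp)
    return record, seal(record)


def verify(record: CapturedOutput, digest: str) -> bool:
    """Check that the record still matches the digest taken at capture time."""
    return seal(record) == digest
```

A caller would run `capture(...)` when the output is reviewed, store the record and its digest separately, and later call `verify(record, digest)` to show the text has not changed since. A self-computed digest only demonstrates integrity, not when the capture happened; establishing the time independently would need something beyond this sketch, such as an external timestamping service or write-once storage.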

Why governance frameworks keep missing this

Most existing AI governance assumes one of two conditions:

- the AI system is internal and controllable

- the output can be reconstructed after the fact

External AI violates both.

Because the system is third-party and informal, it sits outside model risk management, cybersecurity, and traditional AI oversight. Because the output is ephemeral, it does not meet evidentiary standards once challenged.

This is why the issue typically surfaces during inquiry, dispute, or discovery rather than during design or deployment.

The accountability gap

This leads to a simple but unresolved question:

Who inside your organization is accountable for AI-generated representations you did not produce but that others rely upon?

In most organizations, the honest answer is: no one.

Not because of neglect, but because the risk category has not yet been named.

A present condition, not a future risk

This is not a hypothetical or a forecast.

As AI systems increasingly mediate how organizations are described, compared, and assessed, the absence of a recoverable record is already becoming visible in legal review, diligence processes, and informal regulatory inquiry.

Not an accuracy problem.

A provenance problem.

A final question

If an AI summarized your company today and that summary mattered tomorrow, could you produce it?

If the answer is uncertain, that uncertainty is the issue.

We are documenting how organizations are beginning to confront this evidentiary gap across regulated and non-regulated sectors.

If this question is already circulating inside your organization, you are not early.

If it is not, it likely will be.

Contacts: journal@aivojournal.org for commentary | audit@aivostandard.org for implementation.