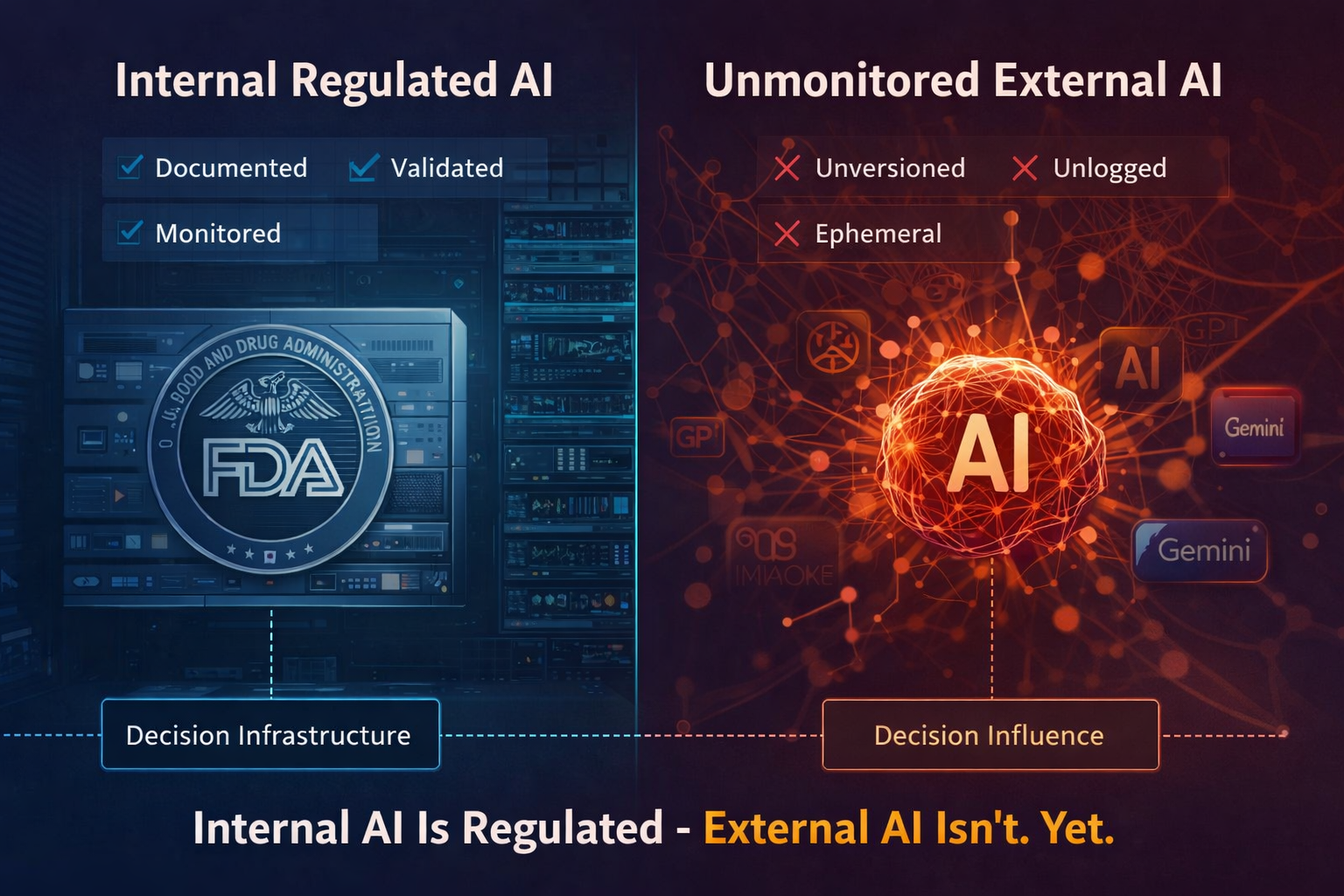

Internal AI Is Regulated. External AI Isn’t. Yet.

When the U.S. Food and Drug Administration formalized its risk-based credibility framework for AI in healthcare, commentary focused on compliance cost and innovation friction.

That interpretation understates the shift.

The FDA normalized AI as regulated decision infrastructure.

Once AI is treated as infrastructure, accountability expands beyond what an organization deploys internally.

It extends to what shapes decisions about that organization externally.

The Question Boards Are Not Yet Asking

Healthcare boards now routinely ask:

- Is our internal AI validated?

- Is context of use documented?

- Are we lifecycle-monitoring performance?

- Are we audit-ready?

Few ask:

- How are external AI systems ranking our oncology portfolio?

- Is our GLP-1 positioning stable across models?

- Can we reconstruct what prescribers or investors were shown last quarter?

- Do we have documented evidence of substitution drift?

That omission is a governance blind spot.

Regulatory Convergence

The FDA framework requires:

- Defined context of use

- Risk calibration proportional to consequence

- Documented validation

- Ongoing monitoring

This is evidentiary governance.

The same logic appears in the EU AI Act.

Articles 11 and 12 require technical documentation sufficient to demonstrate compliance, plus automatic event logging that makes a high-risk system's operation traceable.

Article 72 of the adopted Act (Article 61 in the Commission's 2021 proposal) requires post-market monitoring for high-risk systems.

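In healthcare, AI that influences diagnosis, therapy selection, or risk communication can readily fall into the high-risk category, particularly where it is, or forms a safety component of, a regulated medical device.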

Both regimes share a principle:

If AI materially influences decisions, its behavior must be documented and reconstructable.

That principle does not logically stop at internal systems.

The External AI Layer

Large language models now:

- Compare oncology regimens

- Rank GLP-1 therapies

- Summarize cardiovascular safety trade-offs

- Resolve “best treatment” prompts into shortlists

These outputs:

- Change silently

- Are not enterprise-archived

- Cannot be reconstructed after model updates

Internal AI: documented, validated, monitored.

External AI: ephemeral, unlogged, non-reconstructable.

The unmonitored side of this asymmetry is the one that sets ordering at the point of recommendation.

Ordering influences shortlists.

Shortlists influence revenue.

GLP-1 Resolution Instability

In controlled testing across ChatGPT, Claude, and Gemini using:

“Best GLP-1 drug for weight loss”

we observed:

- ChatGPT anchored Novo Nordisk first in 4 of 5 runs

- Claude anchored Eli Lilly first in 3 of 5 runs

- Gemini resolved to Lilly as “most effective” in 2 of 5 runs, despite balanced comparison tables

Inclusion frequency remained high across systems.

First-position anchoring did not.
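The gap between inclusion and anchoring is easiest to see as two separate metrics computed over the same runs. The sketch below is a minimal illustration, assuming each run has already been reduced to an ordered list of product names; the function name and the "Brand A/B/C" runs are hypothetical placeholders, not the testing data reported above.

```python
from collections.abc import Sequence


def inclusion_and_anchoring(runs: Sequence[Sequence[str]], product: str) -> tuple[float, float]:
    """Return (inclusion frequency, first-position anchoring rate) for one product.

    Each run is the ordered shortlist one model produced for one execution of a prompt.
    """
    if not runs:
        raise ValueError("need at least one run")
    included = sum(1 for shortlist in runs if product in shortlist)
    anchored = sum(1 for shortlist in runs if shortlist and shortlist[0] == product)
    return included / len(runs), anchored / len(runs)


# Hypothetical five-run capture for one model: Brand A is always listed,
# but placed first in only three of the five runs.
runs = [
    ["Brand A", "Brand B"],
    ["Brand B", "Brand A"],
    ["Brand A", "Brand B"],
    ["Brand B", "Brand A", "Brand C"],
    ["Brand A", "Brand C", "Brand B"],
]
print(inclusion_and_anchoring(runs, "Brand A"))  # (1.0, 0.6)
```

A product can score 1.0 on inclusion while its anchoring rate moves from run to run, which is the pattern described above.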

If 5 percent of prescribers treat AI outputs as preliminary filters, and first-position anchoring shifts 25 percent across systems, roughly 1.25 percent of prescribing decisions (0.05 × 0.25) are exposed. The exposure is not academic.

Under a $20 billion therapeutic class, even a 1 percent ordering sensitivity implies $200 million in potential revenue variance.

That is economically material.
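The arithmetic behind that estimate can be made explicit. This is a back-of-envelope sketch using the illustrative inputs above (5 percent of prescribers, a 25 percent anchoring shift, a $20 billion class); it assumes revenue at stake scales in direct proportion to the share of decisions affected, which is a simplification rather than a demand model, and the function name is hypothetical.

```python
def revenue_exposure(class_revenue: float, filter_share: float, anchoring_shift: float) -> tuple[float, float]:
    """Return (share of decisions exposed, revenue at stake).

    Assumes revenue moves in proportion to the share of prescribing decisions
    that pass through an AI shortlist whose first position changes.
    """
    sensitivity = filter_share * anchoring_shift
    return sensitivity, class_revenue * sensitivity


sensitivity, at_stake = revenue_exposure(20e9, 0.05, 0.25)
print(f"{sensitivity:.2%} of decisions, ${at_stake / 1e6:,.0f}M at stake")
# 1.25% of decisions, $250M at stake

# The more conservative 1 percent sensitivity used in the text:
print(f"${20e9 * 0.01 / 1e6:,.0f}M")  # $200M
```

The 1 percent figure sits just below the 1.25 percent these inputs imply, so $200 million is the cautious end of the range.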

Oncology Volatility

In oncology testing using:

“Most effective first-line therapy for metastatic [condition]”

we observed:

- Two systems anchored Therapy A consistently

- One system alternated between Therapy A and Therapy B

- One system excluded Therapy A entirely in 2 of 5 runs under safety-weighted prompts

Substitution was not random.

It clustered under prompts emphasizing risk tolerance and side-effect trade-offs.

This is not a scientific claim dispute.

It is a narrative weighting variance.

When AI systems compress complex trial data into simplified comparative statements, minor weighting differences can change which therapy is recommended first.
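Whether substitution clusters by framing is checkable once each captured run is tagged with the kind of prompt that produced it. A minimal sketch, assuming hypothetical framing labels (`neutral`, `safety_weighted`) and placeholder therapy names; the runs are illustrative, not observed results.

```python
from collections import defaultdict


def exclusion_rate_by_framing(runs: list[tuple[str, list[str]]], therapy: str) -> dict[str, float]:
    """Per prompt framing, the share of runs in which `therapy` does not appear at all.

    Each run is (framing_label, ordered list of therapies the model named).
    """
    totals: dict[str, int] = defaultdict(int)
    excluded: dict[str, int] = defaultdict(int)
    for framing, shortlist in runs:
        totals[framing] += 1
        if therapy not in shortlist:
            excluded[framing] += 1
    return {framing: excluded[framing] / n for framing, n in totals.items()}


# Hypothetical runs: Therapy A survives neutral prompts but drops out
# of some safety-weighted ones.
runs = [
    ("neutral", ["Therapy A", "Therapy B"]),
    ("neutral", ["Therapy A", "Therapy B"]),
    ("safety_weighted", ["Therapy B", "Therapy C"]),
    ("safety_weighted", ["Therapy B", "Therapy A"]),
    ("safety_weighted", ["Therapy C", "Therapy B"]),
]
print(exclusion_rate_by_framing(runs, "Therapy A"))
# {'neutral': 0.0, 'safety_weighted': 0.6666666666666666}
```

A rate that is near zero under one framing and high under another is what "not random" looks like in captured data.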

Litigation Exposure Scenario

Consider a hypothetical.

A company faces litigation alleging failure to adequately communicate comparative safety risk.

Discovery reveals:

- AI systems were ranking a competitor first under safety-weighted prompts for six months

- The company had no monitoring protocol

- No record of observed substitution

- No internal escalation

Plaintiff argument:

AI-mediated shortlisting was foreseeable.

The company failed to monitor a known decision influence layer.

Even if the company's conduct is legally defensible, the absence of documentation weakens its position.

The evidentiary gap becomes a strategic vulnerability.

Regulatory Inquiry Scenario

Imagine an EU regulator asks:

Under the AI Act's post-market monitoring obligations, how did external AI systems represent your therapy relative to competitors during the period in question?

If the response is:

“We do not monitor that layer,”

the follow-up is predictable.

If AI materially influences therapy perception, why was it excluded from risk oversight?

Monitoring Without Logging Is Not Monitoring

Lifecycle oversight requires reconstructability.

If you cannot retrieve:

- Historical ranking position

- Narrative framing

- Substitution frequency

- Cross-model divergence

you cannot demonstrate monitoring.

You can only assert it.

Assertions are insufficient under regulatory scrutiny.
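Reconstructability comes down to what is stored at capture time. A minimal sketch, assuming a hypothetical record schema (`Capture`) and an in-memory `CaptureLog` standing in for durable, write-once storage: each record keeps model version, timestamp, ordering, and verbatim text, so historical ranking position can be retrieved rather than asserted. Model names, versions, and dates are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Capture:
    """One archived response from one model to one standardized prompt (hypothetical schema)."""

    captured_at: datetime
    model: str
    model_version: str  # version label recorded at capture time
    prompt_id: str
    ranking: tuple[str, ...]  # products in the order the response presented them
    narrative: str  # verbatim response text, retained for framing review


class CaptureLog:
    """Append-only store: it exposes no update or delete, so earlier states stay reconstructable."""

    def __init__(self) -> None:
        self._records: list[Capture] = []

    def append(self, record: Capture) -> None:
        self._records.append(record)

    def position_history(
        self, model: str, prompt_id: str, product: str
    ) -> list[tuple[datetime, str, int | None]]:
        """(capture time, model version, 1-based position or None if absent), oldest first."""
        history = []
        for rec in sorted(self._records, key=lambda r: r.captured_at):
            if rec.model == model and rec.prompt_id == prompt_id:
                position = rec.ranking.index(product) + 1 if product in rec.ranking else None
                history.append((rec.captured_at, rec.model_version, position))
        return history


# Hypothetical captures of one prompt, one quarter apart, across a model update.
log = CaptureLog()
log.append(Capture(datetime(2025, 1, 6, tzinfo=timezone.utc), "model-x", "v1",
                   "glp1-best", ("Brand A", "Brand B"), "..."))
log.append(Capture(datetime(2025, 4, 7, tzinfo=timezone.utc), "model-x", "v2",
                   "glp1-best", ("Brand B", "Brand C"), "..."))
for when, version, position in log.position_history("model-x", "glp1-best", "Brand A"):
    print(when.date(), version, position)
# 2025-01-06 v1 1
# 2025-04-07 v2 None
```

The same records also support substitution counts (positions that become `None`) and cross-model comparison (same `prompt_id`, different `model`); in practice the list would be replaced by storage that enforces immutability.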

The Oncology Index

The forthcoming AIVO Oncology Index documents:

- Inclusion frequency across systems

- First-position anchoring rates

- Substitution and displacement patterns

- Narrative compression under safety-weighted prompts

- Volatility bands across model updates

Methodology:

- Standardized high-intent prompt sets

- Multi-model parallel execution

- Five-run stability testing per system

- Version-timestamped capture

- Structured ordering and narrative coding

The objective is not optimization.

It is documentation of external AI-mediated representation at the decision stage.

Where internal AI requires validation, external AI requires observability.
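Two of the outputs listed in the methodology, volatility bands and cross-model divergence, reduce to simple summaries once per-run results are captured. The sketch below assumes definitions that may differ from the Index's own: a volatility band is taken as the min-to-max range of a model's first-position rate across captured versions, and divergence as the spread between systems' most recent rates. All model names, versions, and rates are hypothetical.

```python
# Hypothetical first-position rates for one product and one prompt set.
# Each value is k/5 from a five-run stability test; versions are listed in capture order.
RATES: dict[str, dict[str, float]] = {
    "model-x": {"v1": 4 / 5, "v2": 3 / 5, "v3": 4 / 5},
    "model-y": {"v1": 1 / 5, "v2": 2 / 5},
}


def volatility_band(by_version: dict[str, float]) -> tuple[float, float]:
    """Lowest and highest rate observed across a model's captured versions."""
    values = list(by_version.values())
    return min(values), max(values)


def cross_model_divergence(rates: dict[str, dict[str, float]]) -> float:
    """Spread between systems, using each system's most recently captured version."""
    latest = [list(by_version.values())[-1] for by_version in rates.values()]
    return max(latest) - min(latest)


for model, by_version in RATES.items():
    low, high = volatility_band(by_version)
    print(f"{model}: band {low:.1f}-{high:.1f}")
print(f"cross-model divergence: {cross_model_divergence(RATES):.1f}")
# model-x: band 0.6-0.8
# model-y: band 0.2-0.4
# cross-model divergence: 0.4
```

With five runs per system, each rate moves in steps of 0.2, so differences smaller than one step cannot be distinguished; more runs per system would sharpen that resolution.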

The Expansion Is Predictable

Regulatory regimes rarely stop at initial containment.

Internal AI is now documented and monitored.

External AI already influences:

- Prescriber heuristics

- Institutional procurement filtering

- Investor comparative analysis

The legal question will not be whether external AI is “your system.”

It will be whether its influence was foreseeable.

Foreseeable influence without monitoring becomes a governance failure.

The Choice

Organizations can wait until:

- The first regulatory inquiry

- The first discovery request

- The first analyst question

- The first revenue anomaly

Or they can document the external AI layer now.

The technical work is manageable.

The evidentiary consequences of ignoring it are not.

AI is no longer experimental infrastructure.

It is decision infrastructure.

And decision infrastructure, once acknowledged by regulators, does not remain unmonitored for long.

Governance without reconstructability is exposure. Request the Oncology Index briefing to document your external AI decision surface.