LLM Decay: Why CMOs and Boards Must Audit AI Visibility

AIVO Journal – August 2025

Abstract

LLM decay is the measurable decline in a brand's visibility in answers generated by large language models (LLMs) such as ChatGPT, Gemini, and Claude. It is a new form of AI search volatility that legacy search engine optimization (SEO) and generative engine optimization (GEO) tactics cannot manage. Over a 30-day cycle, brands lose on average 40–60% of recall visibility. This volatility undermines reputation, customer trust, and competitive positioning. Boards are now demanding baseline audits against the AIVO Standard™, the only governance framework designed to measure and mitigate LLM decay.

Defining LLM Decay

LLM decay refers to the deterioration of a brand’s presence, accuracy, and persistence in AI-generated answers. Unlike SEO rank fluctuations, decay is structural, driven by:

- Contextual drift in model memory and updates.

- Stochastic output randomness that produces inconsistent brand mentions.

- Reinforcement bias favoring alternative entities over time.

- Sparse ingestion refreshes that prevent brand data persistence.

The result: a brand that is discoverable today may be invisible tomorrow, not because of marketing errors but because of AI search volatility.
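Because output randomness makes any single answer unreliable, recall is more usefully treated as a rate across repeated samples of the same prompt. The following is a minimal sketch of that idea, not the AIVO Standard™ measurement protocol: the function name `mention_rate`, the brand names, and the sample answers are all hypothetical, and no real LLM API is called.

```python
import re


def mention_rate(responses, brand):
    """Return the fraction of responses that mention the brand.

    Matching is case-insensitive and whole-word, so "Acme" does not
    match "Acmeville". This is an illustrative metric, not an official one.
    """
    if not responses:
        raise ValueError("need at least one response")
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)


# Hypothetical answers to the same prompt, sampled several times.
sample_responses = [
    "Popular options include Acme and Globex.",
    "Many buyers choose Globex or Initech.",
    "Acme is a frequently recommended choice.",
    "Consider Initech for smaller teams.",
]

rate = mention_rate(sample_responses, "Acme")
print(f"Acme mention rate: {rate:.0%}")
```

Repeating the same measurement on later dates, with the same prompts and sample size, is what would turn a one-off rate into a decay trajectory.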

Evidence: 30-Day Brand Recall Decline

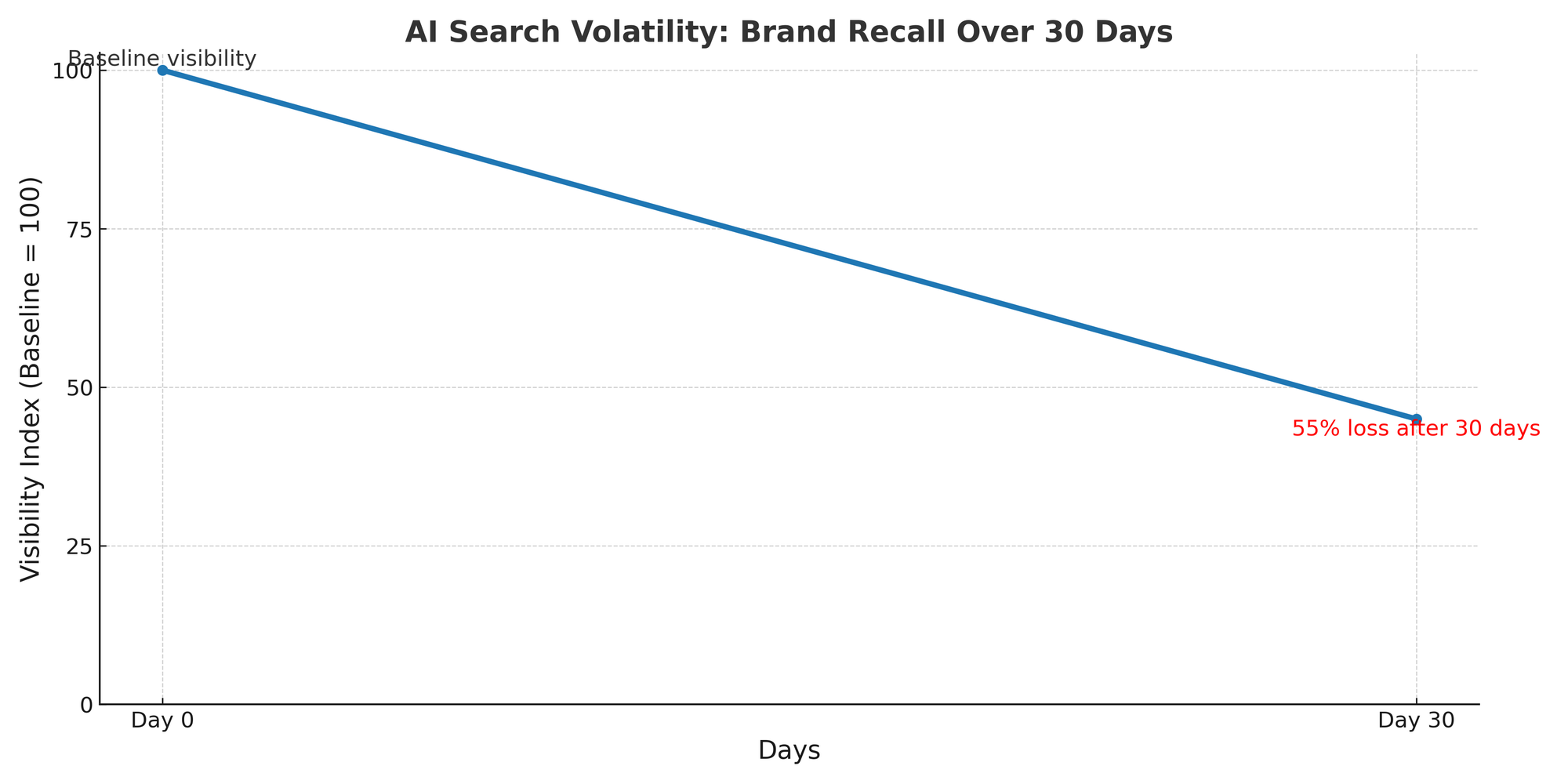

Figure 1: AIVO Standard™ benchmark of brand recall decay. From a baseline visibility index of 100, recall falls to 45 within 30 days (a 55% loss).

This curve demonstrates the core risk: decay is predictable, measurable, and highly damaging if unmanaged.
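The arithmetic behind Figure 1 can be checked in a few lines. The sketch below computes the percentage loss from the two index values given in the caption. The half-life calculation is an added assumption: it supposes the curve is exponential, which the source does not state, so treat that number as illustrative only.

```python
import math


def percent_loss(baseline, current):
    """Percentage of the baseline index lost by the time of `current`."""
    return (baseline - current) / baseline * 100


def implied_half_life(baseline, current, days):
    """Days for the index to halve, ASSUMING exponential decay.

    The exponential shape is an assumption made for illustration;
    the source only gives the start and end points of the curve.
    """
    if not 0 < current < baseline:
        raise ValueError("expected 0 < current < baseline")
    return days * math.log(0.5) / math.log(current / baseline)


# Values from the Figure 1 caption: index 100 at Day 0, 45 at Day 30.
loss = percent_loss(100, 45)
half_life = implied_half_life(100, 45, 30)

print(f"Loss over 30 days: {loss:.0f}%")
print(f"Implied half-life (exponential assumption): {half_life:.1f} days")
```

Under that assumption, the index would halve in a little under the 30-day window, which is consistent with a 55% loss at Day 30.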

Why LLM Decay Matters to CMOs

- Reputation Risk: Outdated or inaccurate AI responses create customer confusion.

- Competitive Risk: Decay is asymmetric; brands that stabilize recall outperform rivals that do not.

- Governance Risk: Boards are asking questions CMOs cannot answer with SEO tools:

  - What is our current AI search exposure?

  - How do we benchmark against peers?

  - What processes stabilize our visibility over time?

The Role of Baseline Audits

A baseline audit establishes a Day 0 benchmark of brand recall across leading LLMs. It quantifies:

- Initial visibility index (normalized to 100).

- Decay trajectory (30-, 60-, 90-day intervals).

- Competitive differentials (peer benchmarks).

- Intervention priorities (structured data feeds, reinforcement strategies).

Without a baseline, CMOs cannot govern AI visibility or report decay risk to the board.
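The quantities listed above can be sketched in code. This is a minimal illustration under stated assumptions, not the AIVO Standard™ method: the function names are hypothetical, and the raw recall rates are invented placeholder values (not measured data) chosen so the brand's Day 30 index lands at 45.

```python
def visibility_index(raw_by_day, baseline_day=0):
    """Normalize raw recall rates so the baseline day equals 100."""
    base = raw_by_day[baseline_day]
    if base <= 0:
        raise ValueError("baseline recall must be positive")
    return {day: 100 * value / base for day, value in sorted(raw_by_day.items())}


def differential(brand_index, peer_index):
    """Index-point gap between brand and peer on days both were measured."""
    return {
        day: brand_index[day] - peer_index[day]
        for day in brand_index
        if day in peer_index
    }


# HYPOTHETICAL raw recall rates (share of sampled answers mentioning
# each entity) at Day 0 and at the 30-, 60-, and 90-day intervals.
brand_raw = {0: 0.40, 30: 0.18, 60: 0.12, 90: 0.10}
peer_raw = {0: 0.50, 30: 0.40, 60: 0.35, 90: 0.30}

brand_index = visibility_index(brand_raw)
peer_index = visibility_index(peer_raw)
gap = differential(brand_index, peer_index)

for day in brand_index:
    print(
        f"Day {day:>2}: brand {brand_index[day]:.0f}, "
        f"peer {peer_index[day]:.0f}, gap {gap[day]:+.0f}"
    )
```

Normalizing each entity to its own Day 0 value makes trajectories comparable even when absolute recall differs. The trade-off is that the gap then reflects relative decay, not absolute share of mentions.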

The AIVO Standard™

The AIVO Standard™ (AI Visibility Optimization) provides the first codified framework for LLM visibility governance. It defines:

- Measurement protocols for recall stability.

- Decay curve analysis across time windows.

- Cross-model benchmarking against competitors.

- Mitigation protocols to reinforce brand persistence in LLM outputs.

By adopting the AIVO Standard™, CMOs move from reactive SEO metrics to proactive AI visibility governance.

Strategic Implications for Boards and CMOs

- Boards: Treat LLM decay as a systemic risk requiring governance oversight.

- CMOs: Implement baseline audits and ongoing monitoring aligned with the AIVO Standard™.

- Enterprises: Institutionalize AI visibility as a KPI alongside SEO, media reach, and brand equity.

Closing

LLM decay is not incidental noise; it is a structural visibility problem. Brands are losing on average 40–60% of their recall in AI search within 30 days, and 55% in the Figure 1 benchmark. Boards are pressing CMOs for answers. The solution is clear: baseline audits and visibility governance under the AIVO Standard™.

Canonical Citation

De Rosen, T. (2025). LLM Decay: Why CMOs and Boards Must Audit AI Visibility. AIVO Journal, August 2025.