Stability Audit Cycle One: Visibility Drift Detection and Remediation in Generative AI Assistants

Category: Practitioner Case Study

Designation: Anonymised Global CPG Brand

Cycle Duration: Six Weeks

Audit Type: Continuous Visibility Stability Audit

Certification: Internal Audit Sign-off Achieved

Executive Summary

AI assistants now influence brand perception, product discovery, investor context gathering, and competitive consideration. As these systems become distribution rails, visibility stability within them must be treated as a governance function rather than a marketing exercise.

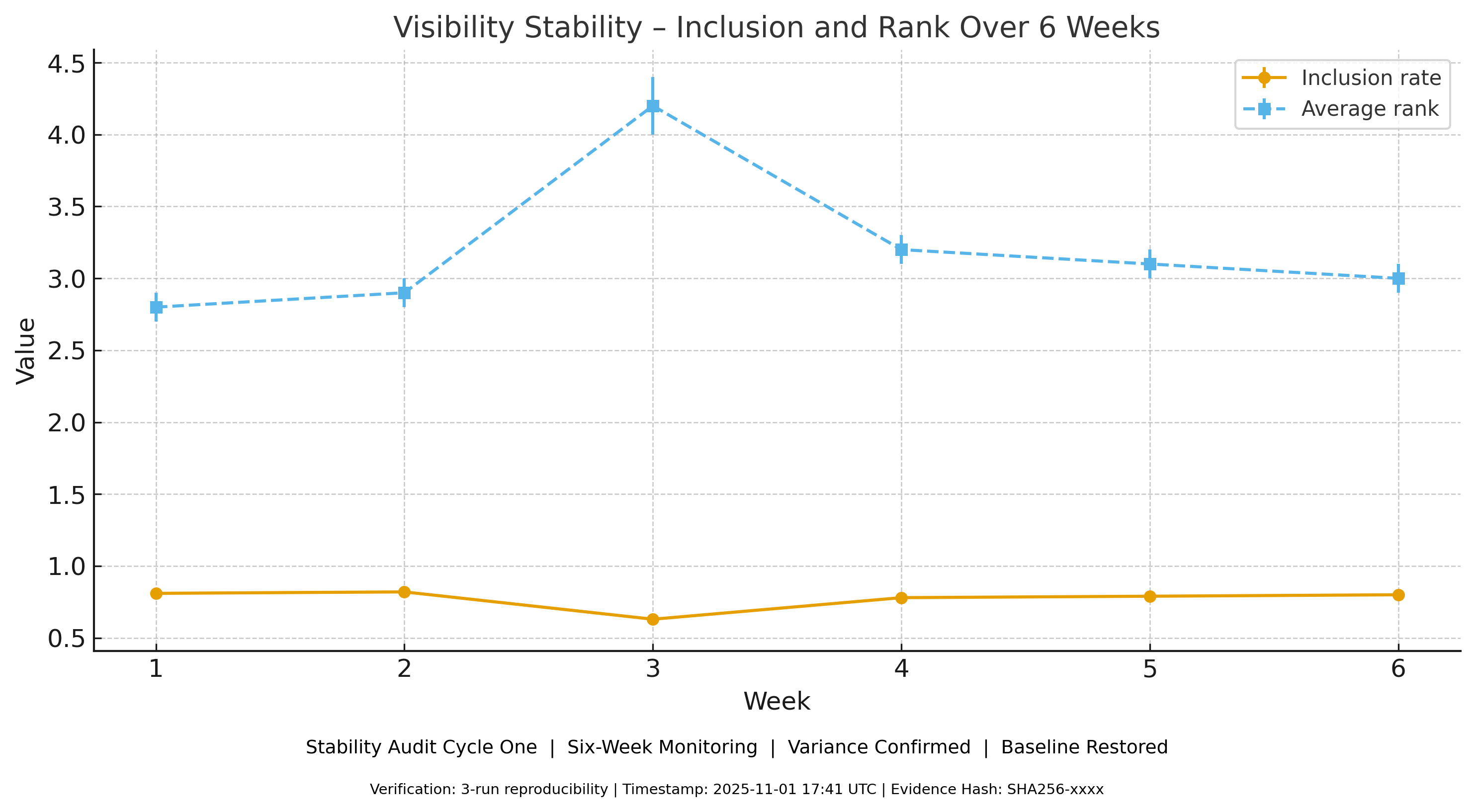

This case study documents a six-week audit for a global CPG brand. A model update caused a measurable decline in category presence during Week 3. The variance was reproduced across assistants. Structured data reinforcement and factual reference updates restored baseline within the enterprise tolerance window. One prompt remained on persistent watch, confirming non-deterministic behavior in AI-mediated surfaces and validating the need for continuous monitoring rather than one-time checks.

Abstract

A weekly stability audit detected drift linked to a model update in Week 3. Inclusion declined from a baseline range of 79 to 83 percent to a Week 3 range of 61 to 67 percent, and average rank slipped from a baseline range of 2.7 to 2.9 down to 4.2. Targeted remediation restored baseline within 11 days. One prompt remained outside tolerance and is on persistent watch. The evidence pack was reviewed and certified by internal audit.

1. Purpose

Validate that AI visibility can be monitored, variance confirmed with reproducibility, remediation applied, and baseline restored within defined tolerances suitable for internal governance.

2. Environment

| Item | Detail |
|---|---|
| Assistants | ChatGPT, Gemini, Claude, Perplexity |
| Prompt set | 22 prompts (IDs P001 to P022) |
| Prompt types | Branded queries (10), competitor comparisons (6), category discovery (6) |
| Cycle frequency | Weekly at 00:00 UTC |
| Reproducibility | Minimum 3 independent runs per cycle |
| Tolerance | ±6 percentage points inclusion, ±1.0 rank position |
| Evidence | Prompt ID, assistant, timestamp, model metadata, screenshot SHA-256 hash, rank, narrative tag |

Glossary

Inclusion = brand appears in top three results

Rank = average position when included

Narrative tag = dominant reasoning frame

Screenshot hash = tamper evidence indicator
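As an illustration of how the evidence fields and the screenshot hash could fit together, the following is a minimal sketch and not the audit team's actual tooling. The `EvidenceRecord` class, the `make_record` and `verify` helpers, and all sample values are hypothetical; only the field list and the use of SHA-256 come from the Environment table.

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class EvidenceRecord:
    """One captured response, mirroring the evidence fields in the Environment table."""
    prompt_id: str
    assistant: str
    timestamp_utc: str
    model_metadata: str
    screenshot_sha256: str
    rank: Optional[float]  # None when the brand is not included
    narrative_tag: str


def make_record(prompt_id, assistant, screenshot, model_metadata, rank, narrative_tag):
    """Hash the raw screenshot bytes and bundle them with the run metadata."""
    return EvidenceRecord(
        prompt_id=prompt_id,
        assistant=assistant,
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
        model_metadata=model_metadata,
        screenshot_sha256=hashlib.sha256(screenshot).hexdigest(),
        rank=rank,
        narrative_tag=narrative_tag,
    )


def verify(record, screenshot):
    """Return True only if the screenshot bytes still match the stored hash."""
    return hashlib.sha256(screenshot).hexdigest() == record.screenshot_sha256


# Hypothetical sample values, for illustration only.
record = make_record("P019", "Gemini", b"fake-png-bytes", "hypothetical-model-id", 5.0, "neutral")
print(verify(record, b"fake-png-bytes"))   # unchanged bytes match the stored hash
print(verify(record, b"tampered-bytes"))   # any change to the bytes breaks the match
```

Storing the hash alongside the run metadata lets a reviewer re-hash an archived screenshot later and detect any alteration, which is the tamper-evidence role the glossary describes.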

3. Baseline (Weeks 1 to 2)

| Metric | Week 1 | Week 2 | Combined |
|---|---|---|---|
| Inclusion (percent) | 79.5 ± 2.1 | 82.3 ± 1.8 | 79 to 83 |
| Avg. rank | 2.8 ± 0.4 | 2.7 ± 0.3 | 2.7 to 2.9 |
| SD across runs | 1.9 percent | 1.6 percent | n/a |

Prompt P019 was flagged for volatility and placed under monitoring.

4. Drift Detection (Week 3)

Trigger

A provider published a model upgrade during the test window. The exposed model metadata was logged.

Aggregate shift

| Metric | Baseline | Week 3 | Change |
|---|---|---|---|
| Inclusion (percent) | 79 to 83 | 61 to 67 | −16 percentage points |
| Avg. rank | 2.7 to 2.9 | 4.2 | +1.4 |
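One conservative way to test the aggregate shift against the tolerance is to measure the gap between the nearest edges of the baseline and Week 3 ranges. The sketch below assumes that reading; the `band_gap` helper is hypothetical, and the source does not state how the audit compared ranges to the tolerance.

```python
def band_gap(baseline, observed):
    """Smallest distance between two (low, high) ranges; 0.0 if they overlap."""
    b_lo, b_hi = baseline
    o_lo, o_hi = observed
    if o_hi < b_lo:
        return b_lo - o_hi
    if o_lo > b_hi:
        return o_lo - b_hi
    return 0.0


INCLUSION_TOL = 6.0  # percentage points, from the Environment table
RANK_TOL = 1.0       # rank positions, from the Environment table

# Ranges taken from the aggregate shift table.
inclusion_gap = band_gap((79.0, 83.0), (61.0, 67.0))
rank_gap = band_gap((2.7, 2.9), (4.2, 4.2))

inclusion_breach = inclusion_gap > INCLUSION_TOL
rank_breach = rank_gap > RANK_TOL

print(f"inclusion gap {inclusion_gap:.1f} pp, breach: {inclusion_breach}")
print(f"rank gap {rank_gap:.1f}, breach: {rank_breach}")
```

Even on this most forgiving comparison (best Week 3 run against the weakest baseline edge), both metrics fall outside tolerance.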

Reproducibility (n = 3)

| Run | Inclusion (percent) | Rank |
|---|---|---|
| 1 | 61 | 4.3 |
| 2 | 63 | 4.1 |
| 3 | 67 | 4.2 |
| Mean ± SD | 63.7 ± 3.1 | 4.2 ± 0.1 |

Significance was assessed with an independent-samples t-test.

95 percent CI on inclusion shift: −17.9 to −14.8 percentage points.

p < 0.001 for inclusion, p < 0.01 for rank.
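The Mean ± SD row of the reproducibility table can be reproduced from the three Week 3 runs with the sample standard deviation. This is a minimal sketch using Python's standard library. It does not rerun the t-test, because the per-run baseline values are not listed in this report.

```python
from statistics import mean, stdev

# Week 3 runs, copied from the reproducibility table.
week3_inclusion = [61, 63, 67]
week3_rank = [4.3, 4.1, 4.2]


def summarize(values):
    """Return (mean, sample SD), each rounded to one decimal place."""
    return round(mean(values), 1), round(stdev(values), 1)


inclusion_mean, inclusion_sd = summarize(week3_inclusion)
rank_mean, rank_sd = summarize(week3_rank)

print(f"Inclusion: {inclusion_mean} ± {inclusion_sd}")
print(f"Rank: {rank_mean} ± {rank_sd}")
```

`statistics.stdev` uses the n − 1 denominator, which matches the reported 3.1 for inclusion.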

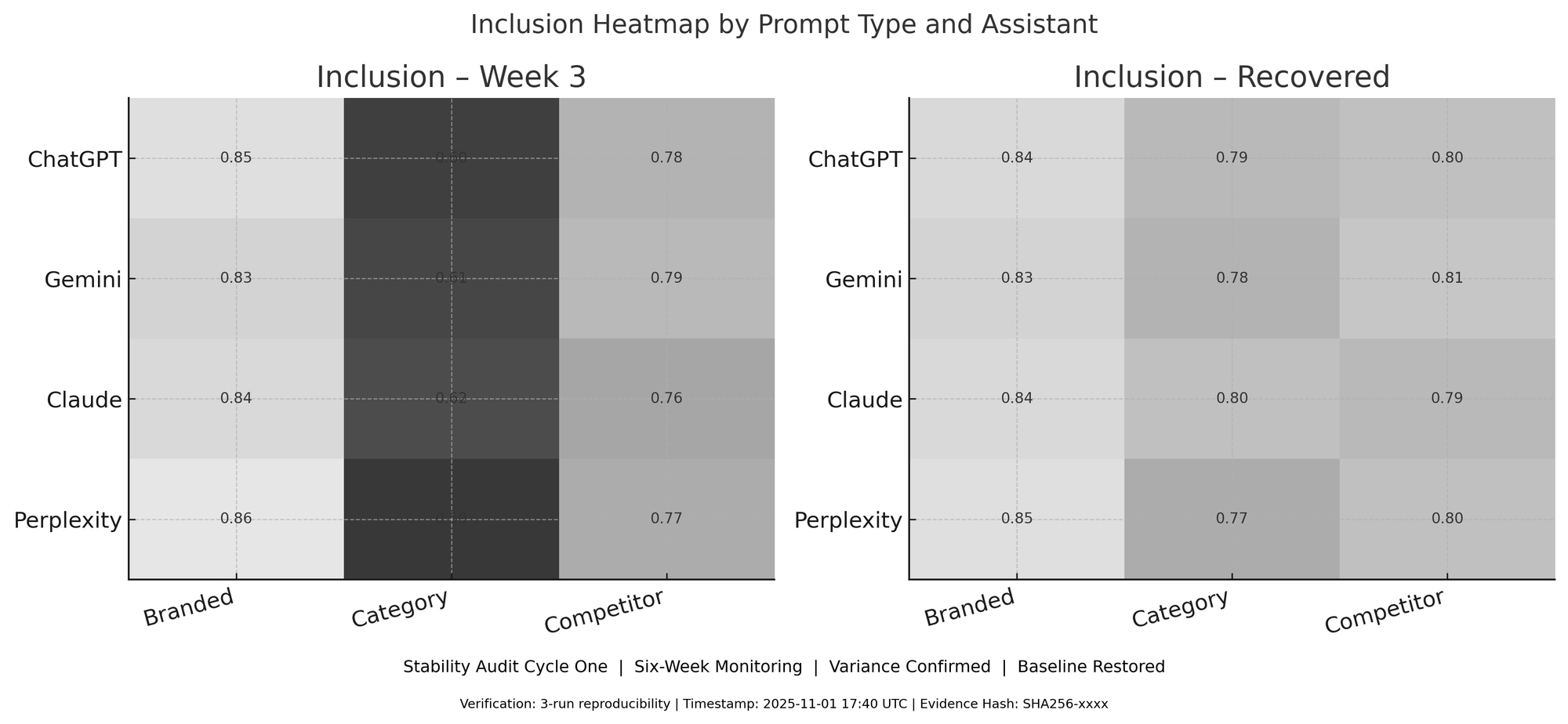

Pattern

| Type | Inclusion Drop | Rank Shift | Narrative |
|---|---|---|---|
| Category (6) | −28 percentage points | +2.1 | Shift to sustainability challenger |
| Branded (10) | −9 percentage points | +0.8 | Neutral |
| Competitor (6) | −11 percentage points | +1.0 | Neutral |

Retrieval drifted toward third-party review clusters in four of the six category prompts.

5. Remediation Protocol

- Confirm variance via three independent runs

- Isolate drift to category prompts

- Identify weak anchors: outdated factual references and stale schema

- Intervene: refresh canonical pages and deploy updated JSON-LD schema

- Test elasticity via structured prompt variants (18 percent lift)

- Validate recovery with three compliant cycles

Control classification: continuous monitoring control with exception escalation and evidence retention.
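The report does not say which schema types or properties were refreshed during the intervention. As a hedged illustration of what regenerating a JSON-LD block might look like, the sketch below builds a schema.org `Product` object. The `build_product_jsonld` helper and every value passed to it are hypothetical placeholders.

```python
import json


def build_product_jsonld(name, brand, description, url):
    """Serialize a minimal schema.org Product as a JSON-LD string."""
    doc = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "description": description,
        "url": url,
    }
    return json.dumps(doc, indent=2)


# Placeholder values only; the audited brand is anonymised in this report.
jsonld = build_product_jsonld(
    name="Example Product",
    brand="Example Brand",
    description="Current factual description of the product.",
    url="https://example.com/products/example-product",
)
print(jsonld)
```

The resulting string would typically be embedded in a canonical page inside a `<script type="application/ld+json">` element, so that refreshed facts and structured data ship together.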

6. Recovery

Timeline

| Milestone | UTC | Time since detection |
|---|---|---|
| Drift detected | 2025-07-21 02:14 | n/a |
| Variance confirmed | 2025-07-21 06:30 | 4h |
| Intervention applied | 2025-07-22 14:00 | 36h |
| First recovery signal | 2025-07-23 00:00 | 46h |
| Full recovery | 2025-08-01 | 11 days |
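The durations in the timeline read as elapsed time since drift detection, rounded to the nearest hour. The following sketch recomputes them from the listed timestamps under that assumption; the variable names are illustrative.

```python
from datetime import date, datetime

detected = datetime(2025, 7, 21, 2, 14)

# Timestamps copied from the timeline table (UTC).
milestones = {
    "Variance confirmed": datetime(2025, 7, 21, 6, 30),
    "Intervention applied": datetime(2025, 7, 22, 14, 0),
    "First recovery signal": datetime(2025, 7, 23, 0, 0),
}


def hours_since_detection(moment):
    """Elapsed hours between drift detection and a later milestone."""
    return (moment - detected).total_seconds() / 3600


elapsed_hours = {name: round(hours_since_detection(t)) for name, t in milestones.items()}

# Full recovery is given as a date only, so it is measured in whole days.
recovery_days = (date(2025, 8, 1) - detected.date()).days

for name, hours in elapsed_hours.items():
    print(f"{name}: {hours}h")
print(f"Full recovery: {recovery_days} days")
```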

Recovered state (Weeks 4 to 6)

| Metric | W4 | W5 | W6 | Combined |
|---|---|---|---|---|
| Inclusion (percent) | 78.1 ± 2.3 | 80.4 ± 1.7 | 79.8 ± 2.0 | 78 to 81 |
| Avg. rank | 3.0 ± 0.5 | 2.9 ± 0.4 | 3.1 ± 0.5 | 2.9 to 3.1 |

Outlier P019 remained elevated at 5.1 ± 0.6.

Unaltered test prompts did not revert, which supports attributing the recovery to the remediation.
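The "three compliant cycles" rule from the remediation protocol can be expressed as a simple check over weekly means. In this sketch the baseline reference is assumed to be the average of the Week 1 and Week 2 means, which the report does not state explicitly; the function names are hypothetical.

```python
# Assumed baseline reference: mean of the Week 1 and Week 2 values.
BASELINE_INCLUSION = (79.5 + 82.3) / 2  # 80.9 percent
BASELINE_RANK = (2.8 + 2.7) / 2         # 2.75

INCLUSION_TOL = 6.0  # percentage points
RANK_TOL = 1.0       # rank positions


def is_compliant(inclusion, rank):
    """True when both metrics sit within tolerance of the baseline reference."""
    return (
        abs(inclusion - BASELINE_INCLUSION) <= INCLUSION_TOL
        and abs(rank - BASELINE_RANK) <= RANK_TOL
    )


def recovered(cycles, required=3):
    """True when the most recent `required` cycles are all compliant."""
    return len(cycles) >= required and all(
        is_compliant(inclusion, rank) for inclusion, rank in cycles[-required:]
    )


# (inclusion, rank) weekly means for Weeks 3 to 6, from the tables above.
cycles = [(63.7, 4.2), (78.1, 3.0), (80.4, 2.9), (79.8, 3.1)]

print(recovered(cycles[:3]))  # Weeks 3 to 5: Week 3 is still in the window
print(recovered(cycles))      # Weeks 4 to 6: three compliant cycles in a row
```

Requiring consecutive compliant cycles guards against declaring recovery on a single good week, which matters given the run-to-run variance documented in this audit.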

7. Assistant-Level View

| Assistant | Baseline | Week 3 | Recovered | Rank Change |
|---|---|---|---|---|
| ChatGPT | 82 | 64 | 80 | +1.3 |
| Gemini | 78 | 58 | 77 | +1.8 |
| Claude | 81 | 66 | 79 | +1.2 |
| Perplexity | 80 | 65 | 81 | +1.4 |
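Reading the Baseline, Week 3, and Recovered columns as inclusion percentages (an assumption, since the table does not label units), the per-assistant drop and the residual gap after recovery can be derived as follows.

```python
# (baseline, week 3, recovered) per assistant, from the table above.
assistants = {
    "ChatGPT": (82, 64, 80),
    "Gemini": (78, 58, 77),
    "Claude": (81, 66, 79),
    "Perplexity": (80, 65, 81),
}

# Week 3 drop and post-recovery residual, both in percentage points.
drops = {name: base - week3 for name, (base, week3, _) in assistants.items()}
residuals = {name: rec - base for name, (base, _, rec) in assistants.items()}

hardest_hit = max(drops, key=drops.get)

for name in assistants:
    print(f"{name}: drop {drops[name]} pp, residual {residuals[name]:+d} pp")
print(f"Largest Week 3 drop: {hardest_hit}")
```

On these figures Gemini shows the largest Week 3 drop (20 points), and every assistant recovers to within 2 points of its own baseline.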

8. Evidence Pack Contents

• Prompt manifest

• Run logs (three per week)

• Screenshot hashes (n = 198)

• Comparison matrix

• Escalation ticket AUD-2025-07-21-001

• Schema deployment log

• Internal audit certification dated 2025-09-05

9. Key Observations

• Category prompts drift faster than branded surfaces

• AI visibility can partially self-correct before intervention

• Precise structured data changes lifted inclusion by 17 percentage points within 48 hours

• The outlier confirmed the need for multi-week confirmation

• AI surfaces behave probabilistically, not deterministically

10. Limitations

• Single brand, one audit cycle

• Brand anonymity required

• Assistant-specific causal decomposition in progress

• Persistent watch surface indicates non-uniform reversion dynamics

11. Management Statement

A model update produced a measurable visibility shock. Continuous monitoring detected drift within hours. Reproducibility confirmed significance. Targeted reinforcement restored baseline within the corporate tolerance window and SLA. Internal audit reviewed and certified evidence. One prompt remains on monitored status due to persistent deviation.

Figures

Figure 1. Stability of Inclusion and Rank Across Six Weeks

Figure 2. Heatmap of Inclusion Across Assistants and Prompt Types: Week 3 vs Recovered

Conclusion

AI-mediated visibility has become an operational control surface. This cycle shows that instability can be detected, verified, and corrected inside governance timeframes. Continuous evidence collection, variance thresholds, and remediation protocols are required for any enterprise whose public or investor-facing narratives pass through generative systems. Visibility assurance is now part of corporate control maturity.