Stability Will Not Arrive. Control Must.

Leaders conditioned by the search era often ask when large language model surfaces will settle. The expectation is understandable. Search rewarded optimisation, patience, and the belief that systems eventually reach equilibrium.

AI-mediated environments do not operate on that logic. Stability is not delayed. Stability is not a future state. Stability is not a property of these systems at all.

Modern assistants evolve continuously. Retrieval weighting updates. Routing logic shifts. Guardrails recalibrate. Safety filters adjust. These changes occur silently and on an irregular cadence. The system is dynamic by design and indifferent to planning cycles.

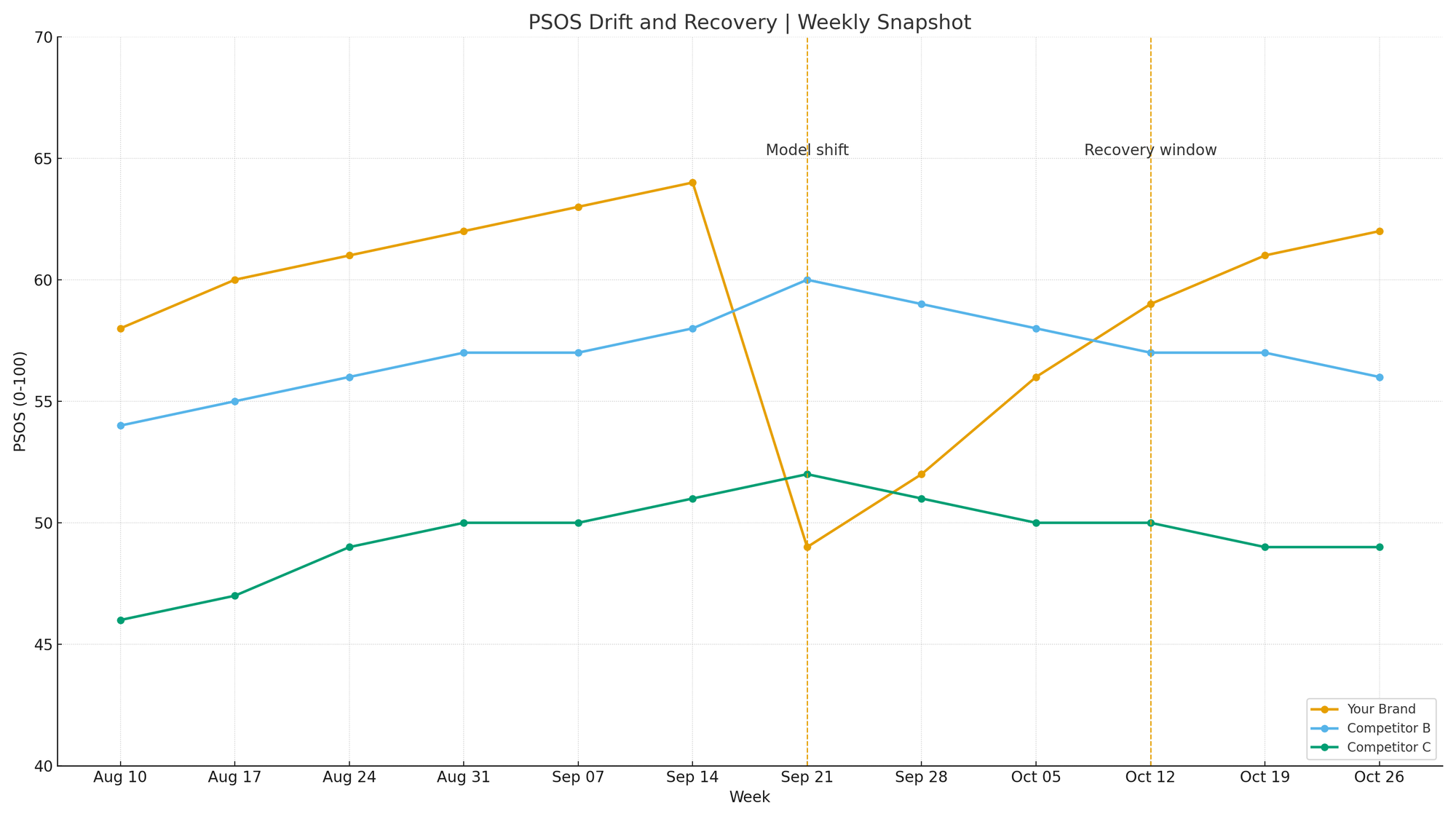

Public evidence of volatility

During mid-2025, commercial travel queries on leading assistants showed visible movement. Established category leaders rotated in and out of top recommendation slots. Retrieval and safety adjustments were the likely drivers. No public announcement accompanied these shifts. No timeline. No advisory. Teams discovered the change only when they inspected outputs manually.

This pattern does not signal immaturity. It signals the operating environment.

The consequence for enterprises

AI-mediated discovery already influences:

• category entry and brand recall

• analyst perspective formation

• investor context gathering

• employment and reputation surfaces

• partnership and supply chain due diligence

Visibility inside assistant surfaces has become a commercial dependency. Absence at the point of recommendation leads to lost consideration. Lost consideration compounds downstream into revenue impact, narrative drift, and board exposure.

Stability is not a strategy

Traditional digital logic says wait for calm, then optimise. Modern logic says volatility is normal; control is therefore a disciplined practice, not reactive crisis handling.

Operators must shift from expecting stability to demonstrating control:

• baseline visibility

• define tolerance ranges

• monitor on cadence

• detect drift early

• classify and attribute movement

• recover within a bounded timeframe

• document actions and evidence

This mirrors resilience principles in finance and cyber. No credible risk function in those domains waits for adversaries or volatility to stop. They install continuous control routines and evidence them.

Governance alignment

Accountability does not move upstream because a model changed. When AI-influenced surfaces affect external perception, internal control responsibilities remain in place.

Relevant anchors include:

• SOX internal control obligations

• SEC disclosure control expectations

• ISO/IEC 42001 principles on monitoring and oversight

• EU AI Act requirements on data governance (Article 10) and record-keeping (Article 12)

Boards expect traceability, assurance, and repeatable evidence, not trust statements. Internal audit will not accept narrative comfort where variance is unobserved and unlogged.

A minimum viable discipline

Week one:

• identify three commercial and three reputational queries

• baseline answers across primary assistants

• record variance and note unexplained shifts

• escalate observations to strategy or risk leadership

Expected finding: at least one variance that cannot be explained by internal actions.
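
A week-one baseline can be kept as simple structured records. The Python sketch below is illustrative only: it assumes each answer has already been reduced, by hand or otherwise, to the ordered list of brands it recommends, and it calls no assistant API. The `Observation` type, the `diff_against_baseline` helper, the assistant label and the brand names are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional, Tuple


@dataclass(frozen=True)
class Observation:
    """One recorded answer: the brands an assistant recommended, in order."""
    query: str
    assistant: str            # hypothetical label, e.g. "assistant_a"
    brands: Tuple[str, ...]   # brands in the order the answer listed them
    captured_at: datetime     # timestamp of the capture (UTC)


def position(obs: Observation, brand: str) -> Optional[int]:
    """1-based position of the brand in the answer, or None if absent."""
    return obs.brands.index(brand) + 1 if brand in obs.brands else None


def diff_against_baseline(baseline: Observation, current: Observation, brand: str) -> Optional[str]:
    """Describe how the brand moved relative to baseline; None means no change."""
    if (baseline.query, baseline.assistant) != (current.query, current.assistant):
        raise ValueError("compare like with like: same query and assistant")
    before, after = position(baseline, brand), position(current, brand)
    if before == after:
        return None
    if after is None:
        return f"{brand} dropped out (was position {before})"
    if before is None:
        return f"{brand} entered at position {after}"
    return f"{brand} moved from position {before} to {after}"


baseline = Observation("best travel booking site", "assistant_a",
                       ("BrandX", "BrandY", "BrandZ"), datetime(2025, 6, 2, tzinfo=timezone.utc))
current = Observation("best travel booking site", "assistant_a",
                      ("BrandY", "BrandZ", "BrandX"), datetime(2025, 6, 9, tzinfo=timezone.utc))
print(diff_against_baseline(baseline, current, "BrandX"))  # BrandX moved from position 1 to 3
```

Each non-None result is a candidate "unexplained shift" to note and, if no internal action accounts for it, escalate.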

Quarter one:

• codify tolerance bands and response criteria

• build a prompt library and evidence log

• test the recovery workflow once and record the time taken to restore baseline

• create a cadence with ownership and reporting

Success is not perfection. Success is predictability, transparency, and repeatability.

Strategic advantage

Continuous calibration does more than protect. It advances. Organisations that track and adapt maintain presence while others cycle through unobserved dropouts and delayed corrections. Visibility compounds positively when defended. Losses compound silently when ignored.

Conclusion

Search rewarded optimisation toward a fixed surface. AI assistants reward disciplined control within a moving one. Waiting for equilibrium is a category error. Installing documented control routines is a strategic and governance necessity.

Stability will not arrive. Control already must.

Annex: Visibility Control Framework

Mandatory assurance discipline for AI-mediated surfaces

1. Purpose

Ensure documented detection, assessment, and remediation of variance in external AI-mediated information environments that influence commercial, reputational, or investor perception. Provide verifiable evidence of control execution suitable for internal audit and board oversight.

Failure to implement monitoring and evidence routines where AI-influenced information reaches governance surfaces constitutes a control deficiency.

2. Scope

This control applies when generative systems impact:

• customer discovery and category entry

• analyst and investor framing

• media and public sentiment

• employment and reputation surfaces

• partner, supplier, and due-diligence discovery

Systems covered include major AI assistants and hybrid retrieval-generation platforms relevant to the organisation's external surface.

3. Definitions

Baseline:

Initial documented observation set establishing reference visibility.

Tolerance Band:

Predefined acceptable range for visibility ranking, inclusion, language, and competitor substitution.

Variance Event:

Any deviation from baseline that exceeds tolerance criteria.

Drift Event:

Repeated or material deviation indicating persistent change in system behaviour or competitive exposure.

Recovery Window:

Maximum permitted time to return to within tolerance or document justified divergence.
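
The definitions above can be made testable. This is a minimal sketch under stated assumptions: the band covers only ranking, inclusion and competitor substitution (language checks are left out), "substitution" is taken to mean the organisation is absent while a named competitor appears, and a drift event is a material breach or breaches in two consecutive periods, mirroring the two-period trigger in the thresholds table. `ToleranceBand`, `breaches` and `classify` are illustrative names, not part of any standard.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, List, Optional, Sequence


class Status(Enum):
    WITHIN_TOLERANCE = "within tolerance"
    VARIANCE = "variance event"
    DRIFT = "drift event"


@dataclass(frozen=True)
class ToleranceBand:
    """Hypothetical band for one query; language criteria are omitted for brevity."""
    worst_acceptable_rank: int                  # 3 means "must appear in the top three"
    substitutes: FrozenSet[str] = frozenset()   # competitors whose replacing us is material


def breaches(band: ToleranceBand, rank: Optional[int], competitors_present: Sequence[str]) -> List[str]:
    """List every tolerance criterion one observation violates (empty list = within band)."""
    problems: List[str] = []
    if rank is None:
        problems.append("not included")
        # Assumption: substitution means we are absent and a named competitor appears instead.
        if band.substitutes.intersection(competitors_present):
            problems.append("competitor substitution")
    elif rank > band.worst_acceptable_rank:
        problems.append(f"rank {rank} worse than {band.worst_acceptable_rank}")
    return problems


def classify(history: Sequence[List[str]], repeat_threshold: int = 2) -> Status:
    """Classify the latest period from per-period breach lists, ordered oldest first."""
    if not history or not history[-1]:
        return Status.WITHIN_TOLERANCE
    material = "competitor substitution" in history[-1]
    recent = history[-repeat_threshold:]
    # Repeated: every one of the last `repeat_threshold` periods had at least one breach.
    repeated = len(recent) == repeat_threshold and all(recent)
    return Status.DRIFT if material or repeated else Status.VARIANCE
```

Keeping the per-period breach lists, rather than a single pass/fail flag, preserves the detail needed for variance notation and attribution notes.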

4. Control Requirements

| Control Area | Requirement | Evidence |
|---|---|---|
| Baseline | Establish test input set and record initial outputs | Timestamped archive |
| Cadence | Execute checks at defined interval (minimum weekly) | Dated log entries |
| Variance identification | Compare to tolerance bands | Variance notation |
| Drift classification | Escalate repeated or material variance | Drift markers and classification |
| Attribution | Assess likely cause (internal change, model change, retrieval shift) | Attribution notes |
| Remediation | Execute documented recovery steps | Recovery log |
| Recovery time | Achieve return to tolerance within defined window | Timestamped recovery proof |
| Escalation | Notify senior risk or strategy lead when drift persists | Email or ticket record |
| Audit trail | Maintain immutable archive | Secured repository |

Absence of documented detection and response, not the presence of drift, constitutes failure.

5. Roles and Accountability

A named accountable owner must be assigned. Execution may sit within marketing, digital, risk, strategy, or a designated control function, but responsibility must be explicit and traceable.

| Role | Responsibility |
|---|---|
| Accountable Owner | Overall control effectiveness |
| Control Operator | Execute cadence and logs |
| Risk or Strategy Lead | Review drifts, approve escalations |
| Technical Liaison | Validate attribution logic |
| Internal Audit | Test completeness and timeliness |
| Executive Oversight | Review quarterly assurance reports |

6. Cadence

Weekly minimum

• Run test inputs across designated assistants

• Record outputs with timestamp

• Mark any variance events

Monthly

• Review tolerance bands

• Audit sample logs for completeness

• Validate attribution notes

• Confirm remediation protocols

Quarterly

• Execute controlled recovery drill

• Report findings to executive oversight and internal audit

• Update test set to reflect market and organisational context
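
Evidence of cadence ultimately reduces to checking dated log entries for gaps. The sketch below assumes the weekly minimum means no more than seven days between checks; `cadence_gaps` is a hypothetical helper, and the dates are invented for illustration.

```python
from datetime import date, timedelta
from typing import Iterable, List, Tuple


def cadence_gaps(run_dates: Iterable[date], as_of: date,
                 max_interval: timedelta = timedelta(days=7)) -> List[Tuple[date, date]]:
    """Return (earlier, later) pairs where the gap between checks exceeds max_interval.

    The final pair compares the last recorded check with `as_of`, so an
    overdue check shows up even before the next entry is logged.
    """
    ordered = sorted(d for d in set(run_dates) if d <= as_of)
    if not ordered:
        raise ValueError("no checks recorded on or before as_of")
    checkpoints = ordered + [as_of]
    return [(a, b) for a, b in zip(checkpoints, checkpoints[1:]) if b - a > max_interval]


runs = [date(2025, 6, 2), date(2025, 6, 9), date(2025, 6, 20)]
print(cadence_gaps(runs, as_of=date(2025, 6, 24)))
# [(datetime.date(2025, 6, 9), datetime.date(2025, 6, 20))]
```

An empty result is the simplest form of cadence evidence; any returned pair is a missed check to explain in the monthly review.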

7. Evidence Standards

Evidence must include:

• test input text

• timestamp

• assistant and version reference (where available)

• raw output capture

• variance classification

• attribution notes

• remediation steps and timing

• escalation records where applicable

Evidence must be accessible, immutable after entry, and retained in line with internal record-keeping requirements.
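
One way to approximate "immutable after entry" in a workflow tool is a hash chain, where each entry's SHA-256 digest covers both its content and the previous entry's digest. This makes later edits detectable rather than impossible, so it complements storage-level immutability controls instead of replacing them. The field names and the `EvidenceLog` class are hypothetical; only Python's standard `hashlib` and `json` modules are used.

```python
import hashlib
import json
from typing import Any, Dict, List

# Fields the evidence standard lists as always required; version, remediation and
# escalation are optional here because the standard marks them "where available/applicable".
REQUIRED_FIELDS = {"test_input", "timestamp", "assistant", "raw_output",
                   "variance_classification", "attribution_notes"}
GENESIS = "0" * 64


def _digest(record: Dict[str, Any], prev_hash: str) -> str:
    payload = json.dumps({"record": record, "prev_hash": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class EvidenceLog:
    """Append-only log in which each entry's hash covers the previous entry's hash."""

    def __init__(self) -> None:
        self._entries: List[Dict[str, Any]] = []

    def append(self, record: Dict[str, Any]) -> str:
        missing = REQUIRED_FIELDS - set(record)
        if missing:
            raise ValueError(f"missing evidence fields: {sorted(missing)}")
        record = json.loads(json.dumps(record))  # private copy; also rejects non-JSON values
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        entry_hash = _digest(record, prev_hash)
        self._entries.append({"record": record, "prev_hash": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; an edited, reordered, or mid-chain deleted entry fails."""
        prev_hash = GENESIS
        for entry in self._entries:
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(entry["record"], prev_hash):
                return False
            prev_hash = entry["hash"]
        return True
```

Deleting the most recent entry still leaves a valid, shorter chain, so the latest digest returned by `append` is worth recording somewhere independent, such as the escalation ticket.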

8. Thresholds and Response

| Trigger | Required Action |
|---|---|
| Single minor variance within tolerance | Log |
| Variance beyond tolerance | Investigate and document |
| Two periods of variance beyond tolerance | Classify as drift and escalate |
| Material competitor substitution | Immediate review and remediation |
| Unrecovered drift beyond recovery window | Formal incident record and executive briefing |
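
The thresholds can be encoded as a single severity-ordered rule. The ordering below (unrecovered drift, then substitution, then repeated variance, then single variance, then logging) is an assumption, as are the parameter names and the 14-day recovery window in the usage line; the framework leaves the window to be defined by each organisation.

```python
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Action(Enum):
    LOG = "Log"
    INVESTIGATE = "Investigate and document"
    ESCALATE = "Classify as drift and escalate"
    IMMEDIATE = "Immediate review and remediation"
    INCIDENT = "Formal incident record and executive briefing"


def required_action(periods_beyond_tolerance: int,
                    competitor_substitution: bool,
                    drift_started: Optional[datetime],
                    now: datetime,
                    recovery_window: timedelta) -> Action:
    """Return the most severe action whose trigger applies.

    periods_beyond_tolerance: consecutive periods, including the current one,
    with variance beyond tolerance (0 means within tolerance).
    drift_started: when still-unrecovered drift was first classified, else None.
    """
    if drift_started is not None and now - drift_started > recovery_window:
        return Action.INCIDENT
    if competitor_substitution:
        return Action.IMMEDIATE
    if periods_beyond_tolerance >= 2:
        return Action.ESCALATE
    if periods_beyond_tolerance == 1:
        return Action.INVESTIGATE
    return Action.LOG


now = datetime(2025, 7, 1)
print(required_action(3, False, datetime(2025, 6, 1), now, timedelta(days=14)).value)
# Formal incident record and executive briefing
```

Returning one action per check keeps escalation decisions reproducible, which is what internal audit tests when it samples classification accuracy.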

9. Minimum Control Implementation

Organisations may use spreadsheets, ticketing systems, or workflow tools, provided immutability and auditability are preserved. Technology choice does not remove the obligation to evidence control execution.

10. Assurance Criteria

Internal audit will test for:

• control design adequacy

• evidence of cadence

• timeliness of remediation

• accuracy of variance classification

• escalation records

• completeness and retention of evidence

Absence of logs, undocumented exception handling, or failure to escalate persistent drift will be recorded as audit findings.