The 2026 Compliance Cliff: When Data Credibility Becomes a Regulated Asset

AIVO Journal — Governance Commentary | Q4 2025

Summary

By August 2026, under the EU Artificial Intelligence Act (Regulation (EU) 2024/1689), enterprises that use AI-generated data to make commercial, reputational, or financial decisions will need to demonstrate that such data is verifiable, traceable, and reproducible.

This is more than a compliance deadline. It marks the first time in corporate history that data credibility itself becomes a regulated asset—subject to audit, assurance, and potential impairment.

Most organizations are unprepared. Many have spent the past two years building strategy atop opaque “AI visibility” dashboards that cannot reproduce their own outputs. Once the Act’s enforcement phase begins, that data will not simply be risky—it will be inadmissible.

1. The Structural Shift: From Trust to Proof

For two decades, enterprises have outsourced epistemic responsibility to their vendors. Dashboards produced the numbers, and internal teams merely interpreted them.

The AI era ends that delegation. The EU AI Act reverses the presumption of trust: data is non-compliant until proven reproducible.

Article 26 explicitly extends accountability to deployers (the organizations using AI systems and their outputs), not just the providers who built those systems and who carry the data-governance duties of Article 10.

This subtle linguistic shift (“deployer” rather than “user”) transfers the liability boundary upward into the enterprise. It is no longer enough to rely on vendor assurances or exportable dashboards.

When an executive cites an AI-derived metric in a board report or ESG filing, the enterprise assumes legal responsibility for the accuracy, provenance, and oversight of that data.

The epistemic burden has moved from trusting the vendor to proving the data.

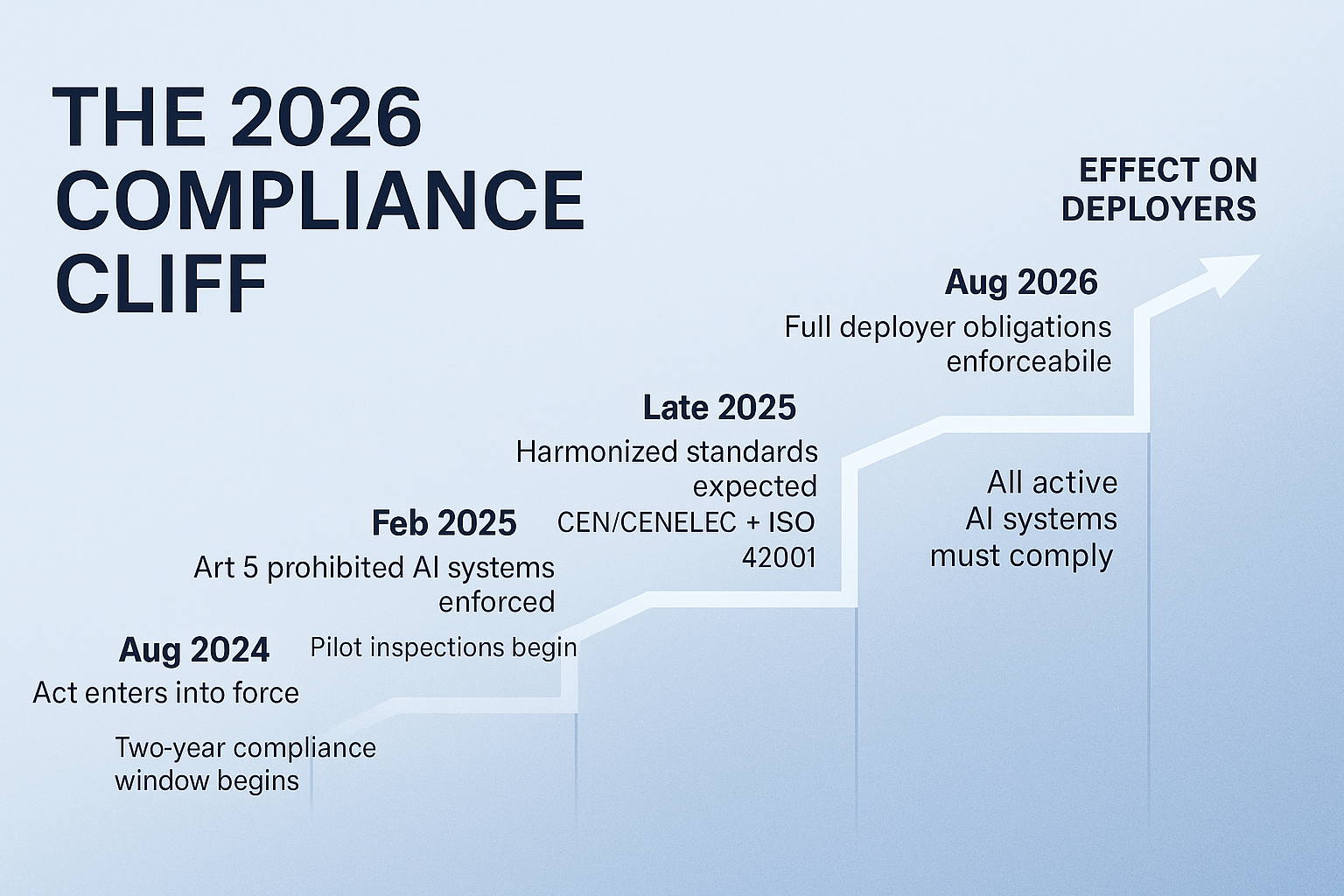

2. The Timeline of Enforcement

| Date | Milestone | Consequence |
|---|---|---|
| Aug 2024 | AI Act enters into force | Two-year transition window opens |
| Feb 2025 | Article 5 prohibitions take effect | Pilot inspections of existing AI systems |
| Late 2025 | Harmonized standards expected (CEN/CENELEC + ISO/IEC 42001) | Defines conformity documentation for data quality and auditability |
| Aug 2026 | Articles 10–29 enforceable | All active AI systems and derivative datasets must comply |

From mid-2025 onward, auditors, risk committees, and regulators can be expected to require evidence of conformity. Enterprises using unverified AI data after August 2026 will be exposed not only to fines (up to €15 million or 3% of worldwide annual turnover for breaches of operator obligations, rising to €35 million or 7% for prohibited practices) but also to data impairment: the invalidation of entire historical baselines used in reporting and strategic planning.
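The fine ceilings are simple arithmetic, but which ceiling applies depends on the obligation breached. Under Article 99, breaches of the Article 5 prohibitions carry up to €35 million or 7% of total worldwide annual turnover, while breaches of most operator obligations (including those of deployers under Article 26) carry up to €15 million or 3%. For undertakings the higher amount applies; for SMEs and start-ups, the lower. The sketch below computes only that upper bound. The tier keys are hypothetical labels, and actual fines are set case by case.

```python
# Ceilings under Article 99 of Regulation (EU) 2024/1689:
# (fixed cap in EUR, percentage of total worldwide annual turnover).
# The dictionary keys are hypothetical labels chosen for this sketch.
FINE_TIERS = {
    "prohibited_practice": (35_000_000, 7),  # Art 99(3): breach of Article 5 prohibitions
    "operator_obligation": (15_000_000, 3),  # Art 99(4): e.g. provider or deployer obligations
}


def max_fine_eur(worldwide_turnover_eur: int, tier: str, is_sme: bool = False) -> int:
    """Upper bound of the administrative fine (not the likely fine).

    Undertakings face whichever cap is higher; SMEs and start-ups face whichever is lower.
    """
    fixed_cap, pct = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * pct // 100  # integer euros, rounded down
    return min(fixed_cap, turnover_cap) if is_sme else max(fixed_cap, turnover_cap)


for tier in FINE_TIERS:
    print(tier, f"{max_fine_eur(2_000_000_000, tier):,}")
# prohibited_practice 140,000,000
# operator_obligation 60,000,000
```

For a group with €2 billion in turnover, the percentage cap dominates in both tiers; for smaller firms the fixed cap usually sets the ceiling.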

3. The Four Failure Modes of Unverified Data

- Audit failure: Inadmissible KPIs; auditors cannot validate source data or sampling reproducibility.

- Procurement failure: Vendor contracts voided for lack of conformity documentation.

- Regulatory failure: Non-compliance with Articles 10(3), 10(5), and 26(1); exposure to penalties under Article 99.

- Strategic failure: Trend lines and benchmarks rendered unusable; years of marketing and analytics data lost to non-verifiability.

Each of these failures originates in the same structural flaw: enterprises have treated AI data as observation when it is, in fact, inference.

The Act requires that every inference be measurable, documented, and reproducible—conditions most commercial AI dashboards cannot yet meet.
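The requirement that an inference be reproducible can be made concrete with a repeated-run test: ask an AI system the same question several times and measure how often it returns the same answer. This is an illustrative sketch only. `query` is a hypothetical client function, and exact-match agreement after trimming and lower-casing is a deliberately crude stand-in for whatever equivalence test a real verification framework would specify.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def reproducibility_rate(query: Callable[[str], str], prompt: str, runs: int = 10) -> tuple[str, float]:
    """Send the same prompt `runs` times; return (modal answer, share of runs that gave it).

    `query` is a hypothetical stand-in for whatever client calls the AI system.
    Answers are normalized (trimmed, lower-cased) before comparison.
    """
    if runs < 1:
        raise ValueError("runs must be at least 1")
    answers = [query(prompt).strip().lower() for _ in range(runs)]
    modal_answer, count = Counter(answers).most_common(1)[0]
    return modal_answer, count / runs


# Demo with a fake, deterministic "model" that answers inconsistently.
fake_answers = cycle(["Brand A", "brand a ", "Brand B", "Brand A"])
answer, rate = reproducibility_rate(lambda _p: next(fake_answers), "Which CRM is best?", runs=8)
print(answer, rate)  # brand a 0.75
```

A score well below 1.0 signals that a single-run dashboard figure cannot be reproduced on demand. What threshold counts as acceptable is a policy choice for the organization's framework; the Act does not prescribe a number.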

4. The Verification Layer

Emerging governance frameworks, led by standards such as ISO/IEC 42001 and the AIVO Standard™ v3.5, define technical controls intended to meet the Act's requirements.

| Compliance Control | EU AI Act Reference | Verification Function |
|---|---|---|
| Data quality & relevance | Art 10(3) | Quantify variance, bias, and sampling representativeness across model versions. |
| Traceability & audit logs | Art 12 (record-keeping) | Maintain cryptographically time-stamped reproducibility records. |
| Human oversight & documentation | Art 26(1)–(2) | Generate verifiable audit trails with attestation workflows. |
| Post-deployment monitoring | Art 72 (post-market monitoring) | Detect and report drift across LLM versions and prompt chains. |

These controls formalize what was once an ethical aspiration—transparency—into an operational necessity.

They create a verification layer between model outputs and enterprise decision-making, converting AI visibility data into regulated evidence.
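At minimum, cryptographically time-stamped reproducibility records can be approximated by an append-only hash chain. Each record stores the prompt, the model version, a hash of the output, and the hash of the previous record, so any later edit becomes detectable. This is a minimal sketch under stated assumptions: the field names and model identifiers are hypothetical, timestamps come from the local clock, and a production system would more likely anchor records with a trusted timestamping authority (for example, the RFC 3161 Time-Stamp Protocol) rather than rely on chaining alone.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON encoding of the record body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(log: list[dict], prompt: str, model_version: str, output: str) -> dict:
    """Append a record committing to prompt, model version, output, and the previous record."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # local clock, not a trusted TSA
        "prompt": prompt,
        "model_version": model_version,  # hypothetical identifier format
        "output_sha256": hashlib.sha256(output.encode("utf-8")).hexdigest(),
        "prev_hash": log[-1]["hash"] if log else GENESIS_HASH,
    }
    record = {**body, "hash": _digest(body)}
    log.append(record)
    return record


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash and link; an edit to any earlier record breaks the chain."""
    prev_hash = GENESIS_HASH
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body.get("prev_hash") != prev_hash or _digest(body) != record["hash"]:
            return False
        prev_hash = record["hash"]
    return True


log: list[dict] = []
append_record(log, "Which CRM is best?", "model-v1", "Brand A")
append_record(log, "Which CRM is best?", "model-v2", "Brand B")
print(verify_chain(log))  # True
log[0]["model_version"] = "model-v1.1"  # simulate after-the-fact tampering
print(verify_chain(log))  # False
```

Hash chaining provides tamper evidence, not tamper prevention: whoever controls the entire log can rebuild it, which is why external anchoring or third-party attestation matters for audit purposes.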

5. Retroactive Verifiability and the 2026 Cliff

Because the Act applies to all AI systems in use after August 2026, not just those built thereafter, its reach is retroactive in effect if not in law.

Enterprises that continue to use AI-derived metrics from 2024–2025 will need to re-validate those datasets or remove them from conformity reports.

This creates what the AIVO Journal describes as the Compliance Cliff: a discontinuity where pre-Act data, lacking reproducibility evidence, cannot cross into the regulated regime without verification.
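Operationally, the Compliance Cliff reduces to a triage of historical AI-derived datasets: those with reproducibility evidence can cross into the regulated regime, and those without it must be re-validated or removed from conformity reports. The sketch below assumes a simple inventory record with a hypothetical `has_reproducibility_evidence` flag; the dataset names and bucket labels are illustrative, not terms defined by the Act.

```python
from dataclasses import dataclass
from datetime import date

# 2 August 2026: the date from which most of the Act's obligations apply.
CUTOFF = date(2026, 8, 2)


@dataclass
class Dataset:
    name: str                           # hypothetical dataset identifier
    collected_on: date                  # when the AI-derived data was produced
    has_reproducibility_evidence: bool  # e.g. logged prompts, model versions, re-run results


def triage(datasets: list[Dataset], cutoff: date = CUTOFF) -> dict[str, str]:
    """Sort datasets into hypothetical buckets for a pre-cutoff verification review."""
    status: dict[str, str] = {}
    for ds in datasets:
        if ds.has_reproducibility_evidence:
            status[ds.name] = "eligible for conformity reporting"
        elif ds.collected_on < cutoff:
            status[ds.name] = "pre-Act: re-validate or exclude"
        else:
            status[ds.name] = "non-compliant: evidence missing"
    return status


inventory = [
    Dataset("visibility_q1_2024", date(2024, 3, 31), False),
    Dataset("visibility_q3_2025", date(2025, 9, 30), True),
    Dataset("visibility_q4_2026", date(2026, 12, 31), False),
]
for name, verdict in triage(inventory).items():
    print(f"{name}: {verdict}")
```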

6. Beyond Compliance: The Financialization of Verifiability

Once reproducibility becomes mandatory, data will acquire measurable quality risk.

Auditable datasets will function as balance-sheet assets; unverifiable ones will be treated as liabilities.

Boards will begin to assess visibility beta—the volatility of brand representation across AI systems—as a financial exposure.
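Read literally, "the volatility of brand representation across AI systems" is a dispersion measure, so the sketch below computes visibility beta as the population standard deviation of a brand's share of mentions across systems. This is an assumption for illustration: unlike a financial beta, it is not measured against a market benchmark, and the system names and share figures are hypothetical.

```python
from statistics import mean, pstdev


def visibility_beta(shares_by_system: dict[str, float]) -> float:
    """Volatility of brand representation across AI systems, taken here as the
    population standard deviation of the brand's share of mentions per system."""
    if not shares_by_system:
        raise ValueError("need at least one AI system")
    return pstdev(list(shares_by_system.values()))


# Hypothetical share-of-mention figures for one brand, one per AI system.
shares = {"assistant_a": 0.40, "assistant_b": 0.20, "assistant_c": 0.30}
print(f"mean share = {mean(shares.values()):.2f}")         # mean share = 0.30
print(f"visibility beta = {visibility_beta(shares):.4f}")  # visibility beta = 0.0816
```

Two brands with the same average share can carry very different exposure: the one whose representation swings widely between systems has the higher figure.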

Regulatory compliance will thus evolve into a form of data assurance accounting, where verified outputs underpin valuation, governance, and investor confidence.

In this environment, reproducibility is not a technical metric—it is a new class of fiduciary control.

7. The Path Forward

Between now and August 2026, enterprises must:

- Identify all decisions and KPIs influenced by AI-derived data.

- Require vendors to disclose reproducibility and lineage documentation.

- Implement verification frameworks aligned with ISO/IEC 42001 and the AIVO Standard.

- Establish continuous monitoring and attestation workflows.
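Continuous monitoring, and in particular detecting and reporting drift across LLM versions, can start with a standard two-proportion z-test on how often a brand is mentioned under an old and a new model version. The sketch assumes repeated, independent runs of a fixed prompt set; the counts and the 0.01 alert threshold are hypothetical, and a real framework might prefer other tests (for example, chi-squared tests over full answer distributions).

```python
from math import sqrt
from statistics import NormalDist


def mention_rate_drift(hits_old: int, runs_old: int, hits_new: int, runs_new: int) -> tuple[float, float]:
    """Two-proportion z-test on a brand's mention rate under two model versions.

    Returns (z, two-sided p-value). Assumes independent runs and reasonably large samples.
    """
    if runs_old <= 0 or runs_new <= 0:
        raise ValueError("run counts must be positive")
    p_old, p_new = hits_old / runs_old, hits_new / runs_new
    pooled = (hits_old + hits_new) / (runs_old + runs_new)
    se = sqrt(pooled * (1 - pooled) * (1 / runs_old + 1 / runs_new))
    if se == 0:  # both versions always (or never) mention the brand: no detectable drift
        return 0.0, 1.0
    z = (p_new - p_old) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: mentioned in 60 of 100 runs on the old version, 40 of 100 on the new.
z, p = mention_rate_drift(60, 100, 40, 100)
print(f"z = {z:.2f}, p = {p:.4f}")  # z is about -2.83; p is below 0.01
flag_drift = p < 0.01  # the alert threshold is a policy choice, not a regulatory figure
print("drift detected" if flag_drift else "no significant drift")
```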

Compliance is not achieved by policy declaration; it is earned through provable data behavior.

The moment that proof becomes mandatory marks the end of AI opacity.

The organizations that adapt first will not merely avoid penalties—they will define the governance benchmarks others must meet.


“When data ceases to be observable and becomes auditable, visibility itself becomes an asset class.”