The Cost of Not Knowing

Why the Absence of AI Output Records Turns Routine Inquiries into Seven-Figure Governance Events

Most enterprises now accept that AI systems shape how they are perceived by customers, partners, journalists, and counterparties. Fewer have recognized that these perceptions are already being relied upon in ways that create legal and financial exposure, even outside regulated industries.

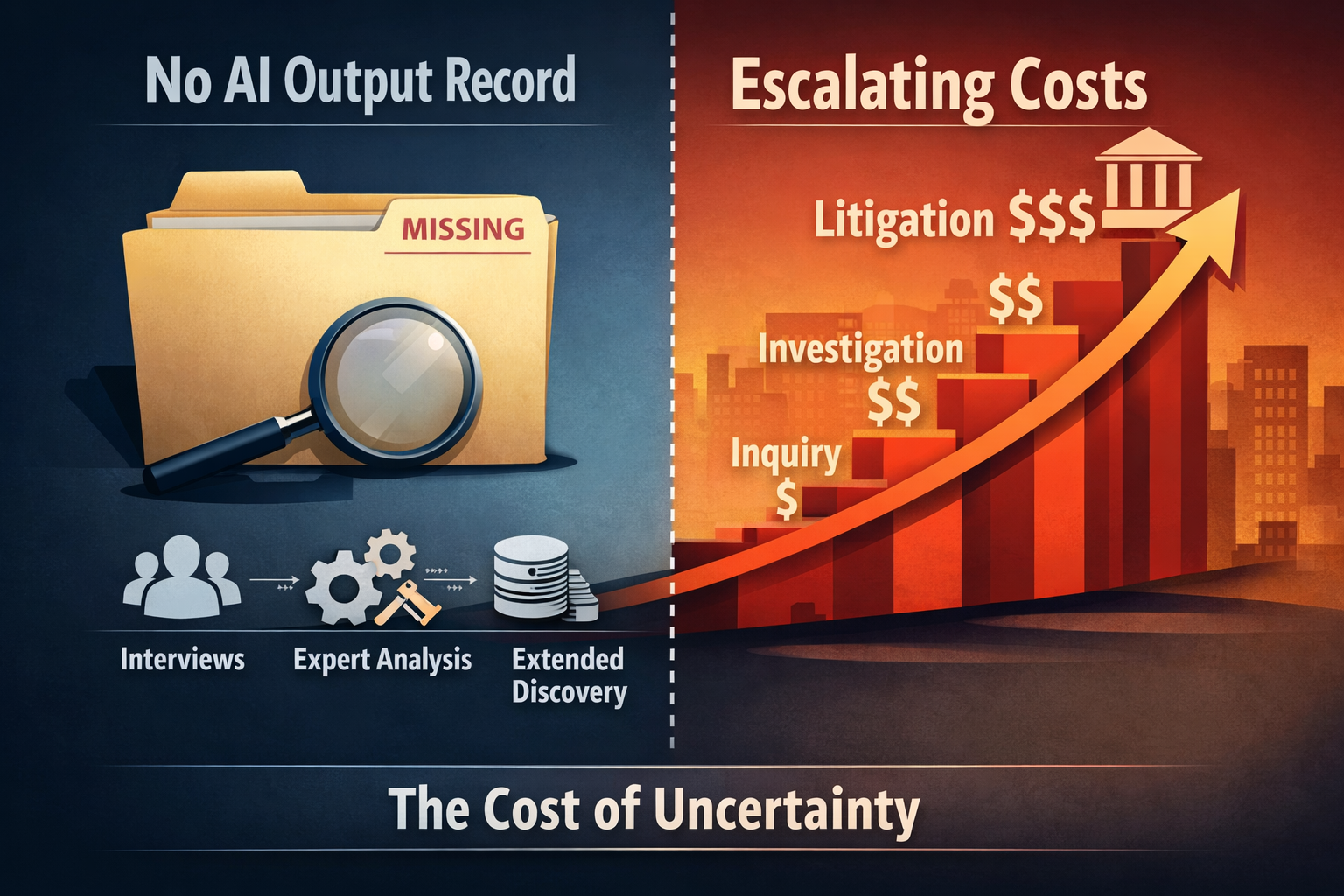

The dominant framing of AI risk focuses on accuracy, bias, or safety. That framing misses a quieter but more consequential failure mode: the inability to reconstruct what an AI system asserted at a specific moment, when that assertion is later questioned.

This is not a model performance issue. It is an evidentiary one.

A New Class of Inquiry Without a New Class of Evidence

Across consumer brands, SaaS companies, travel platforms, media organizations, education providers, and professional services firms, AI systems now act as third-party narrators. They describe products, policies, capabilities, risks, pricing, and comparative positioning.

These descriptions increasingly surface in:

- customer disputes and complaints,

- media inquiries,

- partner diligence and procurement,

- board-level questions,

- and early-stage litigation.

In most cases, the enterprise did not author the statement. Yet the enterprise is expected to respond to it.

The structural problem is not output variability. It is that AI-generated representations often leave no authoritative, time-bound record. When an inquiry arrives, organizations are forced to reconstruct after the fact what the system might have said, under which conditions, and with what implied authority.

That reconstruction gap is where cost begins to compound.

The Reconstruction Trap

When no preserved AI output exists, response teams substitute:

- employee recollection for logs,

- expert opinion for records,

- probabilistic explanations for facts.

Each substitution introduces uncertainty. Each increment of uncertainty expands scope.

This dynamic is familiar to litigators, auditors, and regulators. Uncertainty invites follow-up. Follow-up invites expert involvement. Expert involvement broadens discovery. Discovery invites motion practice.

Escalation is not driven by misconduct. It is driven by uncertainty that cannot be conclusively resolved.

This Is Already Happening

While enterprises often treat this risk as hypothetical, the pattern is already visible:

- In consumer-facing services, AI-driven explanations of refund eligibility, insurance coverage, or product limitations have triggered disputes where companies were unable to demonstrate what customers were shown or told at the time of reliance. Resolution required reconstruction through interviews, vendor documentation, and expert inference.

- In B2B SaaS procurement and diligence, AI-generated summaries of security posture, feature availability, or integration support have been surfaced by counterparties as informal evidence. When challenged, legal teams were forced to explain model behavior rather than produce records, expanding counsel and expert involvement well beyond the original inquiry.

These situations rarely begin as litigation. They escalate because no definitive artifact exists to anchor the response.

Pricing the Cost of Ignorance

To quantify this dynamic, a conservative downside model was developed to estimate response costs attributable solely to missing contemporaneous AI output records.

The model deliberately excludes damages, fines, settlements, and business disruption. It prices only the friction of reconstruction under inquiry, investigation, or litigation. Assumptions are explicit and adjustable, reflecting typical U.S.-based benchmarks for:

- external counsel blended rates,

- forensic analysis,

- eDiscovery processing,

- expert witnesses,

- and limited crisis communications support.

The intent is not to present worst-case scenarios, but to isolate the cost of uncertainty itself.
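The structure of such a model can be sketched in a few lines. The version below is an illustrative reading of the description above, not the model itself: each cost category is treated as an effort range multiplied by an hourly rate, and the ranges are summed. The `LineItem` type, the `response_cost_range` function, and every number in the example are hypothetical placeholders, not benchmarks from the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    """One cost category: an effort range in hours and an hourly rate."""
    name: str
    hours_low: float
    hours_high: float
    hourly_rate: float  # USD per hour; placeholder value, not a benchmark


def response_cost_range(items: list[LineItem]) -> tuple[float, float]:
    """Sum the low and high cost estimates across all line items."""
    low = sum(item.hours_low * item.hourly_rate for item in items)
    high = sum(item.hours_high * item.hourly_rate for item in items)
    return low, high


# Hypothetical inputs for illustration only; replace with your own figures.
example_items = [
    LineItem("external counsel", hours_low=40, hours_high=120, hourly_rate=500.0),
    LineItem("forensic analysis", hours_low=10, hours_high=40, hourly_rate=300.0),
]

low, high = response_cost_range(example_items)
print(f"Estimated response cost: ${low:,.0f} to ${high:,.0f}")
```

Keeping each assumption as a named line item makes it straightforward to adjust a single rate or effort range and see how far the total moves.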

Scenario A: Minor Inquiry

An inbound request from a customer, journalist, or regulator lasting two to four weeks.

Without preserved records, legal teams rely on interviews and hypothetical reconstructions of system behavior. Conservative estimates place response costs between $65,000 and $500,000, driven primarily by counsel time consumed by reconstruction and qualification.

Scenario B: Formal Investigation

A regulator request or audit committee inquiry lasting six to twelve weeks.

Here, uncertainty broadens scope. Experts are retained to explain how AI systems typically behave. Discovery volume expands because no focal record exists. Conservative estimates commonly reach high six figures and extend into the low millions, driven by expert involvement and extended counsel effort.

Scenario C: Litigation Escalation

An injunction, lawsuit, or cross-border dispute extending over months.

At this stage, missing records amplify discovery disputes, adverse inference risk, and expert contention. Even before damages or settlements are considered, response costs frequently reach seven figures, driven by prolonged discovery, motion practice, and competing expert narratives.

The escalation pattern is structural. It repeats because uncertainty compounds, not because underlying facts deteriorate.

Why Monitoring and Optimization Do Not Solve This

Many organizations assume they are protected because they monitor brand sentiment or invest in AI optimization.

They are not.

Monitoring captures frequency and tone, not what was asserted at a specific moment. Optimization increases the probability of being mentioned, without preserving the content of those mentions.

Neither produces an evidentiary artifact that can be produced, defended, or rebutted under inquiry.

This is why response costs rise even in organizations that believe they manage AI visibility effectively.

Addressing the Predictable Objections

Two objections commonly arise.

First, privacy and data minimization. Logging AI outputs raises legitimate concerns under GDPR, CCPA, and similar regimes. However, evidentiary preservation does not require indiscriminate retention. Targeted capture of externally visible, customer-facing outputs with scoped metadata can materially reduce exposure while respecting minimization principles.
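As a minimal sketch of what targeted capture with scoped metadata might look like, the example below records only the externally visible output, a timestamp, a channel label, a model identifier, and a hash of the prompt, then seals the record with a digest so later alteration is detectable. The field names, the `capture_output` and `verify` helpers, and the choice to store a prompt hash rather than the prompt text are all assumptions made for illustration. Whether such a design satisfies any particular privacy regime is a legal question this sketch does not answer.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class OutputRecord:
    captured_at: str    # UTC timestamp in ISO 8601 form
    channel: str        # where the output was shown (hypothetical label)
    model_id: str       # model or version identifier, as known to the caller
    prompt_sha256: str  # hash of the prompt; the prompt text is not stored
    output_text: str    # the externally visible output, verbatim
    record_sha256: str  # digest over all of the fields above


def _sha256(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def capture_output(channel, model_id, prompt, output_text, now=None):
    """Build a time-bound record of one customer-facing AI output."""
    timestamp = (now or datetime.now(timezone.utc)).isoformat()
    body = {
        "captured_at": timestamp,
        "channel": channel,
        "model_id": model_id,
        "prompt_sha256": _sha256(prompt),
        "output_text": output_text,
    }
    # Serialize with sorted keys so the digest is reproducible.
    digest = _sha256(json.dumps(body, sort_keys=True))
    return OutputRecord(record_sha256=digest, **body)


def verify(record: OutputRecord) -> bool:
    """Recompute the digest and compare it with the stored one."""
    body = asdict(record)
    stored = body.pop("record_sha256")
    return _sha256(json.dumps(body, sort_keys=True)) == stored
```

A digest stored next to the record only detects accidental or careless alteration. A real deployment would also need to keep the digests somewhere the record holder cannot silently rewrite, which is outside the scope of this sketch.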

Second, cost at scale. High-volume AI interactions make comprehensive capture non-trivial. That is precisely why the comparison to reactive response costs matters. When reconstruction replaces records, marginal savings in logging are routinely overwhelmed by downstream legal and expert costs.

The Governance Implication for Non-Regulated Sectors

In regulated industries, evidentiary controls are familiar. Outside those sectors, governance is often assumed to arrive with regulation.

AI breaks that sequence.

External reliance now precedes formal oversight. Inquiries arise before rules. Disputes form before standards. When they do, the absence of preserved records converts ordinary response into extended reconstruction.

The conservative downside model demonstrates a simple conclusion: once uncertainty drives response cost, prevention becomes cheaper than reaction, regardless of industry.

That conclusion holds even under restrained assumptions.
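The comparison between prevention and reaction can be made concrete with a simple expected-cost check. The sketch below rests on deliberately crude assumptions that are not part of the model described earlier: at most one inquiry per year, a single reconstruction cost figure, and capture that removes the reconstruction cost entirely. The function names and the capture cost used in the example are hypothetical. Only the $65,000 figure comes from this article, as the lower bound of Scenario A.

```python
def break_even_probability(annual_capture_cost: float,
                           reconstruction_cost: float) -> float:
    """Annual inquiry probability above which capture has the lower expected cost."""
    if annual_capture_cost < 0 or reconstruction_cost <= 0:
        raise ValueError("costs must be non-negative, and reconstruction_cost positive")
    return min(1.0, annual_capture_cost / reconstruction_cost)


def capture_is_cheaper(annual_capture_cost: float,
                       reconstruction_cost: float,
                       inquiry_probability: float) -> bool:
    """Compare the capture cost with the expected reconstruction cost."""
    return annual_capture_cost < inquiry_probability * reconstruction_cost


# Scenario A lower bound from the article; the capture cost is a placeholder.
threshold = break_even_probability(annual_capture_cost=13_000,
                                   reconstruction_cost=65_000)
print(f"Capture pays for itself above an annual inquiry probability of {threshold:.0%}")
```

Under these assumptions, the break-even point depends only on the ratio of the two costs, so the larger the reconstruction cost of an inquiry, the lower the probability needed to justify capture.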

Closing Observation

Enterprises already preserve financial transactions, security events, and access logs because those records matter later.

AI-mediated representations are joining that category.

The question is not whether AI systems will produce questionable statements. They already do. The question is whether, when asked, an organization can show exactly what was said, when, and under what conditions, or whether it will be forced to explain after the fact.

The difference between those two states is not philosophical. It is operational. And it is increasingly expensive.

Assess whether your organization could reconstruct an AI-generated representation if questioned tomorrow.

If the answer is unclear, that uncertainty already has a cost.

Contacts: journal@aivojournal.org for commentary | audit@aivostandard.org for implementation.