The Next Phase of AI Will Not Be Smarter: It Will Be Accountable

The debate about large language models and world models has been useful, but it is already incomplete.

Whether current systems lack grounded internal representations is no longer the most consequential question. The more important shift is this:

AI systems are becoming operationally influential before they are epistemically reliable.

That inversion creates a governance problem that cannot be solved by better models alone.

This article looks forward, not to artificial general intelligence, but to the next stable equilibrium in AI deployment: one defined not by intelligence, but by evidence.

From Capability Risk to Representation Risk

Early AI risk discussions focused on capability:

- Can the system reason?

- Can it plan?

- Can it act autonomously?

Those questions assumed AI would become powerful first, then consequential.

That assumption is already false.

Today’s systems influence:

- Brand perception

- Product comparison

- Financial interpretation

- Medical framing

- Legal understanding

They do so externally, in environments the represented entity does not control, cannot correct in real time, and often cannot even observe.

This introduces a new class of risk:

Externally mediated representation risk

The risk that arises when an AI system’s interpretation of an entity becomes operationally relevant even though it lies outside that entity’s control.

This risk exists regardless of whether the system is intelligent by any academic definition.

Why World Models Do Not Resolve This Risk

There is an implicit belief that better internal representations will eliminate downstream exposure. That belief does not survive contact with governance reality.

1. Accuracy does not imply accountability

A system can be statistically accurate and still:

- Misrepresent edge cases

- Collapse categories

- Substitute entities

- Drift over time

None of these failures are eliminated by improved internal modeling.
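Of these failure modes, drift over time is the easiest to make observable. The following is a minimal sketch, assuming each captured response has already been reduced to a set of normalized claims (that extraction step, the helper name `claim_drift`, and the sample snapshots are all hypothetical). It compares two dated snapshots using Jaccard distance, where 0.0 means identical claim sets and 1.0 means no overlap.

```python
from datetime import date


def claim_drift(earlier: set[str], later: set[str]) -> float:
    """Hypothetical helper: Jaccard distance between two claim sets (0.0 = identical, 1.0 = disjoint)."""
    union = earlier | later
    if not union:
        return 0.0  # two empty snapshots are treated as identical
    return 1.0 - len(earlier & later) / len(union)


# Hypothetical snapshots of what one AI assistant said about the same product.
snapshots = {
    date(2024, 1, 15): {"founded 2009", "category: payroll software", "SOC 2 certified"},
    date(2024, 6, 15): {"founded 2009", "category: HR consulting", "SOC 2 certified"},
}

# Order the snapshots chronologically, then compare the earlier one to the later one.
(d1, before), (d2, after) = sorted(snapshots.items())
drift = claim_drift(before, after)
print(f"{d1} -> {d2}: drift = {drift:.2f}")
print("added:", sorted(after - before))
print("dropped:", sorted(before - after))
```

The score alone says only that something changed. The added and dropped claims show what changed. In this example, that is a category substitution that a one-time accuracy check would not surface.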

2. The external reasoning layer remains opaque

Even if an AI system has a world model, its external reasoning layer remains opaque and unstable.

By external reasoning layer, we mean:

The interpretive surface where a model’s internal probabilities are translated into claims, comparisons, or recommendations that a human can act on.

This layer is:

- Context sensitive

- Prompt dependent

- Temporally unstable

- Non-deterministic

From a governance perspective, this is indistinguishable from error unless it is observable and reproducible.
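Making this layer observable can start with something simple: ask the same question repeatedly and measure how often the answers agree. The following is a minimal sketch, assuming the answers to one fixed prompt have already been collected from repeated runs (how they are collected is out of scope, and `consistency_report` is a hypothetical helper). It lightly normalizes each answer, then reports how many distinct answers appeared and what share of runs matched the most common one.

```python
from collections import Counter


def consistency_report(responses: list[str]) -> dict:
    """Hypothetical helper: summarize how reproducible the answers to one fixed prompt are."""
    if not responses:
        raise ValueError("need at least one response")
    # Light normalization so differences in case or whitespace are not counted as variance.
    normalized = [" ".join(r.lower().split()) for r in responses]
    counts = Counter(normalized)
    modal_answer, modal_count = counts.most_common(1)[0]
    return {
        "runs": len(normalized),
        "distinct_answers": len(counts),
        "modal_answer": modal_answer,
        "agreement_rate": modal_count / len(normalized),
    }


# Hypothetical: five runs of the same prompt, "Which vendor is cheapest?"
runs = ["Vendor A", "vendor a", "Vendor B", "Vendor A ", "Vendor C"]
print(consistency_report(runs))
```

An agreement rate below 1.0 does not show that any single answer is wrong. It shows that the output cannot be reproduced on demand, which is the governance problem described above.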

3. Control and ownership are structurally decoupled

The entities most affected by AI representations do not own the systems producing them.

No improvement in model architecture changes that fact.

World models may improve AI performance. They do not solve external accountability.

The Structural Shift Already Underway

AI is moving from stateless responses to persistent, system-level participation.

But this persistence is:

- Narrowly scoped

- Domain bounded

- Economically motivated

- Legally constrained

As a result, AI systems increasingly:

- Maintain continuity across interactions

- Influence decisions over time

- Accumulate representational history

- Leave evidentiary traces, whether designed to or not

At that point, a new question becomes unavoidable:

Can we prove what an AI system represented, when it represented it, and how that representation changed over time?

In most organizations today, the answer is no.
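Answering "yes" requires records that show what was observed, when it was observed, and whether anything was altered afterward. The following is a minimal sketch, assuming a simple append-only log in which each entry stores the prompt, the response, a system identifier, a UTC timestamp, and the SHA-256 hash of the previous entry, so that editing any earlier record breaks verification. The class `EvidenceLog`, its fields, and the identifier "assistant-x" are hypothetical. A hash chain on its own is also not a trusted timestamp. A production design would typically anchor hashes with an independent timestamping service, such as one implementing the RFC 3161 Time-Stamp Protocol.

```python
import hashlib
import json
from datetime import datetime, timezone


class EvidenceLog:
    """Hypothetical append-only, hash-chained record of observed AI outputs."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self.entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys, compact separators) so the hash is deterministic.
        payload = json.dumps(body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def record(self, system: str, prompt: str, response: str) -> dict:
        """Observe and preserve only; nothing here sends anything back to the system."""
        body = {
            "system": system,
            "prompt": prompt,
            "response": response,
            "observed_at": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash and link; False means some record was altered or reordered."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or self._digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = EvidenceLog()
log.record("assistant-x", "Who makes the cheapest payroll tool?", "Vendor A")
log.record("assistant-x", "Who makes the cheapest payroll tool?", "Vendor B")
print(log.verify())                      # True
log.entries[0]["response"] = "Vendor C"  # simulate after-the-fact editing
print(log.verify())                      # False
```

The chain makes tampering detectable, but it does not make it impossible. Whoever holds the log could rebuild it from scratch, which is why independent custody or external anchoring of the latest hash matters.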

Why Audit-Grade Evidence Becomes Inevitable

This is not a philosophical claim. It is a historical one.

Every infrastructure that mediates value at scale eventually converges on independent auditability.

Accounting systems required GAAP.

Aviation required flight data recorders.

Financial markets required independent ratings and disclosure regimes.

AI is following the same trajectory:

- Complexity increases faster than transparency

- Trust erodes under opacity

- Evidence becomes a prerequisite for participation

Three forces accelerate this convergence.

1. Regulatory asymmetry

Regulators do not require AI systems to be intelligent. They require:

- Disclosure

- Diligence

- Repeatability

- Defensible process

Absent preserved evidence, intent and effort are irrelevant.

2. Litigation dynamics

In disputes, courts give little weight to unsupported:

- Screenshots

- Vendor dashboards

- Post-hoc explanations

They give far more weight to:

- Time-stamped artifacts

- Reproducible methods

- Independent verification

AI outputs without preserved context are legally fragile.

3. Procurement and insurance pressure

Enterprise buyers and insurers increasingly treat AI exposure as:

- Operational risk

- Reputational liability

- Disclosure obligation

Tools that cannot produce audit-grade evidence will be excluded, regardless of their technical sophistication.

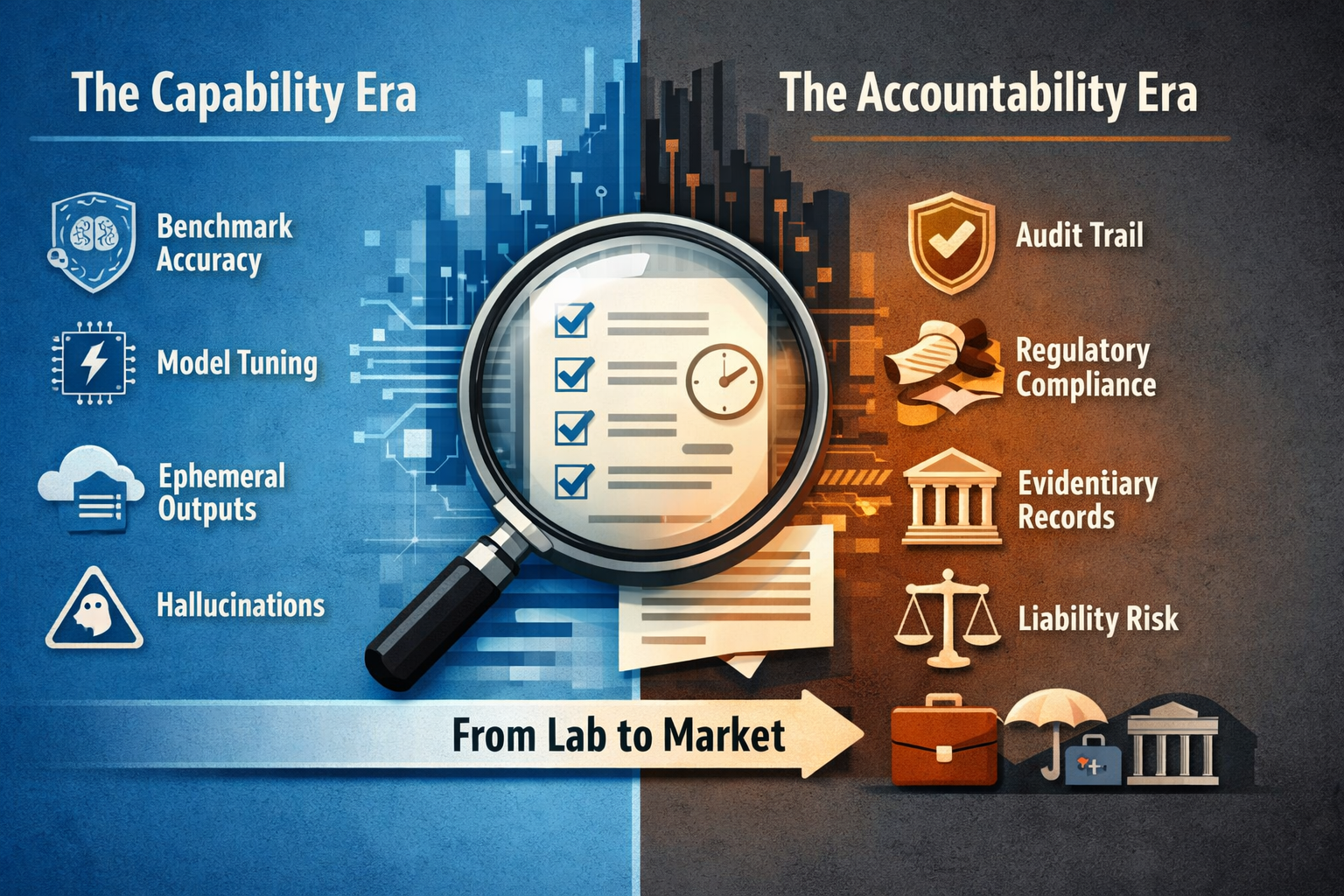

The Two Eras of AI Deployment

As AI moves from labs into regulated markets, the governing requirements shift.

| Feature | The Capability Era (Lab) | The Accountability Era (Market) |
|---|---|---|
| Primary Metric | Benchmark accuracy | Reproducibility and audit trail |
| System State | Ephemeral, stateless | Persistent, contextual |
| Failure Mode | Hallucination (error) | Misrepresentation (liability) |
| Primary Tooling | Fine-tuning, RLHF | Independent observability |
| Key Stakeholder | Research scientist | General Counsel, Chief Risk Officer |

Most AI tooling is still built for the first column. Exposure accumulates in the second.

The Missing Layer in the AI Stack

Current AI tooling clusters around:

- Optimization and performance

- Model and application development

What is missing is an independent layer that:

- Observes without intervening

- Measures without optimizing

- Preserves without correcting

- Translates technical variance into governance-relevant evidence

This layer does not make AI better.

It makes AI defensible.

Historically, this layer appears late in technology cycles. In AI, it is arriving early because consequence has outpaced control.

Why Non-Intervention Is the Only Defensible Posture

The instinctive response to AI misrepresentation is correction.

In regulated contexts, this is often a mistake.

Intervention introduces:

- Attribution ambiguity

- Disclosure risk

- Manipulation claims

- Expanded liability

In contrast, non-interventionist observability allows organizations to:

- Demonstrate diligence without influence

- Preserve evidentiary integrity

- Avoid becoming responsible for third-party systems they do not control

This distinction will matter more than accuracy improvements in the coming years.

What the Future Actually Looks Like

The future of AI is not dominated by autonomous agents or universal intelligence.

It is dominated by:

- Embedded AI systems

- Persistent but constrained memory

- Delegated agency

- External reasoning layers

- Growing institutional exposure

In that environment, the decisive question is no longer:

How smart is the system?

It becomes:

Can we prove what it represented, and can we prove we acted responsibly in response?

The Uncomfortable Conclusion

AI is becoming consequential faster than it is becoming governable.

That gap will not be closed by better models alone.

It will be closed by:

- Standards

- Evidence

- Independence

- Auditability

Organizations waiting for intelligence breakthroughs will miss the governance inflection point.

Those who establish evidence first will shape the rules later.

Closing Note

The next phase of AI adoption will not be decided by research labs.

It will be decided by:

- Boards

- Courts

- Regulators

- Insurers

- Procurement committees

They will not ask whether a system understands the world.

They will ask whether you can prove what it said about you.

Below is a board-level memo, written so it can be forwarded unchanged to an Audit Committee, CFO, or General Counsel. It is procedural, not promotional, and deliberately avoids any reference to vendors, tools, or remediation tactics.

Memorandum

Subject: AI Representation Risk and the Emerging Requirement for Audit-Grade Evidence

Audience: Board of Directors, Audit Committee, CFO, General Counsel

Purpose: Risk identification and governance posture, not technology strategy

Executive Summary

Artificial intelligence systems now influence how organizations are represented to customers, partners, regulators, and the public. This influence often occurs outside the organization’s control, visibility, or ability to correct in real time.

The primary risk is no longer technical error. It is misrepresentation with potential legal, financial, and reputational consequences, occurring without preserved evidence.

This memo outlines:

- The nature of the emerging risk

- Why existing controls are insufficient

- Why audit-grade evidence is becoming mandatory

- A governance checklist for board-level oversight

1. What Has Changed

Historically, AI risk discussions focused on internal use cases and model accuracy.

That framing is now outdated.

Today, AI systems:

- Compare companies and products

- Summarize financial and legal positions

- Frame risk, quality, and suitability

- Influence decisions upstream of formal disclosures

These representations increasingly occur:

- In third-party systems

- Without notification

- Without persistence

- Without reproducibility

The organization may be affected without participating.

2. Definition of the Risk

Externally mediated representation risk arises when:

An AI system’s interpretation of an organization becomes operationally relevant, despite the organization not owning, controlling, or being able to reliably observe that system.

Key characteristics:

- The system is external

- The representation is consequential

- The output is transient

- The organization lacks evidence of what was said

This risk exists regardless of whether the AI system is “intelligent” or statistically accurate.

3. Why Existing Controls Do Not Cover This Risk

a) Accuracy controls are insufficient

Model performance metrics do not address:

- Temporal drift

- Context sensitivity

- Category substitution

- Inconsistent framing across sessions

A statement can be accurate in isolation and still be misleading in context.

b) Screenshots and anecdotes are not audit-grade evidence

In disputes, regulators and courts give little weight to unsupported:

- Screenshots

- Vendor dashboards

- After-the-fact explanations

They give far more weight to:

- Time-stamped artifacts

- Reproducible methods

- Independent documentation

c) Intervention increases exposure

Attempting to “correct” AI outputs without a preserved record can:

- Create attribution ambiguity

- Trigger disclosure obligations

- Introduce manipulation claims

- Expand liability

Correction without evidence is not defensible diligence.

4. Why Audit-Grade Evidence Is Becoming Inevitable

This is not a speculative trend. It follows a familiar pattern.

Every system that mediates value at scale eventually requires:

- Independent observation

- Standardized documentation

- Reproducibility

- Separation between operation and oversight

Comparable inflection points:

- Accounting before GAAP

- Aviation before flight data recorders

- Financial markets before disclosure regimes

AI has reached the same inflection point:

- Consequence has outpaced control

- Trust cannot be asserted without evidence

- Oversight requires preserved context

5. The Shift Boards Should Recognize

AI governance is moving from a capability era to an accountability era.

| Dimension | Earlier Focus | Emerging Requirement |
|---|---|---|
| Primary Question | How accurate is the system? | Can we prove what it represented? |
| Risk Type | Technical error | Legal and reputational liability |
| Evidence | None or anecdotal | Preserved, reproducible artifacts |
| Oversight Owner | IT / Innovation | Legal, Finance, Risk |
| Failure Mode | Hallucination | Misrepresentation |

Boards should assume this shift is irreversible.

6. Governance Checklist for Directors

The following questions should be answerable without relying on vendor assurances:

- Visibility: Do we have any systematic way to observe how AI systems represent our organization externally?

- Evidence: Can we reproduce what an AI system said about us at a specific point in time?

- Independence: Is observation separated from intervention, optimization, or correction?

- Documentation: Would preserved artifacts meet regulatory or litigation standards?

- Escalation: Is there a defined process when material misrepresentation is detected?

- Disclosure Readiness: Could we demonstrate diligence if asked by a regulator, insurer, or court?

If the answer to more than two of these is “no,” the organization is exposed.

7. Recommended Board Posture

This memo does not recommend:

- Modifying AI systems

- Influencing external models

- Making claims about correctness

It recommends:

- Treating AI representations as an external risk surface

- Prioritizing observability over optimization

- Preserving evidence before intervention

- Framing AI exposure as a governance issue, not an IT matter

The objective is not control.

It is defensibility.

Closing Note

AI adoption is accelerating faster than accountability frameworks.

Boards will not be judged on whether they predicted AI capabilities correctly.

They will be judged on whether they exercised reasonable oversight once consequences became foreseeable.

The question is no longer:

“Is the AI smart?”

It is:

“Can we prove what it said about us, and can we prove we acted responsibly in response?”