The Recognition Gap: Why Regulated Institutions Are Underestimating External AI Decision Drift

1. The Evidence Is No Longer Theoretical

When controlled, repeatable classes of prompts are run across major AI systems:

- Institutional ordering diverges

- Narrative framing shifts under identical queries

- Final recommendation resolution varies by model

- Displacement patterns (which institutions are pushed down or out of the answer) remain stable within a given execution window

This is not anecdotal. It is observable and reproducible.

The phenomenon exists.

The question is not whether it happens.

The question is why leadership behaviour does not yet reflect it.

2. False Stability Is Masking Structural Drift

Many institutions see:

- Consistent inclusion in awareness prompts

- Neutral comparative positioning

- Familiar brand language

That creates comfort.

But resolution behaviour tells a different story.

When the system must choose, ordering shifts.

Under stress prompts, default recommendations diverge.

Cross-model hierarchies are not aligned.

Presence is being mistaken for control.

In regulated markets, that is a dangerous assumption.

3. Why It Isn’t in the Board Pack

This risk category evades detection because it sits between established frameworks.

It is:

- Outside the scope of internal model governance

- Distributed across multiple AI providers

- Not explicitly codified in regulatory language

- Absent from formal reporting structures

If it has no owner, it has no escalation path.

And if it has no escalation path, it does not exist in executive consciousness.

This is not incompetence. It is structural inertia.

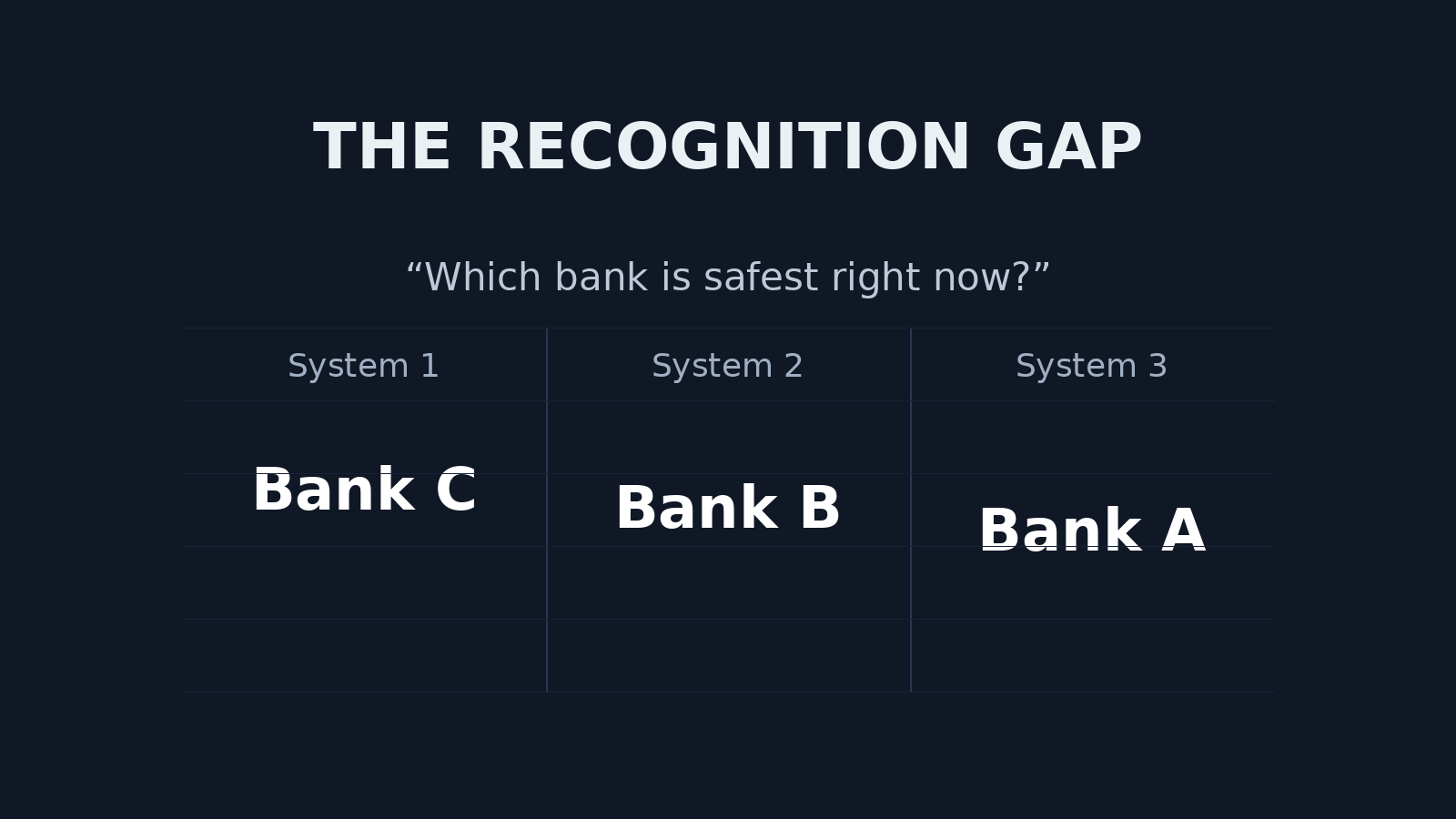

4. Banking: Fragmented Stability Narratives

In banking, decision-stage prompts increasingly resemble informal due diligence:

- “Which bank is safest?”

- “Best European bank during uncertainty?”

- “Most stable institution right now?”

If AI systems resolve these differently, stability narratives fragment across platforms.

That affects competitive equilibrium.

It also affects consumer trust formation in moments of uncertainty.

No internal risk framework currently captures this layer.

That gap will not remain benign indefinitely.

5. Pharma: Amplified Consequence

In pharmaceuticals, representational drift carries higher stakes.

Comparative prompts involving:

- Efficacy

- Safety framing

- Therapy leadership

- Corporate stability

can shift recommendation ordering across models.

In high-sensitivity domains, small shifts in narrative weight can alter decision pathways.

The industry has invested decades in internal pharmacovigilance and compliance controls.

External AI decision architectures now influence perception without equivalent oversight.

The asymmetry is striking.

6. The Incentive to Delay Recognition

Acknowledging this category creates obligation:

- To monitor

- To document

- To assess impact

- To determine response

It introduces complexity without clear remediation.

So institutions default to observational tolerance.

Ambiguity feels safer than premature escalation.

But tolerance is not neutrality. It is deferred risk.

7. The Widening Asymmetry

Internal models are governed, logged, stress-tested and audited.

External AI systems increasingly shape consumer perception, informal diligence and brand hierarchy, yet operate without equivalent transparency or accountability structures.

The governance gap is widening.

The evidence of decision-layer drift is now measurable.

The remaining question is whether leadership at regulated institutions chooses to categorise it as noise or as a new form of market structure risk.