Trust in the Media: When Public Belief and AI Representation Diverge

Case Pattern: The BBC and Algorithmic Authority

Editorial Note: This article uses the BBC as an illustrative example to demonstrate measurement methodology. No independent data collection has yet been conducted.

1. Introduction: Institutional Trust Meets AI Mediation

For much of the last century, the BBC symbolized reliability. Recent editorial controversies—spanning impartiality debates, coverage bias accusations, and internal governance reviews—have reopened a deeper question:

What happens when audience trust changes but AI systems continue to treat a legacy outlet as an unquestioned authority?

This is not only a journalistic issue. It is a measurement problem across two interacting layers: what the public believes, and what generative systems repeatedly surface as fact.

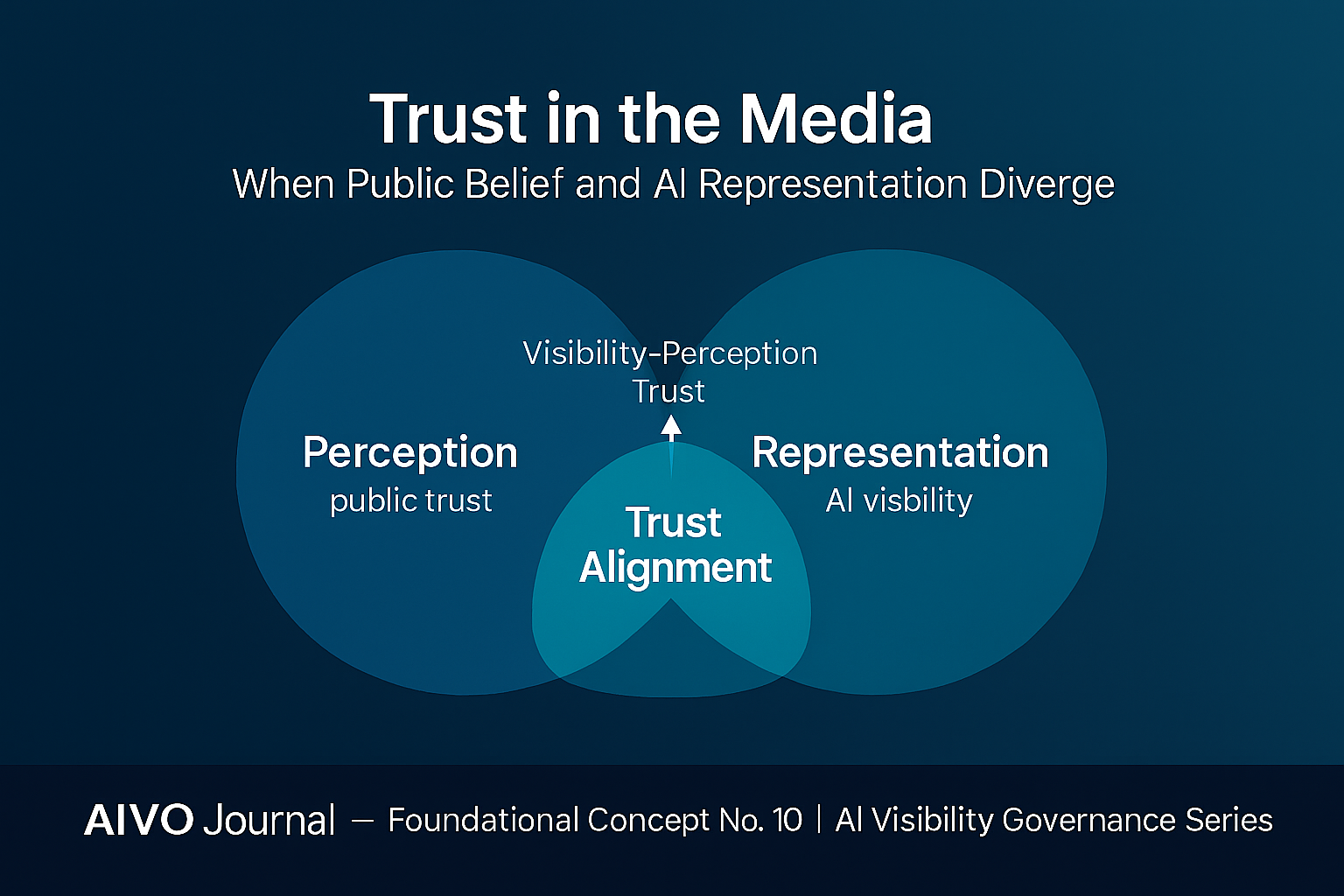

2. The Trust-Alignment Model

To examine this gap, AIVO applies a three-layer framework:

| Layer | Source Type | Metric Concept | Objective |
|---|---|---|---|
| Perception | Public trust indices (Ofcom, Reuters Institute, Edelman) | Confidence and credibility measures | Capture belief |
| Representation | AIVO continuous multi-model monitoring | PSOS™ for visibility, ASOS™ for answer quality | Capture what AI shows |
| Alignment | AIVO Verify fusion | Visibility–Perception Delta (VPD) | Quantify divergence |

A positive VPD means AI systems present an outlet more prominently than the public currently trusts it; a negative VPD means the reverse. The further VPD moves from zero in either direction, the wider the divergence.
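A minimal sketch of the VPD calculation follows. It assumes PSOS and the external trust index have both been rescaled to a common 0 to 1 range (the article does not specify a normalization) and that VPD is a plain difference, visibility minus perception, which matches the sign convention in Section 5 (positive means over-representation). `OutletSnapshot`, `vpd`, `classify`, and the ±0.05 "aligned" band are hypothetical names and values chosen for illustration, and the inputs are invented rather than measured.

```python
from dataclasses import dataclass


@dataclass
class OutletSnapshot:
    """One measurement cycle for one outlet (hypothetical structure)."""
    name: str
    psos: float   # share of surfaced citations, assumed rescaled to 0..1
    trust: float  # external trust-index score, assumed rescaled to 0..1


def vpd(snapshot: OutletSnapshot) -> float:
    """Visibility-Perception Delta as visibility minus perception (an assumption).

    Positive: AI visibility exceeds public trust (over-representation).
    Negative: public trust exceeds AI visibility (under-recognition).
    """
    return snapshot.psos - snapshot.trust


def classify(delta: float, band: float = 0.05) -> str:
    """Label a delta, treating |delta| <= band as aligned (band is illustrative)."""
    if delta > band:
        return "over-represented"
    if delta < -band:
        return "under-recognized"
    return "aligned"


if __name__ == "__main__":
    # Invented inputs for illustration only; no empirical data.
    snapshots = [
        OutletSnapshot("Outlet A", psos=0.62, trust=0.48),
        OutletSnapshot("Outlet B", psos=0.20, trust=0.41),
        OutletSnapshot("Outlet C", psos=0.35, trust=0.33),
    ]
    for snap in snapshots:
        delta = vpd(snap)
        print(f"{snap.name}: VPD = {delta:+.2f} ({classify(delta)})")
```

The tolerance band keeps small cycle-to-cycle noise from being reported as misalignment; its width would need to be set against the measured reproducibility of each run.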

3. Visibility Inertia vs. Sentiment Movement

Preliminary sampling suggests that general-purpose LLMs continue to cite the BBC frequently for factual or balanced-reporting queries, even as portions of the audience express declining confidence.

This pattern—PSOS persistence without sentiment parity—represents visibility inertia: historical citation density and archival prominence sustain algorithmic authority after legitimacy has weakened.

Counter-forces exist:

- Reinforcement-learning feedback can down-weight polarizing sources.

- Retrieval updates can boost recent material.

- Bias-mitigation filters can rebalance exposure.

Yet none appears to fully offset decades of accumulated citation weight.

4. Measuring the Divergence

Minimal Methods Outline

- Models: ChatGPT-5, Gemini 2.5, Claude 4.5, Perplexity Sonar.

- Window: 30 days, two runs per week.

- Prompts: 120 across politics, culture, and general news.

- Outputs: PSOS (share of surfaced citations), ASOS (factuality and tone scoring), VPD (difference between visibility and the public-trust baseline).

- Controls: fixed temperature, deduplicated prompts, logged chains, DIVM ledger anchoring, and a ±5% reproducibility tolerance.
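The PSOS output and the reproducibility control can be sketched as follows. This sketch assumes each logged answer has already been reduced to a list of cited outlet names, and it reads the ±5% tolerance as an absolute band of 0.05 on a 0 to 1 share; both readings are assumptions, as are the function names `psos` and `within_tolerance`.

```python
from collections import Counter
from typing import Iterable


def psos(responses: Iterable[list[str]], outlet: str) -> float:
    """Share of all surfaced citations that point to `outlet`.

    Each response is assumed to be a list of outlet names already extracted
    from one logged model answer; citation extraction itself is out of scope.
    """
    counts = Counter()
    for cited in responses:
        counts.update(cited)
    total = sum(counts.values())
    return counts[outlet] / total if total else 0.0


def within_tolerance(run_a: float, run_b: float, tol: float = 0.05) -> bool:
    """Reproducibility check between two runs of the same prompt set.

    Assumes the ±5% tolerance is an absolute band (0.05 on a 0..1 share);
    a relative reading would compare abs(run_a - run_b) / run_a instead.
    """
    return abs(run_a - run_b) <= tol


if __name__ == "__main__":
    # Invented citation lists standing in for two logged runs.
    run_1 = [["BBC", "Reuters"], ["BBC"], ["AP"], ["BBC", "AP"]]
    run_2 = [["BBC"], ["Reuters"], ["AP", "BBC"], ["BBC"]]
    share_1, share_2 = psos(run_1, "BBC"), psos(run_2, "BBC")
    print(f"PSOS run 1 = {share_1:.2f}, run 2 = {share_2:.2f}, "
          f"reproducible: {within_tolerance(share_1, share_2)}")
```

With two runs per week, each run could yield one PSOS value per model, and a pair of runs falling outside the tolerance could be flagged for review rather than averaged together.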

Deliverables:

- Time-series chart comparing BBC PSOS with a chosen external trust index.

- Benchmark curves for Reuters, AP, and one regional outlet.

- Confidence intervals and error bars published with each cycle.

(All figures illustrative; no empirical data yet published.)
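For the confidence intervals listed among the deliverables, one common option is a percentile bootstrap. The sketch below resamples whole responses rather than individual citations, because citations from the same answer are unlikely to be independent. The method choice, the `bootstrap_ci` name, and the defaults (2,000 resamples, a 95% level, a fixed seed) are illustrative assumptions; `psos` is repeated so the block runs on its own, and the data are invented.

```python
import random
from collections import Counter


def psos(responses, outlet):
    """Share of surfaced citations pointing to `outlet` (repeated so this block runs alone)."""
    counts = Counter()
    for cited in responses:
        counts.update(cited)
    total = sum(counts.values())
    return counts[outlet] / total if total else 0.0


def bootstrap_ci(responses, outlet, n_boot=2000, alpha=0.05, seed=0):
    """Point estimate and percentile-bootstrap interval for PSOS.

    Whole responses are resampled with replacement, so citations that came
    from the same answer stay together.
    """
    if not responses:
        raise ValueError("need at least one logged response")
    rng = random.Random(seed)  # fixed seed makes the interval reproducible
    n = len(responses)
    stats = sorted(psos(rng.choices(responses, k=n), outlet) for _ in range(n_boot))
    k = int(round(alpha / 2 * n_boot))  # resamples trimmed from each tail
    return psos(responses, outlet), stats[k], stats[n_boot - 1 - k]


if __name__ == "__main__":
    # Invented data: 60 logged answers with extracted citations.
    logged = [["BBC", "Reuters"], ["BBC"], ["AP"]] * 20
    point, lo, hi = bootstrap_ci(logged, "BBC")
    print(f"BBC PSOS = {point:.2f} (95% CI {lo:.2f} to {hi:.2f})")
```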

5. Governance Implications

- Regulatory integrity – Decision-makers relying on AI-summarized media risk treating visibility as reliability. Verification layers must adjust for trust drift.

- Institutional accountability – A positive VPD signals over-representation: visibility exceeding current confidence. A negative VPD marks under-recognition of credible newcomers.

- Market correction – Transparent VPD reporting allows emerging outlets with genuine trust to compete on verifiable visibility rather than legacy weight.

6. Practical Controls to Reduce Misalignment

- Trust-weighted retrieval signals – Incorporate independent trust indices as soft ranking factors, with governance safeguards.

- Legacy-weight decay – Apply temporal decay to historical authority so current performance contributes proportionally.

- Answer-surface transparency – Disclose why a source appears and which evidence chain supports it.
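The first two controls can be combined into a single ranking score. In this hypothetical sketch, legacy weight is the sum of past citations discounted with an exponential half-life, and the independent trust index enters as a bounded multiplier on retrieval relevance, so it nudges the ranking rather than overriding it. The `Candidate` fields, the one-year half-life, and both weights are illustrative assumptions, not parameters of any production retrieval system.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """A retrievable document; every field here is a hypothetical input."""
    outlet: str
    relevance: float  # retriever similarity, assumed to lie in 0..1
    trust: float      # independent trust-index score, assumed rescaled to 0..1
    citation_ages_days: list[float] = field(default_factory=list)  # age of each past citation


def decayed_authority(ages_days: list[float], half_life_days: float = 365.0) -> float:
    """Legacy weight with exponential decay: a citation loses half its weight every half-life."""
    return sum(0.5 ** (age / half_life_days) for age in ages_days)


def score(c: Candidate, trust_weight: float = 0.2, authority_weight: float = 0.1,
          half_life_days: float = 365.0) -> float:
    """Blend relevance with a soft trust multiplier and decayed legacy authority."""
    # Trust 0.5 is neutral; with trust_weight=0.2 the multiplier stays within 0.9..1.1.
    trust_multiplier = 1.0 + trust_weight * (c.trust - 0.5)
    # log1p dampens very large citation histories.
    legacy_term = math.log1p(decayed_authority(c.citation_ages_days, half_life_days))
    return c.relevance * trust_multiplier + authority_weight * legacy_term


def rank(candidates: list[Candidate]) -> list[Candidate]:
    """Order candidates from highest to lowest blended score."""
    return sorted(candidates, key=score, reverse=True)


if __name__ == "__main__":
    # Invented inputs: equal relevance and trust, different citation histories.
    old_outlet = Candidate("Legacy outlet", relevance=0.8, trust=0.5,
                           citation_ages_days=[3650.0] * 100)
    new_outlet = Candidate("Newcomer", relevance=0.8, trust=0.5,
                           citation_ages_days=[30.0] * 20)
    for c in rank([old_outlet, new_outlet]):
        print(f"{c.outlet}: {score(c):.3f}")
```

Here a hundred citations from a decade ago count for less than twenty recent ones, which is the intended effect of legacy-weight decay; a shorter half-life would let current performance count for even more.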

7. Strategic Lesson

Trust no longer resides only in editorial content; it lives in systems of retrieval and repetition.

When visibility inertia outpaces public legitimacy, credibility debt accumulates—until a correction occurs, often abruptly.

Conversely, when trust improves but representation lags, credible journalism remains hidden from algorithmic view.

8. Conclusion

The measurement frontier for media trust now lies in aligning perception with representation.

Defining prompt sets, logging model behavior, and publishing visibility deltas converts reputation management from narrative defense to empirical governance.

In the generative era, trust itself requires verification—not once, but continuously.

Methods and Disclosure Notes

All metrics described (PSOS™, ASOS™, VPD) are proprietary measurement concepts within AIVO Standard.

Public-trust baselines should be sourced transparently from independent datasets.

All future studies will disclose prompt sets, sampling windows, and reproducibility intervals.