What AIVO Standard Governs: The Layer Above Visibility Tools

AIVO Journal — Governance Commentary

© 2025 AIVO Standard™ | All Rights Reserved

1. The Fragmented Visibility Market

The AI visibility market is expanding rapidly—and confusingly. Each week, new Answer Engine Optimization (AEO) and Generative Engine Optimization (GEO) dashboards promise to show how brands “appear” in ChatGPT, Gemini, Claude, or Perplexity.

These tools often deliver valuable insight, yet their methodologies differ so widely that results cannot be compared. One dashboard may rank brands by query frequency; another by model weighting or citation count. The outcome is a visibility landscape defined by incompatible metrics rather than shared standards.

Without verification, visibility becomes volatility disguised as insight.

(See Search Engine Journal, 2025, for examples of divergent AEO ranking methodologies.)

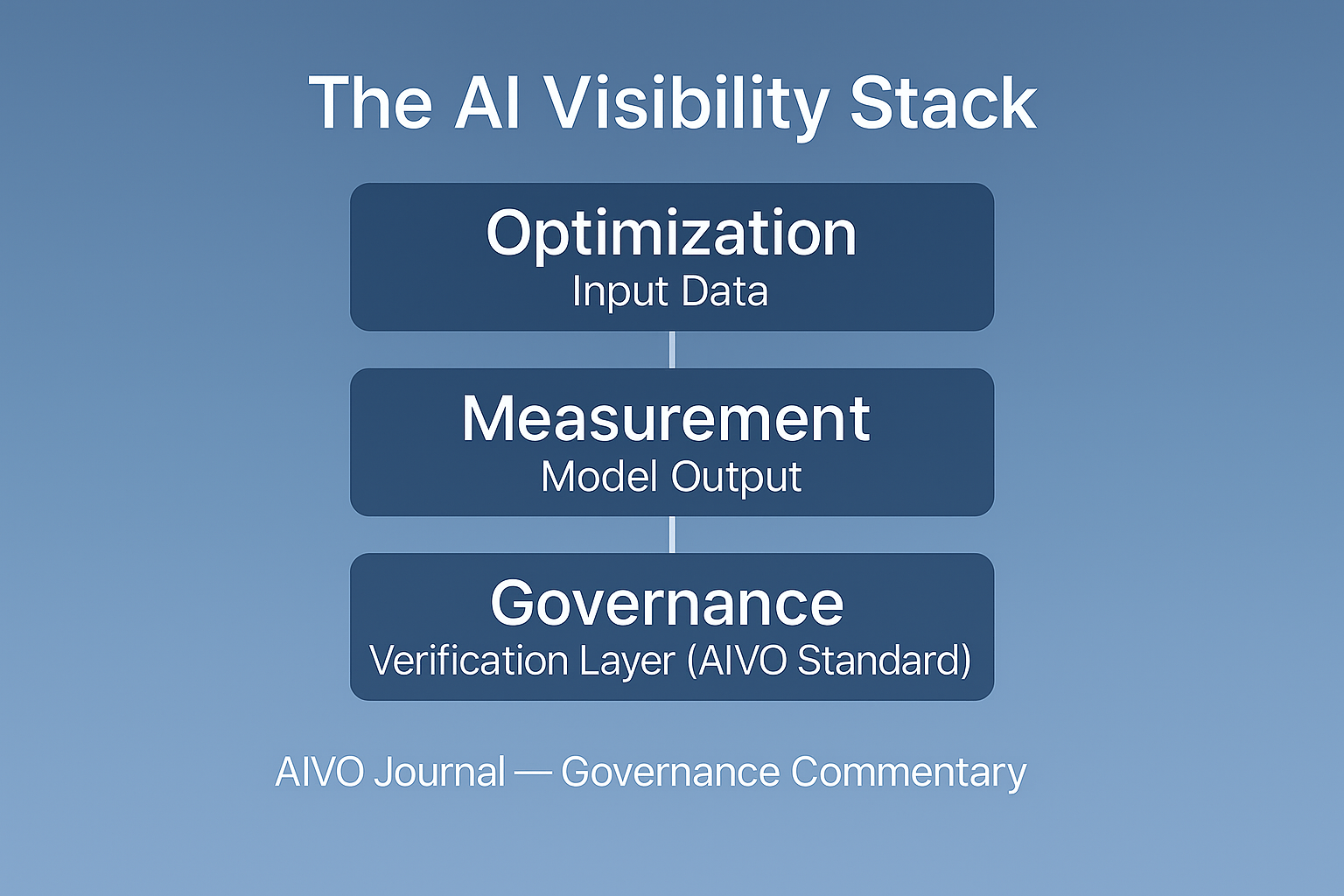

2. The Visibility Stack: Inputs, Outputs, and Oversight

| Layer | Core Function | Examples | Key Challenge | AIVO’s Role |
| --- | --- | --- | --- | --- |
| Optimization Layer | Feeds structured, verified product data into AI systems to improve discoverability | Novi, Peec, Profound | Limited transparency on how data origin affects model weighting | Defines standards for data origin and traceability |
| Measurement Layer | Tracks where and how brands appear across AI assistants | Commercial AEO/GEO dashboards | Results vary by crawler, query pool, and model version | Establishes reproducible measurement protocols |
| Governance Layer | Ensures visibility data is verifiable, comparable, and auditable | AIVO Standard | Largely absent today | Offers the reference framework (PSOS™, PSOS-E™) |

With this stack in mind, the next question is whether the visibility results these systems produce can be verified.

3. Why Verification Matters

AI assistants now intermediate commercial and policy decisions worth billions. Which product appears, which source is cited, and which dataset is trusted are all economically material outcomes.

Each model retrain silently reshuffles inclusion and exclusion. Without standardized measurement, organizations cannot prove continuity, fairness, or causation—creating unquantified visibility risk.

AIVO 100™ Index (Q1 2025) data show 30–60 percent volatility in brand visibility across leading models between retrains. For high-intent prompts, such shifts translate directly into revenue exposure and disclosure uncertainty. In one case study, a single-point PSOS decline across travel-sector prompts correlated with a $2.3 million reduction in modeled customer intent.

This volatility already intersects with disclosure obligations under the EU AI Act (Articles 10–14), which require traceability and transparency for high-risk systems.
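One way to make the retrain-to-retrain shifts described above measurable is to compare which brands an assistant includes before and after a model update. The sketch below is only an illustration under stated assumptions: the function name `inclusion_turnover`, the turnover definition (brands that entered or left, as a share of all brands seen in either run), and the brand names are hypothetical, and this is not the volatility calculation used for the AIVO 100™ Index.

```python
def inclusion_turnover(before: set[str], after: set[str]) -> float:
    """Percent of brands that entered or left the result set between two runs.

    Hypothetical definition: 100 * |symmetric difference| / |union|,
    i.e. the Jaccard distance between the two brand sets, as a percentage.
    """
    union = before | after
    if not union:
        # No brands in either run: nothing changed.
        return 0.0
    return 100.0 * len(before ^ after) / len(union)


# Illustrative data only: brands named for the same prompt set
# under two model versions.
model_v1 = {"Acme", "Globex", "Initech", "Umbrella"}
model_v2 = {"Acme", "Globex", "Hooli", "Umbrella"}

# One brand dropped out and one entered, out of five seen overall.
print(inclusion_turnover(model_v1, model_v2))  # 40.0
```

A set-based measure like this ignores ranking and citation prominence; it only records whether a brand was included at all, which is the inclusion-and-exclusion question raised in this section.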

4. How the AIVO Standard Works

The AIVO Standard defines a reproducible, audit-ready metric—Prompt-Space Occupancy Score (PSOS)—which measures a brand’s share of representative prompts across leading AI assistants.

- PSOS™ quantifies visibility share in generative environments using prompts selected to reflect common user intents.

- PSOS-E™ (Eligibility) extends this to agentic systems, meaning AI that acts autonomously in commercial or policy contexts.

PSOS metrics derive from tiered, cross-model prompt testing conducted under open, documented protocols published via AIVO’s SSRN repository (v2.2 Methodology Paper). They can be replicated, audited, and compared across reporting periods.
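As a rough illustration of the share-of-prompts idea described above, the sketch below computes a simplified occupancy score: the percentage of (prompt, assistant) responses that mention a brand. It is not the published PSOS methodology. The function name `occupancy_share`, the substring-matching rule, the equal weighting, and the sample responses are all assumptions made for illustration, and the tiering described in the v2.2 Methodology Paper is not modeled.

```python
from typing import Mapping


def occupancy_share(responses: Mapping[tuple[str, str], str], brand: str) -> float:
    """Percentage of (prompt, assistant) responses that mention `brand`.

    Hypothetical simplification: case-insensitive substring match,
    with every prompt and every assistant weighted equally.
    """
    if not responses:
        raise ValueError("at least one response is required")
    needle = brand.lower()
    hits = sum(1 for text in responses.values() if needle in text.lower())
    return 100.0 * hits / len(responses)


# Illustrative data only: keys are (prompt, assistant), values are answer text.
sample = {
    ("best travel card", "assistant_a"): "Consider Acme Card or Globex Rewards.",
    ("best travel card", "assistant_b"): "Globex Rewards is a popular choice.",
    ("cheap flights to Rome", "assistant_a"): "Acme Travel lists several fares.",
    ("cheap flights to Rome", "assistant_b"): "Try comparing airline sites directly.",
}

print(occupancy_share(sample, "Acme"))     # 50.0
print(occupancy_share(sample, "Initech"))  # 0.0
```

Because the prompt set, the assistants queried, and the matching rule are explicit inputs, a second party could rerun the same calculation on the same responses and arrive at the same number, which is the property the protocols above are meant to guarantee.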

In short: financial data has GAAP; visibility data has AIVO.

5. Implications and Next Steps

A one-point PSOS decline across high-intent prompts can redirect millions in customer intent. In governance terms, such losses are material—yet most organizations cannot audit them.

The AIVO Standard closes that accountability gap. It allows CMOs, CFOs, and compliance teams to align AI-visibility data with existing assurance principles already applied under ESG and AI-governance frameworks.

Optimization tools like Novi improve inputs; dashboards visualize outputs. AIVO provides the oversight layer that validates both.

As commerce becomes increasingly agentic, visibility will evolve into eligibility—the right to be considered by autonomous systems. Optimization will pursue performance; AIVO ensures proof.

Stakeholders should begin integrating verifiable visibility metrics into governance and reporting frameworks now, before AI-mediated markets harden around opaque systems.

6. Conclusion

The AI discovery stack is maturing from experimentation to infrastructure. What it still lacks is governance—the ability to verify whether AI systems represent brands fairly, consistently, and transparently.

The AIVO Standard serves as the compass that keeps AI-driven discovery oriented toward accountability: an independent layer above visibility tools that makes every inclusion and exclusion measurable, reproducible, and auditable.