What an Evidentiary Control Mechanism Looks Like in Practice

How regulated organizations make AI outputs reconstructable, reviewable, and defensible across functions

Most discussions of AI governance focus on policies, accuracy, or model behavior. In regulated environments, those concerns rarely determine outcomes.

What matters, once an output has been relied upon, is whether the organization can reconstruct what the AI system produced, under what controls, and what happened next.

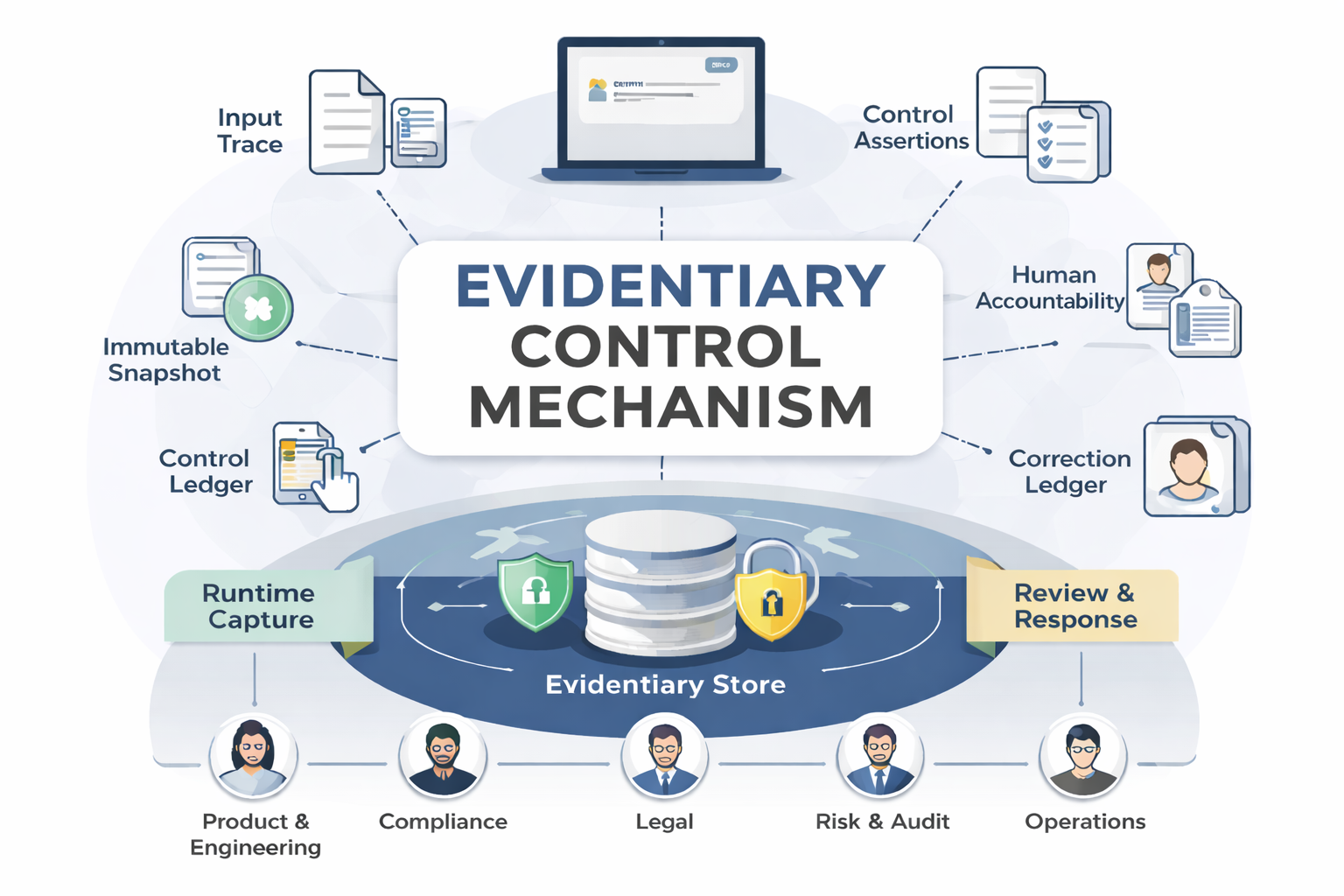

This article describes what an evidentiary control mechanism looks like in practice. It sets out the minimum evidence objects, operating model, and review cadence required to make AI-influenced outputs auditable, attributable, and correctable across legal, compliance, risk, product, and operational functions.

The focus is deliberately narrow. This is not a framework for optimization or a proposal for new regulation. It is a practical description of the controls organizations must have in place if they expect AI use to withstand audit, investigation, or litigation.

Abstract

In regulated environments, AI-related failures rarely turn on whether an output was factually correct. They arise when organizations cannot reconstruct what was produced, under what conditions, and how it was relied upon. This article describes the minimum characteristics of an evidentiary control mechanism: the system that makes AI-influenced outputs replayable, attributable, and correctable across legal, compliance, risk, product, and operational functions. It is implementation-focused and intentionally scoped to survivability under audit, investigation, or litigation.

1. Regulatory and audit context

Regulators and auditors do not evaluate AI systems as abstract technologies. They evaluate outcomes after reliance.

Across financial services, healthcare, employment, consumer protection, and public disclosures, scrutiny converges on a small set of questions:

- What was presented or relied upon?

- Under which policy, controls, and approvals?

- Using which permitted inputs or sources?

- Who owned the workflow?

- What corrective actions followed?

Existing governance instruments such as policies, model cards, disclaimers, and dashboards do not reliably answer these questions. They describe intent or activity, not reconstructable fact.

The mechanism described in this article is not a new regulatory framework. It is a synthesis of control expectations that already appear, implicitly or explicitly, in audit practice, enforcement actions, and emerging AI governance standards. Its purpose is narrow: to ensure that AI outputs that become operationally relied upon can later be evidenced without inference.

2. The problem the mechanism solves

Most organizations still frame AI risk around accuracy, bias, or hallucination. These are visible failure modes, but they are not the decisive ones in regulated environments.

Once an AI output is relied upon, the question becomes evidentiary rather than technical. If the organization cannot demonstrate what was produced, why it was produced, and what happened next, liability can attach regardless of good faith or reasonable design.

An evidentiary control mechanism exists to prevent governance failure caused by missing or unreconstructable evidence.

3. Defining the record boundary

No evidentiary mechanism works without a declared boundary for capture.

An AI output is record-relevant when it is:

- Presented to customers, patients, candidates, investors, or regulators

- Relied upon in regulated workflows such as suitability, complaints, claims, triage, HR decisions, or disclosures

- Copied or summarized into downstream systems of record

- Used to justify or explain a decision, rather than to explore possibilities

This boundary is defined by Compliance and Legal, but must be enforced by Product and Operations at runtime. A boundary that cannot be operationalized does not exist.
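One way to make the boundary enforceable at runtime is to evaluate it in code at the point of delivery. The sketch below is a minimal illustration, assuming each output arrives with a small context object; `OutputContext`, `is_record_relevant`, and the audience and workflow vocabularies are hypothetical names, not part of any standard.

```python
from dataclasses import dataclass

# Hypothetical vocabularies; in practice Compliance and Legal would own these lists.
EXTERNAL_AUDIENCES = {"customer", "patient", "candidate", "investor", "regulator"}
REGULATED_WORKFLOWS = {"suitability", "complaints", "claims", "triage", "hr_decision", "disclosure"}


@dataclass(frozen=True)
class OutputContext:
    audience: str                     # who sees the output ("internal" if no one outside)
    workflow: str                     # workflow identifier
    copied_to_system_of_record: bool  # pasted or summarized into a downstream record
    used_as_justification: bool       # explains a decision rather than exploring options


def is_record_relevant(ctx: OutputContext) -> bool:
    """True if any one of the four boundary criteria applies."""
    return (
        ctx.audience in EXTERNAL_AUDIENCES
        or ctx.workflow in REGULATED_WORKFLOWS
        or ctx.copied_to_system_of_record
        or ctx.used_as_justification
    )


# An internal brainstorming draft stays outside the boundary...
print(is_record_relevant(OutputContext("internal", "drafting", False, False)))  # False
# ...but the same text becomes record-relevant once it is copied into a downstream record.
print(is_record_relevant(OutputContext("internal", "drafting", True, False)))   # True
```

Because the criteria are joined with `or`, any single trigger is enough to bring an output inside the boundary.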

4. Minimum evidence objects (invariant requirements)

While implementations may vary, the evidence objects themselves do not. At minimum, an evidentiary control mechanism requires the following.

4.1 Immutable output snapshot

A tamper-evident capture of the exact output delivered, including content, timestamp, channel, locale, workflow identifier, and a unique snapshot ID.

If the organization cannot reproduce what was shown, governance has failed.
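A minimal sketch of snapshot creation using only Python's standard library; `OutputSnapshot`, `take_snapshot`, and `verify_snapshot` are hypothetical names. The SHA-256 digest makes later alteration of the content detectable. On its own it is not tamper-proof, since anyone able to rewrite the record could also rewrite the digest, so in practice the digest would be written to write-once storage or signed.

```python
import hashlib
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # fields cannot be reassigned after construction
class OutputSnapshot:
    snapshot_id: str
    timestamp_utc: str
    channel: str
    locale: str
    workflow_id: str
    content: str          # the exact text delivered
    content_sha256: str   # digest computed at capture time


def _digest(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def take_snapshot(content: str, channel: str, locale: str, workflow_id: str) -> OutputSnapshot:
    return OutputSnapshot(
        snapshot_id=str(uuid.uuid4()),
        timestamp_utc=datetime.now(timezone.utc).isoformat(),
        channel=channel,
        locale=locale,
        workflow_id=workflow_id,
        content=content,
        content_sha256=_digest(content),
    )


def verify_snapshot(snap: OutputSnapshot) -> bool:
    """Recompute the digest; a mismatch means the content no longer matches what was captured."""
    return _digest(snap.content) == snap.content_sha256


snap = take_snapshot("Your claim has been approved.", "email", "en-GB", "claims-v2")
print(verify_snapshot(snap))  # True
```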

4.2 Bounded input and retrieval trace

A record of what the system consulted within the organization’s control boundary:

- Approved knowledge base identifiers

- Retrieval references or hashes

- Prompt or template version

- Policy version applied

This does not claim access to model internals. It documents orchestration and retrieval, which are controllable.
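A trace of this kind needs nothing from the model itself, only from the orchestration layer. A minimal sketch, assuming passages are available as text at retrieval time; `RetrievalTrace`, `build_trace`, and the version-string format are hypothetical. Rejecting unapproved sources outright, rather than merely logging them, is a design choice made for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class RetrievalTrace:
    knowledge_base_ids: tuple[str, ...]  # approved knowledge bases consulted
    retrieval_refs: tuple[str, ...]      # SHA-256 of each passage handed to the model
    template_version: str
    policy_version: str


def build_trace(kb_ids, passages, template_version, policy_version, approved_kbs):
    """Record orchestration facts only; nothing here depends on model internals."""
    unapproved = set(kb_ids) - set(approved_kbs)
    if unapproved:
        # Retrieval outside the control boundary is treated as a control failure.
        raise ValueError(f"retrieval from unapproved sources: {sorted(unapproved)}")
    refs = tuple(hashlib.sha256(p.encode("utf-8")).hexdigest() for p in passages)
    return RetrievalTrace(tuple(kb_ids), refs, template_version, policy_version)


trace = build_trace(
    kb_ids=["kb-fees-2024"],
    passages=["Annual management fee: 0.45%."],
    template_version="suitability-explainer@7",
    policy_version="advice-policy@3",
    approved_kbs={"kb-fees-2024", "kb-products-2024"},
)
```

Storing hashes rather than passage text keeps the trace small while still letting a reviewer confirm that an archived passage is the one that was retrieved.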

4.3 Control assertions

Evidence of which controls were expected to apply and whether they operated:

- Content restrictions

- Escalation rules

- Mandatory disclaimers

- Human review requirements

- Blocking or gating logic

The question is not whether controls exist, but whether they fired.
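The distinction between a control existing and a control firing can be captured by evaluating each expected control against the actual output and recording the result. A minimal sketch; `ControlAssertion`, `assert_controls`, the two example controls, and the metadata keys are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Mapping

# A check returns True if the control demonstrably operated on this output.
Check = Callable[[str, Mapping[str, object]], bool]


@dataclass(frozen=True)
class ControlAssertion:
    control_id: str
    fired: bool


def assert_controls(output: str, meta: Mapping[str, object],
                    expected: Mapping[str, Check]) -> list[ControlAssertion]:
    """Evaluate every control expected for the workflow and record whether it fired."""
    return [ControlAssertion(cid, bool(check(output, meta))) for cid, check in expected.items()]


# Hypothetical controls for a customer-facing suitability workflow.
EXPECTED: dict[str, Check] = {
    "mandatory_disclaimer": lambda out, meta: "This is not personal advice." in out,
    "human_review": lambda out, meta: meta.get("reviewer_id") is not None,
}

results = assert_controls(
    "Fund A has lower fees than Fund B. This is not personal advice.",
    {"reviewer_id": None},
    EXPECTED,
)
print([(a.control_id, a.fired) for a in results])
# [('mandatory_disclaimer', True), ('human_review', False)]
```

Recording a failed assertion is as important as recording a passing one: the `human_review` result above is evidence that a required control did not operate.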

4.4 Human accountability markers

Clear attribution of responsibility:

- Workflow owner

- Prompt or template approver

- Business function accountable for use

- Authority to issue corrections

Accountability ambiguity is itself a governance failure.
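Accountability markers are easy to check mechanically before an output is released. A minimal sketch, assuming markers arrive as a mapping from role name to a person or team identifier; the role keys and `missing_accountability` are hypothetical names.

```python
from typing import Mapping, Optional

# Roles named in the text; the identifiers are illustrative.
REQUIRED_ROLES = (
    "workflow_owner",
    "template_approver",
    "accountable_function",
    "correction_authority",
)


def missing_accountability(markers: Mapping[str, Optional[str]]) -> list[str]:
    """Return required roles with no named holder; an empty list means attribution is complete."""
    return [role for role in REQUIRED_ROLES if not (markers.get(role) or "").strip()]


markers = {
    "workflow_owner": "ops.claims.lead",
    "template_approver": "compliance.reviewer.17",
    "accountable_function": "Claims Operations",
    "correction_authority": "",  # nobody can issue corrections: a governance gap
}
print(missing_accountability(markers))  # ['correction_authority']
```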

4.5 Correction and assurance ledger

An append-only record linking original outputs to corrections, retractions, clarifications, notifications, and closure decisions.

History must be preserved. Overwriting outputs destroys evidentiary integrity.
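One common way to make an append-only ledger tamper-evident is hash chaining, in which each entry includes the hash of the entry before it. The article does not prescribe this technique; it is used here as an illustration. `CorrectionLedger` and its methods are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class CorrectionLedger:
    """Append-only ledger in which each entry commits to the hash of the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._entries: list[dict] = []

    @staticmethod
    def _hash(entry: dict) -> str:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, snapshot_id: str, action: str, detail: str) -> dict:
        entry = {
            "snapshot_id": snapshot_id,
            "action": action,  # e.g. correction, retraction, clarification, notification, closure
            "detail": detail,
            "recorded_utc": datetime.now(timezone.utc).isoformat(),
            "prev_hash": self._entries[-1]["entry_hash"] if self._entries else self.GENESIS,
        }
        entry["entry_hash"] = self._hash(entry)
        self._entries.append(entry)
        return dict(entry)  # a copy, so callers cannot edit the stored entry

    def history(self, snapshot_id: str) -> list[dict]:
        """Every action recorded against one original output, oldest first."""
        return [dict(e) for e in self._entries if e["snapshot_id"] == snapshot_id]

    def verify(self) -> bool:
        """False if any entry was edited, reordered, or removed from the middle of the chain."""
        prev = self.GENESIS
        for e in self._entries:
            if e["prev_hash"] != prev or e["entry_hash"] != self._hash(e):
                return False
            prev = e["entry_hash"]
        return True


ledger = CorrectionLedger()
ledger.append("snap-0042", "clarification", "Fee figure restated as 0.45% annually")
ledger.append("snap-0042", "closure", "Customer notified; complaint closed")
print(ledger.verify())  # True
```

Chaining alone does not detect removal of the most recent entries; that would require anchoring the latest hash somewhere outside the ledger's own write path.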

5. Architecture that survives scrutiny

Effective implementations converge on three layers.

Layer 1: Runtime capture

Instrumentation at the moment of output delivery that automatically creates snapshots and control assertions.

Manual capture does not scale and does not survive incident pressure.
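A minimal sketch of runtime capture, assuming delivery happens through a Python function that can be wrapped; `capture`, `send_reply`, and the in-memory `EVIDENCE` list are hypothetical stand-ins for real instrumentation and a real evidence store. The snapshot is written before delivery, so an output cannot reach a recipient without a record.

```python
import functools
import hashlib
import uuid
from datetime import datetime, timezone

EVIDENCE: list[dict] = []  # stand-in for the evidence store


def capture(workflow_id: str, channel: str):
    """Decorate a delivery function so every call is snapshotted without manual steps."""
    def decorator(deliver):
        @functools.wraps(deliver)
        def wrapper(content: str, *args, **kwargs):
            record = {
                "snapshot_id": str(uuid.uuid4()),
                "timestamp_utc": datetime.now(timezone.utc).isoformat(),
                "workflow_id": workflow_id,
                "channel": channel,
                "content_sha256": hashlib.sha256(content.encode("utf-8")).hexdigest(),
                "delivered": False,
            }
            EVIDENCE.append(record)  # written first: if capture fails, delivery never happens
            result = deliver(content, *args, **kwargs)
            record["delivered"] = True  # only reached if delivery did not raise
            return result
        return wrapper
    return decorator


@capture(workflow_id="complaints-v1", channel="email")
def send_reply(content: str, recipient: str) -> str:
    # Hypothetical delivery function; a real one would call the messaging service.
    return f"sent to {recipient}"


print(send_reply("We have reviewed your complaint.", "customer-123"))  # sent to customer-123
```

In a write-once store the `delivered` flag would be recorded as a second appended event rather than an in-place update; the mutable dictionary only keeps the sketch short.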

Layer 2: Evidence store

A tamper-evident, queryable store with:

- Append-only or WORM characteristics

- Segregation of duties

- Access logging

- Retention aligned to regulatory obligations

This is an evidence system, not a general logging system.
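A minimal in-memory sketch of these four properties, under stated assumptions: principals are plain strings, segregation of duties is read as "capture services write, reviewers read, and no principal does both", and the seven-year retention figure is illustrative rather than a regulatory value. `EvidenceStore` and its methods are hypothetical; a production system would rely on storage with native object-lock or WORM support rather than application code.

```python
import copy
from datetime import datetime, timedelta, timezone


class EvidenceStore:
    """Write-once records, logged access, and retention-gated disposal (in memory)."""

    def __init__(self, retention: timedelta, writers: set[str], readers: set[str]) -> None:
        if writers & readers:
            raise ValueError("writer and reader principals must be distinct")
        self._retention = retention
        self._writers, self._readers = set(writers), set(readers)
        self._records: dict[str, tuple[datetime, dict]] = {}  # key -> (stored_at, record)
        self.access_log: list[tuple[str, str, str, str, bool]] = []  # (time, who, action, key, allowed)

    def _log(self, principal: str, action: str, key: str, allowed: bool) -> None:
        self.access_log.append((datetime.now(timezone.utc).isoformat(), principal, action, key, allowed))

    def put(self, principal: str, key: str, record: dict) -> None:
        allowed = principal in self._writers
        self._log(principal, "put", key, allowed)  # denied attempts are logged too
        if not allowed:
            raise PermissionError(f"{principal} may not write evidence")
        if key in self._records:
            raise ValueError(f"{key} already stored; evidence is write-once")
        self._records[key] = (datetime.now(timezone.utc), copy.deepcopy(record))

    def get(self, principal: str, key: str) -> dict:
        allowed = principal in self._readers
        self._log(principal, "get", key, allowed)
        if not allowed:
            raise PermissionError(f"{principal} may not read evidence")
        return copy.deepcopy(self._records[key][1])  # readers cannot alter stored evidence

    def eligible_for_disposal(self, now: datetime) -> list[str]:
        """Keys whose retention period has elapsed; nothing is deleted automatically."""
        return [k for k, (stored_at, _) in self._records.items() if now - stored_at > self._retention]


store = EvidenceStore(timedelta(days=7 * 365), writers={"capture-service"}, readers={"internal-audit"})
store.put("capture-service", "snap-0042", {"content_sha256": "ab12"})
print(store.get("internal-audit", "snap-0042"))  # {'content_sha256': 'ab12'}
```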

Layer 3: Review and response

Operational workflows for triage, investigation, correction issuance, and closure, producing auditable artifacts.

Process without capture produces narratives. Capture without process produces paralysis.
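Layer 3 can be pictured as a small state machine in which every transition leaves an artifact, so the review process cannot run without producing evidence of itself. A minimal sketch; the states, `TRANSITIONS`, `ReviewCase`, and its fields are hypothetical, chosen to mirror the triage, investigation, correction, and closure steps named above.

```python
from datetime import datetime, timezone

# Hypothetical lifecycle; each state lists the states it may move to.
TRANSITIONS = {
    "flagged": {"triaged"},
    "triaged": {"investigating", "closed"},  # closing here means "no action needed"
    "investigating": {"correction_issued", "closed"},
    "correction_issued": {"closed"},
    "closed": set(),
}


class ReviewCase:
    def __init__(self, snapshot_id: str) -> None:
        self.snapshot_id = snapshot_id
        self.state = "flagged"
        self.artifacts: list[dict] = []  # one auditable record per transition

    def advance(self, new_state: str, actor: str, rationale: str) -> None:
        if new_state not in TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state!r} to {new_state!r}")
        if not rationale.strip():
            raise ValueError("every transition needs a recorded rationale")
        self.artifacts.append({
            "snapshot_id": self.snapshot_id,
            "from": self.state,
            "to": new_state,
            "actor": actor,
            "rationale": rationale,
            "at_utc": datetime.now(timezone.utc).isoformat(),
        })
        self.state = new_state


case = ReviewCase("snap-0042")
case.advance("triaged", "ops.analyst", "Customer disputes the quoted fee")
case.advance("investigating", "compliance.reviewer", "Compare output with kb-fees-2024")
case.advance("correction_issued", "legal.counsel", "Clarification text approved")
case.advance("closed", "ops.lead", "Customer notified")
print(case.state, len(case.artifacts))  # closed 4
```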

6. Variations by risk and scale

The evidence objects above are invariant. Their realization is not.

- Low-risk internal workflows may retain snapshots with reduced metadata and shorter retention

- High-risk, customer-facing workflows may require stronger immutability, longer retention, and mandatory review

- Multi-entity organizations may federate evidence stores while enforcing shared schemas

- Smaller teams may sample outputs rather than review every one, provided the sampling method is defensible

What varies is depth, not presence.
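Tiering can live in configuration, so that each workflow inherits a profile rather than improvising one. A minimal sketch, assuming two hypothetical tiers with illustrative numbers; `EvidenceProfile`, `PROFILES`, and `selected_for_review` are not from any standard. For sampling, the sketch uses deterministic hash-based selection, which is one way (not the only way) to make a sample defensible: once the salt and rate are recorded, anyone can recompute exactly which outputs were chosen.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class EvidenceProfile:
    metadata_fields: tuple[str, ...]
    retention_days: int
    review_rate: float   # fraction of outputs routed to human review
    worm_storage: bool


# Hypothetical tiers; real values come from Compliance and the applicable retention rules.
BASE_FIELDS = ("snapshot_id", "timestamp_utc", "workflow_id", "content_sha256")
PROFILES = {
    "low_risk_internal": EvidenceProfile(BASE_FIELDS, 365, 0.02, False),
    "high_risk_customer": EvidenceProfile(
        BASE_FIELDS + ("retrieval_refs", "policy_version", "control_assertions", "accountable_roles"),
        7 * 365, 1.0, True,
    ),
}


def selected_for_review(snapshot_id: str, rate: float, salt: str) -> bool:
    """Same inputs always give the same answer, so an auditor can re-derive the sample."""
    digest = hashlib.sha256(f"{salt}:{snapshot_id}".encode("utf-8")).digest()
    # Map the first 8 bytes to [0, 2**64) and select if below the rate threshold.
    return int.from_bytes(digest[:8], "big") < rate * 2**64
```

Both tiers carry the same base fields; only the additional metadata, retention, review rate, and storage guarantees differ.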

7. Cross-functional ownership model

A single function cannot own evidentiary controls end-to-end. The stable model is a shared evidence spine with distributed ownership.

- Product and Engineering implement capture and gating.

- AI Platform and Data maintain retrieval and model registries.

- Compliance defines boundaries and control requirements.

- Legal governs correction language and notification thresholds.

- Risk and Internal Audit test reconstructability through replay.

- Operations execute escalation and closure.

- Security protects integrity, access, and retention.

This distribution reflects how liability is assigned in practice.

8. Operating cadence

Controls degrade without use.

Effective organizations maintain:

- Weekly review of flagged outputs and issued corrections

- Monthly reconstructability sampling

- Quarterly incident simulations

- Evidence verification as a release gate for new workflows

Annual testing is insufficient.
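The last cadence item, evidence verification as a release gate, is the easiest to automate: run the new workflow against test inputs and block release if its evidence records are incomplete. A minimal sketch, assuming records are plain dictionaries; `REQUIRED_FIELDS` and `release_gate` are hypothetical names, and the field list is illustrative.

```python
# Hypothetical minimum field set, mirroring the evidence objects in section 4.
REQUIRED_FIELDS = {
    "snapshot_id", "timestamp_utc", "workflow_id", "content_sha256",
    "retrieval_refs", "policy_version", "control_assertions", "accountable_roles",
}


def release_gate(test_run_records: list[dict]) -> list[str]:
    """Return blocking problems; an empty list means the workflow's evidence is complete."""
    if not test_run_records:
        return ["test run produced no evidence records"]
    problems = []
    for i, rec in enumerate(test_run_records):
        missing = REQUIRED_FIELDS - set(rec)
        if missing:
            problems.append(f"record {i}: missing {sorted(missing)}")
        elif not all(rec["control_assertions"].values()):
            problems.append(f"record {i}: a required control did not fire")
    return problems
```

Treating a silent test run (no records at all) as a failure guards against the "silent bypass" risk, where instrumentation was never wired into a new workflow.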

9. Known implementation risks

Evidentiary mechanisms fail in predictable ways:

- Over-capture leading to storage and review overload

- Privacy conflicts between evidence retention and data minimization

- Partial instrumentation in legacy systems

- Cadence collapse due to staffing constraints

- Silent bypasses during product iteration

Naming these risks does not weaken the mechanism. It makes it governable.

10. Regulated examples

Financial services

AI-generated explanations used in suitability discussions can be reconstructed, traced to approved sources, and corrected after complaints.

Healthcare

AI-guided patient information can be evidenced at the orchestration level, including escalation logic and corrective actions, without claiming insight into model internals.

In both cases, defensibility derives from evidence, not confidence.

Conclusion

AI systems become systems of record through use, not declaration.

Once relied upon, organizations are judged not on whether an output was accurate, but on whether it was reconstructable, attributable, and correctable.

An evidentiary control mechanism is the minimum infrastructure required to meet that standard.

Appendix 1

A. Example evidence object schema (illustrative)

- Snapshot ID

- Timestamp (UTC)

- Channel and locale

- Workflow identifier

- Output content hash

- Prompt/template version

- Policy version

- Retrieval source IDs

- Control assertions (pass/fail)

- Accountable role IDs

- Correction references (if any)
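Expressed as a typed record, the schema can be validated at write time. A minimal sketch in Python; `EvidenceRecord` and its field names are hypothetical renderings of the list above, and the UTC check is one illustrative validation rather than a complete set.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class EvidenceRecord:
    snapshot_id: str
    timestamp_utc: str                     # ISO 8601 with explicit offset, e.g. "2024-05-01T09:30:00+00:00"
    channel: str
    locale: str
    workflow_id: str
    output_content_sha256: str
    template_version: str
    policy_version: str
    retrieval_source_ids: tuple[str, ...]
    control_assertions: dict[str, bool]    # control ID -> passed
    accountable_role_ids: dict[str, str]   # role -> person or team ID
    correction_refs: tuple[str, ...] = ()  # ledger entry IDs; empty if none

    def __post_init__(self) -> None:
        # Reject naive or non-UTC timestamps so records compare cleanly across regions.
        if datetime.fromisoformat(self.timestamp_utc).utcoffset() != timedelta(0):
            raise ValueError("timestamp must be UTC with an explicit offset")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)


record = EvidenceRecord(
    snapshot_id="snap-0042",
    timestamp_utc="2024-05-01T09:30:00+00:00",
    channel="web",
    locale="en-GB",
    workflow_id="suitability-explainer",
    output_content_sha256="0" * 64,  # placeholder digest
    template_version="suitability-explainer@7",
    policy_version="advice-policy@3",
    retrieval_source_ids=("kb-fees-2024",),
    control_assertions={"mandatory_disclaimer": True, "human_review": True},
    accountable_role_ids={"workflow_owner": "ops.wealth.lead"},
)
print(record.to_json())
```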

B. Example correction ledger entry

- Original snapshot ID

- Trigger (complaint, audit, monitoring)

- Correction type (clarification, retraction)

- Approved correction text

- Approval authority

- Notification scope

- Closure timestamp
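The same approach applies to correction entries. A minimal sketch; `CorrectionEntry` is a hypothetical name, and the allowed triggers and correction types are taken from the examples in the list above, so a real deployment may need more values.

```python
from dataclasses import dataclass
from typing import Optional

# Values listed in the appendix; a real deployment may need more.
TRIGGERS = {"complaint", "audit", "monitoring"}
CORRECTION_TYPES = {"clarification", "retraction"}


@dataclass(frozen=True)
class CorrectionEntry:
    original_snapshot_id: str
    trigger: str
    correction_type: str
    approved_text: str
    approval_authority: str
    notification_scope: str                      # e.g. "affected customers", "regulator"
    closure_timestamp_utc: Optional[str] = None  # None while the correction remains open

    def __post_init__(self) -> None:
        if self.trigger not in TRIGGERS:
            raise ValueError(f"unknown trigger: {self.trigger!r}")
        if self.correction_type not in CORRECTION_TYPES:
            raise ValueError(f"unknown correction type: {self.correction_type!r}")
        if not self.approval_authority.strip():
            raise ValueError("a correction cannot be recorded without an approval authority")
        if not self.approved_text.strip():
            raise ValueError("approved correction text is required")

    @property
    def is_closed(self) -> bool:
        return self.closure_timestamp_utc is not None


entry = CorrectionEntry(
    "snap-0042", "complaint", "clarification",
    "The annual fee is 0.45%, not 0.54%.", "legal.counsel", "affected customers",
)
print(entry.is_closed)  # False
```

Because the entry is frozen, closing a correction means appending a new entry that carries the closure timestamp, which keeps the ledger append-only.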

C. Minimum replay test

Select a snapshot at random and reconstruct:

- Delivered output

- Applied controls

- Approved sources

- Responsible owners

- Subsequent actions

Failure at any step indicates a control weakness.
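The replay test can be scripted so that it runs on a schedule rather than on request. A minimal sketch, assuming evidence records are dictionaries shaped like the schema in part A, delivered content is archived separately by snapshot ID, and the correction ledger is keyed by entry ID; `replay`, `minimum_replay_test`, and the field names are hypothetical.

```python
import hashlib
import random


def replay(record: dict, content_archive: dict[str, str], approved_sources: set[str],
           ledger: dict[str, dict]) -> dict[str, bool]:
    """Attempt each reconstruction step for one evidence record; True means the step succeeded."""
    content = content_archive.get(record["snapshot_id"])
    sources = record.get("retrieval_source_ids", [])
    return {
        "delivered_output": content is not None
        and hashlib.sha256(content.encode("utf-8")).hexdigest() == record["content_sha256"],
        # Reconstructable if assertions were recorded, whether they passed or not.
        "applied_controls": bool(record.get("control_assertions")),
        "approved_sources": bool(sources) and set(sources) <= approved_sources,
        "responsible_owners": bool(record.get("accountable_role_ids")),
        # Every referenced correction must resolve to a ledger entry.
        "subsequent_actions": all(ref in ledger for ref in record.get("correction_refs", [])),
    }


def minimum_replay_test(records: list[dict], content_archive: dict[str, str],
                        approved_sources: set[str], ledger: dict[str, dict],
                        seed: int) -> tuple[str, list[str]]:
    rng = random.Random(seed)  # seeded, so the random choice itself can be reproduced
    record = rng.choice(records)
    results = replay(record, content_archive, approved_sources, ledger)
    return record["snapshot_id"], [step for step, ok in results.items() if not ok]


text = "Fund A charges 0.45% a year. This is not personal advice."
records = [{
    "snapshot_id": "snap-0042",
    "content_sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),
    "control_assertions": {"mandatory_disclaimer": True},
    "retrieval_source_ids": ["kb-fees-2024"],
    "accountable_role_ids": {"workflow_owner": "ops.wealth.lead"},
    "correction_refs": ["corr-0007"],
}]
print(minimum_replay_test(records, {"snap-0042": text}, {"kb-fees-2024"}, {"corr-0007": {}}, seed=1))
# ('snap-0042', [])
```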

Appendix 2

This mechanism aligns with, but does not replace, existing standards.

ISO/IEC 42001

- Recordkeeping and traceability requirements

- Governance accountability

- Incident response documentation

NIST AI RMF

- Govern and Map functions

- Risk response and monitoring

- Accountability and transparency artifacts

EU AI Act (high-risk systems)

- Logging and traceability

- Human oversight evidence

- Post-market monitoring

Audit practice (cross-sector)

- Reconstructability

- Control operation evidence

- Corrective action traceability

The evidentiary control mechanism operationalizes these expectations at runtime.