What Uncontaminated Observation Can and Cannot Prove

Context

The previous analyses in this series identified two related problems: external AI systems now generate decision-relevant representations without organisational visibility, and optimisation-first approaches contaminate the evidentiary record organisations later need to evaluate their own interventions.

The governance implication follows directly:

Observation must precede intervention.

The next question is therefore methodological rather than philosophical:

What can uncontaminated observation demonstrate, and what remains beyond its epistemic reach?

This distinction matters. Observational capability is increasingly positioned as foundational to AI governance. Yet its evidentiary limits are rarely defined. Without clear articulation of those limits, organisations risk replacing one form of false certainty with another.

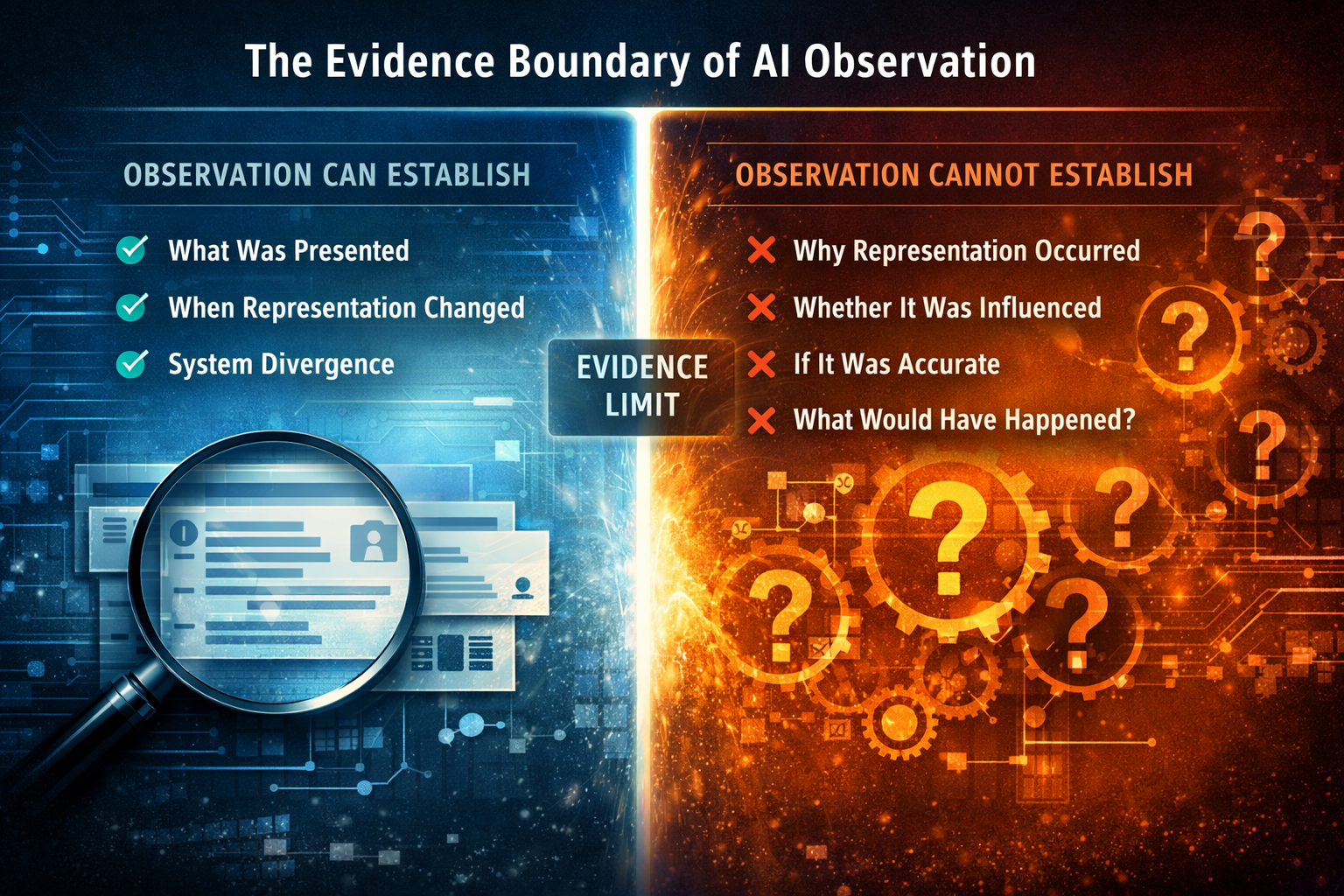

What Observation Establishes

Uncontaminated observation, meaning observation conducted without influencing the environment being studied, can establish three categories of evidence with reasonable confidence.

1. What Was Presented

Observation can document what AI systems returned for specific queries at specific times under defined execution conditions.

This creates a durable record of:

• Which entities appeared

• In what sequence

• With what framing

• With what comparative positioning

This record is evidentiary rather than interpretive. It captures what the system actually generated, not what observers believe it might have generated.
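One way to picture such a record is as a small, immutable data structure. The sketch below is illustrative only: the class names `EntityMention` and `ObservationRecord`, their fields, and the sample entities are assumptions made for this example, not a schema defined in this series.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class EntityMention:
    """One entity as it appeared in a single output (hypothetical schema)."""
    name: str
    position: int                        # 1-based order of appearance
    framing: str                         # verbatim wording used about the entity
    compared_with: tuple[str, ...] = ()  # entities it was positioned against


@dataclass(frozen=True)
class ObservationRecord:
    """What one system returned for one query at one time."""
    system: str
    query: str
    observed_at: datetime
    raw_output: str                      # verbatim output, stored unedited
    mentions: tuple[EntityMention, ...] = ()

    def entities_in_order(self) -> list[str]:
        """Entity names sorted by order of appearance."""
        return [m.name for m in sorted(self.mentions, key=lambda m: m.position)]


# Made-up sample data, for illustration only.
record = ObservationRecord(
    system="system-a",
    query="best accounting software for small firms",
    observed_at=datetime(2025, 1, 15, 9, 30, tzinfo=timezone.utc),
    raw_output="1. Acme Ledger ... 2. Beta Books ...",
    mentions=(
        EntityMention("Beta Books", 2, "a budget alternative", ("Acme Ledger",)),
        EntityMention("Acme Ledger", 1, "the most widely recommended option"),
    ),
)
print(record.entities_in_order())  # ['Acme Ledger', 'Beta Books']
```

Freezing the dataclasses and keeping `raw_output` verbatim reflects the distinction drawn above: the structured fields are a convenience for review, while the unedited output is the evidence.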

2. When Representation Changed

Longitudinal observation enables identification of shifts in how entities are represented across time.

This allows detection of:

• Substitution, where entity A is replaced by entity B

• Omission, where entity A disappears from outputs

• Reframing, where entity A is presented with altered attributes

• Instability, where entity A appears inconsistently across equivalent queries

These patterns are observable behavioural facts. They do not require inference regarding cause.
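The four patterns above can be sketched as simple comparisons between captured snapshots. This is a minimal illustration under stated assumptions: a snapshot is reduced to a mapping from entity name to framing text, the function names are hypothetical, and reading "a disappearance alongside a newcomer" as substitution is a heuristic chosen for the example. Nothing in the code infers why a change occurred.

```python
Snapshot = dict[str, str]  # entity name -> framing text, for one captured output


def classify_change(before: Snapshot, after: Snapshot, entity: str) -> str:
    """Label how one entity's representation differs between two snapshots."""
    if entity not in before:
        return "not_in_baseline"
    if entity not in after:
        newcomers = set(after) - set(before)
        # Heuristic: a disappearance alongside a newcomer is read as substitution.
        return "substitution" if newcomers else "omission"
    if before[entity] != after[entity]:
        return "reframing"
    return "unchanged"


def appearance_rate(runs: list[Snapshot], entity: str) -> float:
    """Share of equivalent-query runs in which the entity appeared."""
    if not runs:
        raise ValueError("at least one run is required")
    return sum(entity in run for run in runs) / len(runs)


# Made-up snapshots, for illustration only.
january = {"Acme": "market leader", "Beta": "budget option"}
march = {"Acme": "legacy provider", "Gamma": "budget option"}

print(classify_change(january, march, "Acme"))  # reframing
print(classify_change(january, march, "Beta"))  # substitution

# Instability: the entity appears in some equivalent runs but not all.
rate = appearance_rate([january, march, january], "Beta")
print(0 < rate < 1)  # True
```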

3. Whether Divergence Exists Across Systems

Cross-system observation can document whether different AI systems present the same entity differently.

This reveals:

• Representational inconsistency

• Competitive positioning variance

• Authority signal conflict

These observations describe externally visible system behaviour. They do not constitute claims regarding internal model mechanics.
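As a rough illustration, divergence across systems can be summarised by comparing the sets of entities each system presented for the same query. The sketch below uses Jaccard overlap as one possible measure; the choice of measure, the function names, and the sample data are assumptions made for this example. It describes only what was visibly returned.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two entity sets: 1.0 means identical, 0.0 means disjoint."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def pairwise_overlap(outputs: dict[str, set[str]]) -> dict[tuple[str, str], float]:
    """Jaccard overlap for every pair of systems, keyed by sorted system names."""
    return {
        (s1, s2): jaccard(outputs[s1], outputs[s2])
        for s1, s2 in combinations(sorted(outputs), 2)
    }


def contested_entities(outputs: dict[str, set[str]]) -> set[str]:
    """Entities presented by at least one system but not by all of them."""
    sets = list(outputs.values())
    return set.union(*sets) - set.intersection(*sets)


# Made-up outputs for the same query on three systems.
outputs = {
    "system-a": {"Acme", "Beta"},
    "system-b": {"Acme", "Gamma"},
    "system-c": {"Acme", "Beta"},
}
overlap = pairwise_overlap(outputs)
print(overlap[("system-a", "system-c")])    # 1.0
print(sorted(contested_entities(outputs)))  # ['Beta', 'Gamma']
```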

What Observation Does Not Establish

Uncontaminated observation has defined epistemic boundaries. It cannot determine several categories of causal or counterfactual knowledge.

Recognising these limitations is essential to preserving evidentiary integrity.

1. Why Representation Occurred

Observation records outputs. It does not expose causal logic.

External AI systems typically do not reveal:

• Signal weighting

• Training dataset influence

• Retrieval prioritisation logic

• Policy constraint application

Observation can demonstrate that entity substitution occurred. It cannot prove whether that substitution resulted from training differences, retrieval source availability, policy filtering, competitor optimisation, or stochastic model variation.

Causation remains structurally opaque.

2. Whether Representation Was Influenced

Even with a preserved observational record, it is rarely possible to determine whether outputs reflect organic model behaviour or the accumulated effects of optimisation activity by any party.

AI systems do not label outputs as influenced or uninfluenced. They do not distinguish between first-party signals, competitor signals, or ambient information ingestion.

Observation can demonstrate that representation changed. It cannot reliably establish whether the decision environment was already influenced before observation began.

3. Whether Representation Is Accurate

Observation documents what was presented. It does not validate whether those presentations are factually correct, complete, or proportionate.

An AI system may produce internally coherent outputs derived from incomplete, adversarial, or distorted information signals. Observation preserves the output record, not the truth value of the underlying information.

Verification remains analytically and procedurally distinct from observation.

4. What Would Have Happened Otherwise

Observation captures realised system behaviour. It cannot establish counterfactual outcomes.

Questions framed as:

"What would this system have presented if X had not occurred?"

remain inherently speculative, regardless of how long the observation record extends.

Observation produces historical evidence. It does not generate alternate behavioural timelines.

The Forensic Value of Partial Evidence

These epistemic limitations do not weaken the value of observation. They clarify its function.

Uncontaminated observation is not a truth-determination mechanism. It is an evidence-preservation mechanism.

Within governance and legal frameworks, preserved evidence performs a specific role: it constrains debate to documented facts rather than contested recollections or retrospective inference.

This enables:

• Testing contested claims against preserved outputs

• Establishing decision-relevant conditions at the moment reliance occurred

• Constraining causal arguments to observable behavioural boundaries

Observation cannot prove why representation occurred. It can eliminate unsupported claims about what systems presented.

Why Methodological Discipline Matters

If observational capability is to serve governance functions, it must meet procedural integrity standards.

Three methodological requirements are foundational.

Non-Interference

Observation must avoid introducing signals that alter system behaviour.

This requires:

• Query execution that mirrors authentic decision contexts

• Absence of optimisation signal injection during observation windows

• Operational separation between measurement infrastructure and influence activity

Without this separation, monitoring itself becomes an intervention. The evidentiary record is compromised.

Temporal Preservation

AI outputs are inherently ephemeral. Once a session concludes, exact output reconstruction is frequently impossible.

Observation must therefore create durable, reviewable records that preserve decision-relevant outputs at the moment they occur.

Temporal preservation converts transient outputs into evidentiary artefacts.
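A minimal sketch of what such preservation might look like in practice: each captured output is wrapped with a capture timestamp and a SHA-256 digest, and each record carries the digest of the previous one so that later edits become detectable. The record layout and the function names `seal` and `verify` are assumptions made for this example. A digest of this kind shows whether a record was altered after capture; it does not by itself prove when the capture took place.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record body."""
    canonical = json.dumps(body, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def seal(output_text: str, query: str, system: str, prev_digest: str = "") -> dict:
    """Wrap one captured output in a timestamped, hash-sealed record."""
    body = {
        "system": system,
        "query": query,
        "captured_at": datetime.now(timezone.utc).isoformat(),
        "output": output_text,
        "prev_digest": prev_digest,  # links records into a tamper-evident chain
    }
    return {**body, "digest": _digest(body)}


def verify(record: dict) -> bool:
    """Recompute the digest and compare it with the stored one."""
    body = {k: v for k, v in record.items() if k != "digest"}
    return _digest(body) == record["digest"]


# Made-up captures, for illustration only.
first = seal("Acme is the market leader.", "best provider?", "system-a")
second = seal("Gamma is the market leader.", "best provider?", "system-a",
              prev_digest=first["digest"])

print(verify(first), verify(second))  # True True
tampered = {**first, "output": "Beta is the market leader."}
print(verify(tampered))               # False
```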

Reproducibility Constraints

Observation must document the conditions under which outputs were generated, including:

• Query structure

• Execution environment

• Model version identifiers where available

• Temporal context

Reproducibility in this sense does not require identical outputs to be regenerated; AI systems are probabilistic by design.

It requires that the observation procedure itself is documented well enough to be evaluated for methodological soundness.
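The documented conditions listed above can be sketched as a small record that makes gaps explicit rather than hiding them. The class name `ObservationConditions`, its field names, and the sample values are assumptions made for this example; the model version is optional because, as noted above, it is recorded only where available.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class ObservationConditions:
    """Conditions under which one output was generated (hypothetical schema)."""
    query_structure: str
    execution_environment: str
    temporal_context: str
    model_version: Optional[str] = None  # recorded only where the system exposes it

    def undocumented(self) -> list[str]:
        """Names of conditions left empty, so reviewers can see gaps explicitly."""
        return [name for name, value in asdict(self).items() if not value]


# Made-up values, for illustration only.
conditions = ObservationConditions(
    query_structure="single-turn question, no prior context",
    execution_environment="web interface, logged out",
    temporal_context="2025-01-15T09:30:00Z",
)
print(conditions.undocumented())  # ['model_version']
```

A reviewer assessing methodological soundness can then see at a glance which conditions were captured and which were unavailable, without needing the output itself to be regenerated.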

The Strategic Constraint Observation Imposes

Organisations operating under optimisation-first models often perceive observation as delay.

It is more accurately understood as sequencing discipline.

Observation does not prevent intervention. It establishes a reference state against which intervention outcomes can be evaluated.

This reference does not establish causation. It constrains speculation about baseline conditions.

In certain cases, observation may demonstrate that intervention introduces greater risk than benefit.

Restraint is a valid and governance-aligned outcome of structured observation.

From Capability to Governance Requirement

As AI systems transition from advisory tools to decision infrastructure, the absence of uncontaminated observation increasingly constitutes a governance failure.

Organisations cannot credibly demonstrate responsible oversight if they cannot establish what information environments stakeholders encountered at the moment decisions were influenced.

Observation before intervention is therefore not an optimisation best practice.

It is an evidentiary prerequisite.

Observation does not solve governance risk. It establishes the evidentiary foundation upon which risk evaluation, challenge, and intervention can occur with defensible integrity.

Conclusion

Uncontaminated observation can establish:

• What was presented

• When representation changed

• Whether divergence exists across systems

It cannot establish:

• Why representation occurred

• Whether representation was influenced

• Whether representation was accurate

• What would have happened under alternative conditions

These limitations do not diminish observational value. They define it.

In environments where AI-mediated representation increasingly shapes economic, clinical, and regulatory decisions, the capacity to preserve evidence of what systems actually presented is likely to become the minimum defensible starting point for organisational governance.

This article concludes a three-part series establishing the governance case for observational infrastructure. Future AIVO Journal analyses will examine sector-specific application models and emerging supervisory expectations.