When AI Advice Fails: How Reasoning Visibility Determines Defensibility

AIVO Journal — Governance Case Study (2026)

Executive summary

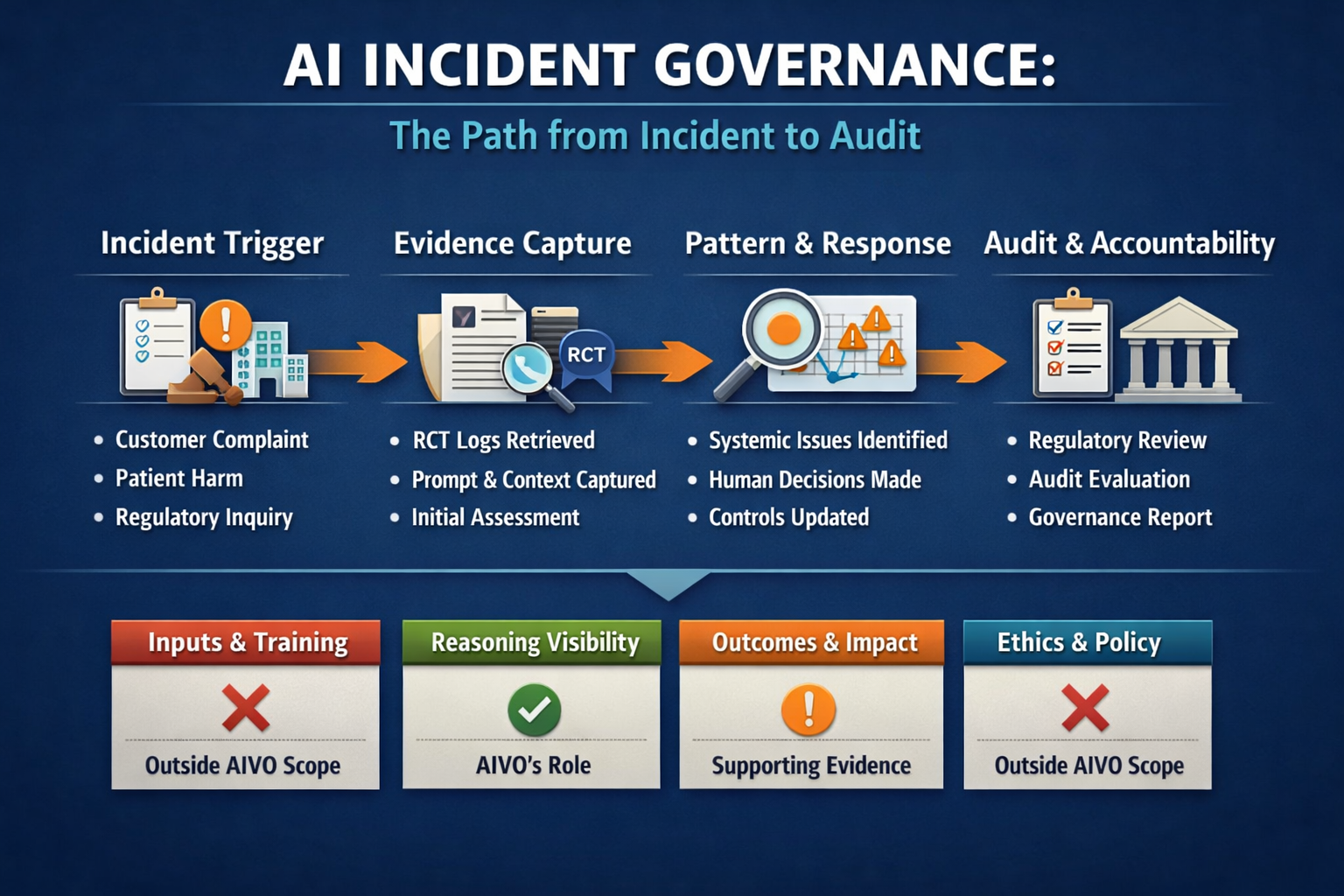

By 2026, AI failures in regulated environments are no longer edge cases. They are operational facts. What distinguishes resilient organizations from exposed ones is not whether failures occur, but whether those failures are inspectable, attributable, and governable after the fact.

This article examines two realistic 2026 incidents, one in financial services and one in healthcare, to show how AIVO Standard functions as a governance primitive during incident response, where its limits are explicit, and how it aligns with emerging regulatory expectations without overclaiming.

The conclusion is deliberately narrow: AIVO does not make AI correct, fair, or safe. It determines whether failure becomes indefensible.

Case 1: Financial services — product misrepresentation through AI summarization

Incident trigger

A retail banking customer files a formal complaint alleging they were misled by an AI-generated product summary presented inside the bank’s digital assistant.

The assistant described a savings product as “low risk and suitable for short-term liquidity needs.” The product carried early withdrawal penalties that materially contradicted that framing.

The complaint is escalated internally as a regulated financial advice risk.

Immediate containment

The bank:

- Temporarily disables the affected product summary template

- Leaves the assistant live to avoid broader service disruption

- Makes no admission of fault pending investigation

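This sequence matters: the bank limits further exposure and withholds any conclusion until the evidence has been retrieved. Governance begins with evidence preservation, not narrative control.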

Evidence retrieval (AIVO in practice)

Compliance does not ask why the model “thought” this. It asks:

What exactly was said, on what basis, and can we reproduce it?

From the AI orchestration layer, the bank retrieves:

- Prompt and configuration version identifiers

- Retrieval context

- Model version

- Reasoning Claim Tokens (RCTs) associated with the response

The RCTs show:

- An explicit assertion of suitability

- Reliance on generalized customer behavior (“typical usage”)

- Absence of penalty disclosure

- No explicit disclaimers
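The retrieved artifacts and the four findings above can be pictured as a small data structure plus a presence check. This is a minimal sketch under stated assumptions: the source does not define an RCT schema, so the field names (`claim_type`, `text`, `basis`), the claim-type labels, and every identifier and version string below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReasoningClaimToken:
    """Hypothetical RCT shape: one claim the response made and what it leaned on."""
    claim_type: str  # e.g. "suitability_assertion", "penalty_disclosure", "disclaimer"
    text: str        # wording shown to the customer
    basis: str       # stated basis for the claim, e.g. "typical usage"


@dataclass(frozen=True)
class EvidenceRecord:
    """Artifacts retrieved from the orchestration layer for a single response."""
    response_id: str
    prompt_version: str
    config_version: str
    model_version: str
    retrieval_context: tuple
    rcts: tuple = ()


def summarize_findings(record: EvidenceRecord) -> dict:
    """Report which claim types are present or absent; says nothing about correctness."""
    claim_types = {rct.claim_type for rct in record.rcts}
    return {
        "asserts_suitability": "suitability_assertion" in claim_types,
        "relies_on_typical_usage": any(r.basis == "typical usage" for r in record.rcts),
        "discloses_penalty": "penalty_disclosure" in claim_types,
        "includes_disclaimer": "disclaimer" in claim_types,
    }


# Illustrative record only; all identifiers are invented for this sketch.
record = EvidenceRecord(
    response_id="resp-001",
    prompt_version="summary-template-v7",
    config_version="assistant-config-v12",
    model_version="model-2026-01",
    retrieval_context=("product sheet: fixed-term saver",),
    rcts=(
        ReasoningClaimToken(
            claim_type="suitability_assertion",
            text="low risk and suitable for short-term liquidity needs",
            basis="typical usage",
        ),
    ),
)

findings = summarize_findings(record)
```

The check only reports what was and was not claimed. Whether the claim was appropriate remains a judgment for Compliance and Legal.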

AIVO makes the failure inspectable, not correctable.

Accountability and scope expansion

Legal concludes the language constitutes implicit advice. Responsibility attaches to the deploying institution, not the model vendor.

The investigation then expands:

- RCTs are queried across similar products and channels

- A recurring framing pattern is identified

- The issue is reclassified as systemic exposure, not an isolated error
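The move from a single case to a pattern can be sketched as a grouped count over stored RCTs. The row layout, the claim-type labels, and the `min_groups` threshold are assumptions made for illustration; the source does not describe how RCTs are stored or queried, and the rows are invented sample data.

```python
from collections import Counter

# Illustrative rows only: one per stored response, with the claim types its RCTs carry.
rows = [
    {"product": "fixed-saver", "channel": "app", "claim_types": {"suitability_assertion"}},
    {"product": "fixed-saver", "channel": "web", "claim_types": {"suitability_assertion"}},
    {"product": "notice-account", "channel": "app", "claim_types": {"suitability_assertion"}},
    {"product": "notice-account", "channel": "app",
     "claim_types": {"suitability_assertion", "penalty_disclosure"}},
    {"product": "easy-access", "channel": "app", "claim_types": {"rate_statement"}},
]


def is_flagged(row):
    """A response is flagged when it asserts suitability without disclosing penalties."""
    types = row["claim_types"]
    return "suitability_assertion" in types and "penalty_disclosure" not in types


def framing_pattern(rows):
    """Count flagged responses per (product, channel) pair."""
    return Counter((r["product"], r["channel"]) for r in rows if is_flagged(r))


def is_systemic(pattern, min_groups=2):
    """Hypothetical rule: framing seen in several product/channel pairs is systemic."""
    return len(pattern) >= min_groups


pattern = framing_pattern(rows)
```

In this sample the flagged framing appears in three product and channel combinations, so `is_systemic(pattern)` is true. The reclassification itself would still be a human decision; the threshold here is only a placeholder.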

This shift, from case to pattern, is where AIVO’s value compounds.

Remediation

Humans decide:

- Suitability language is prohibited

- Penalty disclosure is forced at retrieval

- Guardrails block advisory framing

- Customer remediation is approved

AIVO informs these decisions. It does not make them.
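As an illustration of how the first three remediation decisions might be enforced, the sketch below shows a pre-delivery check in the bank's own orchestration layer. It is not an AIVO feature: the function name `apply_guardrails`, the phrase patterns, and the choice to raise an error rather than rewrite the text are all assumptions.

```python
import re
from typing import Optional

# Hypothetical phrase list; real wording rules would come from Legal and Compliance.
ADVISORY_PATTERNS = [
    re.compile(r"\bsuitable for\b", re.IGNORECASE),
    re.compile(r"\blow[- ]risk\b", re.IGNORECASE),
    re.compile(r"\bwe recommend\b", re.IGNORECASE),
]


def apply_guardrails(summary: str, penalty_disclosure: Optional[str]) -> str:
    """Block advisory framing and require a penalty disclosure before delivery."""
    for pattern in ADVISORY_PATTERNS:
        if pattern.search(summary):
            raise ValueError(f"advisory framing blocked: {pattern.pattern!r}")
    if not penalty_disclosure:
        # The retrieval step is expected to supply this text for every product summary.
        raise ValueError("penalty disclosure missing from retrieval context")
    return f"{summary} {penalty_disclosure}"


safe_summary = apply_guardrails(
    "This account pays a fixed rate for 12 months.",
    "Early withdrawal incurs a penalty.",
)
```

The summary quoted in the complaint ("low risk and suitable for short-term liquidity needs") would be rejected by this check, while a purely descriptive summary passes with the disclosure appended.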

Case 2: Healthcare — under-escalation in AI symptom triage

Incident trigger

A patient reports chest tightness and fatigue through a provider’s AI symptom triage assistant. The system responds with reassurance language and suggests home monitoring.

The patient is later hospitalized. The case triggers clinical governance review.

Evidence retrieval

Using AIVO artifacts, reviewers identify:

- RCTs framing symptoms as “often benign”

- Reliance on population prevalence language

- Omission of internal red-flag escalation rules

The assistant made a normative risk judgment that conflicted with institutional clinical policy.

AIVO again shows what was said and how it was framed. It does not establish medical causality.

Pattern analysis and response

Aggregated RCTs reveal:

- Similar reassurance framing in a meaningful percentage of cardiac-related interactions

- Strong correlation with vague symptom descriptions
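One way to examine the relationship between reassurance framing and vague symptom descriptions is to compare framing rates across the two groups. The sketch below uses invented toy rows and hypothetical field names (`vague_description`, `reassurance_framing`); it does not reproduce the provider's data, and a rate comparison is a simpler stand-in for whatever analysis the reviewers actually ran.

```python
# Toy interactions only; the values are invented to show the calculation.
interactions = [
    {"vague_description": True, "reassurance_framing": True},
    {"vague_description": True, "reassurance_framing": True},
    {"vague_description": True, "reassurance_framing": True},
    {"vague_description": True, "reassurance_framing": False},
    {"vague_description": False, "reassurance_framing": True},
    {"vague_description": False, "reassurance_framing": False},
    {"vague_description": False, "reassurance_framing": False},
    {"vague_description": False, "reassurance_framing": False},
]


def reassurance_rate(interactions, vague):
    """Share of interactions in the chosen group whose RCTs carry reassurance framing."""
    subset = [i for i in interactions if i["vague_description"] == vague]
    if not subset:
        return 0.0
    return sum(i["reassurance_framing"] for i in subset) / len(subset)


rate_vague = reassurance_rate(interactions, vague=True)
rate_specific = reassurance_rate(interactions, vague=False)
```

A gap between the two rates would point reviewers toward the vague-description cases first. It says nothing about clinical outcomes, which remain outside what the artifacts can show.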

Remediation includes:

- Mandatory urgent-care framing for cardiac clusters

- Removal of prevalence-based reassurance language

- Expanded sampling for clinical risk categories

In healthcare, AIVO does not reduce accountability. It reduces ambiguity.

The governance lesson across both cases

Both incidents share four properties:

- Harm emerges from reasonable-sounding language

- Internal model reasoning is inaccessible

- Responsibility attaches to deployment, not technology

- Governance quality is judged after failure

AIVO operates precisely in that post-hoc window.

Control map: where AIVO fits and where it does not

| Control surface | Primary owner | AIVO role |
|---|---|---|
| Input governance (data, prompts, tools) | Product, Data Governance | None |
| Inference governance | AI Risk, Compliance | Primary evidentiary layer |
| Outcome governance | Risk, Safety, Quality | Supporting evidence only |
| Normative authority | Legal, Ethics, Policy | None |
| Assurance and audit | Internal Audit, Regulators | Evidence source |

This map is intentional. It prevents AIVO from being mistaken for a complete governance solution.

Regulatory alignment (without overreach)

EU AI Act (as applied in 2026)

In high-risk contexts such as financial advice and medical triage, regulators expect:

- Traceability of system outputs

- Post-hoc inspection capability

- Documented human oversight

- Incident response and remediation

AIVO supports these expectations at the inference layer only.

It does not address training data governance, pre-market conformity, or outcome safety guarantees.

ISO/IEC 42001

ISO/IEC 42001 emphasizes repeatable governance processes, not explainability.

In both cases:

- AIVO functions as an operational evidence input

- Humans retain decision authority

- Incidents drive corrective action and control improvement

This is consistent with a management-system model of AI governance.

What AIVO enables, precisely

- Inspection of AI-mediated claims

- Pattern detection across many decisions

- Clear attribution of responsibility

- Defensible regulatory and audit narratives

What AIVO explicitly does not provide

- Truth validation

- Fairness certification

- Safety guarantees

- Ethical resolution

These exclusions are boundary conditions, not gaps.

Final governance conclusion

AIVO does not prevent AI failures. It determines whether those failures become systemic liabilities or containable events.

In regulated environments, that distinction is decisive.

Download the briefing

AI Incident Governance in Practice (2026)

A concise briefing for CROs, GCs, compliance leaders, and regulators, including:

- The full financial services and healthcare incident walkthroughs

- AIVO’s role and limits mapped to EU AI Act and ISO/IEC 42001

- A practical control map with ownership and accountability

- Guidance on how regulators should interpret reasoning-visibility artifacts

One-sentence takeaway

In 2026, AI governance is judged not by how systems perform when everything works, but by how organizations respond when AI fails, and that is the moment AIVO governs.