When AI Health Advice Fails, the Failure Is Not Accuracy. It Is Evidence.

AIVO Journal — Governance Commentary

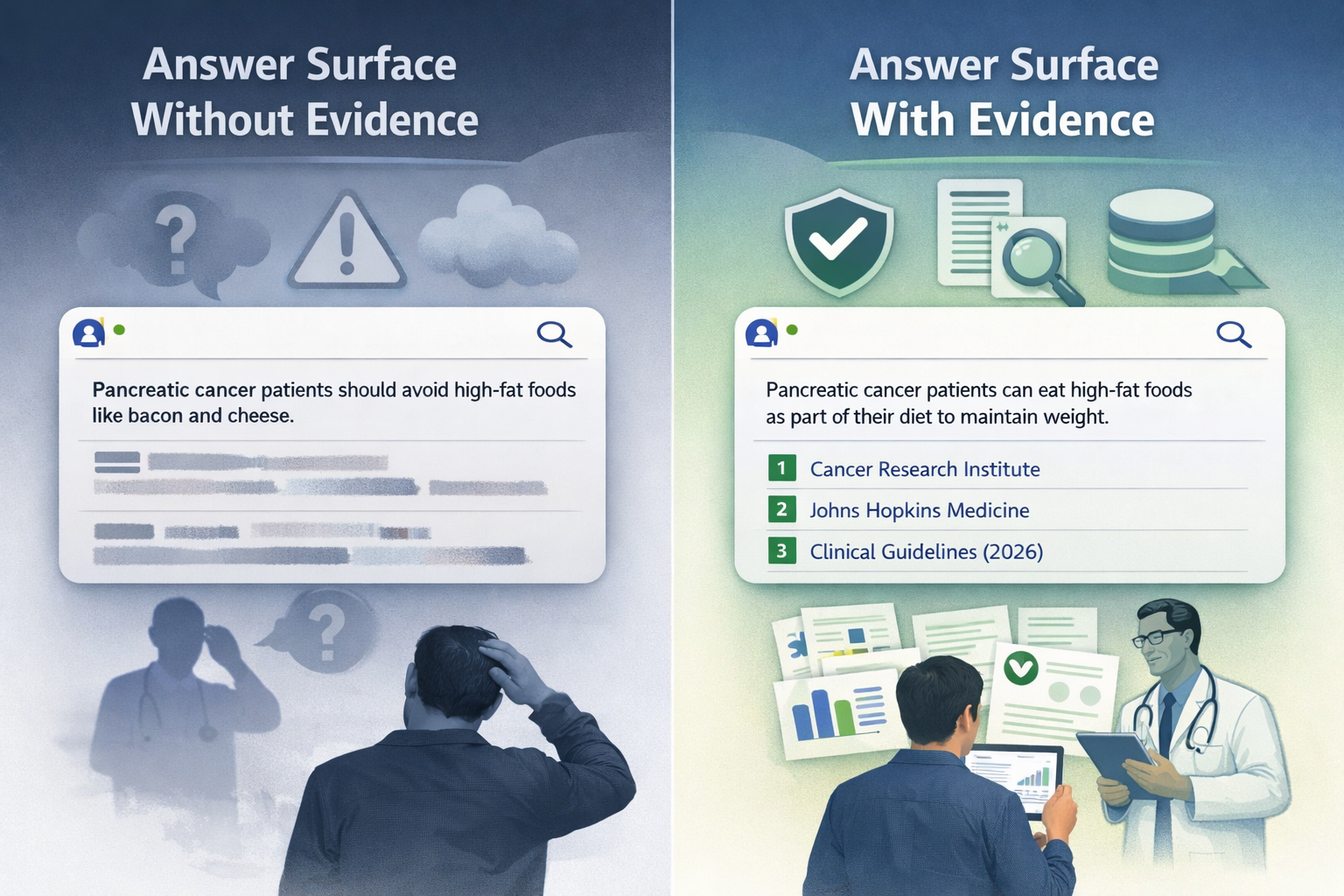

On January 2, 2026, The Guardian reported multiple cases in which Google’s AI Overviews delivered misleading or potentially harmful health information to users. The examples were unexceptional: dietary guidance presented to pancreatic cancer patients, interpretations of liver test results, and descriptions of routine screening procedures. These were not adversarial prompts or edge cases. They were ordinary health queries posed through a mass distribution interface.

The importance of these incidents does not lie in whether the advice was right or wrong. It lies in what could not be established afterward.

Once challenged, neither users nor the platform could reliably reconstruct what had been shown, why it had been shown, or which claims and sources were operative at the moment the overview was delivered. The discussion shifted to screenshots, recollections, and general assurances about quality controls.

That outcome reflects a governance failure, not a media controversy and not a narrow technical defect.

AI as a medical representation surface

AI Overviews occupy a privileged position above traditional search results. They are presented as synthesized answers rather than exploratory summaries, and they are consumed as such. In health-related contexts, this presentation crosses a threshold. The system is no longer merely retrieving information. It is delivering a representation that can influence patient behavior and downstream clinical conversations.

At that point, the relevant governance question changes. It is no longer whether the system is usually accurate. It becomes whether a specific representation can be reconstructed, inspected, and defended if it is later questioned.

In the reported cases, that condition was not met.

The reconstruction gap

Google responded that AI Overviews are generally accurate, rely on reputable sources, and that some examples were based on incomplete captures. These statements may be correct. They do not resolve the underlying issue.

Without a contemporaneous evidence artifact, it is not possible to establish with confidence:

- The exact wording shown to the user.

- The specific medical claims that were made.

- The citations or sources displayed at that moment.

- Whether warnings or disclaimers were present.

- Whether the output was stable across repeated queries.

In regulated domains, the inability to answer these questions constitutes a reconstruction gap. Once a representation is challenged, the absence of reconstructable evidence prevents meaningful investigation, remediation, or supervisory review.
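The last question in the list above, stability across repeated queries, can be illustrated with a short sketch. It assumes that the rendered text of each repeated query has already been captured as a string; the function names and the normalization rule (collapse whitespace, ignore case) are illustrative choices, not part of any published specification.

```python
import hashlib
from collections import Counter


def fingerprint(rendered_text: str) -> str:
    """Hash the rendered text after collapsing whitespace and lowercasing.

    The normalization rule is an assumption made for this sketch.
    """
    normalized = " ".join(rendered_text.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def stability_summary(captures: list[str]) -> dict:
    """Summarize how many distinct outputs appeared across repeated captures."""
    if not captures:
        raise ValueError("at least one capture is required")
    counts = Counter(fingerprint(text) for text in captures)
    _, modal_count = counts.most_common(1)[0]
    return {
        "captures": len(captures),
        "distinct_outputs": len(counts),
        # Share of captures that match the most frequent output.
        "modal_share": modal_count / len(captures),
    }
```

A summary of this kind says nothing about whether any output was correct. It records only whether the same query produced the same wording, which is the question a reviewer would otherwise have to answer from screenshots.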

Accuracy cannot be governed retroactively

Healthcare guidance is contextual. Recommendations depend on patient characteristics, comorbidities, and clinical intent. When an AI output is detached from its generation context, disputes over correctness become interpretive rather than analytical.

This is why accuracy is an insufficient primary control objective. Systems are governable not because they never err, but because errors can be examined with evidence.

Similar control transitions have occurred in other regulated settings. In financial advice, algorithmic trading, and automated credit decisions, the decisive shift did not occur when systems became error-free. It occurred when regulators required records that allowed decisions to be reconstructed. The present situation is analogous in structure, not identical in substance.

Mapping the failure to AIVO Standard

AIVO Standard is an AI Verification and Oversight framework focused on external representations and evidentiary control. Under this framework, the incidents reported in January 2026 would be classified as external representation failures lacking reconstructive evidence.

Specifically:

- A medically actionable representation was delivered.

- No time-indexed evidence artifact was captured at generation.

- No structured claim map existed to distinguish supported from unsupported assertions.

- No stability record existed to assess whether the output drifted.

- Responsibility for the representation could not be clearly attributed.

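The failure occurs before any harm does: it arises at the moment a medically actionable representation is delivered without evidence capture. The system should not present such representations in that form unless evidence capture is possible.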
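The structured claim map mentioned above can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `Claim` type, the `source_ids` field, and the `split_by_support` function are hypothetical names, and "supported" here means only that a claim is linked to at least one source displayed to the user, not that the claim is medically correct.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """One actionable assertion extracted from a rendered answer (hypothetical type)."""
    text: str
    source_ids: tuple[str, ...] = ()


def split_by_support(claims, displayed_source_ids):
    """Separate claims linked to a displayed source from claims with no such link."""
    displayed = set(displayed_source_ids)
    supported, unsupported = [], []
    for claim in claims:
        if displayed.intersection(claim.source_ids):
            supported.append(claim)
        else:
            unsupported.append(claim)
    return supported, unsupported
```

Even a map this simple changes the character of a dispute: instead of arguing about an answer as a whole, a reviewer can ask which individual assertions had no displayed source behind them.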

Reasoning Claim Tokens as evidence artifacts

Reasoning Claim Tokens, or RCTs, are defined within AIVO Standard as claim-bearing evidence artifacts. They are not truth validators and they do not expose proprietary model internals. Their function is to record observable system behavior at the moment an AI-mediated representation is delivered.

For health-related outputs, an RCT bundle would minimally include:

- The exact user query and system context.

- The full text of the response as rendered.

- A structured extraction of actionable claims.

- The provenance surface visible to the user.

- A timestamp and stability fingerprint.

With such an artifact, governance questions become answerable. Without it, they do not.

Importantly, RCTs rely only on externally observable data. This makes them operationally feasible and compatible with regulatory expectations that do not require access to internal model reasoning.
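The minimal bundle listed above can be sketched as a simple record type. This is an illustration only, not the AIVO Standard schema: the class name, the field names, and the choice of SHA-256 are assumptions, and the fingerprint deliberately excludes the timestamp so that identical outputs captured at different times can be compared.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


def _utc_now() -> str:
    """Current time as an ISO 8601 string in UTC."""
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class RCTBundle:
    """Hypothetical record of what was observably shown to a user."""
    query: str                            # exact user query
    system_context: dict                  # e.g. locale, surface, device (assumed keys)
    rendered_text: str                    # full response text as rendered
    actionable_claims: tuple[str, ...]    # structured extraction of claims
    visible_sources: tuple[str, ...]      # provenance surface shown to the user
    captured_at: str = field(default_factory=_utc_now)

    def stability_fingerprint(self) -> str:
        """Hash the observable content only, leaving out the capture time."""
        payload = json.dumps(
            {
                "query": self.query,
                "rendered_text": self.rendered_text,
                "actionable_claims": list(self.actionable_claims),
                "visible_sources": list(self.visible_sources),
            },
            sort_keys=True,
            ensure_ascii=False,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Every field in this sketch can be populated from what the user's screen displayed, which is the property that makes such an artifact feasible without access to model internals.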

Why disclaimers do not resolve the risk

Disclaimers alter liability framing. They do not create evidence.

A system that prominently presents a synthesized answer while stating that it is not medical advice is still making a representation that users act upon. In regulatory and supervisory contexts, the presence of a disclaimer does not substitute for the ability to reconstruct what was presented.

Without evidence, disclaimers increase ambiguity rather than control.

A quiet reclassification

What the January 2026 reporting illustrates is an early stage of reclassification. AI health advice failures are increasingly treated not as model anomalies, but as internal control weaknesses once specific outputs are challenged.

This pattern has appeared before. Once reconstruction becomes technically feasible, the absence of evidence is no longer viewed as inevitable. It becomes a governance decision.

The takeaway

The issue exposed by AI Overviews is not unique to one platform, and it cannot be solved through incremental accuracy improvements alone.

Once AI systems operate as answer surfaces in regulated domains, contemporaneous evidence capture becomes a prerequisite for legitimacy. Systems that cannot produce inspectable artifacts will face growing resistance, regardless of average performance metrics.

Governance does not begin with better models. It begins with better records.