When AI Meeting Notes Become Legal Evidence

Why BIPA-style lawsuits expose an evidence gap, not a transcription problem

The recent Illinois Biometric Information Privacy Act case against Fireflies.AI Corp has largely been framed as a biometric privacy dispute. That framing is legally correct but analytically incomplete. The more consequential issue is not voice recognition accuracy or transcription quality. It is evidence failure in systems that now routinely record, summarize, and store human speech.

AI meeting assistants have crossed a quiet threshold. Their outputs are no longer disposable productivity aids. In legal, compliance, HR, and audit contexts, transcripts and summaries are increasingly relied upon as records. Once that reliance exists, scrutiny shifts. Regulators and plaintiffs stop asking whether the AI was accurate and start asking whether the organization can reconstruct what actually happened.

Most cannot.

The reliance threshold regulators are probing

The risk does not arise simply because a meeting was recorded. It arises because downstream artifacts are treated as authoritative.

In the Fireflies complaint, the most damaging allegations are not about errors in transcription. They focus on the absence of provable answers to basic questions:

- What exactly was captured during the meeting

- Whether biometric identifiers were derived, implicitly or explicitly

- Who was informed and who was not

- What artifacts were stored, reused, or shared

- Under what declared controls these actions occurred

Policies, privacy notices, and vendor assurances do not answer these questions after the fact. Logs alone rarely help either. AI meeting systems are non-deterministic and context dependent. You cannot replay the meeting to prove compliance. By the time a claim surfaces, intent is irrelevant. What matters is whether there is reconstructable evidence of execution.

This is why BIPA-style cases are so destabilizing. They convert silent data exhaust into contested legal evidence.

Similar theories have already been raised against other AI transcription providers, reinforcing that this is a pattern, not a one-off.

Why traditional AI governance fails under litigation pressure

Most enterprise AI governance programs emphasize prevention: model reviews, accuracy testing, consent language, and internal policies. These controls matter, but they fail in adversarial settings for one reason.

They are not evidence.

Courts and regulators do not ask whether an organization had a policy. They ask what happened in a specific interaction. In AI meeting systems, that interaction is the moment of capture and derivation.

Once a transcript or summary is relied upon, the organization must be able to show, credibly and precisely:

- what inputs were captured

- what derivatives were produced

- what was exposed downstream

- what was relied upon as a record

Without that, even a technically compliant deployment becomes legally fragile.

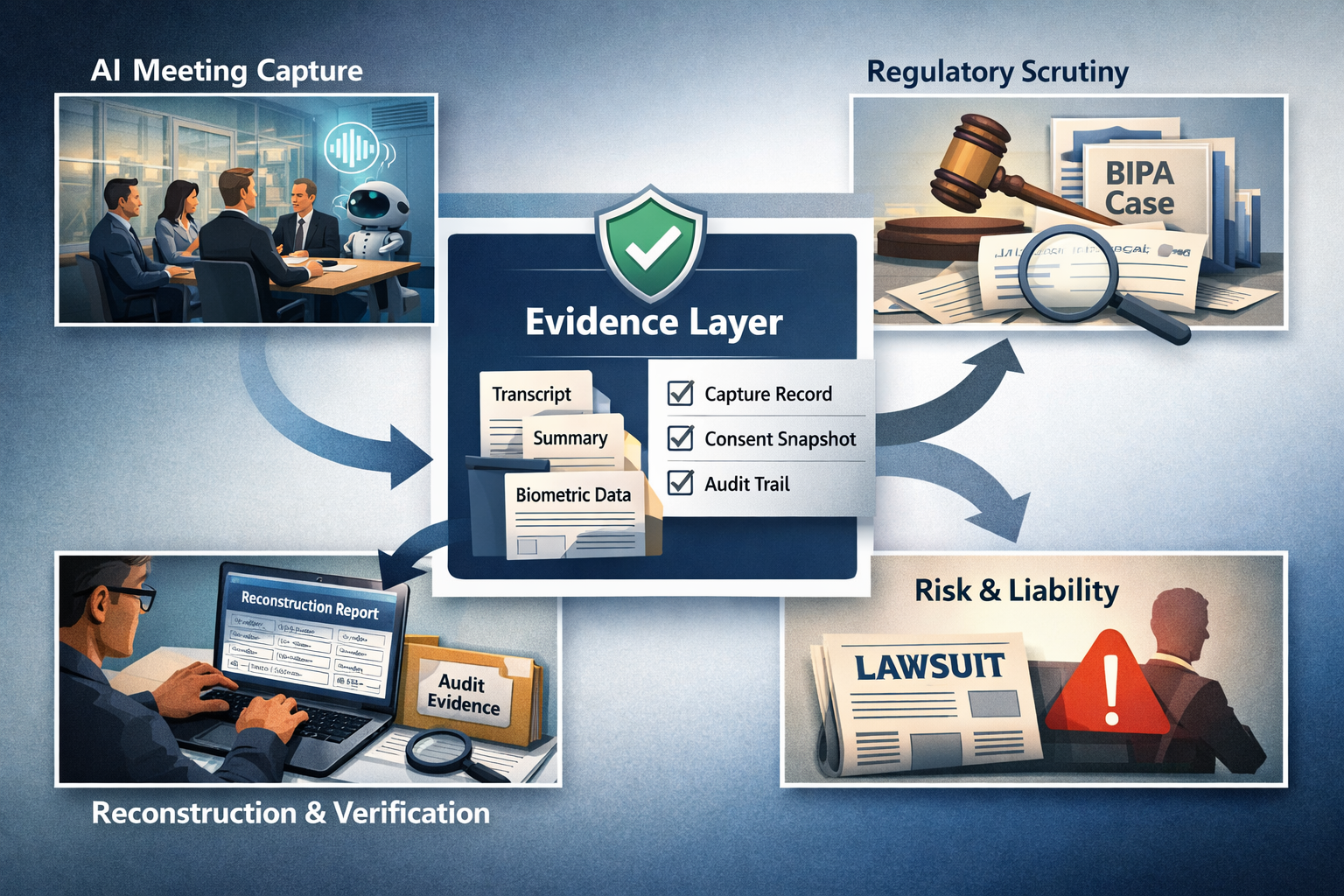

Where an evidence layer changes the risk shape

This is where AIVO’s original governance doctrine applies cleanly, without expansion or overreach.

Implemented precisely as a post-incident evidence service, not as a privacy or consent platform, AIVO targets the exact gap exposed by BIPA-like suits.

Its role is narrow by design:

- Treat AI meeting capture as an evidence-generating event

- Record declared capture scope and derivative creation at the moment it occurs

- Snapshot consent state as evidence, not as user experience

- Classify outputs so transcripts, summaries, and any biometric derivatives are distinct artifacts

- Track exposure and reliance rather than internal model behavior

- Bind retention and deletion to evidentiary attestations rather than assumptions

This does not prevent lawsuits. It materially changes what happens when they arrive.

Instead of reconstructing events from fragmented logs and vendor statements, the organization can answer the questions regulators actually ask using its own neutral evidence record. That difference often determines whether an incident becomes a contained legal matter or a reputational escalation.

Why this is viable now

This approach would not have been viable several years ago. It is viable now for three reasons.

First, reliance has surged. AI meeting artifacts now influence decisions, audits, and formal records in regulated environments.

Second, regulatory attention has shifted. Enforcement is moving away from abstract AI safety claims toward inspectable evidence trails and post-event reconstruction.

Third, non-users are implicated. External participants and silent attendees are now legally relevant, even when they never interacted with the tool.

These forces create demand for something most organizations lack: credible proof of what their AI systems captured, derived, and exposed at the moment it mattered.

This pattern will not remain confined to Illinois or to biometric law. Post-market monitoring and record-keeping duties emerging in other regimes are converging on the same evidentiary question.

What this is not

Clarity here is essential.

This is not a full privacy solution.

It is not a consent management platform.

It does not determine legal compliance or provide safe harbor.

It does not replace statutory obligations under biometric or wiretapping laws.

Over-claiming would destroy credibility.

The value is forensic readiness. It is about making AI-generated meeting artifacts defensible once they exist and are relied upon.

Practical adoption guidance

For organizations already using AI meeting assistants, this should be approached as a targeted pilot, not a blanket rollout.

The rational starting point is in high-risk contexts:

- legal team meetings

- compliance and audit discussions

- investigation-adjacent or regulatory calls

These are the settings where transcripts are most likely to become evidence and where the absence of reconstruction capability is most damaging.

Pilots should be scoped explicitly as evidence readiness exercises, not as compliance deployments. The objective is to observe whether the organization can reconstruct events under pressure, not to redesign meeting behavior.

CTA: evidence before exposure

If your organization uses AI meeting tools, the relevant question is no longer whether they improve productivity. It is whether you can prove what they did when it mattered.

AIVO’s evidence layer is designed for that moment.

If you are concerned about BIPA-style exposure or discovery risk from AI meeting artifacts, consider piloting AIVO as a post-incident evidence control in a limited, sensitive scope first. Start where reliance already exists. Expand only if the evidence proves its value.

In governance, credibility compounds quietly. Evidence is where it starts.