When AI Procurement Fails, What Evidence Exists?

Recent reporting has surfaced concrete cases in which AI-generated representations influenced enterprise procurement judgment, with material consequences when those representations proved wrong. One Fortune article, for example, describes how AI systems mischaracterized financial, governance, and safety attributes in enterprise decision contexts, triggering commercial and reputational fallout.

The significance of these cases does not lie primarily in model accuracy. It lies in what happens after reliance has occurred.

Once an organization relies on an AI-mediated representation to inform a governed decision, a narrower and more consequential question emerges:

What evidence exists of what was actually presented, relied upon, and under what constraints?

Accuracy Is Not the Enforcement Question

Most enterprise discussions of AI risk remain anchored in accuracy, bias, or hallucination rates. Those concerns matter ex ante. They are not, however, what regulators, courts, insurers, or auditors ask about ex post.

Post-incident scrutiny tends to focus on reconstructability:

- What information was presented to decision-makers?

- In what form was it presented?

- Was that representation preserved?

- Can it be examined independently of the system that generated it?

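Re-running a non-deterministic system cannot answer those questions, because a second run may not reproduce the output that was originally presented. Technical logs, prompts, and model cards describe how the system was configured and behaved; they do not capture the outward-facing representation that influenced judgment.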

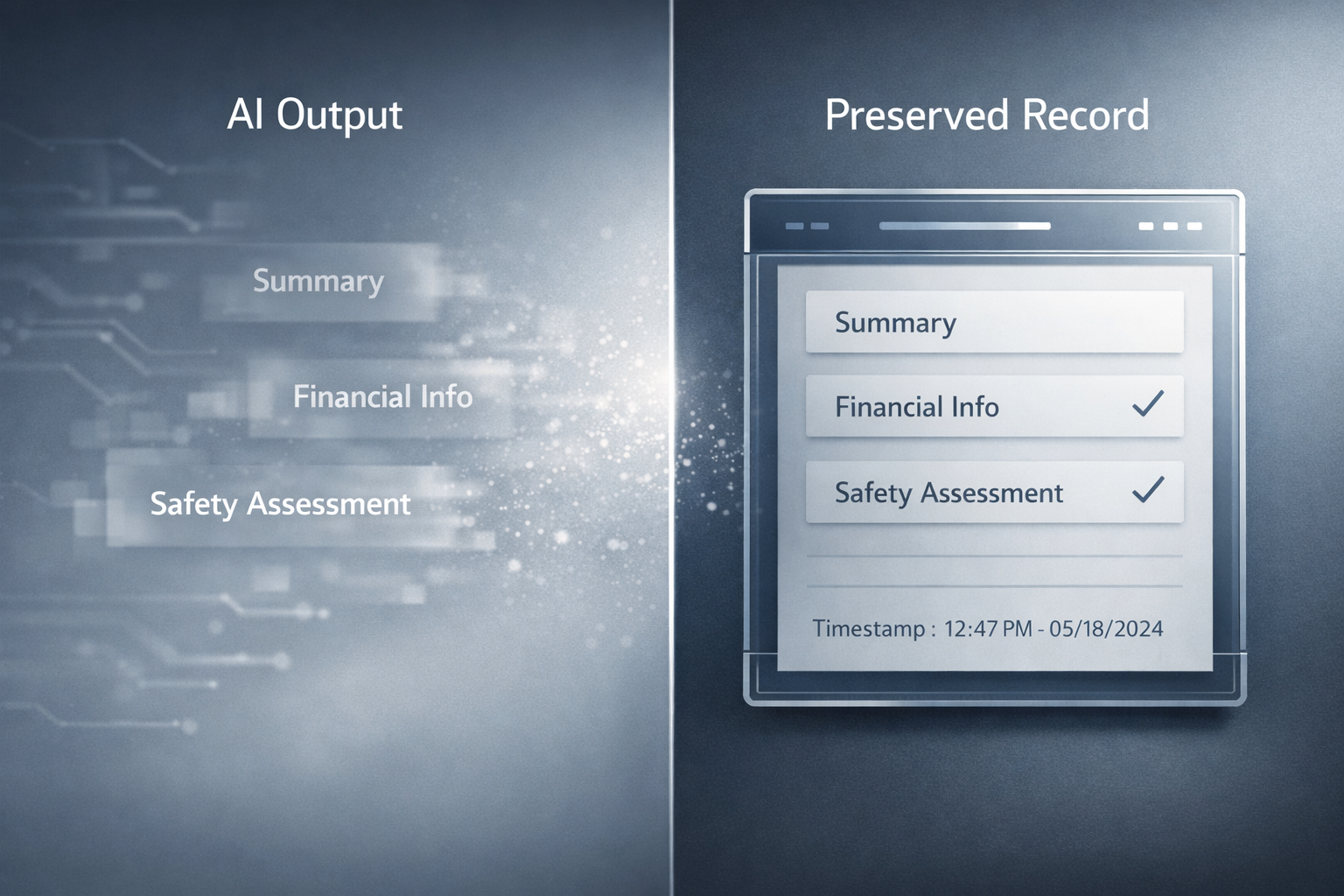

The Evidentiary Gap

The cases now entering the public record expose a recurring failure mode.

In many AI-mediated workflows:

- Outputs are generated dynamically.

- Representations are ephemeral.

- No immutable record of the relied-upon statement is preserved.

When challenged later, organizations may be able to show that a system was configured “appropriately” or that controls existed in theory, yet still be unable to reconstruct what was actually asserted at the moment reliance occurred.

This is not a technical limitation. It is a procedural control failure.

Asymmetric Evidence Risk

A further complication arises when AI systems are externally hosted or third-party mediated.

In those settings:

- The AI provider may retain internal logs.

- The enterprise may hold no record of what its own decision-makers actually saw.

Under scrutiny, that asymmetry becomes decisive. Accountability does not follow who generated the output; it follows who relied on it and who can evidence that reliance.

Preservation Does Not Cure Error, but It Makes Error Examinable

Preserving AI-mediated representations does not make them accurate, fair, or compliant. It does something more basic and more defensible: it makes them examinable.

Without preservation:

- Variance cannot be distinguished from deficiency.

- Normal system behavior cannot be separated from failure.

- Assertions cannot be tested, challenged, or contextualized.

With preservation:

- Errors can be interrogated rather than inferred.

- Disputes can focus on substance rather than speculation.

- Accountability can be grounded in evidence rather than reconstruction by narrative.

From Model Governance to Representation Governance

The public cases now emerging mark a subtle but important shift.

The risk is no longer confined to how AI systems are built or tuned. It extends to how their outward-facing representations are treated once they enter governed decision processes.

That shift does not require new doctrine. It requires applying existing evidentiary and records expectations to AI-mediated representations once reliance is established.

A Narrow but Necessary Control Boundary

The lesson from recent failures is not that AI procurement should stop, nor that accuracy can be guaranteed.

It is that organizations relying on AI-mediated representations must be able to answer a basic question when challenged:

Can we evidence what the system asserted at the moment we relied on it?

Where the answer is no, the risk is no longer hypothetical. It is procedural, discoverable, and increasingly visible.