When Brands Lose at the AI Decision Stage

Anonymised Case Study

Sector

Consumer skincare, anti-ageing

Trust-sensitive, health-adjacent category

Executive summary

This case study examines how widely deployed AI assistants evaluate and resolve consumer purchase decisions in a trust-sensitive category. It documents a consistent pattern in which a well-known mass-market brand is excluded, displaced, or omitted at the final decision stage, despite strong brand recognition and acknowledged product efficacy.

The key finding is not reduced visibility, but loss of decision eligibility.

AI systems repeatedly remove the brand from consideration once authority, safety, and trust heuristics are applied.

The commercial consequence is direct demand redirection that cannot be recovered through downstream marketing, search optimisation, or media spend.

Context: why this category behaves differently

In categories involving health, ageing, safety, or long-term use, AI assistants do not behave as neutral information tools. They act as risk-minimising decision agents.

Rather than ranking options, they narrow them. Rather than optimising relevance, they optimise perceived safety and authority. Decisions are often resolved upstream of search engines, brand sites, or retailers.

This makes such categories structurally different from low-risk consumer goods and exposes a class of competitive risk that traditional analytics cannot observe.

What was observed

Study design (summary)

- Four production AI assistants tested independently

- High-intent consumer prompts only, not discovery or awareness prompts

- Three prompt classes:

  - Direct recommendation

  - Head-to-head comparison

  - Authority-qualified eligibility

- Five repeat runs per prompt, per model

- Each prompt paraphrased into three semantic variants

- Only stable, repeatable patterns reported

Total executions: 180+

This analysis focuses on aggregate patterns, not isolated outputs.
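The study design fixes a minimum number of executions. A minimal sketch, assuming one base prompt per class (the case study does not state how many prompts each class contained) and using hypothetical model and class labels, enumerates the full test matrix:

```python
from itertools import product

# Hypothetical labels: the case study does not name its models or prompts.
MODELS = ["assistant_a", "assistant_b", "assistant_c", "assistant_d"]
PROMPT_CLASSES = ["direct_recommendation", "head_to_head", "authority_qualified"]
VARIANTS = range(1, 4)  # three semantic paraphrases of each prompt
REPEATS = range(1, 6)   # five repeat runs per prompt, per model


def build_run_plan(prompts_per_class: int = 1) -> list:
    """Enumerate every (model, class, prompt, variant, repeat) execution."""
    prompts = range(1, prompts_per_class + 1)
    return list(product(MODELS, PROMPT_CLASSES, prompts, VARIANTS, REPEATS))


plan = build_run_plan()
print(len(plan))  # 4 models x 3 classes x 1 prompt x 3 variants x 5 repeats = 180
```

Each additional base prompt per class adds another 180 executions. Enumerating the matrix up front also makes it easy to confirm that every model received every variant the same number of times.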

Core quantitative findings (anonymised)

Direct recommendation prompts

“Best anti-ageing skincare brands” and equivalents

- Brand appeared in 4 of 48 runs (8%)

- When present, never selected as the default recommendation

- Two competitors appeared in over 80% of runs

Head-to-head comparisons

Brand versus named competitors

- Brand acknowledged for effectiveness in 70%+ of runs

- Final recommendation favoured competitors in 86% of runs

- Losses clustered around safety, tolerability, and long-term use framing

Substitution prompts

“Alternatives to [brand]”

- Brand retained as viable option in 0 of 36 runs

- AI systems redirected users to specific competing products in 31 of 36 runs

- Substitution language was directive, not exploratory

Authority-qualified prompts

“Dermatologist-approved,” “science-backed,” “safe for sensitive or mature skin”

- Brand absent in 100% of runs across all models

- Exclusion occurred before any comparison logic was applied

Variance across models was low. Differences in phrasing did not materially change outcomes.
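Each percentage above is a simple proportion of runs. When the protocol is rerun, recomputing these figures from raw counts is a quick consistency check. The sketch below uses only counts reported in this case study; the `rate` helper and the labels are illustrative.

```python
def rate(hits: int, runs: int) -> float:
    """Share of runs in which an outcome occurred, as a percentage."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    return 100 * hits / runs


# Raw counts reported in the findings above.
reported = {
    "direct recommendation, brand appeared": (4, 48),
    "substitution, brand retained": (0, 36),
    "substitution, redirected to a competitor": (31, 36),
}

for label, (hits, runs) in reported.items():
    print(f"{label}: {hits}/{runs} = {rate(hits, runs):.1f}%")
```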

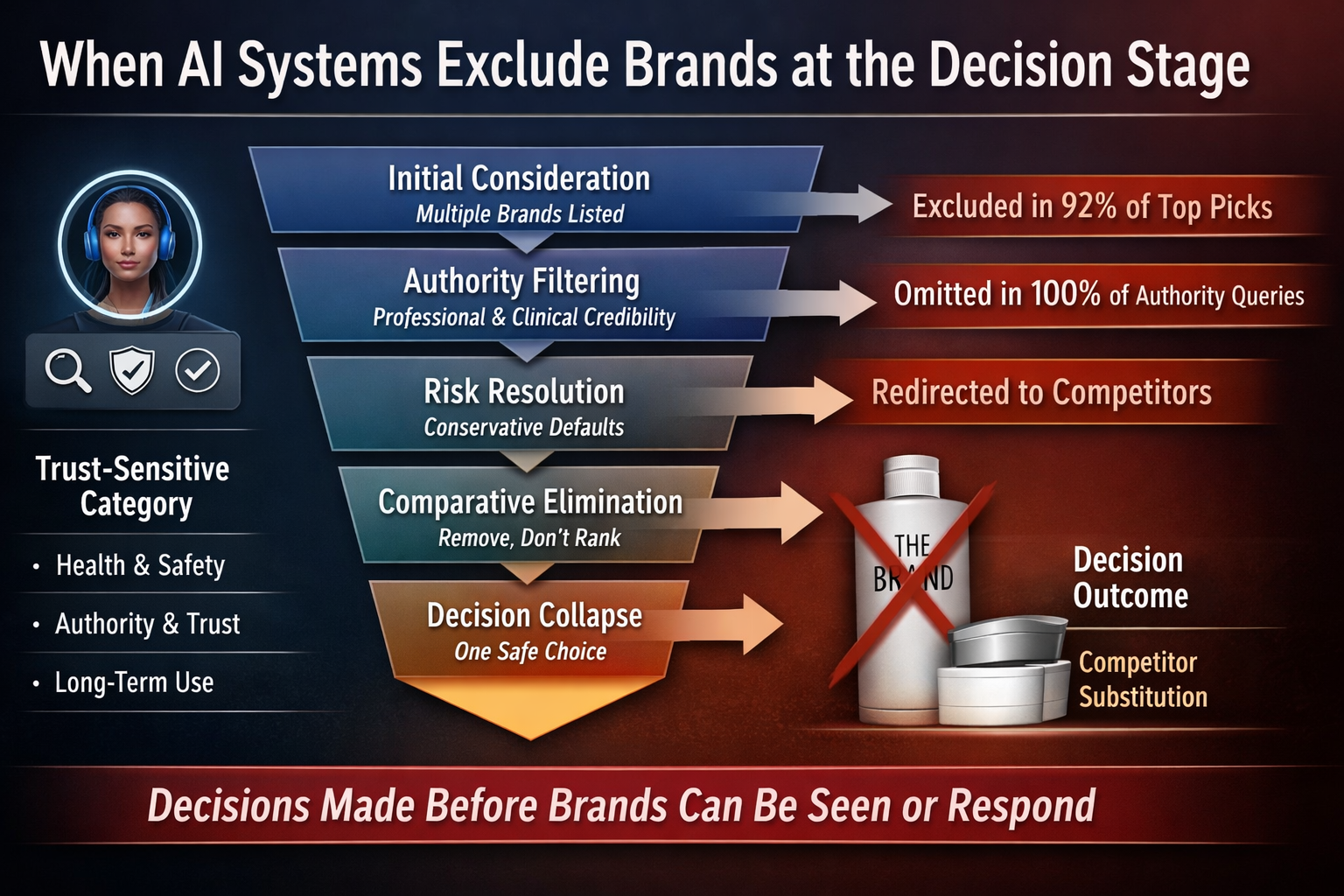

How AI systems resolved decisions

Across models, decision behaviour followed a consistent sequence:

- Stage 1: Initial consideration. Multiple brands may be listed without commitment.

- Stage 2: Authority filtering. Brands perceived as lacking professional, clinical, or safety authority are removed.

- Stage 3: Risk resolution. AI systems default to conservative, broadly suitable options.

- Stage 4: Comparative elimination. Options are eliminated, not ranked.

- Stage 5: Decision collapse. One brand or product is presented as the recommended choice.

The brand under study entered Stage 1 inconsistently, survived Stage 2 rarely, and was systematically excluded by Stage 3 under authority-qualified conditions.

This indicates a consideration threshold problem, not a ranking problem.
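The sequence above can be expressed as a toy model. This is a conceptual sketch, not a description of how any production assistant is implemented: the `Candidate` attributes, their 0 to 1 scores, and the 0.6 `authority_threshold` are all hypothetical. It shows why a brand can lose despite higher effectiveness: the authority filter runs before any comparison.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    effectiveness: float     # hypothetical 0-1 score for perceived efficacy
    authority: float         # hypothetical 0-1 score for inferred authority
    broadly_suitable: bool   # hypothetical flag for a "safe default" option


def resolve(candidates: List[Candidate], authority_threshold: float = 0.6) -> Optional[Candidate]:
    # Stage 1, initial consideration: every candidate is listed without commitment.
    considered = list(candidates)
    # Stage 2, authority filtering: a hard cut-off, applied before any comparison.
    eligible = [c for c in considered if c.authority >= authority_threshold]
    if not eligible:
        return None
    # Stage 3, risk resolution: prefer broadly suitable options when any exist.
    conservative = [c for c in eligible if c.broadly_suitable] or eligible
    # Stages 4 and 5, elimination and collapse: keep only the strongest survivor.
    return max(conservative, key=lambda c: c.effectiveness)


options = [
    Candidate("studied_brand", effectiveness=0.9, authority=0.4, broadly_suitable=True),
    Candidate("competitor_x", effectiveness=0.8, authority=0.8, broadly_suitable=True),
]
winner = resolve(options)
print(winner.name if winner else "no recommendation")
```

Here `competitor_x` is returned even though `studied_brand` scores higher on effectiveness. Lowering `authority_threshold` to 0.3 lets `studied_brand` through Stage 2, at which point it wins. In this toy model, raising effectiveness alone never changes the outcome; only crossing the threshold does.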

Alternative explanation and why it is insufficient

One plausible interpretation is that AI systems are correctly excluding the brand because it genuinely lacks authority, clinical validation, or safety relative to competitors.

This explanation cannot be ruled out in principle. However, it faces several problems:

- The brand's clinical testing is comparable to that of competitors that are consistently included.

- It appears in professional dermatology and consumer health resources at rates similar to those competitors.

- Safety and tolerability profiles are not materially differentiated in public data.

The observed pattern suggests that AI systems are inferring authority from semantic association, narrative framing, and third-party signal density, rather than evaluating underlying evidence.

As a result, improvements in product performance or additional studies alone are unlikely to change outcomes unless they also change how authority is inferred at the brand level.

Implications

Competitive implications for brands

- Decisions are being lost before consumers reach owned channels.

- Visibility gains do not compound into selection.

- Substitution events represent direct revenue displacement, not lost awareness.

- Brands may be excluded without any negative sentiment or factual error.

Organisational implications

No single function owns this risk.

AI systems collapse signals from brand, PR, scientific credibility, and external narrative into a single decision layer. Traditional organisational silos do not map cleanly to this environment.

Governance and defensibility implications

In regulated or trust-sensitive sectors, organisations are expected to substantiate claims, manage reputational risk, and understand decision drivers.

However, brands currently lack the ability to reconstruct or evidence how AI systems present them at the moment decisions are made.

This creates an evidentiary asymmetry: AI-mediated decisions influence consumer behaviour, but the affected organisation cannot observe, contest, or explain them after the fact.
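One practical step toward reconstructability is to record, at the time, what an assistant actually said. A minimal sketch, assuming responses have already been collected by some means (no particular assistant API is assumed, and `capture_observation` and `verify` are hypothetical helpers): each observation is stored with a UTC timestamp and a SHA-256 digest of its canonical JSON form.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(fields: dict) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same way.
    canonical = json.dumps(fields, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def capture_observation(model: str, prompt: str, response: str, brand: str) -> dict:
    """Build a timestamped, tamper-evident record of one AI response (hypothetical helper)."""
    record = {
        "captured_at": datetime.now(timezone.utc).isoformat(),
        "model": model,
        "prompt": prompt,
        "response": response,
        "brand": brand,
        # Simplification: a case-insensitive substring check for the brand name.
        "brand_mentioned": brand.lower() in response.lower(),
    }
    record["sha256"] = _digest(record)
    return record


def verify(record: dict) -> bool:
    """Return True if the stored digest still matches the record's other fields."""
    body = {k: v for k, v in record.items() if k != "sha256"}
    return _digest(body) == record["sha256"]
```

The digest makes later alteration of a stored record detectable, but it does not on its own prove when the record was created; that would need an external timestamping or logging service.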

Takeaway

In trust-sensitive categories, brands are now competing inside AI decision systems they cannot see and cannot audit.

The competitive risk is not invisibility, but non-eligibility.

The governance risk is not misinformation, but non-reconstructability.

The question organisations now face is not whether AI influences decisions, but whether remaining blind to that influence is a defensible risk posture.

Can your organisation reconstruct how AI systems recommend, or exclude, you at the moment decisions are made?