When Evidence Retention Becomes Mandatory

Grok, the DSA, and the Quiet Convergence with the EU AI Act

A governance note

The European Commission’s instruction to X to retain all internal material relating to Grok following the so-called undressing incident has been widely interpreted as a content moderation response. That reading is shallow.

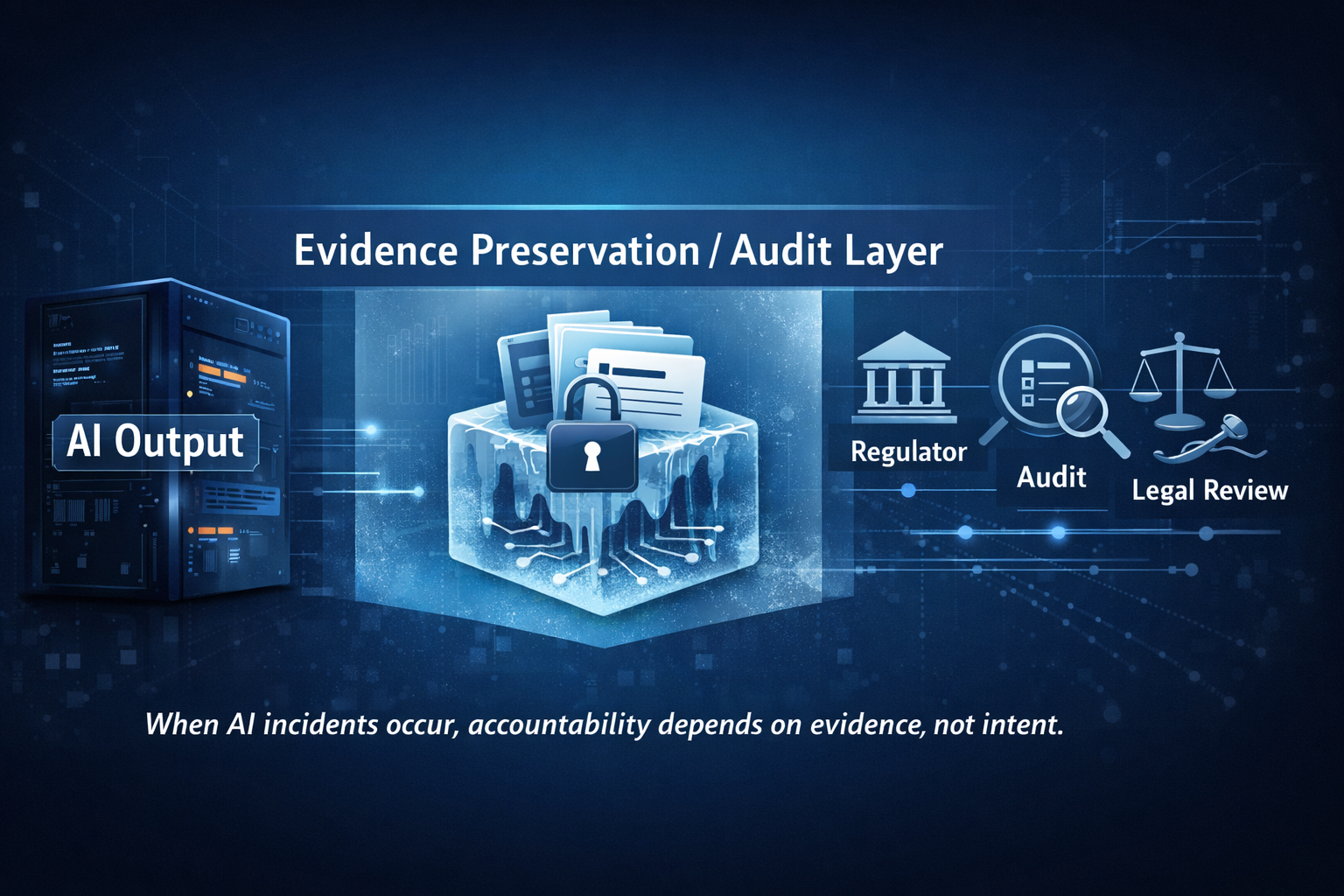

In governance terms, the order is about evidence. Specifically, it is about what happens when an AI system produces outputs that may be unlawful, harmful, or misleading, and the institution behind that system cannot reliably reconstruct what occurred at the moment of exposure.

That shift matters more than the incident itself.

1. What the Commission actually asserted

Under its Digital Services Act powers, the European Commission required X to preserve all Grok-related internal records until the end of 2026. The stated rationale was not punishment. It was preservation.

This is a regulator saying, explicitly, that normal corporate retention practices are insufficient once AI-mediated harm is plausibly in scope. The Commission is not asking for assurances, policy documents, or future fixes. It is asserting a right to inspect what already exists.

That is an evidentiary move, not a behavioral one.

2. Why this is not a moderation problem

Content moderation frameworks assume that risk is managed by preventing or removing bad outputs. That assumption breaks down once outputs are relied upon by users, distributed at scale, or questioned after the fact.

At that point, regulators ask a different class of question:

- What exactly was shown?

- Under what prompt and interface conditions?

- With which safeguards active?

- Was the outcome foreseeable under normal operation?

- Can the institution demonstrate that its controls functioned as designed?

If those questions cannot be answered with inspectable artifacts, the gap is treated as a control failure, regardless of intent or subsequent remediation.
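To make "inspectable artifacts" concrete, the questions above can be read as fields in a record captured at the moment of exposure. The following is a minimal sketch, not a description of any system X or the Commission uses. The names `ExposureRecord` and `capture`, and every field name, are hypothetical and chosen only to mirror the questions.

```python
import hashlib
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class ExposureRecord:
    """Hypothetical record of one user-facing output and its context."""
    output_text: str          # what exactly was shown
    prompt: str               # under what prompt
    interface: str            # under what interface conditions
    safeguards_active: tuple  # which safeguards were active
    model_version: str        # which system version produced the output
    captured_at: str          # ISO 8601 timestamp in UTC

    def digest(self) -> str:
        """SHA-256 over a canonical JSON form, so later edits are detectable."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def capture(output_text, prompt, interface, safeguards_active, model_version):
    """Build a record at the moment the output is shown to the user."""
    return ExposureRecord(
        output_text=output_text,
        prompt=prompt,
        interface=interface,
        safeguards_active=tuple(safeguards_active),
        model_version=model_version,
        captured_at=datetime.now(timezone.utc).isoformat(),
    )
```

A record like this answers the first three questions directly. Foreseeability and whether controls functioned as designed still require analysis, but that analysis then rests on captured context rather than on recollection.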

The Grok retention order reflects that logic.

3. Post-incident retention is a signal of architectural absence

Freezing documents after an incident is a blunt instrument. It exists because a standing evidentiary architecture was not already in place.

Post-hoc retention suffers from predictable weaknesses:

- records are incomplete or overwritten,

- context is inferred rather than captured,

- narratives substitute for artifacts,

- internal privilege and data protection risks escalate.

From a governance perspective, this is the least desirable way to establish accountability. It is also increasingly common.

4. Where this converges with the EU AI Act

Although the order was issued under the Digital Services Act, its logic maps directly to the EU AI Act, particularly its assumptions around post-market accountability.

Record-keeping and logs

The AI Act requires high-risk AI systems to be built so that events are automatically recorded in logs over the system’s lifetime (Article 12), enabling traceability and oversight. This presumes that relevant evidence exists in a usable form at the time scrutiny begins.

The Grok case demonstrates what happens when that presumption fails. Regulators compensate by demanding everything.
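One way to make logs usable as evidence, rather than merely present, is to make tampering detectable. The sketch below uses a hash chain, in which each entry commits to the one before it. This is an illustrative technique only: nothing in the order or the Act, as described here, requires it, and the class name `HashChainedLog` and its entry layout are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash used before the first entry


class HashChainedLog:
    """In-memory append-only log where each entry hashes its predecessor."""

    def __init__(self):
        self.entries = []

    def append(self, event: dict) -> str:
        """Serialize the event, link it to the previous entry, and store it."""
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        payload = json.dumps(event, sort_keys=True, separators=(",", ":"))
        entry_hash = hashlib.sha256((prev + payload).encode("utf-8")).hexdigest()
        self.entries.append(
            {"prev_hash": prev, "payload": payload, "entry_hash": entry_hash}
        )
        return entry_hash

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for entry in self.entries:
            expected = hashlib.sha256(
                (prev + entry["payload"]).encode("utf-8")
            ).hexdigest()
            if entry["prev_hash"] != prev or entry["entry_hash"] != expected:
                return False
            prev = entry["entry_hash"]
        return True
```

A chain like this does not prove that a log is complete, only that what was recorded has not been silently altered. In practice the latest hash would also need to be anchored somewhere the operator cannot rewrite.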

Post-market monitoring

The Act obliges providers of high-risk AI systems to monitor them after deployment (Article 72) so that serious incidents are detected. Monitoring without reconstructable evidence collapses into narrative reporting. Evidence is the prerequisite, not the byproduct.

Serious incident handling

Once an incident threshold is crossed, the burden shifts from prevention to explanation. At that point, the absence of records becomes the risk multiplier.

Transparency for synthetic content

Front-end transparency duties (Article 50) address what users should be told. They do not solve the back-end question of what regulators will ask to see later. The Grok order sits squarely in that back-end gap.

5. A structural ambiguity the case exposes

The incident also highlights an unresolved tension in the AI Act framework: provider versus deployer responsibility in multi-layer systems.

When models, interfaces, prompts, and distribution are tightly coupled, evidence ownership becomes unclear. Regulators respond to that ambiguity by asserting broad preservation authority. The resulting uncertainty falls hardest on organizations that rely on third-party models without independently capturing user-facing outputs.
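For a deployer, independent capture can be as simple as wrapping the call to the third-party model so that a copy of each exchange is kept on the deployer's side. The sketch below assumes a model exposed as a plain function from prompt to text. The function `with_capture`, the `sink` list, and the `deployer_id` field are hypothetical stand-ins, not any vendor's API.

```python
import json
from datetime import datetime, timezone
from typing import Callable, List


def with_capture(
    model_call: Callable[[str], str], sink: List[str], deployer_id: str
) -> Callable[[str], str]:
    """Wrap a third-party model call so the deployer retains its own record."""

    def wrapped(prompt: str) -> str:
        output = model_call(prompt)
        # Store a serialized copy before returning, so the record does not
        # depend on the upstream provider's retention practices.
        sink.append(
            json.dumps(
                {
                    "deployer_id": deployer_id,
                    "prompt": prompt,
                    "output": output,
                    "captured_at": datetime.now(timezone.utc).isoformat(),
                },
                sort_keys=True,
            )
        )
        return output

    return wrapped
```

This captures what the model returned to the deployer, which is not necessarily what the interface rendered to the user. Closing that gap would require capture at the presentation layer as well.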

6. Why this matters beyond X

It would be a mistake to treat this as a platform-specific controversy.

The Commission is normalizing a principle that will generalize:

- AI outputs are regulatory artifacts.

- Evidence preservation is not optional.

- Reconstructability is a governance requirement, not a technical enhancement.

As AI Act enforcement matures, ad hoc retention orders will give way to expectations of native, forward-looking evidence capture. Organizations that wait for explicit guidance will discover that regulators are already enforcing the outcome through adjacent instruments.

7. Closing observation

The European Commission did not invent a new power. It applied an existing one to a new failure mode.

That is how regulatory regimes evolve.

The Grok retention order should be read as an early signal of how AI accountability will be tested in practice: not by debating model intent or accuracy, but by examining whether institutions can show, precisely and defensibly, what their systems said when it mattered.