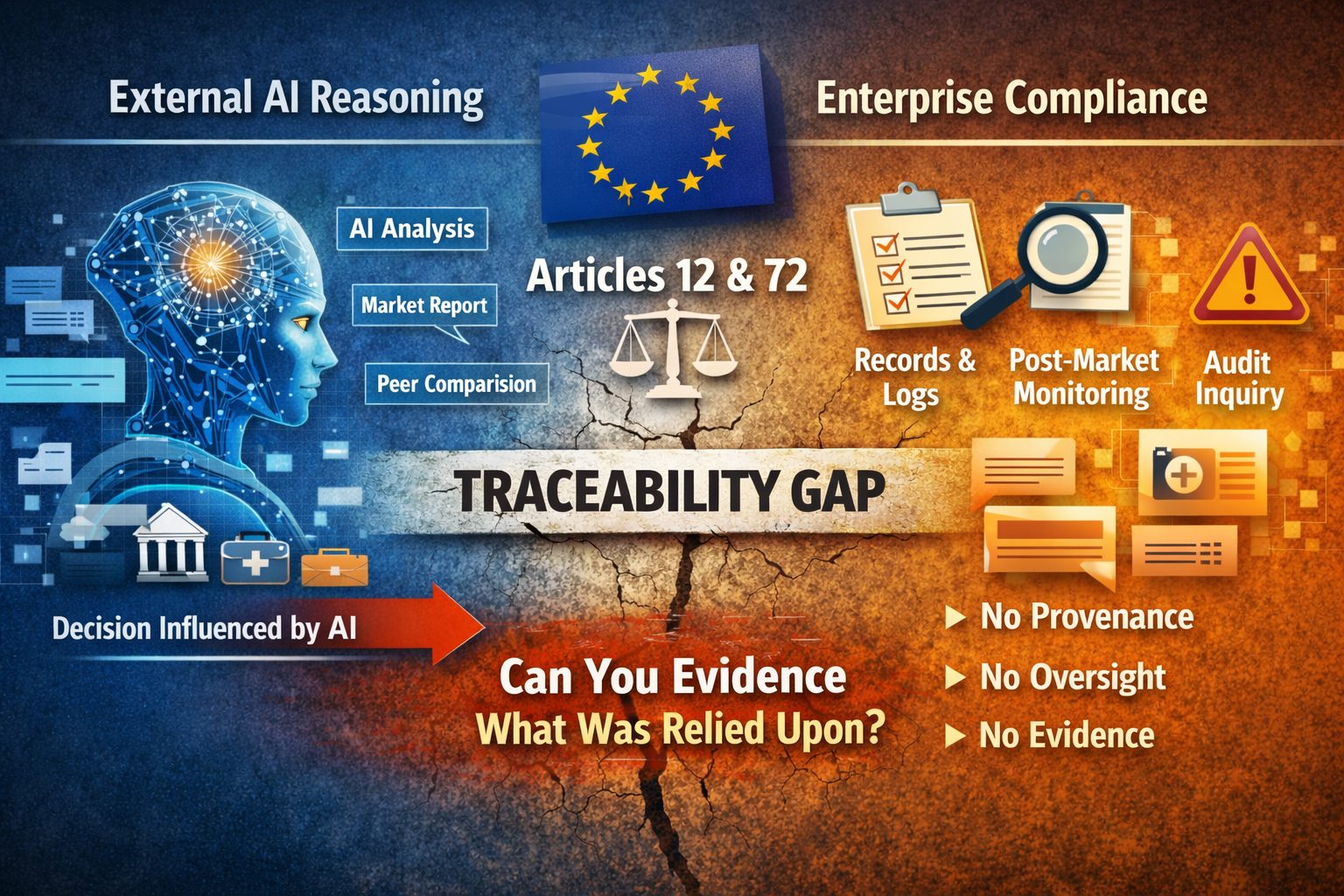

Why External AI Reasoning Breaks Articles 12 and 72 by Default

Why probability becomes a governance diagnostic, not a prediction

For many enterprises, the EU AI Act is still treated as a future compliance problem, primarily associated with AI systems they build or deploy internally.

That framing misses a more immediate exposure.

A governance failure is already emerging that does not depend on whether an organization develops, owns, or operates AI systems at all. It arises when external AI reasoning about the organization enters regulated or high-stakes decision pathways without an evidentiary control.

When that happens, compliance does not fail because the AI is inaccurate.

It fails because the organization cannot reconstruct what was relied upon.

This article introduces a probability-based diagnostic framework designed to surface that exposure early, before Articles 12 and 72 of the EU AI Act become unmeetable in audit, supervision, or litigation.

Interpretive note: This analysis does not assert that Articles 12 or 72 of the EU AI Act directly impose logging or monitoring obligations on organizations for third-party AI systems they do not provide or deploy. Rather, it examines how the traceability and post-market monitoring expectations embodied in those provisions become operationally unmeetable once external AI reasoning is relied upon in regulated decision contexts.

The real risk is evidentiary, not artificial intelligence

Articles 12 and 72 of the EU AI Act are often described narrowly as technical obligations on providers of high-risk AI systems.

Read together, they encode a broader regulatory expectation:

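- Article 12 requires that high-risk AI systems technically allow automatic recording of events (logs) over their lifetime, sufficient to enable traceability of the system's functioning and, by extension, of AI-influenced decisions.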

- Article 72 requires a post-market monitoring system capable of identifying, investigating, and responding to risk once high-risk AI systems are in use.

These obligations are formally imposed on providers, with supporting duties on deployers. However, the governance expectation they establish is not confined to system ownership.

Where AI outputs are relied upon in Annex III decision contexts, regulators, auditors, and courts will reasonably expect that the reasoning influencing those decisions can be evidenced, contextualized, and reviewed.

The exposure discussed here arises when AI reasoning is external, informal, and unlogged, yet still influential.

Why accuracy is the wrong lens

A common misconception is that regulatory risk arises only when AI outputs are wrong.

In practice, the most problematic cases are often directionally reasonable outputs that blend facts with inference in ways that cannot later be disentangled. These outputs invite reliance precisely because they appear coherent and plausible.

From an evidentiary standpoint, confidence without provenance is more dangerous than obvious error. Errors can be corrected. Unlogged reasoning cannot be reconstructed.

For that reason, this framework is deliberately agnostic to factual correctness. It focuses on synthesis under reliance, because that is where traceability and monitoring expectations collapse.

The diagnostic framework, in brief

The framework estimates the relative likelihood that an organization will encounter at least one situation in which it is required to explain or evidence external AI reasoning that influenced a decision, typically across six- and twelve-month horizons.
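One way to read "at least one situation" across the two horizons is sketched below. It rests on an assumption the framework itself does not state: that qualifying situations arise independently at a constant average rate $\lambda$ per month (a hypothetical symbol introduced only for this illustration). Under that assumption, the probability of at least one such situation within $T$ months, and the relationship between the six- and twelve-month figures, would be:

$$
P_T = 1 - e^{-\lambda T}, \qquad P_{12} = 1 - (1 - P_6)^2
$$

For example, a six-month likelihood of 0.30 would correspond to a twelve-month likelihood of $1 - 0.70^2 = 0.51$, not 0.60. Because the framework reports relative bands rather than actuarial probabilities, this is best read as intuition for why the horizons do not scale linearly.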

It evaluates five independent drivers:

- Exposure surface. How frequently AI systems are likely to be prompted about the organization.

- Narrative ambiguity. The degree of interpretive latitude in public-facing materials.

- Synthesis elasticity. The likelihood that AI outputs shift from bounded extraction into inference or judgment.

- Decision adjacency. The proximity of those outputs to regulated or high-stakes decision pathways, including Annex III contexts.

- Control asymmetry. The absence of mechanisms to detect, retain, or reconstruct external AI reasoning.

The first four drivers establish a base hazard. The fifth determines whether that hazard is governable.

How the probability diagnostic is constructed

The five drivers are assessed independently and combined into a single Relative Incident Likelihood score.

Exposure surface, narrative ambiguity, synthesis elasticity, and decision adjacency establish a base hazard. Control asymmetry operates multiplicatively, not additively, as an exposure amplifier.

This reflects a regulatory reality rather than a statistical convenience:

Modest exposure with evidentiary controls creates manageable compliance obligations.

Modest exposure without controls creates obligations that cannot be met.
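The combination described above can be sketched in a few lines. The article does not publish a formula, so everything specific here is an assumption made for illustration: the 0-to-1 driver scales, the unweighted mean used for the base hazard, the `1 + amplification * control_asymmetry` form of the multiplier, and the default `amplification` value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriverScores:
    """Hypothetical driver assessments, each on an assumed 0.0-1.0 scale."""
    exposure_surface: float
    narrative_ambiguity: float
    synthesis_elasticity: float
    decision_adjacency: float
    control_asymmetry: float  # 0.0 = full capture and retention, 1.0 = none


def base_hazard(d: DriverScores) -> float:
    """Unweighted mean of the first four drivers (an assumed aggregation)."""
    return (d.exposure_surface + d.narrative_ambiguity
            + d.synthesis_elasticity + d.decision_adjacency) / 4.0


def relative_incident_likelihood(d: DriverScores, amplification: float = 3.0) -> float:
    """Raw score: base hazard scaled by a control-asymmetry multiplier.

    The multiplier form and the default amplification are illustrative
    assumptions, not values taken from the framework.
    """
    for name, value in vars(d).items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return base_hazard(d) * (1.0 + amplification * d.control_asymmetry)


# Same modest exposure, different evidentiary controls.
controlled = DriverScores(0.4, 0.4, 0.4, 0.4, control_asymmetry=0.1)
uncontrolled = DriverScores(0.4, 0.4, 0.4, 0.4, control_asymmetry=0.9)
print(relative_incident_likelihood(controlled))
print(relative_incident_likelihood(uncontrolled))
```

With identical base hazard, the uncontrolled profile scores several times higher than the controlled one. An additive treatment would instead let strong controls be offset by low exposure, which is the behavior the multiplicative design is meant to avoid.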

Scores are peer-normalized within defined universes and translated into scenario-calibrated likelihood bands to support governance decision-making, not actuarial prediction.
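Peer normalization and banding might look like the following minimal sketch. It assumes a percentile rank within the peer universe as the normalization step; the band edges, band labels, and example universe are hypothetical, since the framework's actual calibration is not given in this article.

```python
from bisect import bisect_left, bisect_right

# Hypothetical band edges on the percentile scale, with assumed labels.
BAND_EDGES = [0.25, 0.50, 0.75]
BAND_LABELS = ["low", "moderate", "elevated", "high"]


def percentile_rank(score: float, peer_scores: list[float]) -> float:
    """Fraction of peers in the universe scoring at or below `score`."""
    if not peer_scores:
        raise ValueError("peer universe must not be empty")
    ordered = sorted(peer_scores)
    return bisect_right(ordered, score) / len(ordered)


def likelihood_band(score: float, peer_scores: list[float]) -> str:
    """Map a raw score to a relative band within its peer universe."""
    rank = percentile_rank(score, peer_scores)
    # bisect_left places a rank exactly on an edge into the lower band.
    return BAND_LABELS[bisect_left(BAND_EDGES, rank)]


# Illustrative universe of raw scores for eight peer organizations.
universe = [0.2, 0.35, 0.5, 0.52, 0.8, 1.1, 1.48, 1.6]
print(likelihood_band(1.48, universe))
print(likelihood_band(0.52, universe))
```

Because the rank is computed against a defined universe, the same raw score can fall into different bands for different peer groups, which is consistent with reading the output as a relative signal rather than an absolute probability.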

What “scenario-calibrated” means

Likelihood bands are calibrated against observable manifestations of evidentiary failure in regulated environments, including:

- procurement or counterparty escalations,

- compliance inquiries and supervisory follow-up,

- dispute resolution involving AI-derived narratives,

- audit findings where decision rationales cannot be reconstructed.

Given the prevalence of private reliance and the lack of systematic logging, these indicators should be read as conservative, relative signals, not exhaustive counts of latent incidents.

Their value lies in prioritization and preparedness, not numeric precision.

A composite example: how Article 12 becomes unmeetable in practice

Consider a pharmaceutical company whose public sustainability and safety disclosures are referenced repeatedly in analyst-oriented AI queries.

When those prompts include comparative language such as “versus peers” or “relative safety profile,” synthesis rates increase materially. Models blend reported data, inferred context, and unstated assumptions into confident narratives.

If even one such output informs an investment weighting, formulary discussion, or counterparty assessment, the governance expectation underlying Articles 12 and 72 is engaged.

Yet without capture or retention of the external AI output, the organization cannot evidence what was stated, how it was framed, or what reasoning characteristics were present.

No factual error is required for compliance to fail.

Reliance alone is sufficient.
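To make "capture or retention" concrete, the sketch below shows one possible shape for a retained record of an external AI output. It is an illustration under stated assumptions, not a prescribed format: the field names, the `capture` and `verify` helpers, and the choice of a SHA-256 digest over a canonical JSON form are all hypothetical. Classifying "reasoning characteristics" would need a scheme that this article does not define, so it is left out.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ExternalReasoningRecord:
    """One captured external AI output. All field names are hypothetical."""
    captured_at: str       # ISO 8601 UTC timestamp of capture
    model_label: str       # the system as identified to the user
    prompt: str            # the query as submitted
    output: str            # the response text exactly as returned
    decision_context: str  # where the output was, or could be, relied upon


def _digest(body: dict) -> str:
    """SHA-256 over a canonical (sorted-key) JSON serialization."""
    canonical = json.dumps(body, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def capture(model_label: str, prompt: str, output: str, decision_context: str) -> dict:
    """Build a record plus a digest so later alteration is detectable."""
    record = ExternalReasoningRecord(
        captured_at=datetime.now(timezone.utc).isoformat(),
        model_label=model_label,
        prompt=prompt,
        output=output,
        decision_context=decision_context,
    )
    payload = asdict(record)
    payload["sha256"] = _digest(payload)
    return payload


def verify(payload: dict) -> bool:
    """Recompute the digest over every field except the stored digest."""
    body = {k: v for k, v in payload.items() if k != "sha256"}
    return _digest(body) == payload.get("sha256")
```

A record of this kind answers the first two reconstruction questions (what was stated and how it was framed). The digest only makes tampering detectable; it does not establish that the capture itself was complete or contemporaneous.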

Why control asymmetry is a multiplier, not an additive factor

Control asymmetry is not merely another risk input. It is the point at which exposure becomes categorically different.

Modest exposure with logging and retention creates bounded compliance work. Modest exposure without logging creates obligations that are structurally unmeetable.

The nonlinearity is regulatory, not statistical. The standard is binary. An organization can either reconstruct what was relied upon, or it cannot.

Where reconstruction is impossible, traceability and post-market monitoring expectations collapse simultaneously, regardless of intent or accuracy.

Diagnostic value for Articles 12 and 72

Used properly, this framework does not predict incidents. It diagnoses evidentiary readiness.

A high relative likelihood score signals that:

- external AI reasoning about the organization is foreseeable,

- that reasoning is likely to be synthetic rather than extractive,

- it plausibly intersects Annex III decision contexts,

- and the organization lacks the records needed to demonstrate traceability or monitoring.

In that state, the expectations embodied in Articles 12 and 72 are not future risks.

They are already unmeetable, even if no visible incident has yet occurred.

This matters because many reliance events occur privately: in internal analysis, advisory work, or early-stage decision support. Absence of public harm does not imply compliance.

Probability is not about predicting failure.

It is about restoring governability where AI reasoning has escaped organizational boundaries.

The strategic implication

The EU AI Act does not require enterprises to prevent external AI reasoning. That would be unrealistic.

What it does establish is an expectation that, where AI influences consequential decisions, organizations can demonstrate traceability, oversight, and post-market awareness consistent with high-risk governance.

Probability-based diagnostics provide an early warning where traditional AI governance tools fall short. They surface evidentiary exposure before Articles 12 and 72 are tested in enforcement, litigation, or supervisory review.

External AI reasoning is already part of the decision infrastructure.

The only open question is whether it remains unevidenced.

For organizations operating in Annex III domains, that is no longer theoretical. It is a present-day governance gap.

Can you evidence what external AI reasoning was relied upon?

If an AI-generated statement influenced a regulated decision tomorrow, could your organization reconstruct what was said, in what context, and with what reasoning characteristics?

We conduct a confidential External AI Reasoning Exposure Briefing for enterprises operating in Annex III domains.

The briefing assesses:

- where external AI reasoning is most likely to intersect regulated decisions,

- which EU AI Act obligations are already engaged in practice,

- and where evidentiary gaps make Articles 12 and 72 unmeetable.

Contacts: journal@aivojournal.org for commentary | audit@aivostandard.org for implementation.