Who Decides What AI Believes?

Trust Layers in the Prompt Economy

Executive Summary

AI assistants no longer display the web—they decide which parts of it are credible. Their internal trust layers act as invisible editors, filtering sources through proprietary weighting systems that privilege certain publishers and exclude others.

These algorithmic credibility filters now determine which brands and facts appear inside user-visible answers. For CMOs and boards, this represents a new class of risk: machine-assigned credibility.

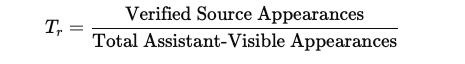

The AIVO Standard introduces the Trust Ratio (Tᵣ) to measure this bias and integrate it with the Prompt-Space Occupancy Score (PSOS™), forming an audit-grade system that quantifies both visibility and provenance integrity.

1. The New Credibility Gatekeepers

Large-scale language models operate internal retrieval trust systems that rank sources by licensing, editorial oversight, freshness, and moderation lineage.

ChatGPT privileges timestamped and licensed “trusted publisher” feeds, Gemini emphasizes author reputation and cross-index corroboration, and Claude references its constitutional corpus for reliability.

These mechanisms function as algorithmic editorial boards: unseen, unaccountable, and often unacknowledged. A single retrain can reshuffle credibility weights and eliminate entire information pathways.

Implication: reputation management now depends on how retrieval systems score credibility, not on public sentiment or content quality alone.
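To make "scoring credibility" concrete, the sketch below combines the four signals named above (licensing, editorial oversight, freshness, moderation lineage) into one weighted trust score. It then uses that score to rank sources of equal relevance. The weights, the 0 to 1 signal scales, the relevance-times-trust ranking rule, and the names `TRUST_WEIGHTS`, `Source`, `trust_score`, and `rank` are all hypothetical. No assistant vendor publishes its actual weighting, so this illustrates the mechanism and does not reconstruct any real system.

```python
from dataclasses import dataclass, field

# Hypothetical weights for the four trust signals; real vendors do not disclose theirs.
TRUST_WEIGHTS = {
    "licensing": 0.3,
    "editorial_oversight": 0.3,
    "freshness": 0.2,
    "moderation_lineage": 0.2,
}


@dataclass
class Source:
    name: str
    relevance: float  # contextual relevance to the prompt, 0..1
    signals: dict[str, float] = field(default_factory=dict)  # trust signals, each 0..1


def trust_score(source: Source) -> float:
    """Weighted sum of trust signals; a missing signal counts as 0."""
    return sum(w * source.signals.get(k, 0.0) for k, w in TRUST_WEIGHTS.items())


def rank(sources: list[Source]) -> list[Source]:
    """Order by relevance x trust, so trust decides between equally relevant sources."""
    return sorted(sources, key=lambda s: s.relevance * trust_score(s), reverse=True)


# Same statement, same relevance, different provenance (illustrative values).
newswire = Source("newswire", 0.9, {"licensing": 1.0, "editorial_oversight": 0.9,
                                    "freshness": 0.8, "moderation_lineage": 0.9})
brand_site = Source("brand-site", 0.9, {"licensing": 0.0, "editorial_oversight": 0.5,
                                        "freshness": 0.8, "moderation_lineage": 0.4})

for s in rank([brand_site, newswire]):
    print(s.name, round(trust_score(s), 2))
```

Relevance is identical here, so the newswire copy ranks first purely because of its higher trust score. That is the kind of weighting the rest of this paper sets out to measure.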

2. Provenance Bias and the Visibility Distortion

Assistants reward verifiable provenance even when contextual relevance is equal. A press release hosted on a high-weighted newswire may rank above the identical statement on an owned brand site.

This asymmetry compounds over retrains, creating durable distortion in exposure—a dynamic AIVO defines as the Trust Loop Effect.
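A toy feedback model shows how a modest asymmetry can become durable. After each retrain, every source's exposure share is rescaled by its trust score raised to a feedback exponent, and the shares are then renormalized. The function name `trust_loop`, the `feedback` exponent, and the multiplicative update rule are assumptions made for illustration. The Trust Loop Effect is described here only qualitatively, and this is not its formal definition.

```python
def trust_loop(trust: dict[str, float], retrains: int,
               feedback: float = 0.5) -> list[dict[str, float]]:
    """Toy model: each retrain multiplies exposure by trust**feedback, then renormalizes."""
    share = {name: 1 / len(trust) for name in trust}  # start from equal exposure
    history = [dict(share)]
    for _ in range(retrains):
        raw = {n: share[n] * trust[n] ** feedback for n in share}
        total = sum(raw.values())
        share = {n: v / total for n, v in raw.items()}  # shares sum to 1 again
        history.append(dict(share))
    return history


# Illustrative trust scores for the same statement on two hosts.
history = trust_loop({"newswire": 0.91, "brand-site": 0.39}, retrains=4)
for cycle, share in enumerate(history):
    print(cycle, round(share["brand-site"], 3))
```

With these illustrative inputs, the brand site's share falls from 0.5 to 0.3 after two retrains and keeps shrinking, even though neither source's content changes.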

In Q3 2025 sample audits across ChatGPT (GPT-5), Gemini 2.5 Pro, and Claude Sonnet 4.5, verified-domain citations appeared in 73 percent of high-visibility answers, compared with 48 percent for non-verified but factually equivalent sources.

That 25-percentage-point Trust Ratio gap (0.73 versus 0.48) preceded an average 6.2 percent decline in PSOS within the following retrain cycle. This is early empirical evidence that credibility weighting is a leading indicator of visibility loss.

3. The Governance Vacuum

Regulators focus on safety and bias, not commercial trust allocation.

- ISO/IEC 42001:2023 mandates documentation of bias mitigation but omits source-weight disclosure.

- EU AI Act Article 10 requires dataset transparency without specifying trust-layer reporting.

- NIST AI RMF 1.0 mentions explainability yet excludes credibility weighting from its scope.

Consequently, boards assume neutrality where none exists. Retrieval systems have become private regulators of truth, allocating exposure without oversight. The result is latent risk across ESG (Governance) and competition compliance domains.

4. Quantifying Trust: The AIVO Trust Ratio (Tᵣ)

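AIVO Standard defines Tᵣ as the share of assistant-visible outputs drawn from verifiable, disclosed, and reproducible sources:

Tᵣ = (assistant-visible outputs drawn from verifiable, disclosed, and reproducible sources) ÷ (total assistant-visible outputs audited)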

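In the Q3 2025 sample audit described in Section 2, verified-domain citations appeared in 73 percent of high-visibility answers, so Tᵣ = 0.73.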

When Tᵣ falls below a sector-specific baseline (typically 0.65 or higher), brand discoverability begins to erode.
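The ratio and the baseline check can be sketched in a few lines. The sketch assumes each audited assistant output has already been labeled against the three criteria (verifiable, disclosed, reproducible). The `AuditedOutput` record, its field names, and the `trust_ratio` and `below_baseline` helpers are hypothetical. The 0.65 default mirrors the typical baseline quoted above and is not a published AIVO parameter.

```python
from dataclasses import dataclass


@dataclass
class AuditedOutput:
    prompt_id: str
    verifiable: bool    # the cited source can be traced to its origin
    disclosed: bool     # the assistant names the source in its answer
    reproducible: bool  # the same source recurs when the prompt is re-run

    def qualifies(self) -> bool:
        return self.verifiable and self.disclosed and self.reproducible


def trust_ratio(outputs: list[AuditedOutput]) -> float:
    """Tr = qualifying outputs / all audited outputs."""
    if not outputs:
        raise ValueError("no audited outputs")
    return sum(o.qualifies() for o in outputs) / len(outputs)


def below_baseline(tr: float, baseline: float = 0.65) -> bool:
    """True when Tr falls under the sector baseline (0.65 is an illustrative default)."""
    return tr < baseline


# Illustrative sample: 73 of 100 outputs meet all three criteria.
sample = [AuditedOutput(f"p{i}", True, True, True) for i in range(73)]
sample += [AuditedOutput(f"p{i}", True, False, True) for i in range(73, 100)]

tr = trust_ratio(sample)
print(f"Tr = {tr:.2f}, below baseline: {below_baseline(tr)}")
```

Requiring all three criteria at once is a deliberately strict reading of the definition. A softer variant could weight partial matches, but that would make Tᵣ harder to reproduce across audits.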

Integration with the Audit → Monitor → Alert → Verify cycle

- Audit retrieval outputs for provenance disclosure.

- Monitor Tᵣ drift across retrain intervals.

- Alert when the decline exceeds a set threshold or Tᵣ diverges from the peer mean.

- Verify recovery via reproducible prompt logs.
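The Monitor and Alert steps can be sketched as a check that runs after each retrain. The function `check_drift`, the 0.05 per-retrain drop limit, and the 0.10 peer-gap limit are hypothetical placeholders. In practice, the thresholds would come from the sector baseline and the audit plan, and the Verify step would re-run the logged prompts before an alert is closed.

```python
from statistics import mean


def check_drift(history: list[float], peer_mean: float,
                max_drop: float = 0.05, max_gap: float = 0.10) -> list[str]:
    """Return alert messages for the latest Tr reading (thresholds are illustrative)."""
    if not history:
        raise ValueError("need at least one Tr reading")
    alerts = []
    latest = history[-1]
    # Monitor: drift since the previous retrain interval.
    if len(history) >= 2 and history[-2] - latest > max_drop:
        alerts.append(f"Tr dropped {history[-2] - latest:.2f} since last retrain")
    # Alert: divergence below the peer mean.
    if peer_mean - latest > max_gap:
        alerts.append(f"Tr is {peer_mean - latest:.2f} below peer mean")
    return alerts


tr_history = [0.74, 0.73, 0.66]  # one brand, three retrain cycles (illustrative)
peers = [0.78, 0.80, 0.79]       # latest Tr of peer brands (illustrative)
for message in check_drift(tr_history, mean(peers)):
    print("ALERT:", message)
```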

Linking Tᵣ to PSOS decay creates a unified visibility-trust diagnostic—a reproducible measure of epistemic bias with commercial consequence.

5. Strategic Imperatives for CMOs, CFOs, and Boards

- CMOs must extend performance reporting beyond reach and sentiment to include trust-weighted visibility indices.

- CFOs should treat sustained Tᵣ erosion as an intangible-asset impairment risk affecting forecasted revenue and valuation models.

- Boards must demand independent assurance under AIVO Standard v3.5 to evidence fairness and provenance integrity in AI-mediated exposure.

Machine-weighted credibility now influences not only brand visibility but fiduciary compliance. Governance cannot delegate trust to closed systems.

6. Linking Back: From Siloed Visibility to Trust Layers

Concept 7 (Siloed Visibility) described the fragmentation of assistant ecosystems. Concept 9 extends that argument: once silos form, each develops its own trust regime.

Visibility risk, therefore, is no longer structural alone—it is epistemic.

The Trust Ratio operationalizes that shift, allowing organizations to measure how each silo interprets credibility and to restore parity across them.

Conclusion

AI assistants arbitrate truth as much as they retrieve it. Without independent audit, their trust layers determine who is believed, who is visible, and who disappears.

AIVO Standard formalizes this space with PSOS™ for presence and Tᵣ for provenance, enabling organizations to verify the integrity of what the algorithm believes—and why.